Matching pursuit (MP) is a sparse approximation algorithm which finds the "best matching" projections of multidimensional data onto the span of an over-complete (i.e., redundant) dictionary $D$. The basic idea is to approximately represent a signal $f$ from Hilbert space $H$ as a weighted sum of finitely many functions $g_{\gamma_n}$ (called atoms) taken from $D$. An approximation with $N$ atoms has the form

$$f(t) \approx \hat{f}_N(t) := \sum_{n=1}^{N} a_n g_{\gamma_n}(t)$$

where $g_{\gamma_n}$ is the $\gamma_n$th column of the matrix $D$ and $a_n$ is the scalar weighting factor (amplitude) for the atom $g_{\gamma_n}$. Normally, not every atom in $D$ will be used in this sum. Instead, matching pursuit chooses the atoms one at a time in order to maximally (greedily) reduce the approximation error. This is achieved by finding the atom that has the highest inner product with the signal (assuming the atoms are normalized), subtracting from the signal an approximation that uses only that one atom, and repeating the process until the signal is satisfactorily decomposed, i.e., the norm of the residual is small, where the residual after calculating $\gamma_N$ and $a_N$ is denoted by

$$R_{N+1} = f - \hat{f}_N.$$
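The greedy choice can be justified in one line. As a sketch, assume a unit-norm atom $g$ and write $R_n$ for the current residual (with $R_1 = f$). Subtracting the projection of $R_n$ onto $g$ leaves

$$
\begin{aligned}
\| R_n - \langle R_n, g \rangle g \|^2
  &= \|R_n\|^2 - 2\,|\langle R_n, g \rangle|^2 + |\langle R_n, g \rangle|^2 \,\|g\|^2 \\
  &= \|R_n\|^2 - |\langle R_n, g \rangle|^2 ,
\end{aligned}
$$

so the atom with the largest $|\langle R_n, g \rangle|$ gives the largest single-step decrease of the residual norm. This argument relies on $\|g\| = 1$; for unnormalized atoms the inner products would first have to be divided by the atom norms.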

If $R_n$ converges quickly to zero, then only a few atoms are needed to get a good approximation to $f$. Such sparse representations are desirable for signal coding and compression. More precisely, the sparsity problem that matching pursuit is intended to *approximately* solve is

$$\min_x \| f - D x \|_2^2 \quad \text{subject to} \quad \|x\|_0 \le N,$$

where $\|x\|_0$ is the $L_0$ pseudo-norm (i.e. the number of nonzero elements of $x$). In the previous notation, the nonzero entries of $x$ are $x_{\gamma_n} = a_n$. Solving the sparsity problem exactly is NP-hard, which is why approximation methods like MP are used.
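To make the cost of an exact solution concrete, the following minimal sketch solves the sparsity problem by brute force on a tiny random dictionary. The helper names `l0_norm` and `best_n_sparse`, the tolerance, and the test data are illustrative assumptions, not part of the original formulation. The search tries every support of size `N`, so the number of least-squares fits grows combinatorially with the dictionary size.

```python
from itertools import combinations

import numpy as np


def l0_norm(x, tol=1e-12):
    """Count the entries of x whose magnitude exceeds tol."""
    return int(np.sum(np.abs(x) > tol))


def best_n_sparse(D, f, N):
    """Exhaustively try every support of size N (combinatorial cost)."""
    n_atoms = D.shape[1]
    best_err, best_x = np.inf, np.zeros(n_atoms)
    for support in combinations(range(n_atoms), N):
        cols = list(support)
        # Least-squares fit of f using only the atoms in this support.
        coef, *_ = np.linalg.lstsq(D[:, cols], f, rcond=None)
        err = np.linalg.norm(f - D[:, cols] @ coef) ** 2
        if err < best_err:
            best_err = err
            best_x = np.zeros(n_atoms)
            best_x[cols] = coef
    return best_x, best_err


rng = np.random.default_rng(0)
D = rng.standard_normal((8, 12))
D /= np.linalg.norm(D, axis=0)      # unit-norm columns
x_true = np.zeros(12)
x_true[[2, 7]] = [1.5, -0.8]        # a 2-sparse coefficient vector
f = D @ x_true

x_hat, err = best_n_sparse(D, f, N=2)
```

Even here the loop performs 66 fits for 12 atoms and `N = 2`; with thousands of atoms and a few dozen nonzeros the count becomes astronomically large, which is the practical motivation for greedy methods.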

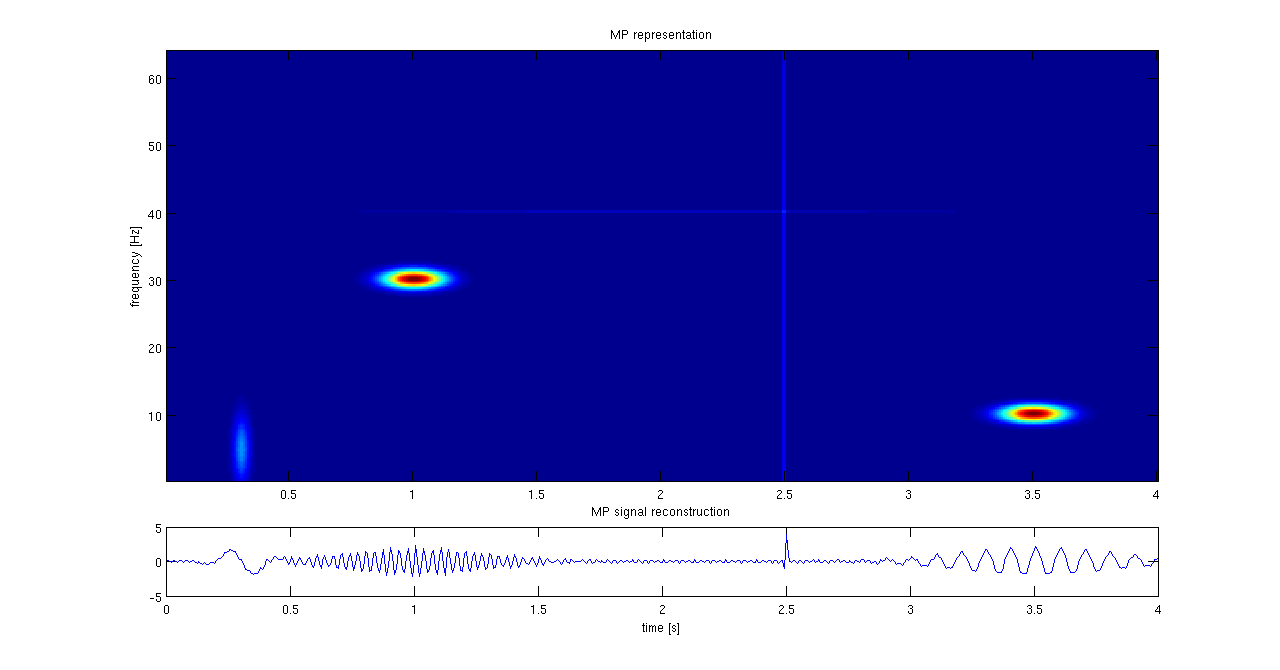

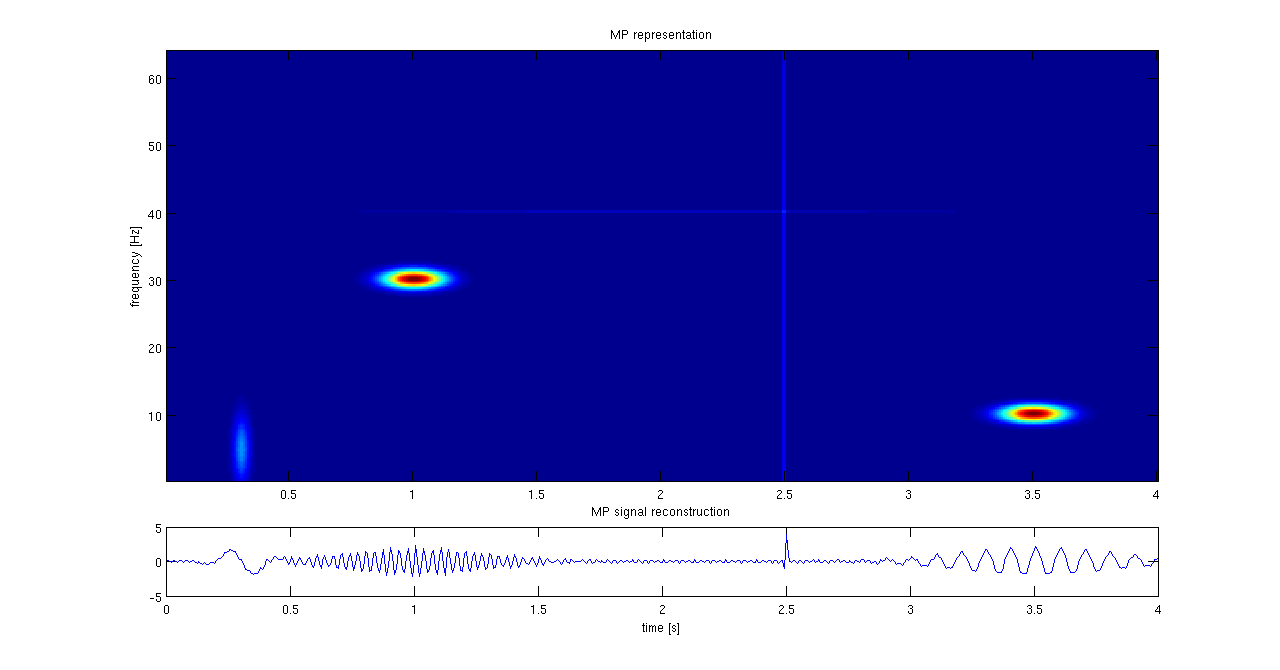

For comparison, consider the Fourier transform representation of a signal: it can be described using the terms given above, where the dictionary is built from sinusoidal basis functions (the smallest possible complete dictionary). The main disadvantage of Fourier analysis in signal processing is that it extracts only the global features of a signal and does not adapt to the signal being analysed.

By taking an extremely redundant dictionary, we can look in it for atoms (functions) that best match a signal $f$.
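As a small illustration of why redundancy helps, the sketch below compares a single cosine basis with a dictionary that also contains spikes. The choice of cosine atoms as a real-valued stand-in for the Fourier basis, the signal, and all variable names are assumptions made for this example only.

```python
import numpy as np

n = 64
t = np.arange(n)

# Cosine atoms (global features), one per frequency k = 0..n-1 (DCT-II style).
cosines = np.cos(np.pi * (t[:, None] + 0.5) * np.arange(n)[None, :] / n)
cosines /= np.linalg.norm(cosines, axis=0)

# Spike atoms (local features): the standard basis.
spikes = np.eye(n)

# Redundant dictionary: 64 samples, 128 atoms.
D = np.hstack([cosines, spikes])

# A signal mixing one oscillation and one isolated impulse.
f = 3.0 * cosines[:, 5] + 2.0 * spikes[:, 40]

# Coefficients in the cosine basis alone: the impulse spreads over many atoms.
x_cos = cosines.T @ f

# In the redundant dictionary the same signal needs only two atoms.
x_union = np.zeros(2 * n)
x_union[[5, n + 40]] = [3.0, 2.0]
```

The cosine-only representation needs many nonzero coefficients to capture the impulse, while the redundant dictionary represents the same signal exactly with two atoms.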

The algorithm

If $D$ contains a large number of vectors, searching for the *most* sparse representation of $f$ is computationally unacceptable for practical applications.

In 1993, Mallat and Zhang proposed a greedy solution that they named "Matching Pursuit."

For any signal $f$ and any dictionary $D$, the algorithm iteratively generates a sorted list of atom indices and weighting scalars, which form the sub-optimal solution to the problem of sparse signal representation.

Input: Signal: $f(t)$, dictionary $D$ with normalized columns $g_i$.

Output: List of coefficients $(a_n)_{n=1}^{N}$ and indices for corresponding atoms $(\gamma_n)_{n=1}^{N}$.

Initialization:

$R_1 \leftarrow f(t)$;

$n \leftarrow 1$;

Repeat:

Find $g_{\gamma_n} \in D$ with maximum inner product $|\langle R_n, g_{\gamma_n} \rangle|$;

$a_n \leftarrow \langle R_n, g_{\gamma_n} \rangle$;

$R_{n+1} \leftarrow R_n - a_n g_{\gamma_n}$;

$n \leftarrow n + 1$;

Until stop condition (for example: $\|R_n\| < \mathrm{threshold}$)

return
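The loop above translates almost line for line into NumPy. What follows is a minimal sketch, assuming a real-valued, finite-dimensional signal and a dictionary stored as a matrix with unit-norm columns; the function name `matching_pursuit` and the parameters `max_atoms` and `tol` are illustrative choices, with `max_atoms` added as a second stop condition so the loop always terminates.

```python
import numpy as np


def matching_pursuit(f, D, max_atoms=10, tol=1e-6):
    """Greedy MP for a real signal f and a dictionary D with unit-norm columns."""
    residual = np.asarray(f, dtype=float).copy()    # R_1 <- f
    coeffs, indices = [], []
    for _ in range(max_atoms):
        if np.linalg.norm(residual) < tol:          # stop condition
            break
        inner = D.T @ residual                      # <R_n, g_i> for every atom
        k = int(np.argmax(np.abs(inner)))           # best-matching atom
        coeffs.append(float(inner[k]))              # a_n
        indices.append(k)                           # gamma_n
        residual = residual - inner[k] * D[:, k]    # R_{n+1}
    return coeffs, indices, residual


rng = np.random.default_rng(0)
D = rng.standard_normal((64, 256))
D /= np.linalg.norm(D, axis=0)                      # normalize columns
f = 2.0 * D[:, 10] - 1.0 * D[:, 200]                # signal built from two atoms

coeffs, indices, residual = matching_pursuit(f, D, max_atoms=50)
approx = sum(a * D[:, k] for a, k in zip(coeffs, indices))
```

Because each step removes the projection onto a unit-norm atom, `np.dot(f, f)` should equal `np.dot(residual, residual) + sum(a * a for a in coeffs)` up to rounding. Note that plain MP may select the same atom more than once, and it is not guaranteed to pick exactly the atoms used to build `f`.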

In signal processing, the concept of matching pursuit is related to statistical projection pursuit, in which "interesting" projections are found; ones that deviate more from a normal distribution are considered to be more interesting.

Properties

* The algorithm converges (i.e. $R_n \to 0$) for any $f$ that is in the space spanned by the dictionary.
* The error $\|R_n\|$ decreases monotonically.
* As at each step, the residual is orthogonal to the selected filter, the energy conservation equation is satisfied for each $N$: $\|f\|^2 = \|R_{N+1}\|^2 + \sum_{n=1}^{N} |a_n|^2$.
* In the case that the vectors in $D$ are orthonormal, rather than being redundant, then MP is a form of principal component analysis.

Applications

Matching pursuit has been applied to signal, image and video coding, shape representation and recognition, 3D object coding, and in interdisciplinary applications like structural health monitoring. It has been shown to perform better than DCT-based coding at low bit rates in both coding efficiency and image quality. The main problem with matching pursuit is the computational complexity of the encoder. In the basic version of the algorithm, the large dictionary needs to be searched at each iteration. Improvements include the use of approximate dictionary representations and suboptimal ways of choosing the best match at each iteration (atom extraction). The matching pursuit algorithm is used in MP/SOFT, a method of simulating quantum dynamics. MP is also used in dictionary learning. In this algorithm, atoms are learned from a database (in general, natural scenes such as ordinary images) and not chosen from generic dictionaries. A recent application of MP is its use in linear computation coding to speed up the computation of matrix-vector products.

Extensions

A popular extension of Matching Pursuit (MP) is its orthogonal version: Orthogonal Matching Pursuit (OMP). The main difference from MP is that after every step, *all* the coefficients extracted so far are updated, by computing the orthogonal projection of the signal onto the subspace spanned by the set of atoms selected so far. This can lead to results better than standard MP, but requires more computation. OMP was shown to have stability and performance guarantees under certain restricted isometry conditions.

Extensions such as Multichannel MP and Multichannel OMP allow one to process multicomponent signals. An obvious extension of Matching Pursuit is over multiple positions and scales, by augmenting the dictionary to be that of a wavelet basis. This can be done efficiently using the convolution operator without changing the core algorithm.

Matching pursuit is related to the field of compressed sensing and has been extended by researchers in that community. Notable extensions are Orthogonal Matching Pursuit (OMP), Stagewise OMP (StOMP), compressive sampling matching pursuit (CoSaMP), Generalized OMP (gOMP), and Multipath Matching Pursuit (MMP).
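The difference between MP and OMP described in this section, re-fitting all coefficients after each selection, can be sketched as follows. This is a minimal illustration assuming real-valued signals and a matrix dictionary with unit-norm columns; the function name `orthogonal_matching_pursuit`, its parameters, and the use of `np.linalg.lstsq` for the projection are choices made for this example, not a reference implementation.

```python
import numpy as np


def orthogonal_matching_pursuit(f, D, n_atoms, tol=1e-10):
    """OMP sketch: greedy selection plus a least-squares refit of all coefficients."""
    f = np.asarray(f, dtype=float)
    residual = f.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_atoms):
        if np.linalg.norm(residual) < tol:
            break
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            # Safeguard against rounding: a selected atom is already
            # orthogonal to the residual, so it should not be picked again.
            break
        support.append(k)
        # Orthogonal projection of f onto the span of all atoms chosen so far.
        coef, *_ = np.linalg.lstsq(D[:, support], f, rcond=None)
        residual = f - D[:, support] @ coef
    x = np.zeros(D.shape[1])
    x[np.array(support, dtype=int)] = coef
    return x, residual


rng = np.random.default_rng(0)
D = rng.standard_normal((64, 256))
D /= np.linalg.norm(D, axis=0)
f = 2.0 * D[:, 10] - 1.0 * D[:, 200]

x, residual = orthogonal_matching_pursuit(f, D, n_atoms=2)
```

After each refit the residual is orthogonal to every selected atom, not only the most recent one, which is why OMP never needs to select an atom twice. The extra cost is one least-squares solve per iteration.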

See also

* CLEAN algorithm
* Image processing
* Least-squares spectral analysis
* Principal component analysis (PCA)
* Projection pursuit
* Signal processing
* Sparse approximation
* Stepwise regression
