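Compressed sensing (also known as compressive sensing, compressive sampling, or sparse sampling) is a signal processing technique for efficiently acquiring and reconstructing a signal by finding solutions to underdetermined linear systems.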

An underdetermined linear system generally has infinitely many solutions, so choosing one requires imposing extra constraints or conditions (such as smoothness) as appropriate. In compressed sensing, one adds the constraint of sparsity, allowing only solutions that have a small number of nonzero coefficients. Not all underdetermined systems of linear equations have a sparse solution. However, if there is a unique sparse solution to the underdetermined system, then the compressed sensing framework allows the recovery of that solution.


The iterative directional total variation method described below solves both of its models with the augmented Lagrangian method. For the orientation field refinement model, the method initializes the orientation field, the newly introduced auxiliary variables and their Lagrange multipliers, and then finds the approximate minimizer of the augmented Lagrangian with respect to these variables. The Lagrange multipliers are then updated, and the iterative process stops when convergence is achieved. For the iterative directional total variation refinement model, the augmented Lagrangian method likewise initializes the image, its auxiliary variables and their multipliers.

The auxiliary variables are newly introduced variables, each tied by an equality constraint to a gradient term of the model (of the orientation field in the first model, and of the image in the second), and each has its own Lagrange multiplier. In each iteration, the approximate minimizer of the augmented Lagrangian with respect to the image and its auxiliary variables is calculated. As in the orientation field refinement model, the Lagrange multipliers are then updated, and the iterative process stops when convergence is achieved.

In both models, the multiplier $\lambda_Z$ of an auxiliary variable $Z$ that replaces a gradient term $\nabla u$ is updated at iteration $k$ as

$$\lambda_Z^{k} = \lambda_Z^{k-1} + \gamma_Z \left( Z^{k} - \nabla u^{k} \right),$$

where the step sizes $\gamma_Z$ are positive constants.
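The multiplier update is easiest to see on a smaller problem. The sketch below is an assumed stand-in, not the orientation-field or directional-TV model itself: 1-D TV denoising, minimize ½‖u − f‖² + μ‖z‖₁ subject to z = Du (D = forward differences), with one multiplier vector λ. Each iteration does one alternating sweep (an ADMM-style approximation of the joint minimization over u and z) and then applies λ^k = λ^{k−1} + γ(z^k − Du^k). All function names and parameter values (`mu`, `gamma`, `n_iter`) are illustrative choices.

```python
# Sketch: augmented Lagrangian (ADMM-style) iteration for 1-D TV denoising,
#   minimize 0.5*||u - f||^2 + mu*||z||_1  subject to  z = D u.
# Illustrative stand-in only, not the orientation-field or directional-TV model.

def forward_diff(u):
    """D u: forward differences u[i+1] - u[i] (length n - 1)."""
    return [b - a for a, b in zip(u, u[1:])]

def forward_diff_adjoint(v):
    """D^T v for v of length n - 1 (result has length n)."""
    out = [0.0] * (len(v) + 1)
    for i, vi in enumerate(v):
        out[i] -= vi
        out[i + 1] += vi
    return out

def soft_threshold(v, t):
    """Proximal map of t*|.|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def solve_identity_plus_laplacian(rhs, gamma):
    """Solve (I + gamma * D^T D) u = rhs (tridiagonal) by the Thomas algorithm; n >= 2."""
    n = len(rhs)
    diag = [1.0 + 2.0 * gamma] * n
    diag[0] = diag[-1] = 1.0 + gamma
    off = -gamma                                  # constant sub- and super-diagonal
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = off / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - off * cp[i - 1]
        cp[i] = off / denom
        dp[i] = (rhs[i] - off * dp[i - 1]) / denom
    u = [0.0] * n
    u[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        u[i] = dp[i] - cp[i] * u[i + 1]
    return u

def tv_denoise_admm(f, mu, gamma=1.0, n_iter=500):
    """One alternating sweep over (u, z) per iteration, then the multiplier update."""
    z = forward_diff(f)
    lam = [0.0] * len(z)                          # one multiplier per constraint z_i = (D u)_i
    u = list(f)
    for _ in range(n_iter):
        # u-step: (I + gamma D^T D) u = f + D^T (lam + gamma z)
        shifted = [lm + gamma * zi for lm, zi in zip(lam, z)]
        rhs = [fi + gi for fi, gi in zip(f, forward_diff_adjoint(shifted))]
        u = solve_identity_plus_laplacian(rhs, gamma)
        du = forward_diff(u)
        # z-step: soft-threshold D u - lam / gamma by mu / gamma
        z = [soft_threshold(d - lm / gamma, mu / gamma) for d, lm in zip(du, lam)]
        # multiplier update: lam^k = lam^{k-1} + gamma * (z^k - D u^k)
        lam = [lm + gamma * (zi - d) for lm, zi, d in zip(lam, z, du)]
    return u

step = [0.0] * 10 + [1.0] * 10
noisy = [v + (0.1 if i % 2 else -0.1) for i, v in enumerate(step)]
denoised = tv_denoise_admm(noisy, mu=0.5)
```

Because Dᵀ annihilates constant vectors, every u-step preserves the sum of f, which gives a quick sanity check on the sketch.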



Compressed sensing reconstructs a signal by finding a solution to an underdetermined linear system. It is based on the principle that, through optimization, the sparsity of a signal can be exploited to recover it from far fewer samples than required by the Nyquist–Shannon sampling theorem. There are two conditions under which recovery is possible. The first is sparsity, which requires the signal to be sparse in some domain. The second is incoherence, which is applied through the restricted isometry property, a sufficient condition for recovering sparse signals.

Overview

A common goal of the engineering field of signal processing is to reconstruct a signal from a series of sampling measurements. In general, this task is impossible because there is no way to reconstruct a signal during the times that the signal is not measured. Nevertheless, with prior knowledge or assumptions about the signal, it turns out to be possible to perfectly reconstruct a signal from a series of measurements (acquiring this series of measurements is called sampling). Over time, engineers have improved their understanding of which assumptions are practical and how they can be generalized.

An early breakthrough in signal processing was the Nyquist–Shannon sampling theorem. It states that if a real signal's highest frequency is less than half of the sampling rate, then the signal can be reconstructed perfectly by means of sinc interpolation. The main idea is that with prior knowledge about constraints on the signal's frequencies, fewer samples are needed to reconstruct the signal.
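Sinc interpolation, x(t) = Σₙ x[n] sinc(f_s t − n), can be sketched in a few lines. This is a minimal illustration assuming uniformly spaced samples x[n] = x(n/f_s) of a signal bandlimited below f_s/2; the infinite sum is truncated to the available samples, so the result is approximate and best near the middle of the record. The function names, sampling rate and test tone are illustrative.

```python
import math

def sinc(t):
    """Normalized sinc, sin(pi t) / (pi t), with sinc(0) = 1."""
    if t == 0.0:
        return 1.0
    return math.sin(math.pi * t) / (math.pi * t)

def sinc_interpolate(samples, fs, t):
    """Whittaker-Shannon sum x(t) = sum_n x[n] * sinc(fs * t - n), truncated to the
    available samples (assumes samples[n] = x(n / fs) and a band limit below fs / 2)."""
    return sum(x_n * sinc(fs * t - n) for n, x_n in enumerate(samples))

fs = 10.0                      # sampling rate in Hz (illustrative)
f0 = 3.0                       # tone frequency, below the fs / 2 = 5 Hz limit
samples = [math.cos(2 * math.pi * f0 * n / fs) for n in range(400)]
t = 20.05                      # a point between sample instants, mid-record
approx = sinc_interpolate(samples, fs, t)
exact = math.cos(2 * math.pi * f0 * t)   # approx lands close to this value
```

At a sample instant the sum reproduces the stored sample; between instants, the truncation error shrinks as more samples surround t.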

Around 2004, Emmanuel Candès, Justin Romberg, Terence Tao, and David Donoho proved that given knowledge about a signal's sparsity, the signal may be reconstructed with even fewer samples than the sampling theorem requires. This idea is the basis of compressed sensing.

History

Compressed sensing relies on ℓ1 techniques, which several other scientific fields have used historically. In statistics, the least squares method was complemented by the ℓ1-norm, which was introduced by Laplace. Following the introduction of linear programming and Dantzig's simplex algorithm, the ℓ1-norm was used in computational statistics. In statistical theory, the ℓ1-norm was used by George W. Brown and later writers on median-unbiased estimators. It was used by Peter J. Huber and others working on robust statistics. The ℓ1-norm was also used in signal processing, for example in the 1970s, when seismologists constructed images of reflective layers within the earth based on data that did not seem to satisfy the Nyquist–Shannon criterion. It was used in matching pursuit in 1993, the LASSO estimator by Robert Tibshirani in 1996, and basis pursuit in 1998. There were theoretical results describing when these algorithms recovered sparse solutions, but the required type and number of measurements were sub-optimal and were subsequently greatly improved by compressed sensing.

At first glance, compressed sensing might seem to violate the sampling theorem, because compressed sensing depends on the sparsity of the signal in question and not its highest frequency. This is a misconception, because the sampling theorem guarantees perfect reconstruction given sufficient, not necessary, conditions. A sampling method fundamentally different from classical fixed-rate sampling cannot "violate" the sampling theorem. Sparse signals with high frequency components can be highly under-sampled using compressed sensing compared to classical fixed-rate sampling.

Method

Underdetermined linear system

An underdetermined system of linear equations has more unknowns than equations and generally has an infinite number of solutions. The figure below shows such an equation system, where we want to find a solution for the unknown vector.

Solution / reconstruction method

Compressed sensing takes advantage of the redundancy in many interesting signals: they are not pure noise. In particular, many signals are sparse, that is, they contain many coefficients close to or equal to zero when represented in some domain. This is the same insight used in many forms of lossy compression.
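Whether a signal is sparse depends on the domain it is represented in. In the sketch below (an illustrative assumption: a test signal built from two cosines, with the orthonormal DCT-II as the sparsifying domain), the time-domain samples are generally all nonzero, while only two DCT coefficients are. The O(N²) transform is written out directly for clarity; practical code would use an FFT-based DCT.

```python
import math

def dct_ii(x):
    """Orthonormal DCT-II, written as an O(N^2) sum for clarity."""
    n = len(x)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n)))
    return coeffs

def significant(values, rel_tol=1e-9):
    """Indices of entries larger than rel_tol times the largest magnitude."""
    peak = max(abs(v) for v in values)
    return [i for i, v in enumerate(values) if abs(v) > rel_tol * peak]

N = 64
# Hypothetical test signal: DCT basis functions 3 and 10 with weights 1 and 0.5.
signal = [math.cos(math.pi * (i + 0.5) * 3 / N) + 0.5 * math.cos(math.pi * (i + 0.5) * 10 / N)
          for i in range(N)]
coeffs = dct_ii(signal)
print(significant(coeffs))   # [3, 10]
```

Because the transform is orthonormal, the energy of `coeffs` equals the energy of `signal`; it is just concentrated in two entries.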

Compressed sensing typically starts with taking a weighted linear combination of samples, also called compressive measurements, in a basis different from the basis in which the signal is known to be sparse. The results found by Emmanuel Candès, Justin Romberg, Terence Tao, and David Donoho showed that the number of these compressive measurements can be small and still contain nearly all the useful information. Therefore, the task of converting the image back into the intended domain involves solving an underdetermined matrix equation, since the number of compressive measurements taken is smaller than the number of pixels in the full image. However, adding the constraint that the initial signal is sparse enables one to solve this underdetermined system of linear equations.

The least-squares solution to such problems is to minimize the ℓ2 norm, that is, to minimize the amount of energy in the system. This is usually simple mathematically, involving only a matrix multiplication by the pseudo-inverse of the basis sampled in. However, this leads to poor results for many practical applications, for which the unknown coefficients have nonzero energy.
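For a measurement matrix A with full row rank, multiplying by the pseudo-inverse gives x = Aᵀ(AAᵀ)⁻¹y, the solution of Ax = y with the smallest ℓ2 norm. The sketch below (pure Python; the Gaussian matrix, the sizes m = 8 and n = 32, and all helper names are illustrative assumptions) takes 8 measurements of a 2-sparse vector and shows that this solution fits the measurements exactly but spreads its energy over many entries instead of recovering the sparse vector.

```python
import random

def matvec(A, x):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def solve(M, b):
    """Solve the square system M z = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [list(row) + [bi] for row, bi in zip(M, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (aug[r][n] - sum(aug[r][c] * z[c] for c in range(r + 1, n))) / aug[r][r]
    return z

def min_norm_solution(A, y):
    """Least-l2-norm solution x = A^T (A A^T)^{-1} y of A x = y (A full row rank)."""
    gram = [[sum(a * b for a, b in zip(ri, rj)) for rj in A] for ri in A]   # A A^T
    z = solve(gram, y)
    return [sum(A[i][j] * z[i] for i in range(len(A))) for j in range(len(A[0]))]  # A^T z

random.seed(0)
m, n = 8, 32
A = [[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
x_true = [0.0] * n
x_true[5], x_true[17] = 1.0, -2.0       # a 2-sparse signal (illustrative)
y = matvec(A, x_true)                    # 8 measurements of 32 unknowns
x_l2 = min_norm_solution(A, y)
```

`x_l2` reproduces `y` and has a smaller ℓ2 norm than `x_true`, yet nearly all of its 32 entries are nonzero.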

To enforce the sparsity constraint when solving the underdetermined system of linear equations, one can minimize the number of nonzero components of the solution. The function counting the number of nonzero components of a vector was called the ℓ0 "norm" by David Donoho; it is not a true norm, because scaling a vector by a nonzero factor does not change its count.
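Minimizing the ℓ0 "norm" directly means searching over supports, which is combinatorial. The sketch below makes this concrete with an exhaustive search (pure Python; the random matrix, sizes and function names are illustrative assumptions): it tries every support of size 1, then size 2, and so on, and returns the first one whose least-squares fit reproduces y. The number of supports to try grows as a binomial coefficient in n, which is why this approach does not scale.

```python
import itertools
import random

def l0_count(x, tol=1e-9):
    """The l0 "norm": how many entries are (numerically) nonzero."""
    return sum(1 for v in x if abs(v) > tol)

def solve(M, b):
    """Solve the square system M z = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [list(row) + [bi] for row, bi in zip(M, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (aug[r][n] - sum(aug[r][c] * z[c] for c in range(r + 1, n))) / aug[r][r]
    return z

def sparsest_solution(A, y, max_k, tol=1e-8):
    """Exhaustive l0 minimization over all supports of size 1..max_k."""
    m, n = len(A), len(A[0])
    for k in range(1, max_k + 1):
        for support in itertools.combinations(range(n), k):
            cols = [[A[r][j] for j in support] for r in range(m)]          # m x k submatrix
            gram = [[sum(cols[r][p] * cols[r][q] for r in range(m)) for q in range(k)]
                    for p in range(k)]
            rhs = [sum(cols[r][p] * y[r] for r in range(m)) for p in range(k)]
            coef = solve(gram, rhs)                                          # least squares on the support
            fit = [sum(cols[r][p] * coef[p] for p in range(k)) for r in range(m)]
            if max(abs(fv - yr) for fv, yr in zip(fit, y)) < tol:
                x = [0.0] * n
                for j, c in zip(support, coef):
                    x[j] = c
                return x
    return None

random.seed(1)
m, n = 6, 12
A = [[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
x_true = [0.0] * n
x_true[2], x_true[9] = 1.5, -0.7        # a 2-sparse signal (illustrative)
y = [sum(a * b for a, b in zip(row, x_true)) for row in A]
x0 = sparsest_solution(A, y, max_k=3)
```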

Candès et al. proved that for many problems it is probable that the ℓ0 norm is equivalent to the ℓ1 norm, in a technical sense. This equivalence result allows one to solve the ℓ1 problem, which is easier than the ℓ0 problem. Finding the candidate with the smallest ℓ1 norm (basis pursuit),

$$\min_x \|x\|_1 \quad \text{subject to} \quad y = Ax,$$

can be expressed relatively easily as a linear program, for which efficient solution methods already exist. When measurements may contain a finite amount of noise, basis pursuit denoising,

$$\min_x \left( \tfrac{1}{2} \|y - Ax\|_2^2 + \lambda \|x\|_1 \right),$$

where $\lambda$ is a parameter that controls the trade-off between data fidelity and sparsity, is preferred over linear programming, since it preserves sparsity in the face of noise and can be solved faster than an exact linear program.
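One standard solver for the basis pursuit denoising problem is the iterative shrinkage-thresholding algorithm (ISTA), a proximal gradient method; it is swapped in here for illustration and is not prescribed by the text. Each step takes a gradient step on ½‖y − Ax‖² and then soft-thresholds by λ/L, where L must upper-bound the largest eigenvalue of AᵀA. The sketch uses the squared Frobenius norm of A for L, which is cheap but loose, so convergence is slower than with a tight bound. Function names and parameter values are illustrative.

```python
def soft_threshold(v, t):
    """Proximal map of t*|.|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def ista(A, y, lam, n_iter=500):
    """Approximately minimize 0.5*||y - A x||_2^2 + lam*||x||_1, starting from x = 0."""
    m, n = len(A), len(A[0])
    L = sum(a * a for row in A for a in row)   # ||A||_F^2 >= largest eigenvalue of A^T A
    x = [0.0] * n
    for _ in range(n_iter):
        r = [sum(A[i][j] * x[j] for j in range(n)) - y[i] for i in range(m)]   # A x - y
        g = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]           # A^T (A x - y)
        x = [soft_threshold(x[j] - g[j] / L, lam / L) for j in range(n)]
    return x

def bpdn_objective(A, y, x, lam):
    """The basis pursuit denoising cost 0.5*||y - A x||^2 + lam*||x||_1."""
    r = [sum(a * b for a, b in zip(row, x)) - yi for row, yi in zip(A, y)]
    return 0.5 * sum(v * v for v in r) + lam * sum(abs(v) for v in x)

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
x_hat = ista(identity, [3.0, 0.5, -2.0], lam=1.0)   # approximately [2.0, 0.0, -1.0]
```

With A equal to the identity, the exact minimizer is y soft-thresholded by λ, which makes a convenient check. The noise-free basis pursuit problem, by contrast, is typically handed to an LP solver after splitting x = u − v with u, v ≥ 0 and minimizing the sum of the entries of u + v subject to A(u − v) = y.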

Total variation-based CS reconstruction

Motivation and applications

Role of TV regularization

Total variation can be seen as a non-negative real-valued functional defined on the space of real-valued functions (for the case of functions of one variable) or on the space of integrable functions (for the case of functions of several variables). For signals, in particular, total variation refers to the integral of the absolute gradient of the signal. In signal and image reconstruction, it is applied as total variation regularization, where the underlying principle is that signals with excessive details have high total variation, and that removing these details while retaining important information such as edges reduces the total variation of the signal and brings it closer to the original signal.
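On sampled data, the integral of the absolute gradient becomes a sum of absolute differences. The sketch below (pure Python; forward differences and the boundary handling are assumed conventions, one of several in use) computes the 1-D total variation and an isotropic 2-D version, and shows that adding an oscillation to a step raises its TV while the clean step's TV is just the jump height.

```python
import math

def tv_1d(x):
    """Discrete 1-D total variation: sum of |x[i+1] - x[i]|."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))

def tv_2d_isotropic(img):
    """Isotropic discrete TV of a 2-D image (list of rows): sum over pixels of the
    Euclidean norm of the forward-difference gradient (no difference past the border)."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            dx = img[i][j + 1] - img[i][j] if j + 1 < cols else 0.0
            dy = img[i + 1][j] - img[i][j] if i + 1 < rows else 0.0
            total += math.hypot(dx, dy)
    return total

step = [0.0] * 10 + [1.0] * 10
noisy = [v + (0.1 if i % 2 else -0.1) for i, v in enumerate(step)]
print(tv_1d(step))    # 1.0: only the jump contributes
print(tv_1d(noisy))   # about 4.4

edge = [[0.0] * 4 + [1.0] * 4 for _ in range(4)]   # vertical edge in a 4 x 8 image
print(tv_2d_isotropic(edge))   # 4.0: one unit jump per row
```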

For the purpose of signal and image reconstruction, ℓ1 minimization models are used. Other approaches include the least-squares method discussed earlier in this article. These methods are extremely slow and return imperfect reconstructions of the signal. Current CS regularization models attempt to address this problem by incorporating sparsity priors of the original image, one of which is total variation (TV). Conventional TV approaches are designed to give piecewise-constant solutions. One of these, discussed below, is constrained ℓ1 minimization using an iterative scheme. This method, though fast, over-smooths edges, resulting in blurred image edges. TV methods with iterative reweighting have been implemented to reduce the influence of large gradient magnitudes in the images; this has been used in computed tomography (CT) reconstruction as a method known as edge-preserving total variation. However, because gradient magnitudes are used to estimate the relative penalty weights between the data fidelity and regularization terms, this method is neither robust to noise and artifacts nor accurate enough for CS image/signal reconstruction, and it therefore fails to preserve smaller structures.

Recent progress on this problem uses an iterative directional TV refinement for CS reconstruction. This method has two stages. The first stage estimates and refines the initial orientation field, which is defined as a noisy point-wise initial estimate of the given image obtained through edge detection. In the second stage, the CS reconstruction model is formulated using a directional TV regularizer. More details about these TV-based approaches (iteratively reweighted ℓ1 minimization, edge-preserving TV, and the iterative model using a directional orientation field and TV) are provided below.

Existing approaches

Iteratively reweighted ℓ1 minimization

In CS reconstruction models using constrained ℓ1 minimization, larger coefficients are penalized heavily in the ℓ1 norm. A weighted formulation of ℓ1 minimization was proposed to penalize nonzero coefficients more democratically. An iterative algorithm is used for constructing the appropriate weights (Lange, K.: Optimization, Springer Texts in Statistics. Springer, New York (2004)). Each iteration requires solving one ℓ1 minimization problem, and together the iterations find a local minimum of a concave penalty function that more closely resembles the ℓ0 norm. An additional small positive parameter, usually denoted ε, is introduced into the iterative equation to avoid sharp transitions in the penalty function curve, to ensure stability, and so that a zero estimate in one iteration does not necessarily lead to a zero estimate in the next iteration. The method essentially uses the current solution to compute the weights for the next iteration.
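The reweighting loop can be sketched as follows, using the commonly cited weight rule wᵢ = 1/(|xᵢ| + ε). As a simplifying assumption, each weighted ℓ1 subproblem here is the penalized form, minimize ½‖y − Ax‖² + λ Σ wᵢ|xᵢ|, solved approximately by weighted iterative soft thresholding rather than as an exact constrained linear program. The parameter values (`lam`, `eps`, iteration counts) and function names are illustrative.

```python
def soft_threshold(v, t):
    """Proximal map of t*|.|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def weighted_ista(A, y, lam, w, x0, n_iter=300):
    """Approximately minimize 0.5*||y - A x||^2 + lam * sum_i w_i |x_i| (warm start x0)."""
    m, n = len(A), len(A[0])
    L = sum(a * a for row in A for a in row)      # Frobenius bound on the Lipschitz constant
    x = list(x0)
    for _ in range(n_iter):
        r = [sum(A[i][j] * x[j] for j in range(n)) - y[i] for i in range(m)]
        g = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
        x = [soft_threshold(x[j] - g[j] / L, lam * w[j] / L) for j in range(n)]
    return x

def irl1_weights(x, eps):
    """w_i = 1 / (|x_i| + eps): large entries get small weights; eps keeps weights finite."""
    return [1.0 / (abs(v) + eps) for v in x]

def reweighted_l1(A, y, lam=0.05, eps=0.1, n_outer=5):
    """Alternate a weighted l1 solve with recomputing the weights from its solution."""
    n = len(A[0])
    w = [1.0] * n          # the first pass is ordinary (unweighted) l1
    x = [0.0] * n
    for _ in range(n_outer):
        x = weighted_ista(A, y, lam, w, x)
        w = irl1_weights(x, eps)
    return x

x_rw = reweighted_l1([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.04])
# x_rw[1] stays 0.0; x_rw[0] lies between 2.95 (plain l1 result) and 3.0
```

With A equal to the identity, plain ℓ1 shrinks every entry by λ; after reweighting, the large entry is shrunk much less while the small entry is still set to zero, which is the "more democratic" penalty described above.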

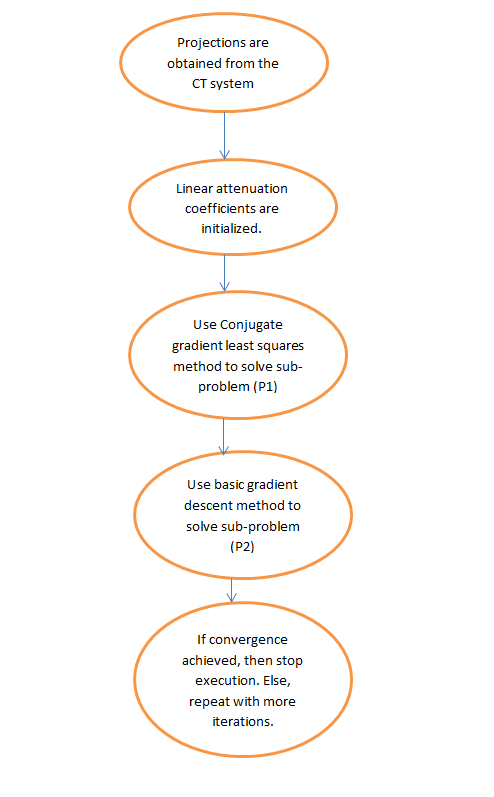

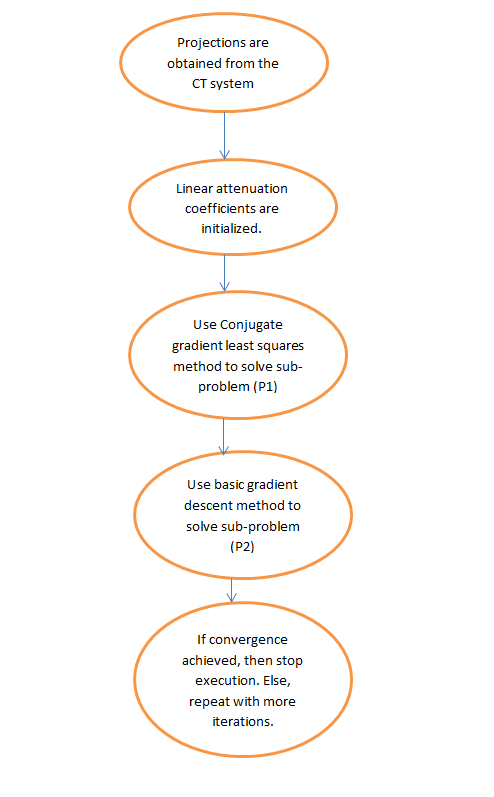

Advantages and disadvantages

Early iterations may find inaccurate sample estimates; however, this method down-weights these at a later stage to give more weight to the smaller non-zero signal estimates. One disadvantage is the need for a valid starting point, since a global minimum might not be obtained every time due to the concavity of the penalty function. Another disadvantage is that this method tends to uniformly penalize the image gradient irrespective of the underlying image structures. This causes over-smoothing of edges, especially those of low-contrast regions, which in turn leads to loss of low-contrast information. The advantages of this method include a reduced sampling rate for sparse signals, reconstruction of the image that is robust to noise and other artifacts, and the use of very few iterations. It can also help in recovering images with sparse gradients.

In the accompanying figure, P1 refers to the first step of the iterative reconstruction process, which uses the projection matrix P of the fan-beam geometry and is constrained by the data fidelity term. Its result may contain noise and artifacts, because no regularization is performed in this step. The minimization of P1 is solved through the conjugate gradient least squares method. P2 refers to the second step of the iterative reconstruction process, which uses the edge-preserving total variation regularization term to remove noise and artifacts and thus improve the quality of the reconstructed image/signal. The minimization of P2 is done through a simple gradient descent method. After each iteration, the image is tested for positivity: any voxel value that has become negative is set to zero (the voxel values are the x-ray linear attenuation coefficients at the different voxels of the patient image), and convergence is then checked.

Edge-preserving total variation (TV)-based compressed sensing

This is an iterative CT reconstruction algorithm with edge-preserving TV regularization that reconstructs CT images from highly undersampled data obtained in low-dose CT through low current levels (milliampere). One approach to reducing the imaging dose is to reduce the number of x-ray projections acquired by the scanner detectors. However, reconstructing the CT image from this insufficient projection data can cause streaking artifacts. Furthermore, using these insufficient projections in standard TV algorithms makes the problem underdetermined, leading to infinitely many possible solutions. In this method, an additional penalty weighting function is applied to the original TV norm. This allows easier detection of sharp discontinuities in image intensity and thereby adapts the weights to preserve the recovered edge information during signal/image reconstruction. A smoothing parameter controls the amount of smoothing applied to the pixels at the edges, differentiating them from non-edge pixels. Its value is changed adaptively, based on the histogram of the gradient magnitude, so that a certain percentage of pixels have gradient values larger than it. The edge-preserving total variation term thus becomes sparser, which speeds up the implementation. A two-step iteration process known as the forward-backward splitting algorithm is used: the optimization problem is split into two sub-problems, which are solved with the conjugate gradient least squares method and the simple gradient descent method, respectively. The method stops when the desired convergence has been achieved or the maximum number of iterations is reached.
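The adaptive weighting step can be sketched as below. Two details are assumptions not stated in the text: the weight function exp(−(|∇u|/δ)²), one common choice that is close to 1 in flat regions and close to 0 across strong edges, and choosing the smoothing parameter δ as the (1 − p) quantile of the gradient magnitudes so that roughly a fraction p of pixels exceed it. The names `delta`, `edge_fraction` and all functions are hypothetical, and only the weighting is shown, not the full forward-backward CT reconstruction.

```python
import math

def gradient_magnitudes(img):
    """Forward-difference gradient magnitude per pixel (difference 0 past the border)."""
    rows, cols = len(img), len(img[0])
    mags = []
    for i in range(rows):
        for j in range(cols):
            dx = img[i][j + 1] - img[i][j] if j + 1 < cols else 0.0
            dy = img[i + 1][j] - img[i][j] if i + 1 < rows else 0.0
            mags.append(math.hypot(dx, dy))
    return mags

def adaptive_delta(mags, edge_fraction):
    """Hypothetical rule: delta is the (1 - edge_fraction) quantile of the magnitudes,
    so roughly edge_fraction of the pixels have a gradient magnitude above delta."""
    ordered = sorted(mags)
    k = round((1.0 - edge_fraction) * (len(ordered) - 1))
    return ordered[k]

def edge_weights(mags, delta):
    """Assumed weight exp(-(g / delta)^2): near 1 in flat regions, near 0 at strong edges."""
    if delta <= 0.0:
        return [1.0] * len(mags)
    return [math.exp(-(g / delta) ** 2) for g in mags]

def weighted_tv(mags, weights):
    """Edge-preserving (weighted) TV: sum of w * |grad u|."""
    return sum(w * g for w, g in zip(weights, mags))

# Illustrative 8 x 8 image: a strong vertical edge plus a faint vertical ramp.
img = [[0.1 * i + (10.0 if j >= 4 else 0.0) for j in range(8)] for i in range(8)]
mags = gradient_magnitudes(img)
delta = adaptive_delta(mags, edge_fraction=0.2)
weights = edge_weights(mags, delta)
print("plain TV:", sum(mags), "edge-preserving TV:", weighted_tv(mags, weights))
```

Because the edge pixels receive weights near zero, the regularizer barely penalizes the edge itself, while the faint ramp is still penalized and can be smoothed.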

Advantages and disadvantages

Some disadvantages of this method are the absence of smaller structures in the reconstructed image and a degradation of image resolution. However, this edge-preserving TV algorithm requires fewer iterations than the conventional TV algorithm. The horizontal and vertical intensity profiles of the reconstructed images show sharp jumps at edge points and only negligible fluctuations at non-edge points. This method therefore yields a lower relative error and a higher correlation than the TV method. It also effectively suppresses image noise and image artifacts such as streaking.

Iterative model using a directional orientation field and directional total variation

This method is used to prevent over-smoothing of edges and texture details, and to obtain a reconstructed CS image that is accurate and robust to noise and artifacts. First, an initial estimate of the noisy point-wise orientation field of the image is obtained. This noisy orientation field is defined so that it can be refined at a later stage, reducing the influence of noise on the orientation field estimate. A coarse orientation field estimate is then introduced based on the structure tensor, which is formulated as $J_\rho(\nabla I_\sigma) = G_\rho * (\nabla I_\sigma \otimes \nabla I_\sigma)$. The terms are as follows:

- $J_\rho$ is the structure tensor associated with the image pixel point (i,j), with standard deviation $\rho$.
- $G_\rho$ is the Gaussian kernel with standard deviation $\rho$.
- $\sigma$ is a manually defined parameter for the image $I$, below which edge detection is insensitive to noise.
- $\nabla I_\sigma$ is the gradient of the image.
- $\nabla I_\sigma \otimes \nabla I_\sigma$ is the tensor product formed from this gradient.

The tensor product is convolved with the Gaussian kernel to improve the accuracy of the orientation estimate, with $\rho$ set to high values to account for the unknown noise levels. For every pixel (i,j) in the image, the structure tensor J is a symmetric, positive semi-definite 2×2 matrix with orthonormal eigenvectors ω and υ. ω points in the dominant orientation, which has the largest contrast. υ points along the structure orientation, which has the smallest contrast. The coarse initial estimate of the orientation field is defined as υ. This estimate is accurate at strong edges, but its reliability decreases at weak edges and in noisy regions. To overcome this drawback, a refined orientation model is defined. In this model the data term reduces the effect of noise and improves accuracy, while the second penalty term, an L2-norm fidelity term, ensures consistency with the initial coarse estimate.
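
A rough sketch of the coarse orientation estimate, assuming NumPy only. The image is pre-smoothed with a Gaussian of scale `sigma`. The entries of the gradient tensor product are then smoothed with a Gaussian of scale `rho`. Finally, the eigenvector of the smallest eigenvalue (the structure direction υ) is read off from the closed-form angle of a 2×2 symmetric matrix. The function names, default scales and edge-padding boundary handling are illustrative choices, and the refinement step is not included.

```python
import numpy as np

def gaussian_kernel(std):
    """Normalized 1-D Gaussian kernel truncated at 3 standard deviations."""
    radius = int(np.ceil(3 * std))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2 * std**2))
    return k / k.sum()

def smooth(img, std):
    """Separable Gaussian smoothing with edge padding; output has img's shape."""
    k = gaussian_kernel(std)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    conv = lambda v: np.convolve(v, k, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)  # smooth along columns index
    return np.apply_along_axis(conv, 0, rows)    # then along rows index

def orientation_field(img, sigma=1.0, rho=2.0):
    """Coarse orientation estimate from the structure tensor.

    Returns the components (vx, vy) of the unit eigenvector upsilon that
    belongs to the smallest eigenvalue, i.e. the local structure direction.
    """
    u = smooth(img, sigma)             # pre-smoothed image I_sigma
    gy, gx = np.gradient(u)            # derivatives along rows, then columns
    j11 = smooth(gx * gx, rho)         # entries of G_rho * (grad (x) grad)
    j12 = smooth(gx * gy, rho)
    j22 = smooth(gy * gy, rho)
    # Angle of the dominant eigenvector omega (largest contrast).
    theta = 0.5 * np.arctan2(2 * j12, j11 - j22)
    # upsilon is orthogonal to omega.
    return -np.sin(theta), np.cos(theta)
```

For a horizontal step edge the returned vector points horizontally (along the edge), and for a vertical step it points vertically.
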
This orientation field is then introduced into the directional total variation optimization model for CS reconstruction. In this model, the objective is the signal to be recovered, Y is the corresponding measurement vector, d is the iteratively refined orientation field, and the CS measurement matrix maps the signal to Y. The method runs for a few iterations until it converges. At each iteration, the orientation field is approximated from the image reconstructed in the previous iteration (the previous iteration is also used to check for convergence and the subsequent optical performance). For two vector fields, such as the image gradient and d, their product (written with •) multiplies the respective horizontal and vertical vector elements and then adds the results. These equations are reduced to a series of convex minimization problems. These are solved with a combination of variable splitting and the augmented Lagrangian method (an FFT-based fast solver with a closed-form solution). The augmented Lagrangian method is considered equivalent to the split Bregman iteration, which ensures convergence of this method. The orientation field d consists of horizontal and vertical components, which are the horizontal and vertical estimates of the orientation. The augmented Lagrangian method for the orientation field first initializes the variables and then finds the approximate minimizer of the augmented Lagrangian with respect to these variables. The Lagrange multipliers are then updated, and the iterative process stops when convergence is achieved. For the iterative directional total variation refinement model, the augmented Lagrangian method likewise begins by initializing its variables.
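
The "multiply and add" product between the image gradient and the orientation field can be written directly. The sketch below assumes forward differences with a replicated last row/column. It evaluates the directional TV term and a hypothetical objective that adds a least-squares data term weighted by an assumed parameter `lam`; it does not implement the solver. When d follows the edge direction, the directional derivative across a clean edge is zero, which is the intuition behind using this term to preserve edges.

```python
import numpy as np

def directional_tv(x, dh, dv):
    """Sum over pixels of |grad_h(x) * dh + grad_v(x) * dv|.

    Horizontal and vertical gradient components are multiplied element-wise
    by the matching orientation components and then added.
    """
    gh = np.diff(x, axis=1, append=x[:, -1:])  # horizontal forward difference
    gv = np.diff(x, axis=0, append=x[-1:, :])  # vertical forward difference
    return np.abs(gh * dh + gv * dv).sum()

def objective(x, y, phi, dh, dv, lam=1.0):
    """Hypothetical DTV objective: directional TV plus a weighted
    least-squares data term 0.5 * lam * ||y - phi @ x.ravel()||^2."""
    r = y - phi @ x.ravel()
    return directional_tv(x, dh, dv) + 0.5 * lam * (r @ r)
```
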

Here, four new variables are introduced, each set equal to a term of the model, together with a Lagrange multiplier for each of these constraints. At each iteration, the approximate minimizer of the augmented Lagrangian with respect to these variables is calculated. As in the field refinement model, the Lagrange multipliers are then updated, and the iterative process stops when convergence is achieved.

In both the orientation field refinement model and the iterative directional total variation refinement model, the Lagrange multipliers are updated at every iteration of this process. The update equations involve parameters that are positive constants.
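
The variable-splitting, augmented Lagrangian pattern (initialize, minimize over each variable, update the multipliers, repeat) can be illustrated on a simpler problem than the directional TV model. The sketch below applies ADMM, a form of the augmented Lagrangian method closely related to split Bregman, to 1-D TV denoising. The problem is min_x 0.5*||x - y||^2 + mu*||D x||_1, with the split z = D x. Periodic boundary conditions are assumed so that the x-update has a closed-form FFT solution. The parameter values are illustrative, not taken from the method described above.

```python
import numpy as np

def D(x):
    """Periodic forward difference: (D x)[k] = x[k+1] - x[k]."""
    return np.roll(x, -1) - x

def Dt(v):
    """Adjoint of D: (D^T v)[k] = v[k-1] - v[k]."""
    return np.roll(v, 1) - v

def soft(v, t):
    """Soft thresholding, the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def tv_denoise_admm(y, mu=0.5, rho=1.0, iters=200):
    n = len(y)
    # D^T D is circulant; its eigenvalues give a closed-form FFT solve.
    eig = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(n) / n)
    x = y.astype(float).copy()
    z = np.zeros(n)
    b = np.zeros(n)  # scaled Lagrange multiplier for the constraint z = D x
    for _ in range(iters):
        # x-update: solve (I + rho D^T D) x = y + rho D^T (z - b) via FFT.
        rhs = y + rho * Dt(z - b)
        x = np.real(np.fft.ifft(np.fft.fft(rhs) / (1.0 + rho * eig)))
        # z-update: shrink the split variable.
        z = soft(D(x) + b, mu / rho)
        # Multiplier update.
        b = b + D(x) - z
    return x
```
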

Advantages and disadvantages

Based on peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) metrics, evaluated against known ground-truth images, iterative directional total variation gives better reconstruction performance than non-iterative methods in preserving edge and texture areas. The orientation field refinement model plays a major role in this improvement. It increases the number of directionless pixels in flat areas while enhancing the consistency of the orientation field in regions with edges.
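
PSNR, one of the two metrics mentioned above, follows directly from the mean squared error against the ground-truth image: PSNR = 10·log10(MAX²/MSE), where MAX is the largest possible pixel value. Below is a minimal sketch, assuming images scaled to [0, 1] by default. SSIM is more involved and is not shown.

```python
import numpy as np

def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value**2 / mse)
```

For example, two images with values in [0, 1] that differ by 0.1 at every pixel have an MSE of 0.01 and a PSNR of 20 dB.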

Applications

The field of compressive sensing is related to several topics in signal processing and computational mathematics, such as underdetermined linear systems, group testing, heavy hitters, sparse coding, multiplexing, sparse sampling, and finite rate of innovation. Its broad scope and generality have enabled several innovative CS-enhanced approaches in signal processing and compression, solution of inverse problems, design of radiating systems, radar and through-the-wall imaging, and antenna characterization. Imaging techniques having a strong affinity with compressive sensing include coded aperture and computational photography.

Conventional CS reconstruction uses sparse signals (usually sampled at a rate lower than the Nyquist rate) for reconstruction through constrained minimization. One of the earliest applications of such an approach was in reflection seismology, which used sparse reflected signals from band-limited data to track changes between sub-surface layers. When the LASSO model came into prominence in the 1990s as a statistical method for selecting sparse models, it was further used in computational harmonic analysis for sparse signal representation from over-complete dictionaries. Other applications include incoherent sampling of radar pulses. The work by Boyd et al. has applied the LASSO model, for selecting sparse models, to analog-to-digital converters (current converters use a sampling rate higher than the Nyquist rate together with the quantized Shannon representation). This would involve a parallel architecture in which the polarity of the analog signal changes at a high rate, followed by digitizing the integral at the end of each time interval to obtain the converted digital signal.
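
The chip-and-integrate architecture in the last sentence, commonly called a random demodulator, is linear, so it can be written as a measurement matrix. The sketch below is a discrete-time simplification under assumed parameters. The signal has `n` samples at the chipping rate, is multiplied by a random ±1 sequence, and is summed over `m` equal windows. This gives `m < n` measurements, which a sparse solver such as LASSO would then invert. The function name is hypothetical.

```python
import numpy as np

def random_demodulator_matrix(n, m, rng):
    """Hypothetical discrete random-demodulator model.

    Flip the polarity of the length-n signal with random +/-1 chips, then
    integrate (sum) over m consecutive windows of n // m samples each.
    """
    assert n % m == 0, "window length must divide n"
    chips = rng.choice([-1.0, 1.0], size=n)
    window = n // m
    integrate = np.kron(np.eye(m), np.ones(window))  # m x n block sums
    return integrate * chips  # same as integrate @ np.diag(chips)

rng = np.random.default_rng(1)
Phi = random_demodulator_matrix(64, 8, rng)
signal = np.cos(2 * np.pi * 5 * np.arange(64) / 64)  # sparse in frequency
measurements = Phi @ signal  # 8 numbers from a 64-sample signal
```
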

Photography

Compressed sensing has been used in an experimental mobile phone camera sensor. The approach reduces image acquisition energy per image by as much as a factor of 15, at the cost of complex decompression algorithms; the computation may require an off-device implementation. Compressed sensing is used in single-pixel cameras from Rice University. Bell Labs employed the technique in a lensless single-pixel camera that takes stills using repeated snapshots of randomly chosen apertures from a grid. Image quality improves with the number of snapshots. The camera generally needs only a small fraction of the data of conventional imaging, and it eliminates lens/focus-related aberrations.
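
The measurement model of such a single-pixel camera is an inner product between the scene and each random aperture pattern. Below is a hedged simulation, assuming 0/1 masks drawn uniformly at random and a noiseless detector. Reconstruction from the measurements, for example with a sparse solver, is not shown, and the function name is hypothetical.

```python
import numpy as np

def single_pixel_measurements(image, num_snapshots, rng):
    """Simulate a lensless single-pixel camera.

    Each snapshot opens a random subset of apertures on the grid (a 0/1
    mask). The single detector records the total light through the open
    apertures, i.e. the inner product of the mask with the scene.
    """
    masks = rng.integers(0, 2, size=(num_snapshots, image.size)).astype(float)
    return masks, masks @ image.ravel()

rng = np.random.default_rng(0)
scene = rng.random((16, 16))
masks, y = single_pixel_measurements(scene, 64, rng)  # 64 of 256 values
```
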

Holography

Compressed sensing can be used to improve image reconstruction in holography by increasing the number of voxels one can infer from a single hologram. It is also used for image retrieval from undersampled measurements in optical and millimeter-wave holography.

Facial recognition

Compressed sensing has been used in facial recognition applications.

Magnetic resonance imaging

Compressed sensing has been used to shorten magnetic resonance imaging scanning sessions on conventional hardware. Reconstruction methods include

* ISTA

* FISTA

* SISTA

* ePRESS

* EWISTA

* EWISTARS etc.

Compressed sensing addresses the issue of long scan times by enabling faster acquisition through measuring fewer Fourier coefficients. This produces a high-quality image with a relatively short scan time. Another application, also discussed above, is CT reconstruction with fewer X-ray projections. In this case, compressed sensing removes the high spatial gradient parts, mainly image noise and artifacts. This makes it possible to obtain high-resolution CT images at low radiation doses (through lower current-mA settings).
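
ISTA, the first method in the list above, alternates a gradient step on the data term with soft thresholding. The sketch below applies it to a signal observed through a random half of its unitary DFT coefficients, mimicking undersampled Fourier acquisition. For simplicity it assumes the signal itself is sparse and real-valued. In MRI the image is usually sparse only after a transform such as a wavelet transform, which this sketch omits. A step size of 1 is valid because a subset of rows of a unitary matrix has spectral norm at most 1. The regularization weight `lam` and the iteration count are illustrative.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista_partial_fourier(y, rows, n, lam=0.01, iters=500):
    """ISTA for min_x 0.5*||F_rows x - y||^2 + lam*||x||_1 over real x,
    where F_rows keeps the given rows of the unitary n-point DFT."""
    x = np.zeros(n)
    for _ in range(iters):
        # Residual on the measured Fourier coefficients only.
        residual = np.fft.fft(x, norm="ortho")[rows] - y
        full = np.zeros(n, dtype=complex)
        full[rows] = residual  # zero-fill the unmeasured coefficients
        # Adjoint F_rows^H; the real part is the gradient for real x.
        grad = np.real(np.fft.ifft(full, norm="ortho"))
        x = soft_threshold(x - grad, lam)
    return x

rng = np.random.default_rng(0)
n = 128
x_true = np.zeros(n)
x_true[rng.choice(n, 5, replace=False)] = [1.0, -1.0, 2.0, 1.5, -2.0]
rows = rng.choice(n, 64, replace=False)  # measure half the coefficients
y = np.fft.fft(x_true, norm="ortho")[rows]
x_hat = ista_partial_fourier(y, rows, n)
```
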

Network tomography

Compressed sensing has shown outstanding results in the application of network tomography to network management. Network delay estimation and network congestion detection can both be modeled as underdetermined systems of linear equations, where the coefficient matrix is the network routing matrix. Moreover, in the Internet, network routing matrices usually satisfy the criterion for using compressed sensing.
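
Here is a toy example of the linear model, using a hypothetical topology of 5 links and 3 measured end-to-end paths. The routing matrix R has one row per path and one column per link, and the path measurements are y = R x. Because there are fewer paths than links, R x = y alone has infinitely many solutions. Compressed sensing exploits the assumption that only a few links are congested, i.e. that x is sparse.

```python
import numpy as np

# Hypothetical topology: R[p, l] = 1 if measured path p traverses link l.
R = np.array([
    [1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 1, 1],
], dtype=float)

# Sparse per-link delay (or congestion) vector: only link 2 is congested.
x = np.array([0.0, 0.0, 5.0, 0.0, 0.0])

# Observed end-to-end path measurements.
y = R @ x

# Rank 3 with 5 unknowns: the system is underdetermined.
rank = np.linalg.matrix_rank(R)
```
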

Shortwave-infrared cameras

In 2013 one company announced shortwave-infrared cameras that use compressed sensing. These cameras have light sensitivity from 0.9 μm to 1.7 μm, wavelengths invisible to the human eye.

Aperture synthesis astronomy

In radio astronomy and optical astronomical interferometry, full coverage of the Fourier plane is usually absent, and phase information is not obtained in most hardware configurations. Various compressed sensing algorithms are employed to obtain aperture synthesis images. The Högbom CLEAN algorithm has been in use since 1974 to reconstruct images obtained from radio interferometers, and it is similar to the matching pursuit algorithm mentioned above.
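
For comparison with matching pursuit, here is a minimal 1-D sketch of Högbom CLEAN. It repeatedly finds the peak of the residual (dirty) image and adds a fraction of it, the loop gain, to a point-source model. It then subtracts the correspondingly shifted and scaled point spread function (PSF). The 1-D setting, the centred odd-length PSF normalized to a peak of 1, and the stopping rule are assumptions for the example. Real implementations work on 2-D images and usually convolve the model with a clean beam afterwards.

```python
import numpy as np

def hogbom_clean(dirty, psf, gain=0.1, threshold=1e-3, max_iter=1000):
    """1-D Hogbom CLEAN; `psf` has odd length with its unit peak centred."""
    residual = np.asarray(dirty, dtype=float).copy()
    model = np.zeros_like(residual)
    half = len(psf) // 2
    n = len(residual)
    for _ in range(max_iter):
        k = int(np.argmax(np.abs(residual)))  # brightest residual pixel
        peak = residual[k]
        if abs(peak) < threshold:
            break
        model[k] += gain * peak  # add a point component to the model
        # Subtract the PSF centred at k, clipped to the array bounds.
        lo, hi = max(0, k - half), min(n, k + half + 1)
        residual[lo:hi] -= gain * peak * psf[half - (k - lo): half + (hi - k)]
    return model, residual

psf = np.array([0.25, 0.5, 1.0, 0.5, 0.25])
sky = np.zeros(64)
sky[20], sky[40] = 1.0, 0.5
dirty = np.convolve(sky, psf, mode="same")
model, residual = hogbom_clean(dirty, psf)
```
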

Transmission electron microscopy

Compressed sensing combined with a moving aperture has been used to increase the acquisition rate of images in a transmission electron microscope. In scanning mode, compressive sensing combined with random scanning of the electron beam has enabled both faster acquisition and a lower electron dose. This allows imaging of materials that are sensitive to the electron beam.

See also

* Noiselet

* Sparse approximation

* Sparse coding

* Low-density parity-check code

* Compressed sensing in speech signals

Notes

References

Further reading

* "The Fundamentals of Compressive Sensing" (Part 1 and Part 3), video tutorial by Mark Davenport, Georgia Tech, at SigView, the IEEE Signal Processing Society Tutorial Library

* Using Math to Turn Lo-Res Datasets Into Hi-Res Samples, Wired Magazine article

* Compressive Sensing Resources at Rice University

* Compressed Sensing Makes Every Pixel Count, article in the AMS What's Happening in the Mathematical Sciences series

* Wiki on sparse reconstruction
