The history of general-purpose CPUs is a continuation of the earlier history of computing hardware.

1950s: Early designs

In the early 1950s, each computer design was unique. There were no upward-compatible machines or computer architectures with multiple, differing implementations. Programs written for one machine would run on no other kind, even other kinds from the same company. This was not a major drawback then because no large body of software had been developed to run on computers, so starting programming from scratch was not seen as a large barrier.

The design freedom of the time was important because designers were tightly constrained by the cost of electronics and were only starting to explore how a computer could best be organized. Some of the basic features introduced during this period included index registers (on the Ferranti Mark 1), a return-address saving instruction (UNIVAC I), immediate operands (IBM 704), and detection of invalid operations (IBM 650).

By the end of the 1950s, commercial builders had developed factory-constructed, truck-deliverable computers. The most widely installed computer was the IBM 650, which used drum memory onto which programs were loaded using either punched paper tape or punched cards. Some very high-end machines also included core memory, which provided higher speeds. Hard disks were also starting to grow popular.

A computer is an automatic abacus. The type of number system affects the way it works. In the early 1950s, most computers were built for specific numerical processing tasks, and many machines used decimal numbers as their basic number system; that is, the mathematical functions of the machines worked in base-10 instead of base-2 as is common today. These were not merely binary-coded decimal (BCD). Most machines had ten vacuum tubes per digit in each processor register. Some early Soviet computer designers implemented systems based on ternary logic; that is, each digit (a trit, rather than a bit) could have three states: +1, 0, or -1, corresponding to positive, zero, or negative voltage.
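As a rough illustration of three-state digits, the sketch below converts integers to and from balanced ternary, in which every digit is -1, 0, or +1. The function names are hypothetical, and the code is not meant to model any particular Soviet machine.

```python
def to_balanced_ternary(n: int) -> list[int]:
    """Return the balanced-ternary digits of n, most significant first.

    Each digit is -1, 0, or +1.
    """
    if n == 0:
        return [0]
    digits = []
    while n != 0:
        r = n % 3              # Python's % yields 0, 1, or 2, also for negative n
        if r == 2:
            r = -1             # write -1 here and carry one into the next position
        digits.append(r)
        n = (n - r) // 3
    return digits[::-1]


def from_balanced_ternary(digits: list[int]) -> int:
    """Inverse of to_balanced_ternary."""
    value = 0
    for d in digits:
        value = value * 3 + d
    return value
```

One property worth noticing is that negative numbers need no separate sign digit: negating a value just flips the sign of every digit.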

An early project for the U.S. Air Force, BINAC, attempted to make a lightweight, simple computer by using binary arithmetic. It deeply impressed the industry.

As late as 1970, major computer languages were unable to standardize their numeric behavior because decimal computers had groups of users too large to alienate.

Even when designers used a binary system, they still had many odd ideas. Some used sign-magnitude arithmetic (-1 = 10001), or ones' complement (-1 = 11110), rather than modern two's complement arithmetic (-1 = 11111). Most computers used six-bit character sets because they adequately encoded Hollerith punched cards. It was a major revelation to designers of this period to realize that the data word should be a multiple of the character size. They began to design computers with 12-, 24- and 36-bit data words (e.g., see the TX-2).
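The three encodings of -1 quoted in the paragraph above can be reproduced with a short sketch. It assumes a 5-bit word, which is what the examples imply; the function names are hypothetical, and no range checking is done.

```python
WIDTH = 5  # assumed word size, matching the 5-bit examples in the text


def sign_magnitude(n: int, width: int = WIDTH) -> str:
    """Top bit holds the sign; the remaining bits hold |n|."""
    sign = 1 if n < 0 else 0
    return format((sign << (width - 1)) | abs(n), f"0{width}b")


def ones_complement(n: int, width: int = WIDTH) -> str:
    """A negative value is the bitwise inverse of the positive value."""
    mask = (1 << width) - 1
    bits = abs(n)
    if n < 0:
        bits = ~bits & mask
    return format(bits, f"0{width}b")


def twos_complement(n: int, width: int = WIDTH) -> str:
    """A negative value is 2**width - |n|, i.e. n reduced modulo 2**width."""
    mask = (1 << width) - 1
    return format(n & mask, f"0{width}b")


for encode in (sign_magnitude, ones_complement, twos_complement):
    print(encode.__name__, encode(-1))
```

Sign-magnitude and ones' complement both have two encodings of zero (for example 00000 and 11111 in ones' complement), which is one reason two's complement eventually won out.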

In this era, Grosch's law dominated computer design: computer performance increased as the square of its cost, so a machine's cost grew only as the square root of its speed.

1960s: Computer revolution and CISC

One major problem with early computers was that a program for one would work on no others. Computer companies found that their customers had little reason to remain loyal to a given brand, as the next computer they bought would be incompatible anyway. At that point, the only concerns were usually price and performance. In 1962, IBM tried a new approach to designing computers. The plan was to make a family of computers that could all run the same software, but with different performance and at different prices. As users' needs grew, they could move up to larger computers and still keep all of their investment in programs, data and storage media. To do this, they designed one ''reference computer'' named ''System/360'' (S/360). This was a virtual computer: a reference instruction set and a set of abilities that all machines in the family would support. To provide different classes of machines, each computer in the family would use more or less hardware emulation, and more or less microprogram emulation, to create a machine able to run the full S/360 instruction set.

For instance, a low-end machine could include a very simple processor for low cost. However, this would require a larger microcode emulator to provide the rest of the instruction set, which would slow it down. A high-end machine would use a much more complex processor that could directly process more of the S/360 design, and so would run a much simpler and faster emulator.

IBM chose consciously to make the reference instruction set quite complex, and very capable. Even though the computer was complex, its ''control store'' holding the microprogram would stay relatively small, and could be made with very fast memory. Another important effect was that one instruction could describe quite a complex sequence of operations. Thus the computers would generally have to fetch fewer instructions from the main memory, which could be made slower, smaller and less costly for a given mix of speed and price.

As the S/360 was to be a successor to both scientific machines like the IBM 7090 and data processing machines like the IBM 1401, it needed a design that could reasonably support all forms of processing. Hence the instruction set was designed to manipulate simple binary numbers, text, scientific floating-point numbers (similar to the numbers used in a calculator), and the binary-coded decimal arithmetic needed by accounting systems.

Almost all following computers included these innovations in some form. This basic set of features is now called ''complex instruction set computing'' (CISC, pronounced "sisk"), a term not invented until many years later, when ''reduced instruction set computing'' (RISC) began to get market share.

In many CISCs, an instruction could access either registers or memory, usually in several different ways. This made the CISCs easier to program, because a programmer needed to remember only thirty to a hundred instructions and a set of three to ten addressing modes, rather than thousands of distinct instructions. This was called an ''orthogonal instruction set''. The PDP-11 and Motorola 68000 architectures are examples of nearly orthogonal instruction sets.
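The counting argument behind orthogonality can be sketched in a few lines. The operation and addressing-mode names below are hypothetical placeholders, not the instruction set of the PDP-11, the 68000, or any other real machine, and the sketch assumes a single operand per instruction.

```python
from itertools import product

# Hypothetical operations and addressing modes, for illustration only.
OPERATIONS = ["MOV", "ADD", "SUB", "CMP"]
ADDRESSING_MODES = ["register", "immediate", "absolute", "indexed"]


def all_forms(operations, modes):
    """In a fully orthogonal set, every operation accepts every mode."""
    return list(product(operations, modes))


forms = all_forms(OPERATIONS, ADDRESSING_MODES)
things_to_learn = len(OPERATIONS) + len(ADDRESSING_MODES)
print(f"{things_to_learn} concepts to learn, {len(forms)} distinct instruction forms")
```

The programmer learns the two short lists, while the number of usable instruction forms grows as their product.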

There was also the ''BUNCH'' (Burroughs, UNIVAC, NCR, Control Data Corporation, and Honeywell) that competed against IBM at this time; however, IBM dominated the era with S/360.

The Burroughs Corporation (which later merged with Sperry/Univac to form Unisys) offered an alternative to S/360 with their Burroughs large systems B5000 series. In 1961, the B5000 had virtual memory, symmetric multiprocessing, and a multiprogramming operating system, the Master Control Program (MCP), written in ALGOL 60. The series also had the industry's first recursive-descent compilers as early as 1964.

1970s: Microprocessor revolution

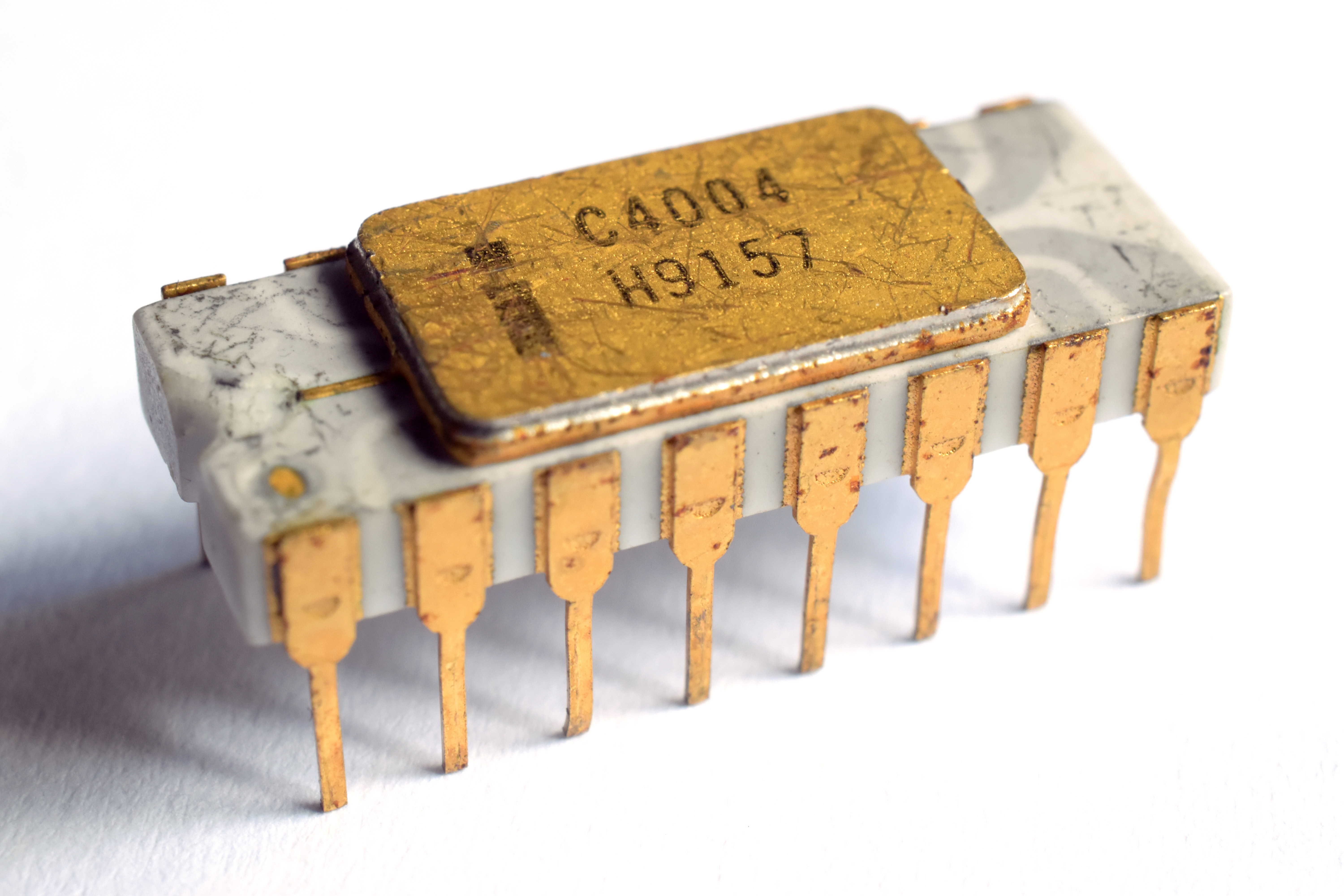

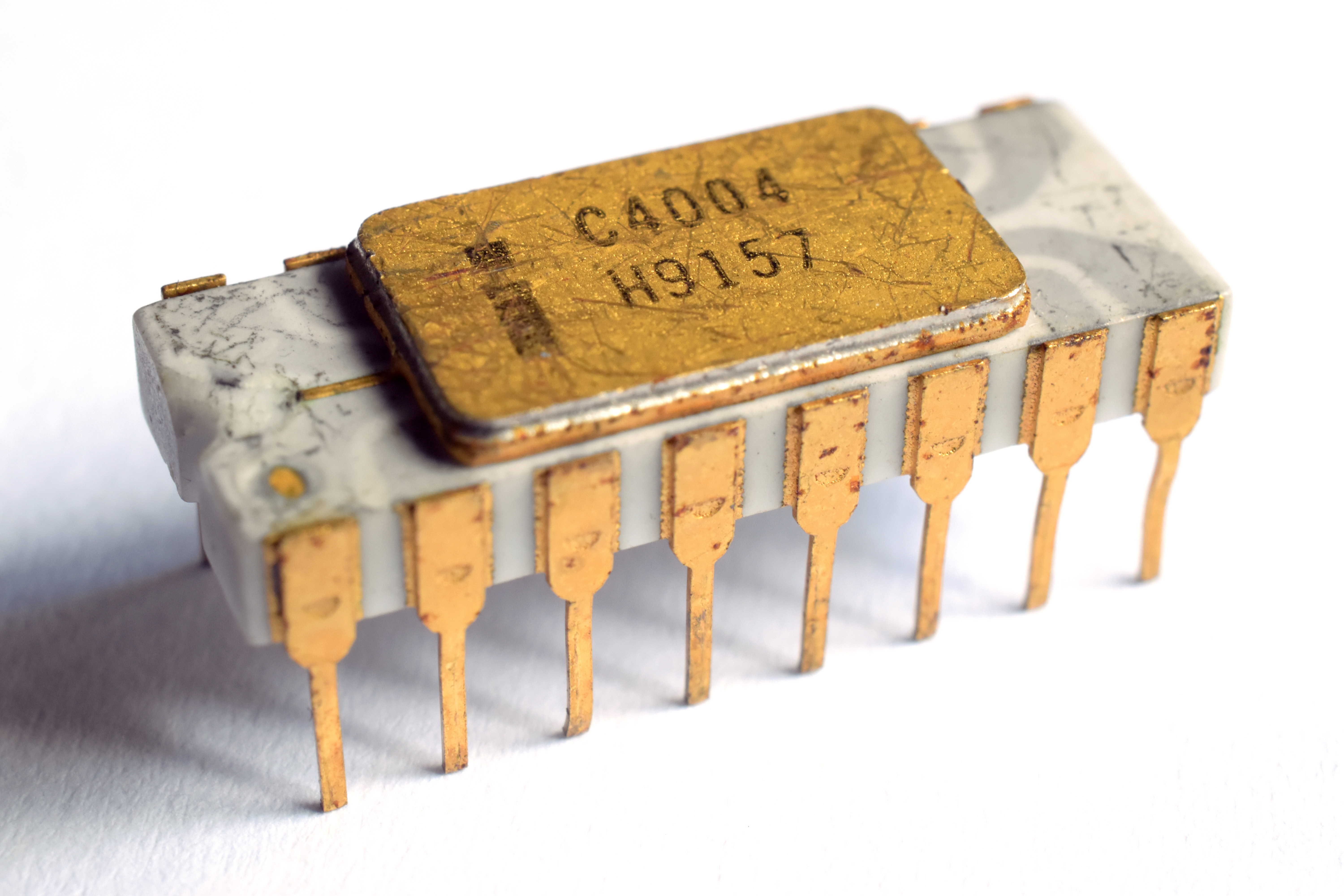

The first commercial microprocessor, the binary-coded decimal (BCD) based Intel 4004, was released by Intel in 1971. In March 1972, Intel introduced a microprocessor with an 8-bit architecture, the 8008, an integrated pMOS logic re-implementation of the transistor–transistor logic (TTL) based Datapoint 2200 CPU.

4004 designers Federico Faggin and Masatoshi Shima went on to design the 8008's successor, the Intel 8080, a slightly more minicomputer-like microprocessor, largely based on customer feedback on the limited 8008. Much like the 8008, it was used for applications such as terminals, printers, cash registers and industrial robots. However, the more able 8080 also became the original target CPU for CP/M, an early de facto standard personal computer operating system, and was used for such demanding control tasks as cruise missiles, among many other uses. Released in 1974, the 8080 became one of the first really widespread microprocessors.

By the mid-1970s, the use of integrated circuits in computers was common. The decade was marked by market upheavals caused by the shrinking price of transistors.

It became possible to put an entire CPU on one printed circuit board. The result was that minicomputers, usually with 16-bit words, and 4K to 64K of memory, became common.

CISCs were believed to be the most powerful types of computers, because their microcode was small and could be stored in very high-speed memory. The CISC architecture also addressed the ''semantic gap'' as it was then perceived. This was the distance between the machine language and the higher-level programming languages used to program a machine. It was felt that compilers could do a better job with a richer instruction set.

Custom CISCs were commonly constructed using ''bit slice'' computer logic such as the AMD 2900 chips, with custom microcode. A bit slice component is a piece of an arithmetic logic unit (ALU), register file or microsequencer. Most bit-slice integrated circuits were 4 bits wide.

By the early 1970s, the 16-bit PDP-11 minicomputer was developed, arguably the most advanced small computer of its day. In the late 1970s, wider-word superminicomputers were introduced, such as the 32-bit VAX.

IBM continued to make large, fast computers. However, the definition of large and fast now meant more than a megabyte of RAM, clock speeds near one megahertz, and tens of megabytes of disk drives.

IBM's System/370 was a version of the 360 tweaked to run virtual computing environments. The virtual computer was developed to reduce the chances of an unrecoverable software failure.

The Burroughs large systems (B5000, B6000, B7000) series reached its largest market share. It was a stack computer whose OS was programmed in a dialect of ALGOL.

All these different developments competed for market share.

The first single-chip 16-bit microprocessor was introduced in 1975. Panafacom, a conglomerate formed by Japanese companies Fujitsu, Fuji Electric, and Matsushita, introduced the MN1610, a commercial 16-bit microprocessor (PANAFACOM Lkit-16, Information Processing Society of Japan). According to Fujitsu, it was "the world's first 16-bit microcomputer on a single chip".

The Intel 8080 was the basis for the 16-bit Intel 8086, which is a direct ancestor to today's ubiquitous x86 family (including Pentium and Core i7). Every instruction of the 8080 has a direct equivalent in the large x86 instruction set, although the opcode values are different in the latter.

Early 1980s–1990s: Lessons of RISC

In the early 1980s, researchers at UC Berkeley and IBM both discovered that most computer language compilers and interpreters used only a small subset of the instructions of complex instruction set computing (CISC). Much of the power of the CPU was being ignored in real-world use. They realized that by making the computer simpler and less orthogonal, they could make it faster and less costly at the same time.

At the same time, CPU calculation became faster relative to the time needed for memory accesses. Designers also experimented with using large sets of internal registers. The goal was to cache intermediate results in the registers under the control of the compiler. This also reduced the number of addressing modes and the degree of orthogonality.

The computer designs based on this theory were called reduced instruction set computing (RISC). RISCs usually had larger numbers of registers, accessed by simpler instructions, with a few instructions specifically to load and store data to memory. The result was a very simple core CPU running at very high speed, supporting the sorts of operations the compilers were using anyway.

A common variant on the RISC design employs the Harvard architecture, versus the von Neumann architecture or stored program architecture common to most other designs. In a Harvard architecture machine, the program and data occupy separate memory devices and can be accessed simultaneously. In von Neumann machines, the data and programs are mixed in one memory device, requiring sequential accessing, which produces the so-called ''von Neumann bottleneck''.

One downside to the RISC design was that the programs that run on such machines tend to be larger. This is because compilers must generate longer sequences of the simpler instructions to produce the same results. Since these instructions must be loaded from memory anyway, the larger code offsets some of the RISC design's fast memory handling.

In the early 1990s, engineers at Japan's Hitachi found ways to compress the reduced instruction sets so they fit in even smaller memory systems than CISCs. Such compression schemes were used for the instruction set of their SuperH series of microprocessors, introduced in 1992. The SuperH instruction set was later adapted for ARM architecture's ''Thumb'' instruction set. In applications that do not need to run older binary software, compressed RISCs are growing to dominate sales.

Another approach to RISCs was the minimal instruction set computer (MISC), ''niladic'', or ''zero-operand'' instruction set. This approach arose from the realization that most of the space in an instruction was used to identify the instruction's operands. These machines placed the operands on a push-down (last-in, first-out) stack. The instruction set was supplemented with a few instructions to fetch and store memory. Most used simple caching to provide extremely fast RISC machines with very compact code. Another benefit was that interrupt latencies were very small, smaller than on most CISC machines (a rare trait in RISC machines). The Burroughs large systems architecture used this approach. The B5000 was designed in 1961, long before the term ''RISC'' was invented. The architecture puts six 8-bit instructions in a 48-bit word, and was a precursor to ''very long instruction word'' (VLIW) design (see below: 1990 to today).
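The zero-operand idea can be illustrated with a minimal sketch. The mnemonics (`push`, `add`, `mul`) and the `run` helper are hypothetical and do not correspond to the Burroughs instruction set or any real MISC chip; the point is only that arithmetic instructions name no operands, because they implicitly use the top of the stack.

```python
def run(program):
    """Execute a list of stack-machine instructions and return the final stack."""
    stack = []
    for instr in program:
        op = instr[0]
        if op == "push":
            # The only instruction that carries a value; all others are zero-operand.
            stack.append(instr[1])
        elif op == "add":
            b = stack.pop()
            a = stack.pop()
            stack.append(a + b)
        elif op == "mul":
            b = stack.pop()
            a = stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown instruction: {op}")
    return stack


# (2 + 3) * 4 expressed without naming any register
program = [("push", 2), ("push", 3), ("add",), ("push", 4), ("mul",)]
print(run(program))  # [20]
```

Because `add` and `mul` need no operand fields, each could in principle be encoded in only a few bits, which is where the compact code comes from.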

The Burroughs architecture was one of the inspirations for Charles H. Moore's programming language Forth, which in turn inspired his later MISC chip designs. For example, his f20 cores had 31 5-bit instructions, which fit four to a 20-bit word.
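The arithmetic of fitting four 5-bit instructions into a 20-bit word can be sketched as follows. The slot order (first instruction in the most significant bits) and the helper names `pack` and `unpack` are assumptions made for illustration, not a description of Moore's actual encoding.

```python
SLOT_BITS = 5        # width of one instruction slot
SLOTS_PER_WORD = 4   # 4 slots x 5 bits = 20-bit word


def pack(opcodes):
    """Pack four 5-bit opcodes into one 20-bit word (first opcode in the high bits)."""
    if len(opcodes) != SLOTS_PER_WORD:
        raise ValueError("expected exactly four opcodes")
    word = 0
    for op in opcodes:
        if not 0 <= op < (1 << SLOT_BITS):
            raise ValueError("opcode does not fit in 5 bits")
        word = (word << SLOT_BITS) | op
    return word


def unpack(word):
    """Recover the four opcodes from a 20-bit word, in their original order."""
    mask = (1 << SLOT_BITS) - 1
    return [(word >> (SLOT_BITS * i)) & mask
            for i in reversed(range(SLOTS_PER_WORD))]


word = pack([1, 2, 3, 4])
print(word)          # 34916
print(unpack(word))  # [1, 2, 3, 4]
```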

RISC chips now dominate the market for 32-bit embedded systems. Smaller RISC chips are even growing common in the cost-sensitive 8-bit embedded-system market. The main market for RISC CPUs has been systems that need low power or small size.

Even some CISC processors (based on architectures that were created before RISC grew dominant), such as newer x86 processors, translate instructions internally into a RISC-like instruction set.

This may surprise many, because the ''market'' is often perceived as desktop computers. x86 designs dominate desktop and notebook computer sales, but such computers are only a tiny fraction of the computers now sold. Most people in industrialised countries own more computers in embedded systems in their car and house than on their desks.

Mid-to-late 1980s: Exploiting instruction level parallelism

In the mid-to-late 1980s, designers began using a technique termed ''instruction pipelining'', in which the processor works on multiple instructions in different stages of completion. For example, the processor can retrieve the operands for the next instruction while calculating the result of the current one. Modern CPUs may use over a dozen such stages. (Pipelining was originally developed in the late 1950s by International Business Machines (IBM) on their 7030 (Stretch) mainframe computer.) Minimal instruction set computers (MISC) can execute instructions in one cycle with no need for pipelining.
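The benefit of overlapping stages can be shown with a small idealized calculation. The sketch below assumes every stage takes exactly one cycle and that there are no stalls or hazards, which real pipelines do not achieve; the function names are illustrative.

```python
def unpipelined_cycles(n_instructions, n_stages):
    """Each instruction passes through all stages before the next one starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions, n_stages):
    """Ideal pipeline: the first instruction fills the pipeline, then one
    instruction completes per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


print(unpipelined_cycles(100, 5))  # 500
print(pipelined_cycles(100, 5))    # 104
```

Under these assumptions, the speedup approaches the number of stages as the instruction count grows.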

A similar idea, introduced only a few years later, was to execute multiple instructions in parallel on separate arithmetic logic units (ALUs). Instead of operating on only one instruction at a time, the CPU will look for several similar instructions that do not depend on each other, and execute them in parallel. This approach is called superscalar processor design.

Such methods are limited by the degree of instruction level parallelism (ILP), the number of non-dependent instructions in the program code. Some programs can run very well on superscalar processors due to their inherent high ILP, notably graphics. However, more general problems have far less ILP, thus lowering the possible speedups from these methods.
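How dependencies limit ILP can be sketched with a toy model. It assumes unit latency for every instruction, unlimited execution units, and that only true (read-after-write) dependencies matter; the `(dest, sources)` instruction format and the function names are hypothetical.

```python
def schedule_levels(instrs):
    """Return the earliest step at which each instruction can execute.

    instrs is a list of (dest, sources) tuples in program order.
    """
    ready = {}   # register name -> step at which its value becomes available
    levels = []
    for dest, sources in instrs:
        # An instruction can run once all of its source values are available.
        step = max((ready.get(s, 0) for s in sources), default=0)
        levels.append(step)
        ready[dest] = step + 1
    return levels


def ilp(instrs):
    """Average number of instructions executed per step."""
    if not instrs:
        return 0.0
    levels = schedule_levels(instrs)
    return len(instrs) / (max(levels) + 1)


independent = [("r1", ["a", "b"]), ("r2", ["c", "d"]),
               ("r3", ["e", "f"]), ("r4", ["g", "h"])]
chain = [("r1", ["a", "b"]), ("r2", ["r1", "c"]),
         ("r3", ["r2", "d"]), ("r4", ["r3", "e"])]

print(ilp(independent))  # 4.0
print(ilp(chain))        # 1.0
```

The four independent instructions can all run in one step, while the dependent chain gains nothing from extra ALUs.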

Branching is one major culprit. For example, a program may add two numbers and branch to a different code segment if the sum is bigger than a third number. In this case, even if the branch operation is sent to the second ALU for processing, it still must wait for the results from the addition. It thus runs no faster than if there were only one ALU. The most common solution for this type of problem is to use a type of branch prediction.
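One widely taught form of branch prediction is the two-bit saturating counter; the text above does not name a specific scheme, so this choice is an assumption made for illustration. The sketch tracks a single branch and measures how often the prediction matches the actual outcome.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self):
        self.counter = 2  # start in the "weakly taken" state (an arbitrary choice)

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def accuracy(outcomes):
    """Fraction of branch outcomes that the predictor guessed correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# A loop branch: taken nine times, then falls through; the loop runs three times.
loop_branch = ([True] * 9 + [False]) * 3
print(accuracy(loop_branch))  # 0.9
```

Only the loop exit is mispredicted on each pass, because a single not-taken outcome is not enough to flip the prediction.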

Operand register dependencies were found to be another factor limiting the efficiency of the multiple functional units available in superscalar designs. To minimize these dependencies, out-of-order execution of instructions was introduced. In such a scheme, the instruction results which complete out-of-order must be re-ordered in program order by the processor for the program to be restartable after an exception. Out-of-order execution was the main advance of the computer industry during the 1990s.

A similar concept is speculative execution, where instructions from one direction of a branch (the predicted direction) are executed before the branch direction is known. When the branch direction is known, the predicted direction and the actual direction are compared. If the predicted direction was correct, the speculatively executed instructions and their results are kept; if it was incorrect, these instructions and their results are erased. Speculative execution, coupled with an accurate branch predictor, gives a large performance gain.

These advances, which were originally developed from research for RISC-style designs, allow modern CISC processors to execute twelve or more instructions per clock cycle, when traditional CISC designs could take twelve or more cycles to execute one instruction.

The resulting instruction scheduling logic of these processors is large, complex and difficult to verify. Further, higher complexity needs more transistors, raising power consumption and heat. In these respects, RISC is superior because the instructions are simpler, have less interdependence, and make superscalar implementations easier. However, as Intel has demonstrated, the concepts can be applied to a complex instruction set computing (CISC) design, given enough time and money.

1990 to today: Looking forward

VLIW and EPIC

The instruction scheduling logic that makes a superscalar processor is boolean logic. In the early 1990s, a significant innovation was to realize that the coordination of a multi-ALU computer could be moved into the compiler, the software that translates a programmer's instructions into machine-level instructions.

This type of computer is called a ''very long instruction word'' (VLIW) computer.

Scheduling instructions statically in the compiler (versus scheduling dynamically in the processor) can reduce CPU complexity. This can improve performance, and reduce heat and cost.
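Static scheduling can be sketched as a compiler pass that packs instructions into fixed-width bundles ahead of time. The sketch below is a simple greedy scheduler, not the algorithm of any particular VLIW compiler; it assumes unit latency, that each register is written only once, and a hypothetical `(name, dest, sources)` instruction format.

```python
def bundle(instrs, width):
    """Greedily place each instruction in the earliest bundle that comes after
    all of its producers and still has a free slot. Unused slots become nops."""
    produced_in = {}   # register name -> index of the bundle that writes it
    bundles = []
    for name, dest, sources in instrs:
        # Must issue strictly after every bundle that produces one of its inputs.
        earliest = max((produced_in[s] + 1 for s in sources if s in produced_in),
                       default=0)
        i = earliest
        while i < len(bundles) and len(bundles[i]) >= width:
            i += 1
        while len(bundles) <= i:
            bundles.append([])
        bundles[i].append(name)
        produced_in[dest] = i
    return [b + ["nop"] * (width - len(b)) for b in bundles]


instrs = [
    ("add1", "t1", ["a", "b"]),
    ("add2", "t2", ["c", "d"]),
    ("mul",  "t3", ["t1", "t2"]),
    ("sub",  "t4", ["t3", "e"]),
]
for b in bundle(instrs, width=2):
    print(b)
# ['add1', 'add2']
# ['mul', 'nop']
# ['sub', 'nop']
```

The `nop` padding shows the cost of this approach: when the code has little parallelism, issue slots go unused, and the hardware does nothing at run time to fill them.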

Unfortunately, the compiler lacks accurate knowledge of runtime scheduling issues. Merely changing the CPU core frequency multiplier will have an effect on scheduling. Operation of the program, as determined by input data, will have major effects on scheduling. To overcome these severe problems, a VLIW system may be enhanced by adding the normal dynamic scheduling, losing some of the VLIW advantages.

Static scheduling in the compiler also assumes that dynamically generated code will be uncommon. Before the creation of Java and the Java virtual machine, this was true. It was reasonable to assume that slow compiles would only affect software developers. Now, with just-in-time compilation (JIT) virtual machines being used for many languages, slow code generation affects users also.

There were several unsuccessful attempts to commercialize VLIW. The basic problem is that a VLIW computer does not scale to different price and performance points, as a dynamically scheduled computer can. Another issue is that compiler design for VLIW computers is very difficult, and compilers, as of 2005, often emit suboptimal code for these platforms.

Also, VLIW computers optimise for throughput, not low latency, so they were unattractive to engineers designing controllers and other computers embedded in machinery. The embedded systems markets had often pioneered other computer improvements by providing a large market unconcerned about compatibility with older software.

In January 2000, Transmeta Corporation took the novel step of placing a compiler in the central processing unit, and making the compiler translate from a reference byte code (in their case, x86 instructions) to an internal VLIW instruction set. This method combines the hardware simplicity, low power and speed of VLIW RISC with the compact main memory system and software backward compatibility provided by popular CISC.

Intel's Itanium chip is based on what they call an explicitly parallel instruction computing (EPIC) design. This design supposedly provides the VLIW advantage of increased instruction throughput. However, it avoids some of the issues of scaling and complexity, by explicitly providing in each ''bundle'' of instructions information concerning their dependencies. This information is calculated by the compiler, as it would be in a VLIW design. The early versions are also backward-compatible with newer x86 software by means of an on-chip emulator mode. Integer performance was disappointing and despite improvements, sales in volume markets continue to be low.

Multi-threading

Current designs work best when the computer is running only one program. However, nearly all modern operating systems allow running multiple programs together. For the CPU to change over and do work on another program needs costly context switching. In contrast, multi-threaded CPUs can handle instructions from multiple programs at once.

To do this, such CPUs include several sets of registers. When a context switch occurs, the contents of the ''working registers'' are simply copied into one of a set of registers for this purpose.

Such designs often include thousands of registers instead of hundreds as in a typical design. On the downside, registers tend to be somewhat costly in chip space needed to implement them. This chip space might be used otherwise for some other purpose.

Intel calls this technology "hyperthreading" and offers two threads per core in its current Core i3, Core i5, Core i7 and Core i9 Desktop lineup (as well as in its Core i3, Core i5 and Core i7 Mobile lineup), as well as offering up to four threads per core in high-end Xeon Phi processors.

Multi-core

Multi-core CPUs are typically multiple CPU cores on the same die, connected to each other via a shared L2 or L3 cache, an on-die bus, or an on-die crossbar switch. All the CPU cores on the die share interconnect components with which to interface to other processors and the rest of the system. These components may include a front-side bus interface, a memory controller to interface with dynamic random access memory (DRAM), a cache coherent link to other processors, and a non-coherent link to the southbridge and I/O devices. The terms ''multi-core'' and ''microprocessor unit'' (MPU) have come into general use for one die having multiple CPU cores.

Intelligent RAM

One way to work around the von Neumann bottleneck is to mix a processor and DRAM all on one chip.

* The Berkeley IRAM Project

* eDRAM

* Computational RAM

* Memristor

Reconfigurable logic

Another track of development is to combine reconfigurable logic with a general-purpose CPU. In this scheme, a special computer language compiles fast-running subroutines into a bit-mask to configure the logic. Slower, or less-critical parts of the program can be run by sharing their time on the CPU. This process allows creating devices such as software radios, by using digital signal processing to perform functions usually performed by analog electronics.

Open source processors

As the lines between hardware and software increasingly blur due to progress in design methodology and availability of chips such as field-programmable gate arrays (FPGA) and cheaper production processes, even open source hardware has begun to appear. Loosely knit communities like OpenCores and RISC-V have recently announced fully open CPU architectures such as the OpenRISC, which can be readily implemented on FPGAs or in custom produced chips, by anyone, with no license fees, and even established processor makers like Sun Microsystems have released processor designs (e.g., OpenSPARC) under open-source licenses.

Asynchronous CPUs

Yet another option is a ''clockless'' or ''asynchronous CPU''. Unlike conventional processors, clockless processors have no central clock to coordinate the progress of data through the pipeline. Instead, stages of the CPU are coordinated using logic devices called ''pipeline controls'' or ''FIFO sequencers''. Basically, the pipeline controller clocks the next stage of logic when the existing stage is complete. Thus, a central clock is unneeded.

Relative to clocked logic, it may be easier to implement high performance devices in asynchronous logic:

* In a clocked CPU, no component can run faster than the clock rate. In a clockless CPU, components can run at different speeds.

* In a clocked CPU, the clock can go no faster than the worst-case performance of the slowest stage. In a clockless CPU, when a stage finishes faster than normal, the next stage can immediately take the results rather than waiting for the next clock tick. A stage might finish faster than normal because of the type of data inputs (e.g., multiplication can be very fast if it occurs by 0 or 1), or because it is running at a higher voltage or lower temperature than normal.
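The two points above can be made concrete with a simplified latency model for one item passing through a pipeline. It ignores handshaking overhead in the clockless case and clock distribution costs in the clocked case, and the delay figures (in picoseconds) are invented for illustration.

```python
def clocked_latency(worst_case_delays):
    """Every stage is given one clock period, and the period must cover the
    worst-case delay of the slowest stage."""
    period = max(worst_case_delays)
    return period * len(worst_case_delays)


def clockless_latency(actual_delays):
    """Each stage hands its result on as soon as it finishes."""
    return sum(actual_delays)


worst_case = [200, 500, 300]   # hypothetical worst-case stage delays, in ps
easy_input = [150, 200, 300]   # hypothetical delays for data that finishes early

print(clocked_latency(worst_case))    # 1500
print(clockless_latency(easy_input))  # 650
```

In this model the clocked design always pays for the worst case of the slowest stage, while the clockless design pays only for the time each stage actually takes.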

Asynchronous logic proponents believe these abilities would have these benefits:

* lower power dissipation for a given performance

* highest possible execution speeds

The biggest disadvantage of the clockless CPU is that most CPU design tools assume a clocked CPU (a synchronous circuit), so making a clockless CPU (designing an asynchronous circuit) involves modifying the design tools to handle clockless logic and doing extra testing to ensure the design avoids metastability problems.

Even so, several asynchronous CPUs have been built, including

* the ORDVAC and the identical ILLIAC I (1951)

* the ILLIAC II (1962), then the fastest computer on Earth

* the Caltech Asynchronous Microprocessor, the world's first asynchronous microprocessor (1988)

* the ARM-implementing AMULET (1993 and 2000)

* the asynchronous implementation of the MIPS Technologies R3000, named MiniMIPS (1998)

* the SEAforth multi-core processor from Charles H. Moore

Optical communication

One promising option is to eliminate the front-side bus. Modern vertical laser diodes enable this change. In theory, an optical computer's components could directly connect through a holographic or phased open-air switching system. This would provide a large increase in effective speed and design flexibility, and a large reduction in cost. Since a computer's connectors are also its most likely failure points, a busless system may be more reliable.

Further, as of 2010, modern processors use 64- or 128-bit logic. Optical wavelength superposition could allow data lanes and logic many orders of magnitude higher than electronics, with no added space or copper wires.

Optical processors

Another long-term option is to use light instead of electricity for digital logic. In theory, this could run about 30% faster and use less power, and allow a direct interface with quantum computing devices. The main problems with this approach are that, for the foreseeable future, electronic computing elements are faster, smaller, cheaper, and more reliable. Such elements are already smaller than some wavelengths of light. Thus, even waveguide-based optical logic may be uneconomic relative to electronic logic. As of 2016, most development effort is for electronic circuitry.

Ionic processors

Early experimental work has been done on using ion-based chemical reactions instead of electronic or photonic actions to implement elements of a logic processor.

Belt machine architecture

In contrast to conventional register machine or stack machine architectures, yet similar to Intel's Itanium architecture, a temporal register addressing scheme has been proposed by Ivan Godard and company that is intended to greatly reduce the complexity of CPU hardware (specifically the number of internal registers and the resulting huge multiplexer trees). Although belt positions are somewhat harder to read and debug than general-purpose register names, it aids understanding to view the belt as a moving ''conveyor belt'' where the oldest values ''drop off'' the belt and vanish. It is implemented in the Mill architecture.
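The conveyor-belt picture can be modelled in a few lines. This is a conceptual sketch only: the `Belt` class, its method names, and the belt length are hypothetical and do not reflect the Mill's actual instruction set or hardware. Operands are named by their current position on the belt (0 is the newest value), and each result pushes the older values one position along.

```python
from collections import deque


class Belt:
    """Fixed-length belt: new results enter at position 0, the oldest value falls off."""

    def __init__(self, length):
        # A deque with maxlen discards items from the opposite end when full.
        self.slots = deque(maxlen=length)

    def push_result(self, value):
        self.slots.appendleft(value)

    def __getitem__(self, position):
        return self.slots[position]

    def add(self, a, b):
        """Add the values at belt positions a and b and push the sum onto the belt."""
        self.push_result(self[a] + self[b])


belt = Belt(length=4)
belt.push_result(10)
belt.push_result(20)
belt.add(0, 1)          # 20 + 10 = 30 enters at position 0
belt.push_result(1)
belt.push_result(2)     # the belt is full, so the oldest value (10) falls off
print(list(belt.slots))  # [2, 1, 30, 20]
```

Note that the same value is addressed by a different position after every new result, which is why belt code is harder to read than code using fixed register names.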

Timeline of events

* 1964. IBM release the 32-bit IBM System/360 with memory protection.

* 1969. Intel 4004's initial design led by Intel's Ted Hoff and Busicom's Masatoshi Shima. (Federico Faggin, "The Making of the First Microprocessor", ''IEEE Solid-State Circuits Magazine'', Winter 2009, IEEE Xplore.)

* 1970. Intel 4004's design completed by Intel's Federico Faggin and Busicom's Masatoshi Shima.

* 1971. IBM release the IBM System/370, successor to System/360.

* 1971. Intel release the 4-bit Intel 4004, the first commercial microprocessor.

* 1971. NEC release the μPD707 and μPD708, a two-chip 4-bit CPU.

* 1972. IBM announce "System/370 Advanced Function", adding support for virtual memory with demand paging.

* 1972. NEC release a single-chip 4-bit microprocessor, the μPD700. ("1970s: Development and evolution of microcomputers ~ Integrated circuits"; Jeffrey A. Hart & Sangbae Kim (2001), "The Defense of Intellectual Property Rights in the Global Information Order", International Studies Association, Chicago)

* 1973. NEC release 4-bit μCOM-4 (μPD751), combining the μPD707 and μPD708 into a single microprocessor.

* 1974. Intel release the Intel 8080, an 8-bit microprocessor, designed by Federico Faggin and Masatoshi Shima.

* 1975. MOS Technology release the 8-bit MOS Technology 6502, the first integrated processor with an affordable price: $25, when the rival Motorola 6800 cost $175.

* 1976. Zilog introduce the 8-bit Zilog Z80, designed by Federico Faggin and Masatoshi Shima.

* 1977. Digital Equipment Corporation introduced its first 32-bit VAX superminicomputer, the VAX-11/780.

* 1978. Intel introduces the Intel 8086 and Intel 8088, the first x86 chips.

* 1978. Fujitsu releases the MB8843 microprocessor.

* 1979. Zilog release the Zilog Z8000, a 16-bit microprocessor, designed by Federico Faggin and Masatoshi Shima.

* 1979. Motorola introduce the Motorola 68000, a 16/32-bit microprocessor.

* 1981. Stanford MIPS introduced, one of the first reduced instruction set computing (RISC) designs.

* 1982. Intel introduces the Intel 80286, which was the first Intel processor that could run all the software written for its predecessors, the 8086 and 8088.

* 1984. Motorola introduces the Motorola 68020, which enabled full 32-bit addressing, and the 68851 memory management unit, which supported demand paging.

* 1985. Intel introduces the Intel 80386, a 32-bit microprocessor.

* 1985. ARM architecture introduced.

* 1989. Intel introduces the Intel 80486.

* 1992. Hitachi introduces the SuperH architecture, which provides the basis for ARM's Thumb instruction set.

* 1993. Intel launches the original Pentium microprocessor, the first processor with an x86 superscalar microarchitecture.

* 1994. IBM introduce the first IBM mainframe models to use single-chip microprocessors as CPUs, the IBM System/390 9672 series.

* 1994. ARM's Thumb instruction set introduced, based on Hitachi's SuperH instruction set.

* 1995. Intel introduces the Pentium Pro, which becomes the foundation for the Pentium II, Pentium III, Pentium M and Intel Core architectures.

* 2000. IBM introduce z/Architecture, the 64-bit version of their mainframe architecture.

* 2000. AMD announced x86-64, a 64-bit extension to the x86 instruction set.

* 2000. AMD hits 1 GHz with its Athlon microprocessor.

* 2000. Analog Devices introduces the Blackfin architecture.

* 2002. Intel released a Pentium 4 with hyper-threading, the first modern desktop processor to implement simultaneous multithreading (SMT).