Arithmetic logic unit on:

[Wikipedia]

[Google]

[Amazon]

In

In

An ALU is a combinational logic circuit, meaning that its outputs will change asynchronously in response to input changes. In normal operation, stable signals are applied to all of the ALU inputs and, when enough time (known as the " propagation delay") has passed for the signals to propagate through the ALU circuitry, the result of the ALU operation appears at the ALU outputs. The external circuitry connected to the ALU is responsible for ensuring the stability of ALU input signals throughout the operation, and for allowing sufficient time for the signals to propagate through the ALU before sampling the ALU result.

In general, external circuitry controls an ALU by applying signals to its inputs. Typically, the external circuitry employs

An ALU is a combinational logic circuit, meaning that its outputs will change asynchronously in response to input changes. In normal operation, stable signals are applied to all of the ALU inputs and, when enough time (known as the " propagation delay") has passed for the signals to propagate through the ALU circuitry, the result of the ALU operation appears at the ALU outputs. The external circuitry connected to the ALU is responsible for ensuring the stability of ALU input signals throughout the operation, and for allowing sufficient time for the signals to propagate through the ALU before sampling the ALU result.

In general, external circuitry controls an ALU by applying signals to its inputs. Typically, the external circuitry employs

entity alu is

port ( -- the alu connections to external circuitry:

A : in signed(7 downto 0); -- operand A

B : in signed(7 downto 0); -- operand B

OP : in unsigned(2 downto 0); -- opcode

Y : out signed(7 downto 0)); -- operation result

end alu;

architecture behavioral of alu is

begin

case OP is -- decode the opcode and perform the operation:

when "000" => Y <= A + B; -- add

when "001" => Y <= A - B; -- subtract

when "010" => Y <= A - 1; -- decrement

when "011" => Y <= A + 1; -- increment

when "100" => Y <= not A; -- 1's complement

when "101" => Y <= A and B; -- bitwise AND

when "110" => Y <= A or B; -- bitwise OR

when "111" => Y <= A xor B; -- bitwise XOR

when others => Y <= (others => 'X');

end case;

end behavioral;

In

In computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and development of both hardware and software. Computing has scientific, ...

, an arithmetic logic unit (ALU) is a combinational digital circuit In theoretical computer science, a circuit is a model of computation in which input values proceed through a sequence of gates, each of which computes a function. Circuits of this kind provide a generalization of Boolean circuits and a mathemati ...

that performs arithmetic

Arithmetic () is an elementary part of mathematics that consists of the study of the properties of the traditional operations on numbers— addition, subtraction, multiplication, division, exponentiation, and extraction of roots. In the 19th ...

and bitwise operation

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic oper ...

s on integer

An integer is the number zero (), a positive natural number (, , , etc.) or a negative integer with a minus sign ( −1, −2, −3, etc.). The negative numbers are the additive inverses of the corresponding positive numbers. In the languag ...

binary number

A binary number is a number expressed in the base-2 numeral system or binary numeral system, a method of mathematical expression which uses only two symbols: typically "0" ( zero) and "1" (one).

The base-2 numeral system is a positional notati ...

s. This is in contrast to a floating-point unit (FPU), which operates on floating point

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can ...

numbers. It is a fundamental building block of many types of computing circuits, including the central processing unit

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, a ...

(CPU) of computers, FPUs, and graphics processing unit

A graphics processing unit (GPU) is a specialized electronic circuit designed to manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, m ...

s (GPUs).

The inputs to an ALU are the data to be operated on, called operand

In mathematics, an operand is the object of a mathematical operation, i.e., it is the object or quantity that is operated on.

Example

The following arithmetic expression shows an example of operators and operands:

:3 + 6 = 9

In the above exam ...

s, and a code indicating the operation to be performed; the ALU's output is the result of the performed operation. In many designs, the ALU also has status inputs or outputs, or both, which convey information about a previous operation or the current operation, respectively, between the ALU and external status registers.

Signals

An ALU has a variety of input and output nets, which are the electrical conductors used to conveydigital signal

A digital signal is a signal that represents data as a sequence of discrete values; at any given time it can only take on, at most, one of a finite number of values. This contrasts with an analog signal, which represents continuous values; a ...

s between the ALU and external circuitry. When an ALU is operating, external circuits apply signals to the ALU inputs and, in response, the ALU produces and conveys signals to external circuitry via its outputs.

Data

A basic ALU has three parallel databuses

A bus (contracted from omnibus, with variants multibus, motorbus, autobus, etc.) is a road vehicle that carries significantly more passengers than an average car or van. It is most commonly used in public transport, but is also in use for char ...

consisting of two input operand

In mathematics, an operand is the object of a mathematical operation, i.e., it is the object or quantity that is operated on.

Example

The following arithmetic expression shows an example of operators and operands:

:3 + 6 = 9

In the above exam ...

s (''A'' and ''B'') and a result output (''Y''). Each data bus is a group of signals that conveys one binary integer number. Typically, the A, B and Y bus widths (the number of signals comprising each bus) are identical and match the native word size of the external circuitry (e.g., the encapsulating CPU or other processor).

Opcode

The ''opcode'' input is a parallel bus that conveys to the ALU an operation selection code, which is an enumerated value that specifies the desired arithmetic or logic operation to be performed by the ALU. The opcode size (its bus width) determines the maximum number of distinct operations the ALU can perform; for example, a four-bit opcode can specify up to sixteen different ALU operations. Generally, an ALU opcode is not the same as a machine language opcode, though in some cases it may be directly encoded as a bit field within a machine language opcode.Status

Outputs

The status outputs are various individual signals that convey supplemental information about the result of the current ALU operation. General-purpose ALUs commonly have status signals such as: * ''Carry-out'', which conveys the carry resulting from an addition operation, the borrow resulting from a subtraction operation, or the overflow bit resulting from a binary shift operation. * ''Zero'', which indicates all bits of Y are logic zero. * ''Negative'', which indicates the result of an arithmetic operation is negative. * '' Overflow'', which indicates the result of an arithmetic operation has exceeded the numeric range of Y. * ''Parity

Parity may refer to:

* Parity (computing)

** Parity bit in computing, sets the parity of data for the purpose of error detection

** Parity flag in computing, indicates if the number of set bits is odd or even in the binary representation of the ...

'', which indicates whether an even or odd number of bits in Y are logic one.

Upon completion of each ALU operation, the status output signals are usually stored in external registers to make them available for future ALU operations (e.g., to implement multiple-precision arithmetic) or for controlling conditional branching. The collection of bit registers that store the status outputs are often treated as a single, multi-bit register, which is referred to as the "status register" or "condition code register".

Inputs

The status inputs allow additional information to be made available to the ALU when performing an operation. Typically, this is a single "carry-in" bit that is the stored carry-out from a previous ALU operation.Circuit operation

An ALU is a combinational logic circuit, meaning that its outputs will change asynchronously in response to input changes. In normal operation, stable signals are applied to all of the ALU inputs and, when enough time (known as the " propagation delay") has passed for the signals to propagate through the ALU circuitry, the result of the ALU operation appears at the ALU outputs. The external circuitry connected to the ALU is responsible for ensuring the stability of ALU input signals throughout the operation, and for allowing sufficient time for the signals to propagate through the ALU before sampling the ALU result.

In general, external circuitry controls an ALU by applying signals to its inputs. Typically, the external circuitry employs

An ALU is a combinational logic circuit, meaning that its outputs will change asynchronously in response to input changes. In normal operation, stable signals are applied to all of the ALU inputs and, when enough time (known as the " propagation delay") has passed for the signals to propagate through the ALU circuitry, the result of the ALU operation appears at the ALU outputs. The external circuitry connected to the ALU is responsible for ensuring the stability of ALU input signals throughout the operation, and for allowing sufficient time for the signals to propagate through the ALU before sampling the ALU result.

In general, external circuitry controls an ALU by applying signals to its inputs. Typically, the external circuitry employs sequential logic

In automata theory, sequential logic is a type of logic circuit whose output depends on the present value of its input signals and on the sequence of past inputs, the input history. This is in contrast to ''combinational logic'', whose output ...

to control the ALU operation, which is paced by a clock signal of a sufficiently low frequency to ensure enough time for the ALU outputs to settle under worst-case conditions.

For example, a CPU begins an ALU addition operation by routing operands from their sources (which are usually registers) to the ALU's operand inputs, while the control unit simultaneously applies a value to the ALU's opcode input, configuring it to perform addition. At the same time, the CPU also routes the ALU result output to a destination register that will receive the sum. The ALU's input signals, which are held stable until the next clock, are allowed to propagate through the ALU and to the destination register while the CPU waits for the next clock. When the next clock arrives, the destination register stores the ALU result and, since the ALU operation has completed, the ALU inputs may be set up for the next ALU operation.

Functions

A number of basic arithmetic and bitwise logic functions are commonly supported by ALUs. Basic, general purpose ALUs typically include these operations in their repertoires:Arithmetic operations

* ''Add

Addition (usually signified by the plus symbol ) is one of the four basic operations of arithmetic, the other three being subtraction, multiplication and division. The addition of two whole numbers results in the total amount or '' sum'' of ...

'': A and B are summed and the sum appears at Y and carry-out.

* ''Add with carry'': A, B and carry-in are summed and the sum appears at Y and carry-out.

* '' Subtract'': B is subtracted from A (or vice versa) and the difference appears at Y and carry-out. For this function, carry-out is effectively a "borrow" indicator. This operation may also be used to compare the magnitudes of A and B; in such cases the Y output may be ignored by the processor, which is only interested in the status bits (particularly zero and negative) that result from the operation.

* ''Subtract with borrow'': B is subtracted from A (or vice versa) with borrow (carry-in) and the difference appears at Y and carry-out (borrow out).

* ''Two's complement (negate)'': A (or B) is subtracted from zero and the difference appears at Y.

* ''Increment'': A (or B) is increased by one and the resulting value appears at Y.

* ''Decrement'': A (or B) is decreased by one and the resulting value appears at Y.

* ''Pass through'': all bits of A (or B) appear unmodified at Y. This operation is typically used to determine the parity of the operand or whether it is zero or negative, or to load the operand into a processor register.

Bitwise logical operations

* ''AND

or AND may refer to:

Logic, grammar, and computing

* Conjunction (grammar), connecting two words, phrases, or clauses

* Logical conjunction in mathematical logic, notated as "∧", "⋅", "&", or simple juxtaposition

* Bitwise AND, a boolea ...

'': the bitwise AND of A and B appears at Y.

* '' OR'': the bitwise OR of A and B appears at Y.

* '' Exclusive-OR'': the bitwise XOR of A and B appears at Y.

* '' Ones' complement'': all bits of A (or B) are inverted and appear at Y.

Bit shift operations

ALU shift operations cause operand A (or B) to shift left or right (depending on the opcode) and the shifted operand appears at Y. Simple ALUs typically can shift the operand by only one bit position, whereas more complex ALUs employ barrel shifters that allow them to shift the operand by an arbitrary number of bits in one operation. In all single-bit shift operations, the bit shifted out of the operand appears on carry-out; the value of the bit shifted into the operand depends on the type of shift. * '' Arithmetic shift'': the operand is treated as a two's complement integer, meaning that the most significant bit is a "sign" bit and is preserved. * '' Logical shift'': a logic zero is shifted into the operand. This is used to shift unsigned integers. * '' Rotate'': the operand is treated as a circular buffer of bits so its least and most significant bits are effectively adjacent. * '' Rotate through carry'': the carry bit and operand are collectively treated as a circular buffer of bits.Applications

Multiple-precision arithmetic

In integer arithmetic computations, multiple-precision arithmetic is an algorithm that operates on integers which are larger than the ALU word size. To do this, the algorithm treats each operand as an ordered collection of ALU-size fragments, arranged from most-significant (MS) to least-significant (LS) or vice versa. For example, in the case of an 8-bit ALU, the 24-bit integer0x123456 would be treated as a collection of three 8-bit fragments: 0x12 (MS), 0x34, and 0x56 (LS). Since the size of a fragment exactly matches the ALU word size, the ALU can directly operate on this "piece" of operand.

The algorithm uses the ALU to directly operate on particular operand fragments and thus generate a corresponding fragment (a "partial") of the multi-precision result. Each partial, when generated, is written to an associated region of storage that has been designated for the multiple-precision result. This process is repeated for all operand fragments so as to generate a complete collection of partials, which is the result of the multiple-precision operation.

In arithmetic operations (e.g., addition, subtraction), the algorithm starts by invoking an ALU operation on the operands' LS fragments, thereby producing both a LS partial and a carry out bit. The algorithm writes the partial to designated storage, whereas the processor's state machine typically stores the carry out bit to an ALU status register. The algorithm then advances to the next fragment of each operand's collection and invokes an ALU operation on these fragments along with the stored carry bit from the previous ALU operation, thus producing another (more significant) partial and a carry out bit. As before, the carry bit is stored to the status register and the partial is written to designated storage. This process repeats until all operand fragments have been processed, resulting in a complete collection of partials in storage, which comprise the multi-precision arithmetic result.

In multiple-precision shift operations, the order of operand fragment processing depends on the shift direction. In left-shift operations, fragments are processed LS first because the LS bit of each partial—which is conveyed via the stored carry bit—must be obtained from the MS bit of the previously left-shifted, less-significant operand. Conversely, operands are processed MS first in right-shift operations because the MS bit of each partial must be obtained from the LS bit of the previously right-shifted, more-significant operand.

In bitwise logical operations (e.g., logical AND, logical OR), the operand fragments may be processed in any arbitrary order because each partial depends only on the corresponding operand fragments (the stored carry bit from the previous ALU operation is ignored).

Complex operations

Although an ALU can be designed to perform complex functions, the resulting higher circuit complexity, cost, power consumption and larger size makes this impractical in many cases. Consequently, ALUs are often limited to simple functions that can be executed at very high speeds (i.e., very short propagation delays), and the external processor circuitry is responsible for performing complex functions by orchestrating a sequence of simpler ALU operations. For example, computing the square root of a number might be implemented in various ways, depending on ALU complexity: * ''Calculation in a single clock'': a very complex ALU that calculates a square root in one operation. * '' Calculation pipeline'': a group of simple ALUs that calculates a square root in stages, with intermediate results passing through ALUs arranged like a factoryproduction line

A production line is a set of sequential operations established in a factory where components are assembled to make a finished article or where materials are put through a refining process to produce an end-product that is suitable for onward c ...

. This circuit can accept new operands before finishing the previous ones and produces results as fast as the very complex ALU, though the results are delayed by the sum of the propagation delays of the ALU stages. For more information, see the article on instruction pipelining.

* ''Iterative calculation'': a simple ALU that calculates the square root through several steps under the direction of a control unit.

The implementations above transition from fastest and most expensive to slowest and least costly. The square root is calculated in all cases, but processors with simple ALUs will take longer to perform the calculation because multiple ALU operations must be performed.

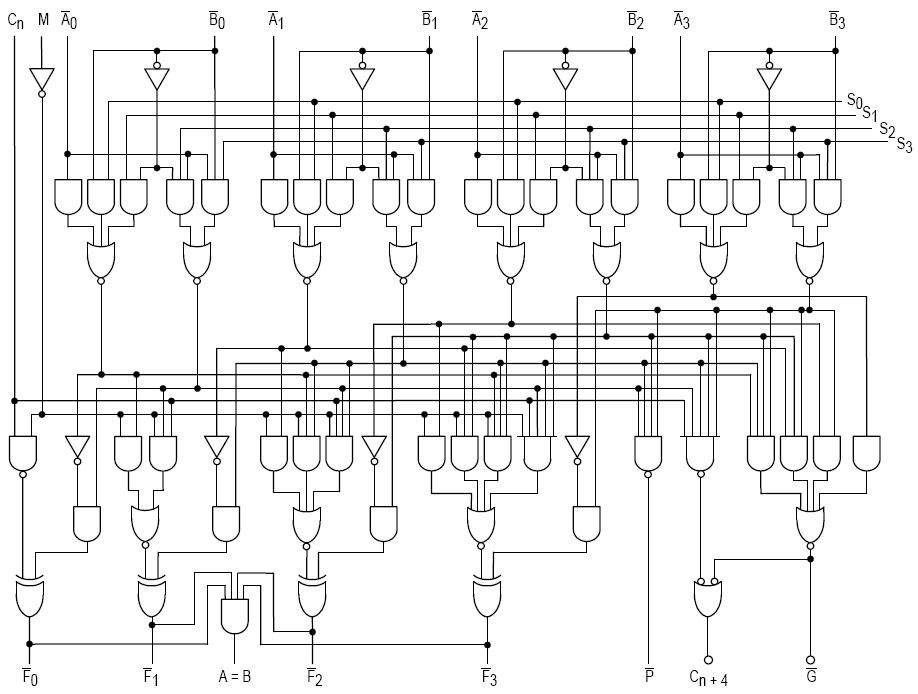

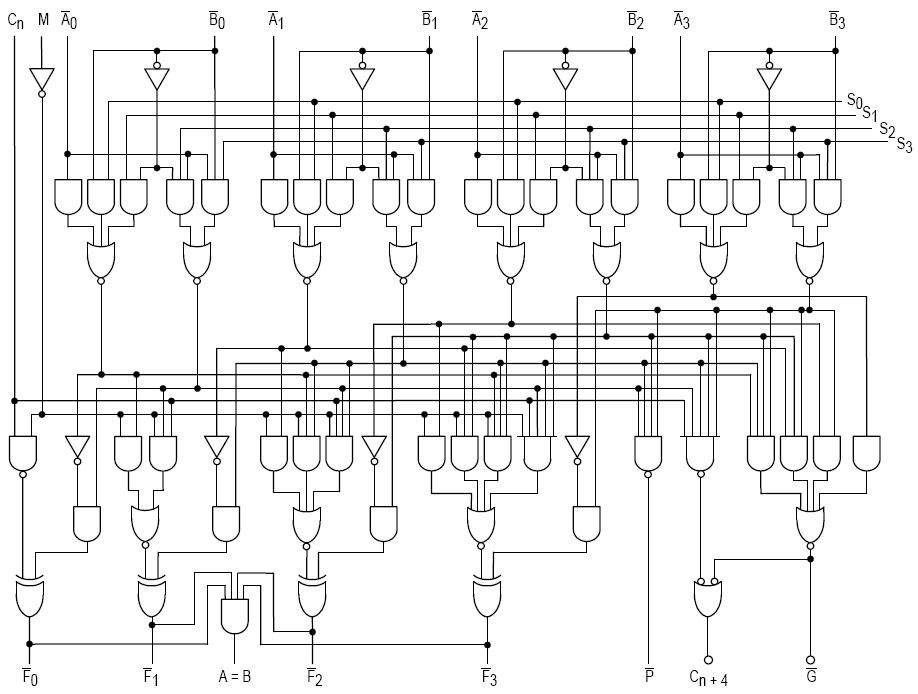

Implementation

An ALU is usually implemented either as a stand-aloneintegrated circuit

An integrated circuit or monolithic integrated circuit (also referred to as an IC, a chip, or a microchip) is a set of electronic circuits on one small flat piece (or "chip") of semiconductor material, usually silicon. Large numbers of tiny ...

(IC), such as the 74181, or as part of a more complex IC. In the latter case, an ALU is typically instantiated by synthesizing it from a description written in VHDL, Verilog or some other hardware description language. For example, the following VHDL code describes a very simple 8-bit

In computer architecture, 8-bit integers or other data units are those that are 8 bits wide (1 octet). Also, 8-bit central processing unit (CPU) and arithmetic logic unit (ALU) architectures are those that are based on registers or data buses ...

ALU:

History

MathematicianJohn von Neumann

John von Neumann (; hu, Neumann János Lajos, ; December 28, 1903 – February 8, 1957) was a Hungarian-American mathematician, physicist, computer scientist, engineer and polymath. He was regarded as having perhaps the widest c ...

proposed the ALU concept in 1945 in a report on the foundations for a new computer called the EDVAC.

The cost, size, and power consumption of electronic circuitry was relatively high throughout the infancy of the information age

The Information Age (also known as the Computer Age, Digital Age, Silicon Age, or New Media Age) is a historical period that began in the mid-20th century. It is characterized by a rapid shift from traditional industries, as established during ...

. Consequently, all serial computer

A serial computer is a computer typified by bit-serial architecture i.e., internally operating on one bit or digit for each clock cycle. Machines with serial main storage devices such as acoustic or magnetostrictive delay lines and rotating m ...

s and many early computers, such as the PDP-8, had a simple ALU that operated on one data bit at a time, although they often presented a wider word size to programmers. One of the earliest computers to have multiple discrete single-bit ALU circuits was the 1948 Whirlwind I, which employed sixteen such "math units" to enable it to operate on 16-bit words.

In 1967, Fairchild introduced the first ALU implemented as an integrated circuit, the Fairchild 3800, consisting of an eight-bit ALU with accumulator. Other integrated-circuit ALUs soon emerged, including four-bit ALUs such as the Am2901 and 74181. These devices were typically "bit slice

Bit slicing is a technique for constructing a processor from modules of processors of smaller bit width, for the purpose of increasing the word length; in theory to make an arbitrary ''n''-bit central processing unit (CPU). Each of these com ...

" capable, meaning they had "carry look ahead" signals that facilitated the use of multiple interconnected ALU chips to create an ALU with a wider word size. These devices quickly became popular and were widely used in bit-slice minicomputers.

Microprocessors began to appear in the early 1970s. Even though transistors had become smaller, there was often insufficient die space for a full-word-width ALU and, as a result, some early microprocessors employed a narrow ALU that required multiple cycles per machine language instruction. Examples of this includes the popular Zilog Z80, which performed eight-bit additions with a four-bit ALU. Over time, transistor geometries shrank further, following Moore's law, and it became feasible to build wider ALUs on microprocessors.

Modern integrated circuit (IC) transistors are orders of magnitude smaller than those of the early microprocessors, making it possible to fit highly complex ALUs on ICs. Today, many modern ALUs have wide word widths, and architectural enhancements such as barrel shifters and binary multipliers that allow them to perform, in a single clock cycle, operations that would have required multiple operations on earlier ALUs.

ALUs can be realized as mechanical

Mechanical may refer to:

Machine

* Machine (mechanical), a system of mechanisms that shape the actuator input to achieve a specific application of output forces and movement

* Mechanical calculator, a device used to perform the basic operations ...

, electro-mechanical or electronic circuits and, in recent years, research into biological ALUs has been carried out (e.g., actin

Actin is a family of globular multi-functional proteins that form microfilaments in the cytoskeleton, and the thin filaments in muscle fibrils. It is found in essentially all eukaryotic cells, where it may be present at a concentration of ov ...

-based).

See also

* Adder (electronics) *Address generation unit

The address generation unit (AGU), sometimes also called address computation unit (ACU), is an execution unit inside central processing units (CPUs) that calculates addresses used by the CPU to access main memory. By having address calculations ...

(AGU)

* Load–store unit

In computer engineering, a load–store unit (LSU) is a specialized execution unit responsible for executing all load and store instructions, generating virtual addresses of load and store operations and loading data from memory or storing it back ...

* Binary multiplier

* Execution unit

References

Further reading

* *External links

{{DEFAULTSORT:Arithmetic Logic Unit Digital circuits Central processing unit Computer arithmetic Computer architecture Arithmetic logic circuits