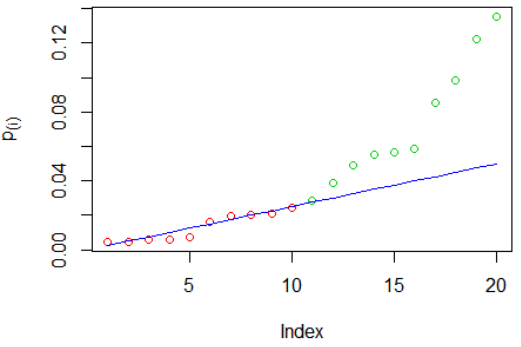

False Discovery Rate

In statistics, the false discovery rate (FDR) is a method of conceptualizing the rate of Type I errors in null hypothesis testing when conducting multiple comparisons. FDR-controlling procedures are designed to control the FDR, which is the expected proportion of "discoveries" (rejected null hypotheses) that are false (incorrect rejections of the null). Equivalently, the FDR is the expected ratio of the number of false positive classifications (false discoveries) to the total number of positive classifications (rejections of the null). The total number of rejections of the null includes both the false positives (FP) and the true positives (TP). Simply put, the FDR is the expected value of FP / (FP + TP), where the ratio is taken to be 0 when there are no rejections. FDR-controlling procedures provide less stringent control of Type I errors compared to family-wise error rate (FWER) controlling procedures (such as the Bonferroni correction), which control the probability of *at least one* Type I error. Thus, FDR-controlling procedures have greater power, at the co ...
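
As a minimal sketch, the Python below computes the ratio FP / (FP + TP) for a single experiment (its false discovery proportion; the FDR is the average of this quantity over repeated experiments). It assumes the true/false status of each null is known, which is only possible in a simulation. For comparison it also implements the Benjamini–Hochberg step-up procedure, a standard FDR-controlling procedure that the text above does not itself describe. The helper names `false_discovery_proportion` and `benjamini_hochberg` are hypothetical, and the p-values are made-up illustration data.

```python
from typing import List, Sequence


def false_discovery_proportion(rejected: Sequence[bool], null_true: Sequence[bool]) -> float:
    """Return FP / (FP + TP) for one experiment, or 0.0 when nothing is rejected."""
    fp = sum(1 for r, t in zip(rejected, null_true) if r and t)      # true null rejected
    tp = sum(1 for r, t in zip(rejected, null_true) if r and not t)  # false null rejected
    return fp / (fp + tp) if fp + tp else 0.0


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> List[bool]:
    """Benjamini-Hochberg step-up procedure at target FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p-value
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:  # compare p_(k) with k*q/m
            k_max = rank                   # keep the largest rank that passes
    rejected = [False] * m
    for idx in order[:k_max]:              # reject the k_max smallest p-values
        rejected[idx] = True
    return rejected


p = [0.001, 0.004, 0.012, 0.03, 0.2, 0.5]
null_true = [False, False, False, True, True, True]  # known only in a simulation
rej = benjamini_hochberg(p, q=0.1)
print(rej)                                         # [True, True, True, True, False, False]
print(false_discovery_proportion(rej, null_true))  # 0.25
```

Here one of the four discoveries is false, so this single run has a false discovery proportion of 0.25; the procedure's guarantee concerns the expectation, not any individual run.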

Statistics

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) *The Oxford Dictionary of Statistical Terms*, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ...

Bonferroni Adjustment

In statistics, the Bonferroni correction is a method to counteract the multiple comparisons problem. The method is named for its use of the Bonferroni inequalities. An extension of the method to confidence intervals was proposed by Olive Jean Dunn. Statistical hypothesis testing is based on rejecting the null hypothesis if the likelihood of the observed data under the null hypothesis is low. If multiple hypotheses are tested, the probability of observing a rare event increases, and therefore the likelihood of incorrectly rejecting a null hypothesis (i.e., making a Type I error) increases. The Bonferroni correction compensates for that increase by testing each individual hypothesis at a significance level of $\alpha/m$, where $\alpha$ is the desired overall alpha level and $m$ is the number of hypotheses. For example, if a trial is testing $m = 20$ hypotheses with a desired $\alpha = 0.05$, then the Bonferroni correction would test each individual hypothesis at $\alpha = 0.05/20 = 0.0025$ ...
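
The rule "test each hypothesis at $\alpha/m$" fits in a few lines. The sketch below assumes p-values are already available; the function name `bonferroni` and the 20 p-values are hypothetical, chosen to mirror the $m = 20$, $\alpha = 0.05$ example.

```python
from typing import List, Sequence, Tuple


def bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> Tuple[float, List[bool]]:
    """Test each of the m hypotheses at level alpha / m; return (threshold, reject flags)."""
    m = len(p_values)
    threshold = alpha / m  # per-hypothesis significance level
    return threshold, [p <= threshold for p in p_values]


# m = 20 hypotheses with an overall alpha of 0.05.
p_values = [0.001, 0.002, 0.003, 0.01] + [0.5] * 16
threshold, rejected = bonferroni(p_values, alpha=0.05)
print(threshold)                                 # approximately 0.0025 (floating point)
print([i for i, r in enumerate(rejected) if r])  # [0, 1]
```

A p-value of 0.003 or 0.01 would be "significant" at 0.05 on its own, but not after the correction.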

Adaptive

Adaptation, in biology, is the process or trait by which organisms or populations better match their environment. Adaptation may also refer to:

Arts

* Adaptation (arts), a transfer of a work of art from one medium to another
  * Film adaptation, a story from another work, adapted into a film
  * Literary adaptation, a story from a literary source, adapted into another work
  * Theatrical adaptation, a story from another work, adapted into a play
* *Adaptation* (film), a 2002 film by Spike Jonze
* "Adaptation" (*The Walking Dead*), a television episode
* *Adaptation*, a 2012 novel by Malinda Lo

Biology and medicine

* Adaptation (eye), the eye's adjustment to light
  * Chromatic adaptation, visual systems' adjustments to changes in illumination for preservation of colors
  * Prism adaptation, sensory-motor adjustments after the visual field has been artificially shifted
* Cellular adaptation, changes by cells/tissues in response to changed microenvironments
* High-altitude a ...

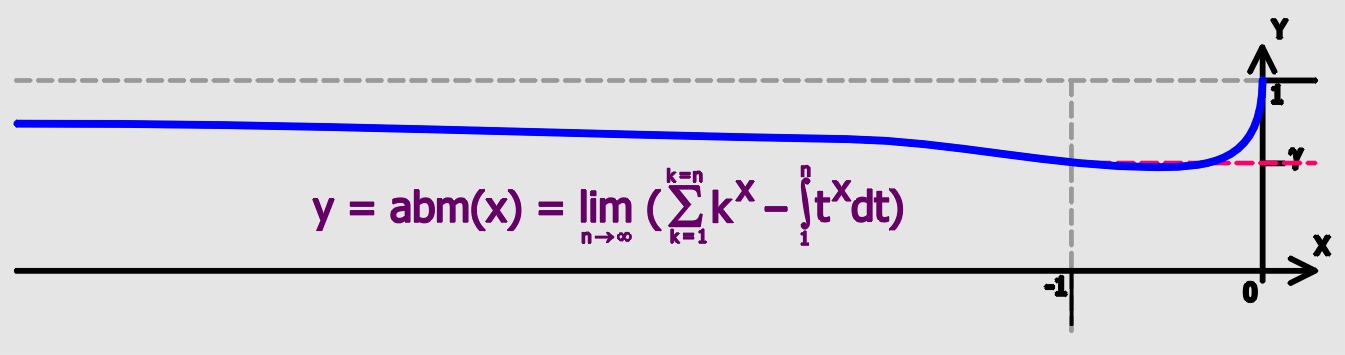

Euler–Mascheroni Constant

Euler's constant (sometimes also called the Euler–Mascheroni constant) is a mathematical constant usually denoted by the lowercase Greek letter gamma ($\gamma$). It is defined as the limiting difference between the harmonic series and the natural logarithm, denoted here by $\log$:

$$
\begin{aligned}
\gamma &= \lim_{n\to\infty}\left(-\log n + \sum_{k=1}^{n} \frac1k\right)\\
&= \int_1^\infty\left(-\frac1x + \frac1{\lfloor x\rfloor}\right)\,dx.
\end{aligned}
$$

Here, $\lfloor x\rfloor$ represents the floor function. The numerical value of Euler's constant, to 50 decimal places, is:

$$\gamma = 0.57721566490153286060651209008240243104215933593992\ldots$$

The constant first appeared in a 1734 paper by the Swiss mathematician Leonhard Euler, titled *De Progressionibus harmonicis observationes* (Eneström Index 43). Euler used two different notations for the constant. In 1790, the Italian mathematician Lorenzo Mascheroni used two further notations for it. The notation $\gamma$ appears nowhere in the writings of either Euler or Mascheroni, and was chosen at a later time perhaps because of the constant's connecti ...
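
The first line of the definition can be checked numerically by truncating the limit at a finite $n$. This is a minimal sketch; the function `euler_gamma_estimate` and the constant `EULER_GAMMA` (the leading digits of the value above) are illustrative names, not part of any library.

```python
import math

EULER_GAMMA = 0.5772156649015329  # leading digits of the value quoted above


def euler_gamma_estimate(n: int) -> float:
    """Truncate the limit definition at n: H_n - log n, with H_n = 1 + 1/2 + ... + 1/n."""
    harmonic = math.fsum(1.0 / k for k in range(1, n + 1))  # fsum limits rounding error
    return harmonic - math.log(n)


for n in (10, 1_000, 1_000_000):
    est = euler_gamma_estimate(n)
    print(n, est, est - EULER_GAMMA)  # the error shrinks roughly like 1/(2n)
```

Convergence is slow: the difference $H_n - \log n - \gamma$ behaves like $1/(2n)$, so each extra correct digit costs about ten times more terms.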

Harmonic Number

In mathematics, the $n$-th harmonic number is the sum of the reciprocals of the first $n$ natural numbers:

$$H_n = 1 + \frac12 + \frac13 + \cdots + \frac1n = \sum_{k=1}^{n} \frac1k.$$

Starting from $n = 1$, the sequence of harmonic numbers begins:

$$1, \frac32, \frac{11}{6}, \frac{25}{12}, \frac{137}{60}, \dots$$

Harmonic numbers are related to the harmonic mean in that the $n$-th harmonic number is also $n$ times the reciprocal of the harmonic mean of the first $n$ positive integers. Harmonic numbers have been studied since antiquity and are important in various branches of number theory. They are sometimes loosely termed harmonic series, are closely related to the Riemann zeta function, and appear in the expressions of various special functions. The harmonic numbers roughly approximate the natural logarithm function and thus the associated harmonic series grows without limit, albeit slowly. In 1737, Leonhard Euler used the divergence of the harmonic series to provide a new proof of the infinity of prime numbers. His work was extended into the co ...
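
Exact rational arithmetic reproduces the opening terms of the sequence and the harmonic-mean relation. The helper names `harmonic` and `harmonic_mean_first_n` are hypothetical; Python's standard `fractions.Fraction` keeps every value exact.

```python
from fractions import Fraction


def harmonic(n: int) -> Fraction:
    """Exact n-th harmonic number H_n = sum_{k=1}^{n} 1/k."""
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))


def harmonic_mean_first_n(n: int) -> Fraction:
    """Harmonic mean of 1, 2, ..., n, which equals n / H_n."""
    return n / harmonic(n)


print([str(harmonic(n)) for n in range(1, 6)])  # ['1', '3/2', '11/6', '25/12', '137/60']
print(harmonic_mean_first_n(3))                 # 18/11
```

Rearranging $\mathrm{HM}(1,\dots,n) = n / H_n$ gives $H_n = n \cdot (1/\mathrm{HM})$, which is the relation stated above.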

Statistical Independence

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...
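
The difference between pairwise and mutual independence shows up in a small textbook example, sketched here in Python: flip two fair coins and let A = "first flip is heads", B = "second flip is heads", C = "exactly one head". Every pair satisfies $P(X \cap Y) = P(X)P(Y)$, yet the triple fails the product rule. All names in the code are illustrative.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin flips; each of the 4 outcomes has probability 1/4.
OUTCOMES = list(product("HT", repeat=2))


def prob(event) -> Fraction:
    """Probability of an event, given as a predicate over outcomes."""
    return Fraction(sum(1 for o in OUTCOMES if event(o)), len(OUTCOMES))


def both(*events):
    """Intersection of events: every predicate must hold."""
    return lambda o: all(e(o) for e in events)


def first_heads(o):
    return o[0] == "H"


def second_heads(o):
    return o[1] == "H"


def exactly_one_head(o):
    return first_heads(o) != second_heads(o)


A, B, C = first_heads, second_heads, exactly_one_head
pairwise = all(prob(both(x, y)) == prob(x) * prob(y) for x, y in [(A, B), (A, C), (B, C)])
mutual = pairwise and prob(both(A, B, C)) == prob(A) * prob(B) * prob(C)
print(pairwise, mutual)  # True False
```

All three events cannot happen together (two heads is not "exactly one head"), so $P(A \cap B \cap C) = 0 \neq 1/8$.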

Classification Of Multiple Hypothesis Tests

In statistics, the multiple comparisons, multiplicity, or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. The more inferences are made, the more likely erroneous inferences become. Several statistical techniques have been developed to address that problem, typically by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made.

The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Israel.

Multiple comparisons arise when a statistical analysis involves multiple simultaneous statistical tests, each of which has a poten ...
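
To make "the more inferences are made, the more likely erroneous inferences become" concrete, the sketch below assumes the simplest case: $m$ independent tests, every null hypothesis true, each tested at level $\alpha$. Then $P(\text{at least one false rejection}) = 1 - (1 - \alpha)^m$. A Monte Carlo check relies on the fact that a continuous test's p-value is uniform on $[0, 1]$ under a true null. The function names are hypothetical.

```python
import random


def familywise_error_prob(m: int, alpha: float = 0.05) -> float:
    """P(at least one false rejection) for m independent tests of true nulls at level alpha."""
    return 1.0 - (1.0 - alpha) ** m


def simulate(m: int, alpha: float = 0.05, trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate, drawing each null p-value from Uniform(0, 1)."""
    rng = random.Random(seed)
    hits = sum(any(rng.random() <= alpha for _ in range(m)) for _ in range(trials))
    return hits / trials


for m in (1, 5, 20, 100):
    print(m, round(familywise_error_prob(m), 4), simulate(m))
```

With $m = 20$ and $\alpha = 0.05$ the formula gives about 0.64, which is why uncorrected testing of many hypotheses almost guarantees some spurious "findings".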

Division By Zero

In mathematics, division by zero is division where the divisor (denominator) is zero. Such a division can be formally expressed as $\tfrac{a}{0}$, where $a$ is the dividend (numerator). In ordinary arithmetic, the expression has no meaning, as there is no number that, when multiplied by $0$, gives $a$ (assuming $a \neq 0$); thus, division by zero is undefined. Since any number multiplied by zero is zero, the expression $\tfrac{0}{0}$ is also undefined; when it is the form of a limit, it is an indeterminate form. Historically, one of the earliest recorded references to the mathematical impossibility of assigning a value to $\tfrac{a}{0}$ is contained in Anglo-Irish philosopher George Berkeley's criticism of infinitesimal calculus in 1734 in *The Analyst* ("ghosts of departed quantities"). There are mathematical structures in which $\tfrac{a}{0}$ is defined for some $a$, such as the Riemann sphere (a model of the extended complex plane) and the projectively extended real line; however, such structures do not satisf ...
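
Why $\tfrac{0}{0}$ is called an indeterminate form can be seen numerically: three quotients that all become $0/0$ at $x = 0$ approach three different limits. This is an illustrative sketch; the dictionary `quotients` is a hypothetical name, and the final lines show that Python's ordinary division simply refuses to divide by zero.

```python
import math

# Each quotient f(x)/g(x) has f(0) = g(0) = 0, so plugging in x = 0 gives the form 0/0.
quotients = {
    "sin(x)/x": lambda x: math.sin(x) / x,  # approaches 1
    "x**2/x": lambda x: x**2 / x,          # approaches 0
    "2x/x": lambda x: (2 * x) / x,         # approaches 2
}

for name, q in quotients.items():
    # Evaluate near, but never at, x = 0.
    print(name, [q(10.0 ** -k) for k in (1, 3, 6)])

try:
    _ = 1 / 0  # ordinary division by zero has no value; Python raises an error
except ZeroDivisionError as exc:
    print("1/0 raised ZeroDivisionError:", exc)
```

Because the form alone does not determine the limit, no single value can be assigned to $\tfrac{0}{0}$.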

Expected Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable $X$ is often denoted by $\operatorname{E}(X)$, $\operatorname{E}[X]$, or $\operatorname{E}X$, with $\operatorname{E}$ also often stylized as $E$ or $\mathbb{E}$.

The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes *in a fair way* between two players, who have to ...
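
For a finite distribution, the weighted-average definition and the "mean of many independent outcomes" intuition can be compared directly. The sketch below uses a fair six-sided die as an assumed example; `expected_value` is a hypothetical helper, and the simulation is seeded so it is reproducible.

```python
import random
from fractions import Fraction


def expected_value(distribution) -> Fraction:
    """Weighted average sum(x * p) of a finite distribution given as (outcome, probability) pairs."""
    return sum((Fraction(x) * p for x, p in distribution), Fraction(0))


die = [(face, Fraction(1, 6)) for face in range(1, 7)]  # fair six-sided die
print(expected_value(die))  # 7/2

# The arithmetic mean of many independent rolls should land close to 3.5.
rng = random.Random(0)
rolls = [rng.randint(1, 6) for _ in range(100_000)]
print(sum(rolls) / len(rolls))
```

The exact value 7/2 is never an outcome of a single roll, which illustrates that the expectation is an average, not a most likely value.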

Simes Procedure

SIMes (or H2IMes) is an *N*-heterocyclic carbene. It is a white solid that dissolves in organic solvents. The compound is used as a ligand in organometallic chemistry. It is structurally related to the more common ligand IMes but with a saturated backbone (the S of SIMes indicates a saturated backbone). It is slightly more flexible and is a component of the second-generation Grubbs catalyst (Grubbs II). It is prepared by alkylation of trimethylaniline by dibromoethane followed by ring closure and dehydrohalogenation.