|

Overfitting

mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An under-fitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Under-fitting would occur, for example, when fitting a linear model to non-linear data. Such a model will tend to have poor predictive performance. The possibility of over-fitting exists because the criterion used for selecting the model is no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Overfitting

mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An under-fitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Under-fitting would occur, for example, when fitting a linear model to non-linear data. Such a model will tend to have poor predictive performance. The possibility of over-fitting exists because the criterion used for selecting the model is no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Early Stopping

In machine learning, early stopping is a form of regularization used to avoid overfitting when training a learner with an iterative method, such as gradient descent. Such methods update the learner so as to make it better fit the training data with each iteration. Up to a point, this improves the learner's performance on data outside of the training set. Past that point, however, improving the learner's fit to the training data comes at the expense of increased generalization error. Early stopping rules provide guidance as to how many iterations can be run before the learner begins to over-fit. Early stopping rules have been employed in many different machine learning methods, with varying amounts of theoretical foundation. Background This section presents some of the basic machine-learning concepts required for a description of early stopping methods. Overfitting Machine learning algorithms train a model based on a finite set of training data. During this training, the model ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bias–variance Tradeoff

In statistics and machine learning, the bias–variance tradeoff is the property of a model that the variance of the parameter estimated across samples can be reduced by increasing the bias in the estimated parameters. The bias–variance dilemma or bias–variance problem is the conflict in trying to simultaneously minimize these two sources of error that prevent supervised learning algorithms from generalizing beyond their training set: * The ''bias'' error is an error from erroneous assumptions in the learning algorithm. High bias can cause an algorithm to miss the relevant relations between features and target outputs (underfitting). * The ''variance'' is an error from sensitivity to small fluctuations in the training set. High variance may result from an algorithm modeling the random noise in the training data (overfitting). The bias–variance decomposition is a way of analyzing a learning algorithm's expected generalization error with respect to a particular proble ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Training Data

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data. These input data used to build the model are usually divided in multiple data sets. In particular, three data sets are commonly used in different stages of the creation of the model: training, validation and test sets. The model is initially fit on a training data set, which is a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model. The model (e.g. a naive Bayes classifier) is trained on the training data set using a supervised learning method, for example using optimization methods such as gradient descent or stochastic gradient descent. In practice, the training data set often consists of pairs of an input vector (or scalar) and the corresponding ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Regularization (mathematics)

In mathematics, statistics, finance, computer science, particularly in machine learning and inverse problems, regularization is a process that changes the result answer to be "simpler". It is often used to obtain results for ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, following delineation is particularly helpful: * Explicit regularization is regularization whenever one explicitly adds a term to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique. * Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularization is essentially ubiquitous in modern machine learning app ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cross-validation (statistics)

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. Cross-validation is a resampling method that uses different portions of the data to test and train a model on different iterations. It is mainly used in settings where the goal is prediction, and one wants to estimate how accurately a predictive model will perform in practice. In a prediction problem, a model is usually given a dataset of ''known data'' on which training is run (''training dataset''), and a dataset of ''unknown data'' (or ''first seen'' data) against which the model is tested (called the validation dataset or ''testing set''). The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias and to give an insight on ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

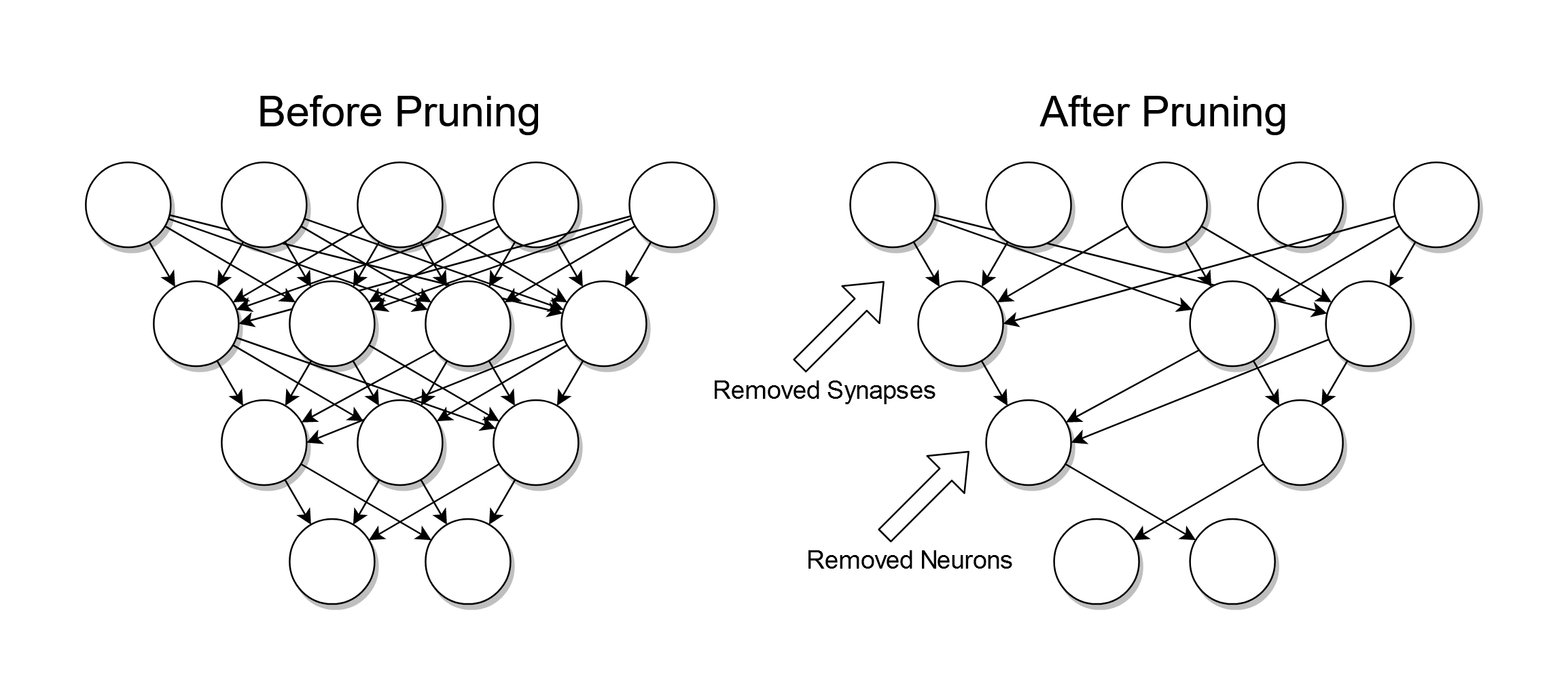

Pruning (algorithm)

Pruning is a data compression technique in machine learning and search algorithms that reduces the size of decision trees by removing sections of the tree that are non-critical and redundant to classify instances. Pruning reduces the complexity of the final classifier, and hence improves predictive accuracy by the reduction of overfitting. One of the questions that arises in a decision tree algorithm is the optimal size of the final tree. A tree that is too large risks overfitting the training data and poorly generalizing to new samples. A small tree might not capture important structural information about the sample space. However, it is hard to tell when a tree algorithm should stop because it is impossible to tell if the addition of a single extra node will dramatically decrease error. This problem is known as the horizon effect. A common strategy is to grow the tree until each node contains a small number of instances then use pruning to remove nodes that do not provide ad ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

One In Ten Rule

In statistics, the one in ten rule is a rule of thumb for how many predictor parameters can be estimated from data when doing regression analysis (in particular proportional hazards models in survival analysis and logistic regression) while keeping the risk of overfitting low. The rule states that one predictive variable can be studied for every ten events. For logistic regression the number of events is given by the size of the smallest of the outcome categories, and for survival analysis it is given by the number of uncensored events. For example, if a sample of 200 patients is studied and 20 patients die during the study (so that 180 patients survive), the one in ten rule implies that two pre-specified predictors can reliably be fitted to the total data. Similarly, if 100 patients die during the study (so that 100 patients survive), ten pre-specified predictors can be fitted reliably. If more are fitted, the rule implies that overfitting is likely and the results will not pr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dropout (neural Networks)

Dilution and dropout (also called DropConnect) are regularization techniques for reducing overfitting in artificial neural networks by preventing complex co-adaptations on training data. They are an efficient way of performing model averaging with neural networks. ''Dilution'' refers to thinning weights, while ''dropout'' refers to randomly "dropping out", or omitting, units (both hidden and visible) during the training process of a neural network. Both trigger the same type of regularization. Types and uses Dilution is usually split in ''weak dilution'' and ''strong dilution''. Weak dilution describes the process in which the finite fraction of removed connections is small, and strong dilution refers to when this fraction is large. There is no clear distinction on where the limit between strong and weak dilution is, and often the distinction is dependent on the precedent of a specific use-case and has implications for how to solve for exact solutions. Sometimes dilution is use ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linear Regression

In statistics, linear regression is a linear approach for modelling the relationship between a scalar response and one or more explanatory variables (also known as dependent and independent variables). The case of one explanatory variable is called '' simple linear regression''; for more than one, the process is called multiple linear regression. This term is distinct from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable. In linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, the conditional mean of the response given the values of the explanatory variables (or predictors) is assumed to be an affine function of those values; less commonly, the conditional median or some other quantile is used. Like all forms of regression analysis, linear regression focuse ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Logistic Regression

In statistics, the logistic model (or logit model) is a statistical model that models the probability of an event taking place by having the log-odds for the event be a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) is estimating the parameters of a logistic model (the coefficients in the linear combination). Formally, in binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, hence the name. The unit of measurement for the log-odds ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Principle Of Parsimony

Occam's razor, Ockham's razor, or Ocham's razor ( la, novacula Occami), also known as the principle of parsimony or the law of parsimony ( la, lex parsimoniae), is the problem-solving principle that "entities should not be multiplied beyond necessity". It is generally understood in the sense that with competing theories or explanations, the simpler one, for example a model with fewer parameters, is to be preferred. The idea is frequently attributed to English Franciscan friar William of Ockham (), a scholastic philosopher and theologian, although he never used these exact words. This philosophical razor advocates that when presented with competing hypotheses about the same prediction, one should select the solution with the fewest assumptions, and that this is not meant to be a way of choosing between hypotheses that make different predictions. Similarly, in science, Occam's razor is used as an abductive heuristic in the development of theoretical models rather than as a rigorou ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |