
LIMDEP

LIMDEP is an econometric and statistical software package with a variety of estimation tools. In addition to the core econometric tools for analysis of cross sections and time series, LIMDEP supports methods for panel data analysis, frontier and efficiency estimation, and discrete choice modeling. The package also provides a programming language that allows the user to specify, estimate, and analyze models that are not contained in the built-in menus of model forms. History: LIMDEP was first developed in the early 1980s. Econometric Software, Inc. was founded in 1985 by William H. Greene. The program was initially developed as an easy-to-use tobit estimator, hence the name: LIMited DEPendent variable models. Econometric Software has continually expanded since the early 1980s and currently has locations in the United States and Australia. The ongoing development of LIMDEP has been based partly on interaction and feedback from users and from the collaboration of many rese ...

NLOGIT

NLOGIT is an extension of the econometric and statistical software package LIMDEP. In addition to the estimation tools in LIMDEP, NLOGIT provides programs for estimation, model simulation, and analysis of multinomial choice data, such as brand choice and transportation mode, and of survey and market data in which consumers choose among a set of competing alternatives. In addition to the economic sciences, NLOGIT has applications in biostatistics, noneconomic social sciences, physical sciences, and health outcomes research. History: Econometric Software, Inc. was founded in the early 1980s by William H. Greene. NLOGIT was released in 1996 with the development of the FIML nested logit estimator, originally an extension of the multinomial logit model in LIMDEP. The program derives its name from the Nested LOGIT model. With the addition of the multinomial probit model and the mixed logit model, among several others, NLOGIT became a self-standing superset of LIMDEP. Models: NLOGIT is a ...

William Greene (economist)

William H. Greene (born January 16, 1951) is an American economist. He is the Robert Stansky Professor of Economics and Statistics at the Stern School of Business at New York University. In 1972, Greene graduated with a Bachelor of Science in business administration from Ohio State University. He also earned a master's degree (1974) and a Ph.D. (1976) in econometrics from the University of Wisconsin–Madison. Before accepting his position at NYU, Greene worked as a consultant for the Civil Aeronautics Board in Washington, D.C. Greene is the author of a popular graduate-level econometrics textbook, Econometric Analysis, which has reached its 8th edition. He is the founding editor-in-chief of Foundations and Trends in Econometrics, a peer-reviewed scientific journal that publishes long survey and tutorial articles in the field of econometrics. It was established in 2005 and is published by Now Publishers. ...

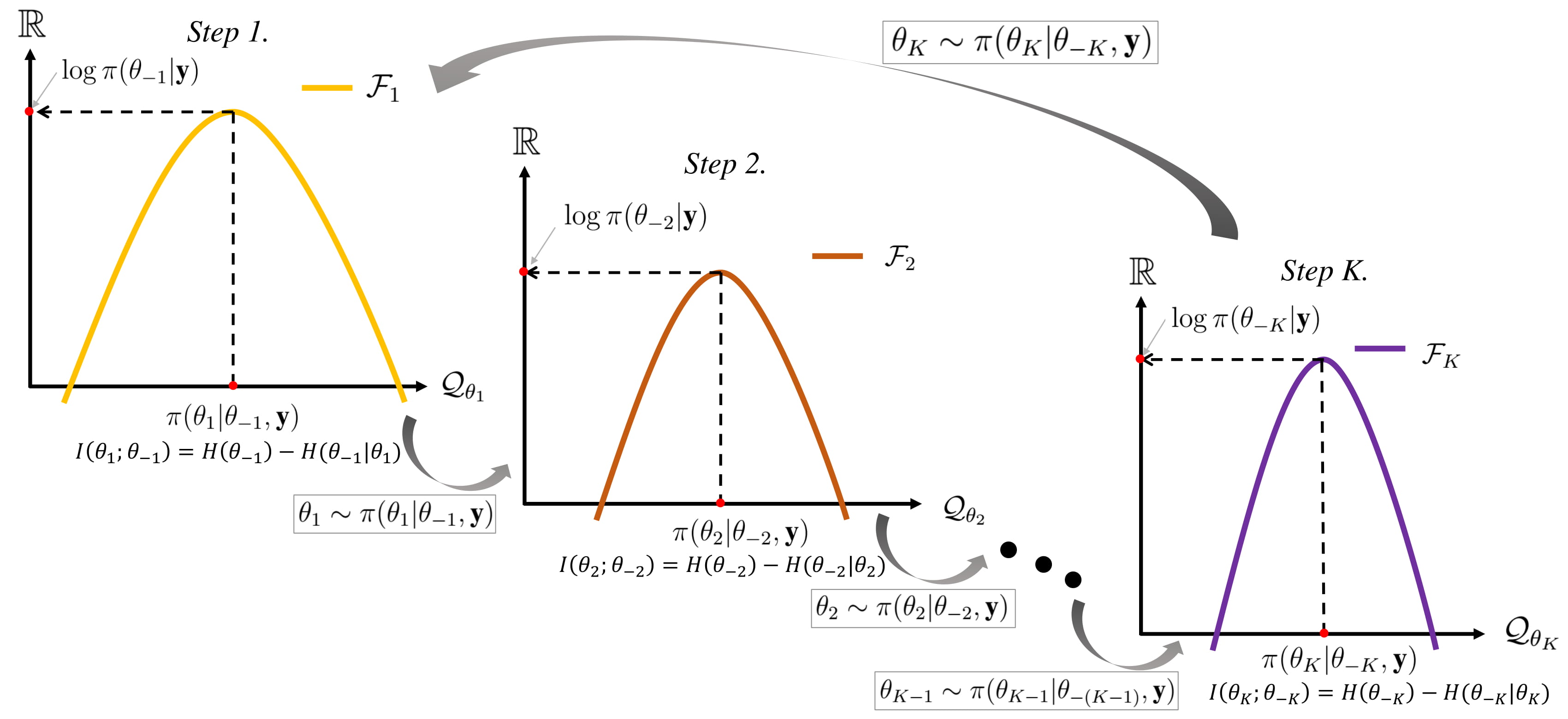

Gibbs Sampling

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for obtaining a sequence of observations that are approximately drawn from a specified multivariate probability distribution, when direct sampling is difficult. This sequence can be used to approximate the joint distribution (e.g., to generate a histogram of the distribution); to approximate the marginal distribution of one of the variables, or of some subset of the variables (for example, the unknown parameters or latent variables); or to compute an integral (such as the expected value of one of the variables). Typically, some of the variables correspond to observations whose values are known, and hence do not need to be sampled. Gibbs sampling is commonly used as a means of statistical inference, especially Bayesian inference. It is a randomized algorithm (i.e. an algorithm that makes use of random numbers), and is an alternative to deterministic algorithms for statistical inferenc ...
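As a minimal sketch of the idea, the following Python code runs a Gibbs sampler on a standard bivariate normal distribution with correlation `rho`, a textbook case in which each conditional distribution is a univariate normal. The target distribution, the function names, the burn-in length, and the seed are all illustrative assumptions, not part of the summary above.

```python
import random

def gibbs_bivariate_normal(rho, n_samples, burn_in=500, seed=0):
    """Sample a standard bivariate normal with correlation rho by
    alternately drawing each coordinate from its conditional distribution."""
    rng = random.Random(seed)
    cond_sd = (1.0 - rho ** 2) ** 0.5    # sd of x given y, and of y given x
    x, y = 0.0, 0.0                      # arbitrary starting point
    draws = []
    for step in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_sd)  # x | y ~ N(rho * y, 1 - rho^2)
        y = rng.gauss(rho * x, cond_sd)  # y | x ~ N(rho * x, 1 - rho^2)
        if step >= burn_in:              # discard the warm-up draws
            draws.append((x, y))
    return draws

def sample_correlation(xs, ys):
    """Plain Pearson correlation, written out to avoid version-specific helpers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / (sxx * syy) ** 0.5

draws = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
xs = [d[0] for d in draws]
ys = [d[1] for d in draws]
mean_x = sum(xs) / len(xs)               # approximates the marginal mean of x
corr_xy = sample_correlation(xs, ys)     # approximates rho
```

The retained draws approximate the joint distribution, so the sample mean of `xs` estimates the marginal expected value and the sample correlation estimates `rho`. Successive draws are correlated, so the effective sample size is smaller than `n_samples`.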

Bootstrapping (statistics)

Bootstrapping is any test or metric that uses random sampling with replacement (e.g. mimicking the sampling process), and falls under the broader class of resampling methods. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distribution is the ...
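The resampling step can be sketched in a few lines of Python. The example below estimates the standard error of a sample mean by resampling the data with replacement; the data values, the function name `bootstrap_se`, the number of resamples, and the seed are hypothetical choices made for illustration.

```python
import random
import statistics

def bootstrap_se(data, statistic, n_resamples=2000, seed=0):
    """Estimate the standard error of `statistic` by recomputing it on
    resamples drawn with replacement from `data`."""
    rng = random.Random(seed)
    n = len(data)
    replicates = []
    for _ in range(n_resamples):
        # Each resample has the same size as the original sample.
        resample = [data[rng.randrange(n)] for _ in range(n)]
        replicates.append(statistic(resample))
    return statistics.stdev(replicates)

# Hypothetical measurements, used only to exercise the function.
sample = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7, 3.9, 2.5]

se_mean = bootstrap_se(sample, statistics.mean)
# Textbook formula for comparison: s / sqrt(n).
analytic_se = statistics.stdev(sample) / len(sample) ** 0.5
```

For the mean, the bootstrap estimate should land close to the analytic value; the same function applies unchanged to statistics such as the median, for which no simple formula is available.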

Matrix (mathematics)

In mathematics, a matrix (plural matrices) is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object. For example, $\begin{bmatrix} 1 & 9 & -13 \\ 20 & 5 & -6 \end{bmatrix}$ is a matrix with two rows and three columns. This is often referred to as a "two by three matrix", a "$2 \times 3$ matrix", or a matrix of dimension $2 \times 3$. Without further specifications, matrices represent linear maps, and allow explicit computations in linear algebra. Therefore, the study of matrices is a large part of linear algebra, and most properties and operations of abstract linear algebra can be expressed in terms of matrices. For example, matrix multiplication represents composition of linear maps. Not all matrices are related to linear algebra. This is, in particular, the case in graph theory, of incidence matrices, and adjacency matrices. This article focuses on matrices related to linear algebra, and, un ...
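The statement that matrix multiplication represents composition of linear maps can be checked numerically. The sketch below uses the two-by-three matrix from the summary as `A`; the matrix `B`, the vector `v`, and the helper names are assumptions introduced for the example.

```python
def matmul(a, b):
    """Multiply matrix a (m x n) by matrix b (n x p), both lists of rows."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [[sum(a[i][k] * b[k][j] for k in range(inner))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def apply_map(m, v):
    """Apply the linear map represented by matrix m to vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

A = [[1, 9, -13],
     [20, 5, -6]]      # the 2 x 3 matrix from the text
B = [[1, 0],
     [2, 1],
     [0, 3]]           # hypothetical 3 x 2 matrix
v = [1, -1]            # hypothetical input vector

composed = apply_map(A, apply_map(B, v))   # apply B first, then A
product = apply_map(matmul(A, B), v)       # apply the single matrix AB
```

Both routes give `[49, 43]`: applying `B` and then `A` to `v` is the same as applying the product `AB` once.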

Random Number Generation

Random number generation is a process by which, often by means of a random number generator (RNG), a sequence of numbers or symbols that cannot be reasonably predicted better than by random chance is generated. This means that the particular outcome sequence will contain some patterns detectable in hindsight but unpredictable to foresight. True random number generators can be hardware random-number generators (HRNGs) that generate random numbers, wherein each generation is a function of the current value of a physical environment's attribute that is constantly changing in a manner that is practically impossible to model. This is in contrast to so-called "random number generations" done by pseudorandom number generators (PRNGs), which generate numbers that only look random but are in fact predetermined; these generations can be reproduced simply by knowing the state of the PRNG. Various applications of randomness have led to the development of several different met ...
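The reproducibility of a PRNG from its state can be illustrated with Python's standard `random` module, whose `getstate` and `setstate` methods save and restore the generator's internal state. The seed value and variable names below are arbitrary choices for the sketch.

```python
import random

rng = random.Random(12345)      # arbitrary seed; any value works
rng.random()                    # advance the generator once

state = rng.getstate()          # snapshot the internal state
first_run = [rng.random() for _ in range(5)]

rng.setstate(state)             # restore the snapshot
replay = [rng.random() for _ in range(5)]

same_sequence = (first_run == replay)
```

Because the output is a deterministic function of the state, `replay` matches `first_run` exactly. This is useful for reproducible simulations and is also the reason a general-purpose PRNG of this kind is unsuitable where unpredictability is required.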

Panel Data

In statistics and econometrics, panel data and longitudinal data are both multi-dimensional data involving measurements over time. Panel data is a subset of longitudinal data where observations are for the same subjects each time. Time series and cross-sectional data can be thought of as special cases of panel data that are in one dimension only (one panel member or individual for the former, one time point for the latter). A study that uses panel data is called a longitudinal study or panel study. Example: In the multiple response permutation procedure (MRPP) example above, two datasets with a panel structure are shown and the objective is to test whether there is a significant difference between people in the sample data. Individual characteristics (income, age, sex) are collected for different persons and different years. In the first dataset, two persons (1, 2) are observed every year for three years (2016, 2017, 2018). In the second dataset, three persons (1, 2, 3) are obse ...
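The structure of the first dataset, in which every person is observed in every year, can be sketched as a set of (person, year) pairs. The code below records only that index, since the measured values are not given here; the function name `is_balanced` and the incomplete variant are hypothetical additions for illustration.

```python
from itertools import product

def is_balanced(observations):
    """Return True if every person appears in every observed year."""
    persons = {person for person, _ in observations}
    years = {year for _, year in observations}
    return set(observations) == set(product(persons, years))

# Index of the first dataset described above: persons 1 and 2, 2016 to 2018.
first_dataset = [(person, year)
                 for person in (1, 2)
                 for year in (2016, 2017, 2018)]

# Hypothetical variant in which person 2 is missing the 2018 observation.
incomplete = first_dataset[:-1]

balanced_first = is_balanced(first_dataset)
balanced_incomplete = is_balanced(incomplete)
```

A panel in which every subject is observed in every period is usually called balanced; dropping a single (person, year) pair makes it unbalanced.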

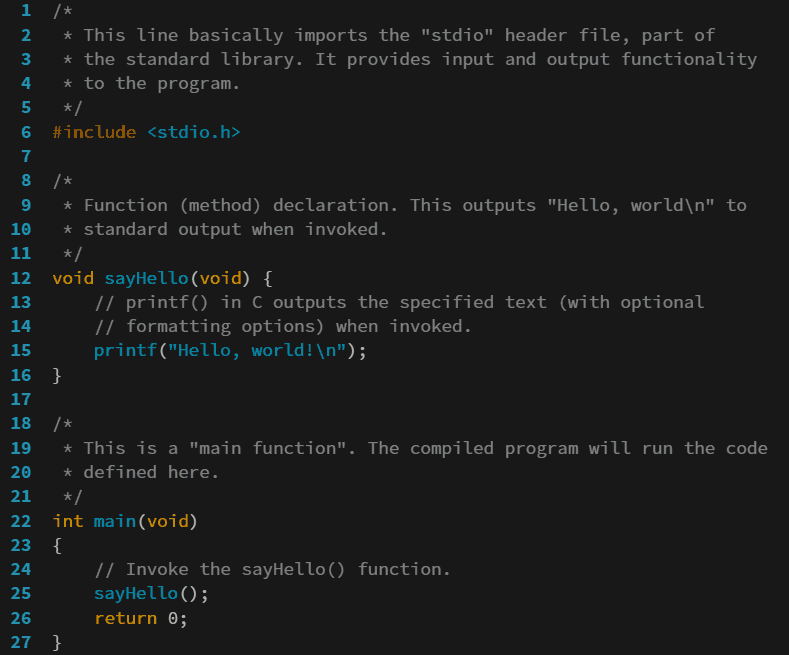

Programming Language

A programming language is a system of notation for writing computer programs. Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard), while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common. Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming lan ...

Data Transformation (statistics)

In statistics, data transformation is the application of a deterministic mathematical function to each point in a data set; that is, each data point $z_i$ is replaced with the transformed value $y_i = f(z_i)$, where $f$ is a function. Transforms are usually applied so that the data appear to more closely meet the assumptions of a statistical inference procedure that is to be applied, or to improve the interpretability or appearance of graphs. Nearly always, the function that is used to transform the data is invertible, and generally is continuous. The transformation is usually applied to a collection of comparable measurements. For example, if we are working with data on people's incomes in some currency unit, it would be common to transform each person's income value by the logarithm function. Motivation: Guidance for how data should be transformed, or whether a transformation should be applied at all, should come from the particular statistical analysis to be ...
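The income example can be sketched in Python, assuming the natural logarithm as the function f. The income figures and variable names are hypothetical and serve only to show the mechanics.

```python
import math

# Hypothetical incomes in some currency unit, including one large value.
incomes = [18_000, 25_000, 40_000, 62_000, 250_000]

# Apply y_i = f(z_i) with f = natural logarithm to every data point.
log_incomes = [math.log(z) for z in incomes]

# The logarithm is invertible: exponentiating recovers the original data.
recovered = [math.exp(y) for y in log_incomes]

# Ratio of largest to smallest value, before and after the transformation.
raw_ratio = max(incomes) / min(incomes)
log_ratio = max(log_incomes) / min(log_incomes)
```

The transformation preserves the ordering of the data points while compressing the long right tail, so the largest income no longer dominates the scale.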