
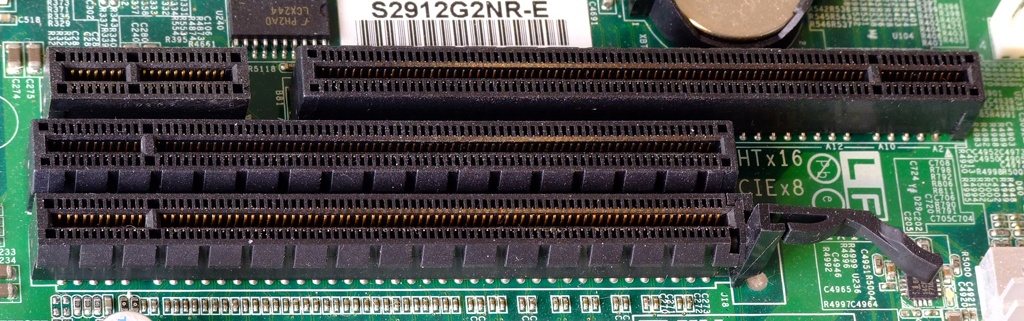

HyperTransport

HyperTransport (HT), formerly known as Lightning Data Transport, is a technology for interconnection of computer processors. It is a bidirectional serial/parallel, high-bandwidth, low-latency point-to-point link that was introduced on April 2, 2001. The HyperTransport Consortium is in charge of promoting and developing HyperTransport technology. HyperTransport is best known as the system bus architecture of AMD central processing units (CPUs) from Athlon 64 through AMD FX and the associated motherboard chipsets. HyperTransport has also been used by IBM and Apple for the Power Mac G5 machines, as well as a number of modern MIPS systems. The current specification, HTX 3.1, remained competitive for 2014 high-speed (2666 and 3200 MT/s, or about 10.4 GB/s and 12.8 GB/s) DDR4 RAM and slower (around 1 GB/s, similar to high-end PCIe SSDs) ULLtraDIMM flash RAM technology: a wider range of RAM speeds on a common ...

Athlon 64

The Athlon 64 is an eighth-generation, AMD64-architecture microprocessor produced by Advanced Micro Devices (AMD), released on September 23, 2003. It is the third processor to bear the name *Athlon*, and the immediate successor to the Athlon XP. The second processor (after the Opteron) to implement the AMD64 architecture and the first 64-bit processor targeted at the average consumer, it was AMD's primary consumer CPU, and primarily competed with Intel's Pentium 4, especially the *Prescott* and *Cedar Mill* core revisions. It is AMD's first K8, eighth-generation processor core for desktop and mobile computers. Despite being natively 64-bit, the AMD64 architecture is backward-compatible with 32-bit x86 instructions. Athlon 64s have been produced for Socket 754, Socket 939, Socket 940, and Socket AM2. The line was succeeded by the dual-core Athlon 64 X2 and Athlon X2 lines. Background: The Athlon 64 was originally codenamed *ClawHammer* by AMD, and was referred to as such in ...

Hyper-Threading

Hyper-threading (officially called Hyper-Threading Technology or HT Technology and abbreviated as HTT or HT) is Intel's proprietary simultaneous multithreading (SMT) implementation used to improve parallelization of computations (doing multiple tasks at once) performed on x86 microprocessors. It was introduced on Xeon server processors in February 2002 and on Pentium 4 desktop processors in November 2002. Since then, Intel has included this technology in Itanium, Atom, and Core 'i' Series CPUs, among others. For each processor core that is physically present, the operating system addresses two virtual (logical) cores and shares the workload between them when possible. The main function of hyper-threading is to increase the number of independent instructions in the pipeline; it takes advantage of superscalar architecture, in which multiple instructions operate on separate data in parallel. With HTT, one physical core appears as two processors to the operating system, ...

MIPS Architecture

MIPS (Microprocessor without Interlocked Pipelined Stages) is a family of reduced instruction set computer (RISC) instruction set architectures (ISA) developed by MIPS Computer Systems, now MIPS Technologies, based in the United States (Price, Charles, *MIPS IV Instruction Set*, Revision 3.2, MIPS Technologies, Inc., September 1995). There are multiple versions of MIPS, including MIPS I, II, III, IV, and V, as well as five releases of MIPS32/64 (for 32- and 64-bit implementations, respectively). The early MIPS architectures were 32-bit; 64-bit versions were developed later. As of April 2017, the current version of MIPS is MIPS32/64 Release 6. MIPS32/64 primarily differs from MIPS I–V by defining the privileged kernel mode System Control Coprocessor in addition to the user mode architecture. The MIPS architecture has several optional extensions: MIPS-3D, which is a simple set of floating-point SIMD instructions dedicated to common 3D tasks; MDMX (MaDMaX), which is a more extens ...

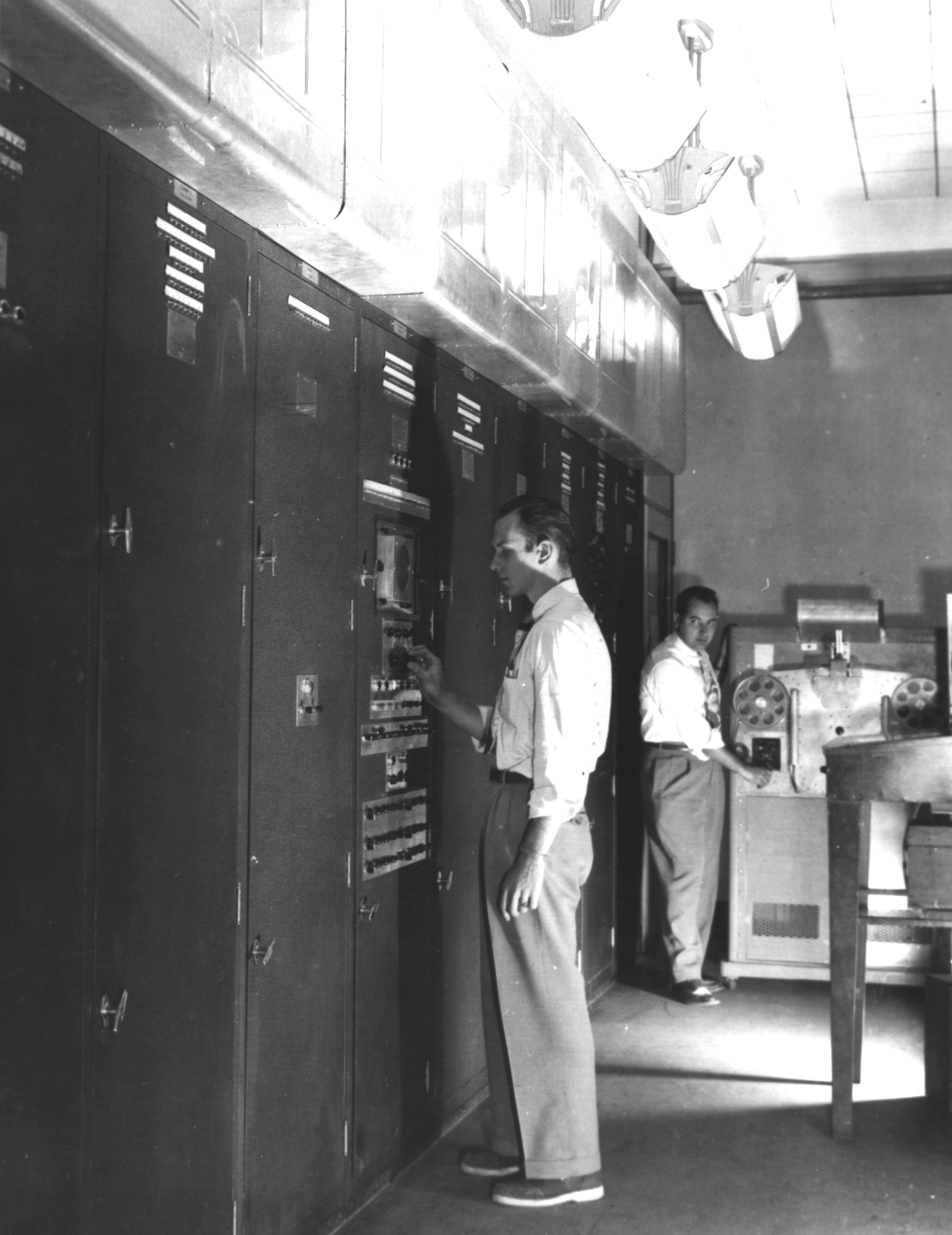

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic and logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the fetching (from memory), decoding and execution (of instruc ...

8-bit

In computer architecture, 8-bit integers or other data units are those that are 8 bits wide (1 octet). Also, 8-bit central processing unit (CPU) and arithmetic logic unit (ALU) architectures are those that are based on registers or data buses of that size. Memory addresses (and thus address buses) for 8-bit CPUs are generally larger than 8 bits, usually 16 bits. 8-bit microcomputers are microcomputers that use 8-bit microprocessors. The term '8-bit' is also applied to the character sets that could be used on computers with 8-bit bytes, the best known being various forms of extended ASCII, including the ISO/IEC 8859 series of national character sets, especially Latin 1 for English and Western European languages. The IBM System/360 introduced byte-addressable memory with 8-bit bytes, as opposed to bit-addressable or decimal digit-addressable or word-addressable memory, although its general-purpose registers were 32 bits wide, and addresses were contained in the lower 24 bit ...

16-bit

16-bit microcomputers are microcomputers that use 16-bit microprocessors. A 16-bit register can store 2¹⁶ different values. The range of integer values that can be stored in 16 bits depends on the integer representation used. With the two most common representations, the range is 0 through 65,535 (2¹⁶ − 1) for representation as an (unsigned) binary number, and −32,768 (−1 × 2¹⁵) through 32,767 (2¹⁵ − 1) for representation as two's complement. Since 2¹⁶ is 65,536, a processor with 16-bit memory addresses can directly access 64 KB (65,536 bytes) of byte-addressable memory. If a system uses segmentation with 16-bit segment offsets, more can be accessed. 16-bit architecture: The MIT Whirlwind (1951) was quite possibly the first-ever 16-bit computer. It was an unusual word size for the era; most systems used six-bit character code and used a word length of some multiple of 6 bits. This changed with the effort to introduce ASCII, which used a 7-bit code and naturally ...
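
The ranges quoted above can be checked with a few lines of Python. This is only an illustrative sketch; the helper name `to_twos_complement` is hypothetical and not taken from the source.

```python
BITS = 16

# Number of distinct bit patterns a 16-bit register can hold.
distinct_values = 2 ** BITS

# Range when the pattern is read as an unsigned binary number.
unsigned_min, unsigned_max = 0, 2 ** BITS - 1

# Range when the pattern is read as two's complement.
signed_min, signed_max = -(2 ** (BITS - 1)), 2 ** (BITS - 1) - 1


def to_twos_complement(raw: int, bits: int = BITS) -> int:
    """Interpret an unsigned bit pattern as a two's complement integer."""
    raw &= (1 << bits) - 1          # keep only the low `bits` bits
    if raw & (1 << (bits - 1)):     # sign bit set: value is negative
        return raw - (1 << bits)
    return raw
```

The same 16-bit pattern therefore maps to different integers depending on the representation: `0xFFFF` is 65,535 when unsigned and −1 in two's complement.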

Gigabyte

The gigabyte is a multiple of the unit byte for digital information. The prefix *giga* means 10⁹ in the International System of Units (SI). Therefore, one gigabyte is one billion bytes. The unit symbol for the gigabyte is GB. This definition is used in all contexts of science (especially data science), engineering, business, and many areas of computing, including storage capacities of hard drives, solid state drives, and tapes, as well as data transmission speeds. However, the term is also used in some fields of computer science and information technology to denote 1024³ (or 2³⁰) bytes, particularly for sizes of RAM. Thus, prior to 1998, some usage of *gigabyte* has been ambiguous. To resolve this difficulty, IEC 80000-13 clarifies that a *gigabyte* (GB) is 10⁹ bytes and specifies the term *gibibyte* (GiB) to denote 2³⁰ bytes. These differences are still readily seen, for example, when a 400 GB drive's capacity is displayed by Microsoft Windows as 372 ...
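
The gap between the two definitions is plain arithmetic. The following Python sketch converts a decimal gigabyte figure into gibibytes; the function name `gb_to_gib` is made up for illustration.

```python
BYTES_PER_GB = 10 ** 9    # SI gigabyte
BYTES_PER_GIB = 2 ** 30   # IEC gibibyte (1024 ** 3)


def gb_to_gib(gigabytes: float) -> float:
    """Convert a capacity in decimal gigabytes to binary gibibytes."""
    return gigabytes * BYTES_PER_GB / BYTES_PER_GIB


if __name__ == "__main__":
    # A drive sold as 400 GB holds about 372.5 GiB.
    print(f"{gb_to_gib(400):.1f} GiB")
```

The ratio 10⁹ / 2³⁰ is roughly 0.931, so a capacity quoted in GB always looks about 7% smaller when expressed in GiB.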

32-bit

In computer architecture, 32-bit computing refers to computer systems with a processor, memory, and other major system components that operate on data in 32-bit units. Compared to smaller bit widths, 32-bit computers can perform large calculations more efficiently and process more data per clock cycle. Typical 32-bit personal computers also have a 32-bit address bus, permitting up to 4 GB of RAM to be accessed, far more than previous generations of system architecture allowed. 32-bit designs have been used since the earliest days of electronic computing, in experimental systems and then in large mainframe and minicomputer systems. The first hybrid 16/32-bit microprocessor, the Motorola 68000, was introduced in the late 1970s and used in systems such as the original Apple Macintosh. Fully 32-bit microprocessors such as the Motorola 68020 and Intel 80386 were launched in the early to mid 1980s and became dominant by the early 1990s. This generation of personal computers coin ...
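
The 4 GB limit follows directly from the address width, assuming byte-addressable memory and one address per byte. A minimal Python sketch (the function name `addressable_bytes` is hypothetical):

```python
def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with a flat, byte-addressable address of the given width."""
    return 2 ** address_bits


if __name__ == "__main__":
    for bits in (16, 24, 32):
        size = addressable_bytes(bits)
        print(f"{bits}-bit addresses: {size:,} bytes")
```

With 32 address bits this gives 4,294,967,296 bytes, which is exactly 4 × 2³⁰ bytes (the "4 GB" of the text in the binary sense).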

Clock Signal

In electronics and especially synchronous digital circuits, a clock signal (historically also known as *logic beat*) oscillates between a high and a low state and is used like a metronome to coordinate actions of digital circuits. A clock signal is produced by a clock generator. Although more complex arrangements are used, the most common clock signal is in the form of a square wave with a 50% duty cycle, usually with a fixed, constant frequency. Circuits using the clock signal for synchronization may become active at the rising edge, at the falling edge, or, in the case of double data rate, at both the rising and falling edges of the clock cycle. Digital circuits: Most integrated circuits (ICs) of sufficient complexity use a clock signal in order to synchronize different parts of the circuit, cycling at a rate slower than the worst-case internal propagation delays. In some cases, more than one clock cycle is required to perform a predictable action. As ICs beco ...

Double Data Rate

In computing, a computer bus operating with double data rate (DDR) transfers data on both the rising and falling edges of the clock signal. This is also known as double pumped, dual-pumped, and double transition. The term toggle mode is used in the context of NAND flash memory. Overview: The simplest way to design a clocked electronic circuit is to make it perform one transfer per full cycle (rise and fall) of a clock signal. This, however, requires that the clock signal changes twice per transfer, while the data lines change at most once per transfer. When operating at a high bandwidth, signal integrity limitations constrain the clock frequency. By using both edges of the clock, the data signals operate with the same limiting frequency, thereby doubling the data transmission rate. This technique has been used for microprocessor front-side buses, Ultra-3 SCSI, expansion buses (AGP, PCI-X), graphics memory (GDDR), main memory (both RDRAM and DDR1 through DDR5), and t ...
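
The doubling can be made concrete with a small calculation. The Python sketch below is illustrative only: the function names and the example figures (a 200 MHz clock on a 64-bit bus) are assumptions, not values from the source.

```python
def transfers_per_second_millions(clock_mhz: int, transfers_per_cycle: int) -> int:
    """Transfer rate in MT/s: 1 transfer per cycle for single data rate, 2 for DDR."""
    return clock_mhz * transfers_per_cycle


def bandwidth_mb_per_s(clock_mhz: int, bus_width_bits: int, transfers_per_cycle: int) -> int:
    """Peak bandwidth in MB/s (10**6 bytes per second) for a parallel bus."""
    bytes_per_transfer = bus_width_bits // 8
    return transfers_per_second_millions(clock_mhz, transfers_per_cycle) * bytes_per_transfer


if __name__ == "__main__":
    # Hypothetical bus: 200 MHz clock, 64 data lines.
    print("SDR:", bandwidth_mb_per_s(200, 64, 1), "MB/s")
    print("DDR:", bandwidth_mb_per_s(200, 64, 2), "MB/s")
```

The clock frequency is identical in both cases; only the number of transfers per cycle changes, which is why DDR figures are usually quoted in MT/s rather than MHz.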

Hertz

The hertz (symbol: Hz) is the unit of frequency in the International System of Units (SI), equivalent to one event (or cycle) per second. The hertz is an SI derived unit whose expression in terms of SI base units is s⁻¹, meaning that one hertz is the reciprocal of one second. It is named after Heinrich Rudolf Hertz (1857–1894), the first person to provide conclusive proof of the existence of electromagnetic waves. Hertz are commonly expressed in multiples: kilohertz (kHz), megahertz (MHz), gigahertz (GHz), terahertz (THz). Some of the unit's most common uses are in the description of periodic waveforms and musical tones, particularly those used in radio- and audio-related applications. It is also used to describe the clock speeds at which computers and other electronics are driven. The units are sometimes also used as a representation of the energy of a photon, via the Planck relation *E* = *hν*, where *E* is the photon's energy, *ν* is its f ...
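
Both relations mentioned above, the reciprocal link between frequency and period and the Planck relation, reduce to one-line calculations. A brief Python sketch, with hypothetical function names and the Planck constant set to its exact SI value:

```python
PLANCK_CONSTANT_J_S = 6.62607015e-34  # exact by SI definition, in joule-seconds


def period_seconds(frequency_hz: float) -> float:
    """Duration of one cycle: the reciprocal of the frequency."""
    return 1.0 / frequency_hz


def photon_energy_joules(frequency_hz: float) -> float:
    """Photon energy from the Planck relation E = h * nu."""
    return PLANCK_CONSTANT_J_S * frequency_hz


if __name__ == "__main__":
    # One cycle of a 1 GHz clock lasts one nanosecond.
    print(period_seconds(1e9))
    print(photon_energy_joules(1e15))
```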

Front-side Bus

A front-side bus (FSB) is a computer communication interface (bus) that was often used in Intel-chip-based computers during the 1990s and 2000s. The EV6 bus served the same function for competing AMD CPUs. Both typically carry data between the central processing unit (CPU) and a memory controller hub, known as the northbridge. Depending on the implementation, some computers may also have a back-side bus that connects the CPU to the cache. This bus and the cache connected to it are faster than accessing the system memory (or RAM) via the front-side bus. The speed of the front-side bus is often used as an important measure of the performance of a computer. The original front-side bus architecture has been replaced by HyperTransport, Intel QuickPath Interconnect or Direct Media Interface in modern volume CPUs. History: The term came into use by Intel Corporation about the time the Pentium Pro and Pentium II products were announced, in the 1990s. "Front side" refers to the exte ...