In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task.

Feature learning is motivated by the fact that machine learning tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data such as images, video, and sensor data has not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms.

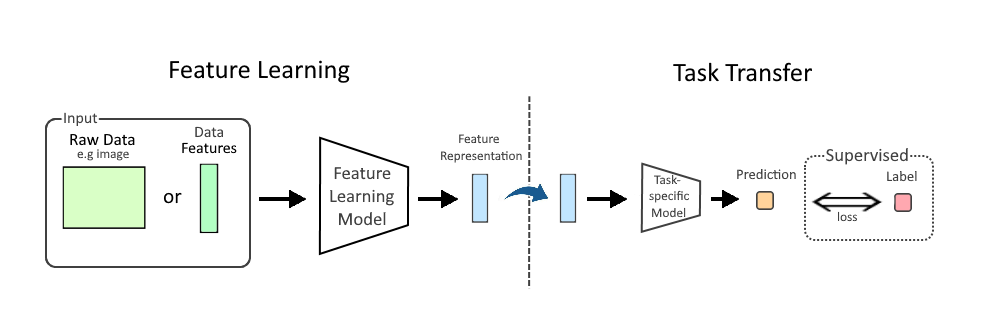

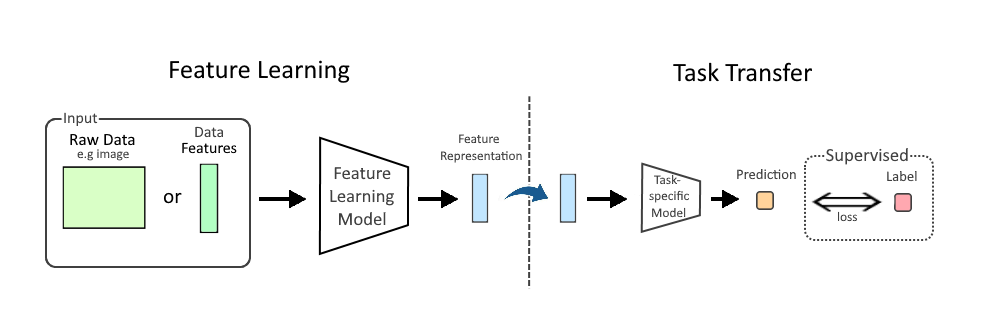

Feature learning can be supervised, unsupervised, or self-supervised.

* In supervised feature learning, features are learned using labeled input data. Labeled data consists of input-label pairs, where the input is given to the model and the model must produce the ground truth label as the correct answer. This can be leveraged to generate feature representations that result in high label prediction accuracy. Examples include supervised neural networks, multilayer perceptrons and (supervised) dictionary learning.

* In unsupervised feature learning, features are learned with unlabeled input data by analyzing the relationship between points in the dataset. Examples include dictionary learning, independent component analysis, matrix factorization and various forms of clustering.

* In self-supervised feature learning, features are learned using unlabeled data, as in unsupervised learning. However, input-label pairs are constructed from each data point, which enables learning the structure of the data through supervised methods such as gradient descent. Classical examples include word embeddings and autoencoders (Goodfellow, Bengio and Courville, ''Deep Learning'', 2016, pp. 499–516). Self-supervised learning (SSL) has since been applied to many modalities through the use of deep neural network architectures such as CNNs and Transformers.

Supervised

Supervised feature learning is learning features from labeled data. The data label allows the system to compute an error term, the degree to which the system fails to produce the label, which can then be used as feedback to correct the learning process (reduce/minimize the error). Approaches include:

Supervised dictionary learning

Dictionary learning develops a set (dictionary) of representative elements from the input data such that each data point can be represented as a weighted sum of the representative elements. The dictionary elements and the weights may be found by minimizing the average representation error (over the input data), together with ''L1'' regularization on the weights to enable sparsity (i.e., the representation of each data point has only a few nonzero weights). Supervised dictionary learning exploits both the structure underlying the input data and the labels for optimizing the dictionary elements. For example, one supervised dictionary learning technique applies dictionary learning to classification problems by jointly optimizing the dictionary elements, the weights for representing data points, and the parameters of the classifier based on the input data. In particular, a minimization problem is formulated, where the objective function consists of the classification error, the representation error, an ''L1'' regularization on the representing weights for each data point (to enable sparse representation of data), and an ''L2'' regularization on the parameters of the classifier.
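
One way to write down the four-term objective described above is sketched below. The notation is an assumption introduced only for illustration: data points x_i with labels y_i, dictionary D, sparse weights a_i, a classifier f with parameters \theta, a classification loss \ell, and trade-off constants \lambda_0, \lambda_1, \lambda_2. None of these symbols come from the text itself.

```latex
\min_{D,\ \{a_i\},\ \theta}\;
\sum_{i=1}^{n} \Big[
    \underbrace{\ell\big(y_i,\, f(a_i;\theta)\big)}_{\text{classification error}}
  + \lambda_0 \underbrace{\lVert x_i - D a_i \rVert_2^2}_{\text{representation error}}
  + \lambda_1 \underbrace{\lVert a_i \rVert_1}_{L1\text{ sparsity}}
\Big]
+ \lambda_2 \underbrace{\lVert \theta \rVert_2^2}_{L2\text{ on classifier}}
```

The three minimization variables correspond to the jointly optimized quantities: dictionary elements, per-point weights, and classifier parameters.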

Neural networks

Neural networks are a family of learning algorithms that use a "network" consisting of multiple layers of inter-connected nodes. They are inspired by the animal nervous system, where the nodes are viewed as neurons and edges are viewed as synapses. Each edge has an associated weight, and the network defines computational rules for passing input data from the network's input layer to the output layer. A network function associated with a neural network characterizes the relationship between input and output layers, which is parameterized by the weights. With appropriately defined network functions, various learning tasks can be performed by minimizing a cost function over the network function (weights).

Multilayer neural networks can be used to perform feature learning, since they learn a representation of their input at the hidden layer(s) which is subsequently used for classification or regression at the output layer. A popular network architecture of this type is the Siamese network.

Unsupervised

Unsupervised feature learning is learning features from unlabeled data. The goal of unsupervised feature learning is often to discover low-dimensional features that capture some structure underlying the high-dimensional input data. When the feature learning is performed in an unsupervised way, it enables a form of semisupervised learning where features learned from an unlabeled dataset are then employed to improve performance in a supervised setting with labeled data. Several approaches are introduced in the following.

''K''-means clustering

''K''-means clustering is an approach for vector quantization. In particular, given a set of ''n'' vectors, ''k''-means clustering groups them into ''k'' clusters (i.e., subsets) in such a way that each vector belongs to the cluster with the closest mean. The problem is computationally NP-hard, although suboptimal greedy algorithms have been developed.

''K''-means clustering can be used to group an unlabeled set of inputs into ''k'' clusters, and then use the centroids of these clusters to produce features. These features can be produced in several ways. The simplest is to add ''k'' binary features to each sample, where each feature ''j'' has value one if and only if the ''j''th centroid learned by ''k''-means is the closest to the sample under consideration. It is also possible to use the distances to the clusters as features, perhaps after transforming them through a radial basis function (a technique that has been used to train RBF networks). Coates and Ng note that certain variants of ''k''-means behave similarly to sparse coding algorithms.

In a comparative evaluation of unsupervised feature learning methods, Coates, Lee and Ng found that ''k''-means clustering with an appropriate transformation outperforms the more recently invented auto-encoders and RBMs on an image classification task. ''K''-means also improves performance in the domain of NLP, specifically for named-entity recognition; there, it competes with Brown clustering, as well as with distributed word representations (also known as neural word embeddings).
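
The two feature constructions described above (binary nearest-centroid indicators and RBF-transformed distances) can be sketched as follows. This is a minimal illustration, assuming NumPy and a plain Lloyd-style greedy iteration. The function names, the `gamma` width parameter and the toy data are hypothetical, and this is not the specific pipeline evaluated by Coates, Lee and Ng.

```python
import numpy as np

def pairwise_dists(X, centroids):
    # Euclidean distance from every sample to every centroid: shape (n, k).
    return np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)

def kmeans(X, k, n_iter=50, seed=0):
    # Lloyd's algorithm: a greedy heuristic that reaches a local optimum only.
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        labels = pairwise_dists(X, centroids).argmin(axis=1)
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids

def one_hot_features(X, centroids):
    # Feature j is 1 iff centroid j is the closest centroid to the sample.
    d = pairwise_dists(X, centroids)
    feats = np.zeros_like(d)
    feats[np.arange(len(X)), d.argmin(axis=1)] = 1.0
    return feats

def rbf_features(X, centroids, gamma=1.0):
    # Distances to all centroids, passed through a Gaussian radial basis function.
    return np.exp(-gamma * pairwise_dists(X, centroids) ** 2)

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, (50, 2)), rng.normal(3.0, 0.3, (50, 2))])
centroids = kmeans(X, k=2)
hard = one_hot_features(X, centroids)
soft = rbf_features(X, centroids)
```

The binary features discard how far a sample is from each centroid, while the RBF features keep a graded notion of proximity to every cluster.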

Principal component analysis

Principal component analysis (PCA) is often used for dimension reduction. Given an unlabeled set of ''n'' input data vectors, PCA generates ''p'' (which is much smaller than the dimension of the input data) right singular vectors corresponding to the ''p'' largest singular values of the data matrix, where the ''k''th row of the data matrix is the ''k''th input data vector shifted by the sample mean of the input (i.e., subtracting the sample mean from the data vector). Equivalently, these singular vectors are the eigenvectors corresponding to the ''p'' largest eigenvalues of the sample covariance matrix of the input vectors. These ''p'' singular vectors are the feature vectors learned from the input data, and they represent directions along which the data has the largest variations.

PCA is a linear feature learning approach since the ''p'' singular vectors are linear functions of the data matrix. The singular vectors can be generated via a simple algorithm with ''p'' iterations. In the ''i''th iteration, the projection of the data matrix on the ''(i-1)''th singular vector is subtracted, and the ''i''th singular vector is found as the right singular vector corresponding to the largest singular value of the residual data matrix.

PCA has several limitations. First, it assumes that the directions with large variance are of most interest, which may not be the case. Second, PCA relies only on orthogonal transformations of the original data, and it exploits only the first- and second-order moments of the data, which may not characterize the data distribution well. Furthermore, PCA can effectively reduce dimension only when the input data vectors are correlated (which results in a few dominant eigenvalues).
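
The equivalence between the two descriptions of PCA (top right singular vectors of the centered data matrix, and top eigenvectors of the sample covariance matrix) can be checked numerically. The sketch below assumes NumPy. The function name `pca` and the synthetic data are illustrative choices, and it uses a single SVD call rather than the iterative deflation procedure mentioned above.

```python
import numpy as np

def pca(X, p):
    # Center each column by subtracting the sample mean.
    Xc = X - X.mean(axis=0)
    # Rows of Vt are right singular vectors, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:p]               # (p, d) learned feature directions
    features = Xc @ components.T      # (n, p) low-dimensional representation
    return components, features, s[:p]

rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 5))  # correlated 5-D data

components, features, sing = pca(X, p=2)

# Same directions from the eigendecomposition of the sample covariance matrix.
cov = np.cov(X, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)      # ascending eigenvalue order
top_vals = eigvals[::-1][:2]
top_vecs = eigvecs[:, ::-1][:, :2].T        # (2, 5), descending order
```

The two sets of directions agree up to sign, and the squared singular values divided by ''n'' - 1 equal the corresponding covariance eigenvalues.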

Local linear embedding

Local linear embedding (LLE) is a nonlinear learning approach for generating low-dimensional neighbor-preserving representations from (unlabeled) high-dimensional input. The approach was proposed by Roweis and Saul (2000). The general idea of LLE is to reconstruct the original high-dimensional data using lower-dimensional points while maintaining some geometric properties of the neighborhoods in the original data set. LLE consists of two major steps. The first step is for "neighbor-preserving", where each input data point ''Xi'' is reconstructed as a weighted sum of its ''K'' nearest neighbor data points, and the optimal weights are found by minimizing the average squared reconstruction error (i.e., the difference between an input point and its reconstruction) under the constraint that the weights associated with each point sum up to one. The second step is for "dimension reduction", by looking for vectors in a lower-dimensional space that minimize the representation error using the optimized weights from the first step. Note that in the first step, the weights are optimized with fixed data, which can be solved as a least squares problem. In the second step, lower-dimensional points are optimized with fixed weights, which can be solved via sparse eigenvalue decomposition.

The reconstruction weights obtained in the first step capture the "intrinsic geometric properties" of a neighborhood in the input data. It is assumed that original data lie on a smooth lower-dimensional manifold, and the "intrinsic geometric properties" captured by the weights of the original data are also expected to be on the manifold. This is why the same weights are used in the second step of LLE. Compared with PCA, LLE is more powerful in exploiting the underlying data structure.
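
The two steps of LLE can be sketched as below. This is a minimal illustration, assuming NumPy. The small regularizer `reg` on the local Gram matrix and the use of a dense eigendecomposition (instead of the sparse solver mentioned above) are simplifications for small inputs, and the function name and test curve are hypothetical.

```python
import numpy as np

def lle(X, n_neighbors, n_components, reg=1e-3):
    n = X.shape[0]
    # Find the K nearest neighbors of each point (excluding the point itself).
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(sq_dists, np.inf)
    neighbors = np.argsort(sq_dists, axis=1)[:, :n_neighbors]

    # Step 1: least-squares reconstruction weights, constrained to sum to one.
    W = np.zeros((n, n))
    for i in range(n):
        Z = X[neighbors[i]] - X[i]           # neighbors relative to point i
        G = Z @ Z.T                          # local Gram matrix (K x K)
        G += reg * np.trace(G) * np.eye(n_neighbors)  # assumed stabilizer
        w = np.linalg.solve(G, np.ones(n_neighbors))
        W[i, neighbors[i]] = w / w.sum()

    # Step 2: with W fixed, the embedding is given by the eigenvectors of
    # (I - W)^T (I - W) with the smallest nonzero eigenvalues.
    I_minus_W = np.eye(n) - W
    M = I_minus_W.T @ I_minus_W
    _, eigvecs = np.linalg.eigh(M)           # ascending eigenvalue order
    # Skip the first eigenvector (constant, eigenvalue close to zero).
    return eigvecs[:, 1:n_components + 1], W

t = np.linspace(0.0, 3.0 * np.pi, 120)
X = np.column_stack([np.cos(t), np.sin(t), 0.3 * t])   # a 1-D curve in 3-D
Y, W = lle(X, n_neighbors=8, n_components=1)
```

The same weight matrix `W` is used in both steps, which mirrors the assumption that the local geometry captured in the high-dimensional space should carry over to the low-dimensional embedding.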

Independent component analysis

Independent component analysis (ICA) is a technique for forming a data representation using a weighted sum of independent non-Gaussian components. The assumption of non-Gaussianity is imposed since the weights cannot be uniquely determined when all the components follow a Gaussian distribution.

Unsupervised dictionary learning

Unsupervised dictionary learning does not utilize data labels and exploits the structure underlying the data for optimizing dictionary elements. An example of unsupervised dictionary learning is sparse coding, which aims to learn basis functions (dictionary elements) for data representation from unlabeled input data. Sparse coding can be applied to learn overcomplete dictionaries, where the number of dictionary elements is larger than the dimension of the input data. Aharon et al. proposed the K-SVD algorithm for learning a dictionary of elements that enables sparse representation.
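
To make the sparse representation step concrete, the sketch below computes sparse weights for one data point against a fixed, overcomplete dictionary. It assumes NumPy and uses the iterative shrinkage-thresholding algorithm (ISTA) as a stand-in solver for the ''L1''-regularized problem. It is not K-SVD and it does not update the dictionary. The names `sparse_code`, `lam` and the random dictionary are illustrative.

```python
import numpy as np

def soft_threshold(v, t):
    # Proximal operator of the L1 norm: shrink every entry toward zero by t.
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def objective(x, D, a, lam):
    # Representation error plus L1 penalty on the weights.
    return 0.5 * np.sum((x - D @ a) ** 2) + lam * np.sum(np.abs(a))

def sparse_code(x, D, lam=0.1, n_iter=200):
    # ISTA for min_a 0.5 * ||x - D a||^2 + lam * ||a||_1 with D held fixed.
    L = np.linalg.norm(D, 2) ** 2        # Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)
        a = soft_threshold(a - grad / L, lam / L)
    return a

rng = np.random.default_rng(0)
D = rng.normal(size=(8, 20))             # overcomplete: 20 atoms in 8 dimensions
D /= np.linalg.norm(D, axis=0)           # unit-norm dictionary elements
true_a = np.zeros(20)
true_a[[3, 11]] = [1.5, -2.0]
x = D @ true_a                           # a point built from two atoms

lam = 0.05
a = sparse_code(x, D, lam=lam, n_iter=500)
```

A full dictionary learning method would alternate a step like this with an update of `D`; only the coding half is shown here.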

Multilayer/deep architectures

The hierarchical architecture of the biological neural system inspires deep learning architectures for feature learning by stacking multiple layers of learning nodes. These architectures are often designed based on the assumption of distributed representation: observed data is generated by the interactions of many different factors on multiple levels. In a deep learning architecture, the output of each intermediate layer can be viewed as a representation of the original input data. Each level uses the representation produced by the previous level as input, and produces new representations as output, which are then fed to higher levels. The input at the bottom layer is raw data, and the output of the final layer is the final low-dimensional feature or representation.

Restricted Boltzmann machine

Restricted Boltzmann machines (RBMs) are often used as a building block for multilayer learning architectures. An RBM can be represented by an undirected bipartite graph consisting of a group of binary hidden variables, a group of visible variables, and edges connecting the hidden and visible nodes. It is a special case of the more general Boltzmann machine with the constraint of no intra-layer connections (no edges among hidden nodes or among visible nodes). Each edge in an RBM is associated with a weight. The weights together with the connections define an energy function, based on which a joint distribution of visible and hidden nodes can be devised. Based on the topology of the RBM, the hidden (visible) variables are independent, conditioned on the visible (hidden) variables. Such conditional independence facilitates computations.

An RBM can be viewed as a single layer architecture for unsupervised feature learning. In particular, the visible variables correspond to input data, and the hidden variables correspond to feature detectors. The weights can be trained by maximizing the probability of visible variables using Hinton's contrastive divergence (CD) algorithm.

In general, training an RBM by solving the maximization problem tends to result in non-sparse representations. Sparse RBM was proposed to enable sparse representations. The idea is to add a regularization term to the data likelihood objective function, which penalizes the deviation of the expected hidden variables from a small constant.
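
A single-step contrastive divergence update (often written CD-1) for a binary RBM can be sketched as follows. This assumes NumPy, binary visible and hidden units, and mean-field probabilities in the negative phase. The class name, learning rate and toy data are hypothetical, and the sparsity regularizer of the sparse RBM is not included.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class RBM:
    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = 0.01 * self.rng.normal(size=(n_visible, n_hidden))
        self.b = np.zeros(n_visible)   # visible biases
        self.c = np.zeros(n_hidden)    # hidden biases

    def hidden_probs(self, v):
        # Hidden units are conditionally independent given the visible units.
        return sigmoid(v @ self.W + self.c)

    def visible_probs(self, h):
        # Visible units are conditionally independent given the hidden units.
        return sigmoid(h @ self.W.T + self.b)

    def cd1_step(self, v0, lr=0.1):
        # Positive phase: hidden activations driven by the data.
        ph0 = self.hidden_probs(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        # Negative phase: one Gibbs step back to the visibles and up again.
        pv1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(pv1)
        n = len(v0)
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.b += lr * (v0 - pv1).mean(axis=0)
        self.c += lr * (ph0 - ph1).mean(axis=0)
        return float(np.mean((v0 - pv1) ** 2))   # reconstruction error

# Toy data: two repeated binary patterns.
patterns = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
data = np.repeat(patterns, 32, axis=0)

rbm = RBM(n_visible=6, n_hidden=3)
errors = [rbm.cd1_step(data) for _ in range(200)]
features = rbm.hidden_probs(data)   # learned feature detectors applied to data
```

After training, `hidden_probs` plays the role of the feature extractor: each column is the activation of one hidden feature detector.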

Autoencoder

An autoencoder consisting of an encoder and a decoder is a paradigm for deep learning architectures. An example is provided by Hinton and Salakhutdinov, where the encoder uses raw data (e.g., an image) as input and produces a feature or representation as output, and the decoder uses the extracted feature from the encoder as input and reconstructs the original input raw data as output. The encoder and decoder are constructed by stacking multiple layers of RBMs. The parameters involved in the architecture were originally trained in a greedy layer-by-layer manner: after one layer of feature detectors is learned, they are fed up as visible variables for training the corresponding RBM. Current approaches typically apply end-to-end training with stochastic gradient descent methods. Training can be repeated until some stopping criteria are satisfied.

Self-supervised

Self-supervised representation learning is learning features by training on the structure of unlabeled data rather than relying on explicit labels for an information signal. This approach has enabled the combined use of deep neural network architectures and larger unlabeled datasets to produce deep feature representations. Training tasks are typically contrastive, generative, or both. Contrastive representation learning trains representations for associated data pairs, called positive samples, to be aligned, while pairs with no relation, called negative samples, are contrasted. A larger portion of negative samples is typically necessary in order to prevent catastrophic collapse, which is when all inputs are mapped to the same representation. Generative representation learning tasks the model with producing the correct data to either match a restricted input or reconstruct the full input from a lower dimensional representation.

A common setup for self-supervised representation learning of a certain data type (e.g. text, image, audio, video) is to pretrain the model using large datasets of general context, unlabeled data. Depending on the context, the result of this is either a set of representations for common data segments (e.g. words) which new data can be broken into, or a neural network able to convert each new data point (e.g. image) into a set of lower dimensional features. In either case, the output representations can then be used as an initialization in many different problem settings where labeled data may be limited. Specialization of the model to specific tasks is typically done with supervised learning, either by fine-tuning the model / representations with the labels as the signal, or freezing the representations and training an additional model which takes them as an input.

Many self-supervised training schemes have been developed for use in representation learning of various modalities, often first showing successful application in text or image before being transferred to other data types.

Text

Word2vec is a word embedding technique which learns to represent words through self-supervision over each word and its neighboring words in a sliding window across a large corpus of text. The model has two possible training schemes to produce word vector representations, one generative and one contrastive. The first is word prediction given each of the neighboring words as an input. The second is training on the representation similarity for neighboring words and representation dissimilarity for random pairs of words. A limitation of word2vec is that only the pairwise co-occurrence structure of the data is used, and not the ordering or entire set of context words. More recent transformer-based representation learning approaches attempt to solve this with word prediction tasks. GPT pretrains on next word prediction using prior input words as context, whereas BERT masks random tokens in order to provide bidirectional context.
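
The sliding-window self-supervision can be illustrated by how training pairs are built from raw text, before any model is trained. The sketch below uses only the Python standard library. The function names are hypothetical, and negatives are drawn uniformly for simplicity, which is an assumption rather than the sampling distribution used by word2vec itself.

```python
import random

def skipgram_pairs(tokens, window=2):
    # Positive (center, context) pairs: every neighbor within the window.
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def negative_pairs(center, context_words, vocab, k, rng):
    # Random (center, word) pairs for words that did not co-occur with center.
    candidates = sorted(w for w in vocab if w != center and w not in context_words)
    if not candidates:
        return []
    return [(center, rng.choice(candidates)) for _ in range(k)]

tokens = "the quick brown fox jumps over the lazy dog".split()
positives = skipgram_pairs(tokens, window=1)

rng = random.Random(0)
context_of_fox = {ctx for center, ctx in positives if center == "fox"}
negatives = negative_pairs("fox", context_of_fox, set(tokens), k=3, rng=rng)
```

The positive pairs carry the supervision signal constructed from the data itself; the contrastive scheme additionally pushes the sampled negative pairs apart. Note that the pairs record co-occurrence only, not word order, which is the limitation mentioned above.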

Other self-supervised techniques extend word embeddings by finding representations for larger text structures such as sentences or paragraphs in the input data. Doc2vec extends the generative training approach in word2vec by adding an additional input to the word prediction task based on the paragraph it is within, and is therefore intended to represent paragraph level context.

Image

The domain of image representation learning has employed many different self-supervised training techniques, including transformation, inpainting, patch discrimination and clustering. Examples of generative approaches are Context Encoders, which trains an AlexNet CNN architecture to generate a removed image region given the masked image as input, and iGPT, which applies the GPT-2 language model architecture to images by training on pixel prediction after reducing the image resolution.

Many other self-supervised methods use siamese networks, which generate different views of the image through various augmentations that are then aligned to have similar representations. The challenge is avoiding collapsing solutions where the model encodes all images to the same representation. SimCLR is a contrastive approach which uses negative examples in order to generate image representations with a ResNet CNN. Bootstrap Your Own Latent (BYOL) removes the need for negative samples by encoding one of the views with a slow moving average of the model parameters as they are being modified during training.
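
A contrastive objective of the kind described above can be sketched on precomputed embeddings. The example assumes NumPy and implements a normalized temperature-scaled cross-entropy loss over two views of a batch, which is the form of loss associated with SimCLR. The function name, temperature value and random embeddings are illustrative, and no encoder or augmentation pipeline is included.

```python
import numpy as np

def nt_xent_loss(z1, z2, temperature=0.5):
    # z1[i] and z2[i] are embeddings of two views of the same image (positives).
    # All other embeddings in the batch act as negatives.
    n = len(z1)
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # cosine similarity
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                     # exclude self-pairs
    # Index of the positive partner for each of the 2n rows.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Row-wise log-softmax, computed stably.
    shifted = sim - sim.max(axis=1, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(2 * n), pos].mean())

rng = np.random.default_rng(0)
view_a = rng.normal(size=(8, 16))
aligned_b = view_a + 0.05 * rng.normal(size=(8, 16))   # views that agree
random_b = rng.normal(size=(8, 16))                    # unrelated views

loss_aligned = nt_xent_loss(view_a, aligned_b)
loss_random = nt_xent_loss(view_a, random_b)
```

The loss is lower when the two views of each image map to nearby representations and different images stay apart, so a collapsed solution (all images mapped to one point) is penalized through the negative pairs.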

Graph

The goal of many graph representation learning techniques is to produce an embedded representation of each node based on the overall network topology. node2vec extends the word2vec training technique to nodes in a graph by using co-occurrence in random walks through the graph as the measure of association. Another approach is to maximize mutual information, a measure of similarity, between the representations of associated structures within the graph. An example is Deep Graph Infomax, which uses contrastive self-supervision based on mutual information between the representation of a “patch” around each node, and a summary representation of the entire graph. Negative samples are obtained by pairing the graph representation with either representations from another graph in a multigraph training setting, or corrupted patch representations in single graph training.
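The random-walk co-occurrence idea can be sketched as follows. This is a simplified illustration, not the node2vec algorithm itself: it uses uniform random walks, whereas node2vec biases each step with return and in-out parameters. The toy graph, the function names, and the walk length and window size are all assumptions made for the example. The resulting (center, context) pairs play the role that word pairs play in word2vec training.

```python
import random

def random_walk(adjacency, start, length, rng):
    """Walk up to `length` nodes, choosing a uniformly random neighbor each step."""
    walk = [start]
    while len(walk) < length:
        neighbors = adjacency[walk[-1]]
        if not neighbors:              # dead end: stop early
            break
        walk.append(rng.choice(neighbors))
    return walk

def cooccurrence_pairs(walk, window):
    """Pair each node with the nodes at most `window` steps away in the walk."""
    pairs = []
    for i, center in enumerate(walk):
        lo = max(0, i - window)
        hi = min(len(walk), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, walk[j]))
    return pairs

# Hypothetical toy graph stored as an adjacency list.
adjacency = {
    "a": ["b", "c"],
    "b": ["a", "c"],
    "c": ["a", "b", "d"],
    "d": ["c"],
}
rng = random.Random(0)
walks = [random_walk(adjacency, node, 5, rng)
         for node in adjacency for _ in range(3)]
pairs = [p for w in walks for p in cooccurrence_pairs(w, window=2)]
```

Nodes that are close in the graph appear together in many walks, so a skip-gram style model trained on `pairs` would tend to place them near each other in the embedding space.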

Video

With analogous results in masked prediction and clustering, video representation learning approaches are often similar to image techniques but must utilize the temporal sequence of video frames as an additional learned structure. Examples include VCP, which masks video clips and trains to choose the correct one given a set of clip options, and Xu et al., who train a 3D-CNN to identify the original order given a shuffled set of video clips.

Audio

Self-supervised representation techniques have also been applied to many audio data formats, particularly for speech processing. Wav2vec 2.0 discretizes the audio waveform into timesteps via temporal convolutions, and then trains a transformer on masked prediction of random timesteps using a contrastive loss. This is similar to the BERT language model, except as in many SSL approaches to video, the model chooses among a set of options rather than over the entire word vocabulary.
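Choosing among a set of options, rather than over a full vocabulary, can be sketched as a softmax over a handful of candidates. The code below is a minimal illustration under stated assumptions and is not the Wav2vec 2.0 implementation: vectors are plain lists of floats, the function names and the temperature of 0.1 are hypothetical, and the distractors are simply passed in by the caller. The loss is small when the model's output at a masked timestep is closest to the true target among the candidates.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def candidate_contrastive_loss(context, true_target, distractors, temperature=0.1):
    """Cross-entropy of picking the true target out of a small candidate set.

    `context` is the model output at a masked timestep, `true_target` is the
    representation that was masked, and `distractors` are wrong options.
    """
    candidates = [true_target] + distractors
    logits = [cosine(context, c) / temperature for c in candidates]
    log_denominator = math.log(sum(math.exp(l) for l in logits))
    return log_denominator - logits[0]   # negative log-probability of the true target
```

Because the softmax runs only over the supplied candidates, the cost of each prediction depends on the number of distractors and not on the size of any vocabulary.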

Multimodal

Self-supervised learning has also been used to develop joint representations of multiple data types. Approaches usually rely on some natural or human-derived association between the modalities as an implicit label, for instance video clips of animals or objects with characteristic sounds, or captions written to describe images. CLIP produces a joint image-text representation space by training to align image and text encodings from a large dataset of image-caption pairs using a contrastive loss. MERLOT Reserve trains a transformer-based encoder to jointly represent audio, subtitles and video frames from a large dataset of videos through three joint pretraining tasks: contrastive masked prediction of either audio or text segments given the video frames and surrounding audio and text context, along with contrastive alignment of video frames with their corresponding captions. Multimodal representation models are typically unable to assume direct correspondence of representations in the different modalities, since the precise alignment can often be noisy or ambiguous. For example, the text "dog" could be paired with many different pictures of dogs, and correspondingly a picture of a dog could be captioned with varying degrees of specificity. This limitation means that downstream tasks may require an additional generative mapping network between modalities to achieve optimal performance, such as in DALL-E 2 for text-to-image generation.

See also

* Automated machine learning (AutoML)

* Deep learning

* Feature detection (computer vision)

* Feature extraction

* Word embedding

* Vector quantization

* Variational autoencoder
