The replication crisis (also called the replicability crisis and the reproducibility crisis) is an ongoing methodological crisis in which the results of many scientific studies are difficult or impossible to reproduce. Because the reproducibility of empirical results is an essential part of the scientific method, such failures undermine the credibility of theories building on them and potentially call into question substantial parts of scientific knowledge.

The replication crisis is frequently discussed in relation to psychology and medicine, where considerable efforts have been undertaken to reinvestigate classic results, to determine both their reliability and, if found unreliable, the reasons for the failure. Data strongly indicate that other natural and social sciences are affected as well.

The phrase ''replication crisis'' was coined in the early 2010s as part of a growing awareness of the problem. Considerations of causes and remedies have given rise to a new scientific discipline, metascience, which uses methods of empirical research to examine empirical research practice.

Since empirical research involves both obtaining and analyzing data, considerations about its reproducibility fall into two categories. The validation of the analysis and interpretation of the data obtained in a study is called reproducibility in the narrow sense. The task of repeating the experiment or observational study to obtain new, independent data with the goal of reaching the same or similar conclusions as an original study is called replication.

Background

Replication has been called "the cornerstone of science". Environmental health scientist Stefan Schmidt began a 2009 review with this description of replication:

But there is limited consensus on how to define ''replication'' and potentially related concepts.

A number of types of replication have been identified:

#''Direct'' or ''exact replication'', where an experimental procedure is repeated as closely as possible.

#''Systematic replication'', where an experimental procedure is largely repeated, with some intentional changes.

#''Conceptual replication'', where a finding or hypothesis is tested using a different procedure.

Conceptual replication allows testing for generalizability and veracity of a result or hypothesis.

''Reproducibility'' can also be distinguished from ''replication'', as referring to reproducing the same results using the same data set. Reproducibility of this type is why many researchers make their data available to others for testing.

The replication crisis does not necessarily mean these fields are unscientific. Rather, it reflects the normal working of science, in which old ideas or those that cannot withstand careful scrutiny are pruned, although this pruning is not always effective.

A hypothesis is generally considered to be supported when the results match the predicted pattern and that pattern of results is found to be statistically significant. Results are generally considered significant when statistical testing shows that, if the null hypothesis (that there is no real effect) were true, data at least as extreme as those observed would occur with a probability of 5% or less. This is written as ''p'' < 0.05, where ''p'' (the ''p''-value) is that probability. With this threshold, about 5% of tests of true null hypotheses will nonetheless produce a significant result, that is, a false positive (an incorrect hypothesis being erroneously found correct), assuming the studies meet all of the statistical assumptions. Some fields use stricter thresholds, such as ''p'' < 0.01 (a 1% false-positive rate when the null hypothesis is true) or ''p'' < 0.001 (0.1%). But a smaller chance of a false positive often requires greater sample sizes or accepts a greater chance of a false negative (a correct hypothesis being erroneously found incorrect). Although ''p''-value testing is the most commonly used method, it is not the only method.
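The false-positive logic of significance thresholds can be checked with a quick simulation. The following Python sketch is a hypothetical illustration, not a method used by any study discussed here: it assumes a one-sample z-test with a known standard deviation (a simplification chosen so that only the standard library is needed) and simulates many studies in which the null hypothesis is true, counting how often each threshold is crossed. The function names and parameter values are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist, fmean

def z_test_p_value(sample, sigma=1.0):
    """Two-sided p-value for H0: population mean == 0, assuming a known sigma."""
    n = len(sample)
    z = fmean(sample) / (sigma / math.sqrt(n))
    return 2 * (1 - NormalDist().cdf(abs(z)))

def false_positive_rate(alpha=0.05, n=30, trials=10_000, seed=1):
    """Fraction of simulated studies reaching p < alpha although H0 is true."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Every simulated study samples a population whose true mean is 0,
        # so any "significant" result is a false positive.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p_value(sample) < alpha:
            hits += 1
    return hits / trials

for alpha in (0.05, 0.01):
    print(alpha, false_positive_rate(alpha))
```

Run as written, roughly 5% of the null studies clear the 0.05 threshold and roughly 1% clear the 0.01 threshold, although none of them reflects a real effect.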

Prevalence

In psychology

Although replicability problems are pervasive across scientific fields, several factors have combined to put psychology at the center of the conversation. Some areas of psychology once considered solid, such as social priming, have come under increased scrutiny due to failed replications. Much of the focus has been on the area of social psychology, although other areas of psychology such as clinical psychology, developmental psychology, and educational research have also been implicated.

In August 2015, the first open empirical study of reproducibility in psychology was published, called The Reproducibility Project: Psychology. In this project, coordinated by psychologist Brian Nosek, researchers redid 100 studies in psychological science from three high-ranking psychology journals (''Journal of Personality and Social Psychology'', ''Journal of Experimental Psychology: Learning, Memory, and Cognition'', and ''Psychological Science''). Of the original studies, 97 had significant effects, but only 36% of the replications of those 97 yielded significant findings (''p'' value below 0.05).

The mean effect size in the replications was approximately half the magnitude of the effects reported in the original studies. The same paper examined the reproducibility rates and effect sizes by journal and discipline. Study replication rates were 23% for the ''Journal of Personality and Social Psychology'', 48% for ''Journal of Experimental Psychology: Learning, Memory, and Cognition'', and 38% for ''Psychological Science''. Studies in the field of cognitive psychology had a higher replication rate (50%) than studies in the field of social psychology (25%).

A study published in 2018 in ''Nature Human Behaviour'' replicated 21 social and behavioral science papers from ''Nature'' and ''Science'', finding that only about 62% of them could be successfully replicated.

Similarly, in a study conducted under the auspices of the Center for Open Science, a team of 186 researchers from 60 different laboratories (representing 36 nationalities from six continents) conducted replications of 28 classic and contemporary findings in psychology.

The study's focus was not only whether the original papers' findings replicated but also the extent to which findings varied as a function of variations in samples and contexts. Overall, 50% of the 28 findings failed to replicate despite massive sample sizes. But if a finding replicated, then it replicated in most samples. If a finding was not replicated, then it failed to replicate with little variation across samples and contexts. This evidence is inconsistent with a proposed explanation that failures to replicate in psychology are likely due to changes in the sample between the original and replication study.

Results of a 2022 study suggest that many earlier brain–phenotype studies (brain-wide association studies, or BWAS) produced invalid conclusions, as the replication of such studies requires samples from thousands of individuals due to small effect sizes.
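One way to see why small effects demand large samples is the standard Fisher z approximation for the sample size needed to detect a population correlation r with a two-sided test: n ≈ ((z(1-α/2) + z(power)) / atanh r)² + 3. The Python sketch below applies that textbook approximation, assuming α = 0.05 and 80% power; it is not the procedure used in the 2022 study, and the correlations are example values only. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate sample size to detect a population correlation r with a
    two-sided test, using the Fisher z-transformation (illustrative sketch)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value, about 1.96 for alpha = 0.05
    z_power = std_normal.inv_cdf(power)          # about 0.84 for 80% power
    fisher_z = math.atanh(r)                     # Fisher transform of the target correlation
    return math.ceil(((z_alpha + z_power) / fisher_z) ** 2 + 3)

for r in (0.5, 0.3, 0.1, 0.05):
    print(f"r = {r}: about {n_for_correlation(r)} participants")
```

Because the required n grows roughly with 1/r², halving the correlation roughly quadruples the sample: about 85 participants suffice for r = 0.3 under these assumptions, whereas r = 0.05 calls for more than 3,000.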

In medicine

Of 49 medical studies from 1990 to 2003 with more than 1,000 citations, 92% found that the studied therapies were effective. Of the studies reporting effective therapies, 16% were contradicted by subsequent studies, 16% had found stronger effects than did subsequent studies, 44% were replicated, and 24% remained largely unchallenged. A 2011 analysis by researchers with pharmaceutical company Bayer found that, at most, a quarter of Bayer's in-house attempts to reproduce published findings confirmed the original results. But the analysis of Bayer's results found that the results that did replicate could often be successfully used for clinical applications.


In a 2012 paper, C. Glenn Begley, a biotech consultant working at Amgen, and Lee Ellis, a medical researcher at the University of Texas, found that only 11% of 53 pre-clinical cancer studies had replications that could confirm conclusions from the original studies.

In late 2021, The Reproducibility Project: Cancer Biology examined 53 top papers about cancer published between 2010 and 2012 and showed that among studies that provided sufficient information to be redone, the effect sizes were 85% smaller on average than the original findings.

A survey of cancer researchers found that half of them had been unable to reproduce a published result.

In other disciplines

In economics

Economics has lagged behind other social sciences and psychology in its attempts to assess replication rates and increase the number of studies that attempt replication.

A 2016 study in the journal ''Science'' replicated 18 experimental studies published in two top-tier economics journals, ''The American Economic Review'' and the ''Quarterly Journal of Economics'', between 2011 and 2014. It found that about 39% of them failed to replicate the original results.

About 20% of studies published in ''The American Economic Review'' are contradicted by other studies despite relying on the same or similar data sets. A study of empirical findings in the ''Strategic Management Journal'' found that about 30% of 27 retested articles showed statistically insignificant results for previously significant findings, whereas about 4% showed statistically significant results for previously insignificant findings.

In water resource management

A 2019 study in ''Scientific Data'' estimated with 95% confidence that, of 1,989 articles on water resources and management published in 2017, study results might be reproduced for only 0.6% to 6.8%, even if each of these articles were to provide sufficient information that allowed for replication.

Across fields

A 2016 survey by ''Nature'' of 1,576 researchers who took a brief online questionnaire on reproducibility found that more than 70% of researchers had tried and failed to reproduce another scientist's experimental results (including 87% of chemists, 77% of biologists, 69% of physicists and engineers, 67% of medical researchers, 64% of earth and environmental scientists, and 62% of all others), and more than half had failed to reproduce their own experiments. But fewer than 20% had been contacted by another researcher unable to reproduce their work. The survey found that fewer than 31% of researchers believe that failure to reproduce results means that the original result is probably wrong, although 52% agree that a significant replication crisis exists. Most researchers said they still trust the published literature.

Early analysis of result-blind peer review, which is less affected by publication bias, has estimated that 61% of result-blind studies in biomedicine and psychology have led to null results, in contrast to an estimated 5% to 20% in earlier research.

Causes

The replication crisis may be triggered by the "generation of new data and scientific publications at an unprecedented rate" that leads to the "desperation to publish or perish" and a failure to adhere to good scientific practice.

Historical and sociological roots

Predictions of an impending crisis in the quality control mechanism of science can be traced back several decades.

Derek de Solla Price, considered the father of scientometrics (the quantitative study of science), predicted that science could reach "senility" as a result of its own exponential growth.

Some present-day literature seems to vindicate this "overflow" prophecy, lamenting the decay in both attention and quality.

Historian Philip Mirowski offers another reading of the crisis in his 2011 book ''Science-Mart: Privatizing American Science''. Mirowski uses the word ''Mart'' as a metaphor for the commodification of science. In his analysis, the quality of science collapses when it becomes a commodity being traded in a market. He argues his case by tracing the decay of science to the decision of major corporations to close their in-house laboratories. They outsourced their work to universities in an effort to reduce costs and increase profits. The corporations subsequently moved their research away from universities to an even cheaper option: contract research organizations (CROs).

Social systems theory, as expounded in the work of German sociologist Niklas Luhmann, inspires a similar diagnosis. This theory holds that each system, such as the economy, science, religion or media, communicates using its own code: ''true'' and ''false'' for science, ''profit'' and ''loss'' for the economy, ''news'' and ''no-news'' for the media, and so on. According to some sociologists, science's mediatization, its commodification and its politicization, as a result of the structural coupling among systems, have led to a confusion of the original system codes. If science's code of ''true'' and ''false'' is substituted with those of the other systems, such as ''profit'' and ''loss'' or ''news'' and ''no-news'', science enters into an internal crisis.

Economist Noah Smith suggests that a factor in the crisis has been the overvaluing of research in academia and undervaluing of teaching ability, especially in fields with few major recent discoveries.

Publish-or-perish culture in academia

Philosopher and historian of science Jerome R. Ravetz predicted in his 1971 book ''Scientific Knowledge and Its Social Problems'' that science, in its progression from "little" science composed of isolated communities of researchers to "big" science or "techno-science", would suffer major problems in its internal system of quality control. He recognized that the incentive structure for modern scientists could become dysfunctional, a problem now known as the publish-or-perish challenge, creating perverse incentives to publish any findings, however dubious. According to Ravetz, quality in science is maintained only when there is a community of scholars, linked by a set of shared norms and standards, who are willing and able to hold each other accountable.

Philosopher Brian D. Earp and psychologist Jim A. C. Everett argue that, although replication is in the best interests of academics and researchers as a group, features of academic psychological culture discourage replication by individual researchers. They argue that performing replications can be time-consuming and can take resources away from projects that reflect the researcher's original thinking. Replications are also harder to publish, largely because they are unoriginal, and even when they can be published they are unlikely to be viewed as major contributions to the field. Ultimately, replications "bring less recognition and reward, including grant money, to their authors".

A major cause of low reproducibility is publication bias, stemming from the fact that statistically non-significant results and seemingly unoriginal replications are rarely published. Only a very small proportion of academic journals in psychology and neurosciences explicitly welcomed submissions of replication studies in their aims and scope or instructions to authors. This does not encourage reporting on, or even attempts to perform, replication studies. Among 1,576 researchers surveyed by ''Nature'' in 2016, only a minority had ever attempted to publish a replication, and several respondents who had published failed replications noted that editors and reviewers demanded that they play down comparisons with the original studies.
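Publication bias also distorts the size of the effects that do get published. The following hypothetical Python simulation assumes an idealized extreme in which only results with p < 0.05 are published. Every simulated study estimates the same true standardized effect (0.3, with 20 participants per group, both assumed values), a normal approximation with known unit variance stands in for a real test, and the function name is illustrative.

```python
import math
import random
from statistics import fmean

def simulate_literature(true_d=0.3, n_per_group=20, studies=20_000, seed=7):
    """Simulate many two-group studies of one true standardized effect and return
    (mean estimate over all studies, mean estimate over 'published' studies,
    share published), assuming only p < 0.05 results are published."""
    rng = random.Random(seed)
    se = math.sqrt(2 / n_per_group)  # SE of the mean difference when both groups have sd = 1
    all_estimates, published = [], []
    for _ in range(studies):
        d_hat = rng.gauss(true_d, se)  # this study's estimate of the effect
        all_estimates.append(d_hat)
        if abs(d_hat / se) > 1.96:     # two-sided z-test at alpha = 0.05
            published.append(d_hat)
    return fmean(all_estimates), fmean(published), len(published) / studies

mean_all, mean_published, share = simulate_literature()
print(f"all: {mean_all:.2f}, published: {mean_published:.2f}, share published: {share:.0%}")
```

The average across all simulated studies sits close to the true value, while the average of the "published" subset is far larger, because only the studies that overestimated the effect by chance cleared the threshold. A faithful replication then tends to find a smaller effect, even though neither study was conducted improperly.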

An analysis of 4,270 empirical studies in 18 business journals from 1970 to 1991 reported that less than 10% of accounting, economics, and finance articles and 5% of management and marketing articles were replication studies.

Publication bias is augmented by the pressure to publish and the author's own confirmation bias, and is an inherent hazard in the field, requiring a certain degree of skepticism on the part of readers.

Certain publishing practices also make it difficult to conduct replications and to monitor the severity of the reproducibility crisis, for oftentimes the articles do not come with sufficient descriptions for other scholars to reproduce the study. The Reproducibility Project: Cancer Biology showed that of 193 experiments from 53 top papers about cancer published between 2010 and 2012, only 50 experiments from 23 papers had authors who provided enough information for researchers to redo the studies, sometimes with modifications. None of the 193 experiments examined had its experimental protocol fully described, and replicating 70% of experiments required asking for key reagents.

The aforementioned study of empirical findings in the ''Strategic Management Journal'' found that 70% of 88 articles could not be replicated due to a lack of sufficient information about data or procedures.

In water resources and management, most of 1,989 articles published in 2017 were not replicable because of a lack of available information shared online.

Questionable research practices and fraud

Questionable research practices (QRPs) are intentional behaviors which capitalize on the gray area of acceptable scientific behavior or exploit the researcher degrees of freedom (researcher DF), which can contribute to the irreproducibility of results. Researcher DF are seen in hypothesis formulation, design of experiments, data collection and analysis, and reporting of research.

Some examples of QRPs are data dredging and HARKing (hypothesising after results are known).
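A simple form of data dredging is measuring several outcomes and reporting whichever one reaches significance. If k outcomes are independent and none has a real effect, the chance that at least one is significant at α = 0.05 is 1 - (1 - α)^k. The Python sketch below computes that figure and checks it by simulation; the independence assumption and the function names are illustrative, and correlated outcomes in real studies would change the exact numbers.

```python
import random
from statistics import NormalDist

ALPHA = 0.05
CRITICAL_Z = NormalDist().inv_cdf(1 - ALPHA / 2)  # about 1.96

def analytic_rate(k, alpha=ALPHA):
    """Chance that at least one of k independent true-null tests is significant."""
    return 1 - (1 - alpha) ** k

def simulated_rate(k, trials=20_000, seed=3):
    """Monte Carlo check: each simulated study tests k independent outcomes with
    no real effect and 'succeeds' if any outcome reaches p < ALPHA."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(trials):
        # Under the null hypothesis each z-statistic is standard normal.
        if any(abs(rng.gauss(0.0, 1.0)) > CRITICAL_Z for _ in range(k)):
            successes += 1
    return successes / trials

for k in (1, 5, 20):
    print(k, round(analytic_rate(k), 3), simulated_rate(k))
```

With five outcomes the chance of at least one false positive is already about 23%, and with twenty it is about 64%, although no single test was run incorrectly.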

In medicine, irreproducible studies have been reported to share six features: investigators not being blinded to the experimental versus the control arms, a failure to repeat experiments, a lack of positive and negative controls, failing to report all the data, inappropriate use of statistical tests, and use of reagents that were not appropriately validated.

QRPs do not include more explicit violations of scientific integrity, such as data falsification.

Fraudulent research does occur, as in the cases of scientific fraud by social psychologist Diederik Stapel, cognitive psychologist Marc Hauser and social psychologist Lawrence Sanna.

Despite these scandals, scientific fraud appears to be uncommon.

In 2009, a meta-analysis found that 2% of scientists across fields admitted to falsifying studies at least once and 14% admitted to personally knowing someone who did. Such misconduct was, according to one study, reported more frequently by medical researchers than by others.

According to biotechnology researcher J. Leslie Glick's estimate in 1992, about 10 to 20% of research and development studies involved either QRPs or outright fraud. A 2012 survey of over 2,000 psychologists indicated that about 94% of respondents admitted to using at least one QRP or engaging in fraud, although the methodology of this survey and its results have been called into question.

Statistical issues

According to a 2018 analysis of 200 meta-analyses, "psychological research is, on average, afflicted with low statistical power", meaning that most studies do not have a high probability of detecting an effect when one exists. Findings from original studies with low power will often fail to replicate, and replication studies with low power are susceptible to false negatives.

Low statistical power is a substantial contributor to the replication crisis.
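Power can be approximated for a simple design. The following Python sketch assumes a two-group comparison analyzed with a two-sided z-test on a standardized mean difference d (Cohen's d); this normal approximation slightly overstates power for small samples compared with a t-test. The function names and the example effect size are illustrative.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def power_two_sample(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test for effect size d
    with n_per_group participants in each group."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * math.sqrt(n_per_group / 2)  # expected z-statistic if the effect is real
    # Probability that |Z| exceeds the critical value when Z ~ N(shift, 1).
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)

def n_per_group_for_power(d, power=0.80, alpha=0.05):
    """Smallest per-group n giving roughly the requested power (normal approximation)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_crit + z_power) / d) ** 2)

for n in (20, 50, 100):
    print(f"d = 0.5, n = {n} per group: power = {power_two_sample(0.5, n):.2f}")
print("n per group for 80% power at d = 0.5:", n_per_group_for_power(0.5))
```

Under these assumptions, a study with 20 participants per group has only about a 35% chance of detecting a medium effect of d = 0.5, and roughly 63 participants per group are needed for 80% power.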

Within economics, the replication crisis may be exacerbated because econometric results are fragile: using different but plausible estimation procedures or data preprocessing techniques can lead to conflicting results.

Base rate of hypothesis accuracy

Philosopher Alexander Bird argues that high rates of failed replications can be consistent with quality science. He argues that this depends on the base rate of hypotheses: a field with a high rate of incorrect hypotheses would see a high rate of failed replications. Given the parameters of statistical testing, 5% of studies testing incorrect hypotheses would be significant (false positives). If there are almost no correct hypotheses (true positives), then the false positive findings would outnumber the true positives. When these results are retested, about 95% of the false positives would fail to reach significance again, resulting in a high number of failed replications.
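Bird's argument can be made quantitative with simple expected-value arithmetic. The following Python sketch assumes α = 0.05 and, as an illustrative value not taken from Bird, that both original studies and exact replications have 80% power. It computes what share of significant findings are true and what share would be expected to replicate for several base rates of correct hypotheses; the function name is hypothetical.

```python
def replication_profile(base_rate, alpha=0.05, power=0.80):
    """Return (share of significant findings that are true, share of significant
    findings expected to replicate) when a fraction base_rate of tested
    hypotheses are correct."""
    true_pos = base_rate * power         # correct hypotheses found significant
    false_pos = (1 - base_rate) * alpha  # incorrect hypotheses found significant
    significant = true_pos + false_pos
    # A replication of a true effect succeeds with probability `power`;
    # a replication of a false positive succeeds only by chance, with probability `alpha`.
    replicated = true_pos * power + false_pos * alpha
    return true_pos / significant, replicated / significant

for base_rate in (0.5, 0.1, 0.01):
    ppv, rep = replication_profile(base_rate)
    print(f"base rate {base_rate:>4}: {ppv:.0%} of significant findings true, {rep:.0%} replicate")
```

Under these assumptions, about 76% of significant findings are expected to replicate when half of the tested hypotheses are correct, but only about 15% when 1% are correct, even though every individual study is performed properly.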

Consequences

When effects are wrongly stated as relevant in the literature, failure to detect this by replication will lead to the canonization of such false facts.

A 2021 study found that papers in leading general interest, psychology and economics journals with findings that could not be replicated tend to be cited more over time than reproducible research papers, likely because these results are surprising or interesting. The trend is not affected by publication of failed reproductions, after which only 12% of papers that cite the original research will mention the failed replication.

Further, experts are able to predict which studies will be replicable, leading the authors of the 2021 study, Marta Serra-Garcia and Uri Gneezy, to conclude that experts apply lower standards to interesting results when deciding whether to publish them.

Political repercussions

The crisis of science's quality control system is affecting the use of science for policy. This is the thesis of a recent work by a group of science and technology studies scholars, who identify "evidence based (or informed) policy" as a point of present tension.

In the US, science's reproducibility crisis has become a topic of political contention, linked to attempts to weaken regulations, e.g. of pollutant emissions, on the argument that these regulations are based on non-reproducible science.

Previous attempts with the same aim accused studies used by regulators of being non-transparent.

Public awareness and perceptions

Concerns have been expressed within the scientific community that the general public may consider science less credible due to failed replications. Research supporting this concern is sparse, but a nationally representative survey in Germany showed that more than 75% of Germans have not heard of replication failures in science.

The study also found that most Germans have positive perceptions of replication efforts: only 18% think that non-replicability shows that science cannot be trusted, while 65% think that replication research shows that science applies quality control, and 80% agree that errors and corrections are part of science.

Response in academia

With the replication crisis of psychology earning attention, Princeton University psychologist Susan Fiske drew controversy for speaking against critics of psychology for what she called bullying and undermining the science. She called these unidentified "adversaries" names such as "methodological terrorist" and "self-appointed data police", saying that criticism of psychology should be expressed only in private or by contacting the journals.

Columbia University statistician and political scientist Andrew Gelman responded to Fiske, saying that she had found herself willing to tolerate the "dead paradigm" of faulty statistics and had refused to retract publications even when errors were pointed out. He added that her tenure as editor had been abysmal and that a number of published papers she edited were found to be based on extremely weak statistics; one of Fiske's own published papers had a major statistical error and "impossible" conclusions.

Remedies

Focus on the replication crisis has led to renewed efforts in psychology to retest important findings.

A 2013 special edition of the journal ''Social Psychology'' focused on replication studies.

Standardization, as well as requiring transparency about the statistical and experimental methods used, has been proposed. Careful documentation of the experimental set-up is considered crucial for the replicability of experiments, and various variables, such as the diets of animals in animal studies, may go undocumented and unstandardized.

A 2016 article by John Ioannidis elaborated on "Why Most Clinical Research Is Not Useful". Ioannidis describes what he views as some of the problems and calls for reform, setting out criteria for medical research to become useful again; one example he gives is the need for medicine to be patient-centered (e.g. in the form of the Patient-Centered Outcomes Research Institute) rather than, as in current practice, mainly serving "the needs of physicians, investigators, or sponsors".

Reform in scientific publishing

Metascience

Metascience is the use of scientific methodology to study science itself. It seeks to increase the quality of scientific research while reducing waste. It is also known as "research on research" and "the science of science", as it uses research methods to study how research is done and where improvements can be made. Metascience is concerned with all fields of research and has been called "a bird's eye view of science." In Ioannidis's words, "Science is the best thing that has happened to human beings ... but we can do it better."

Meta-research continues to be conducted to identify the roots of the crisis and to address them. Methods of addressing the crisis include pre-registration of scientific studies and clinical trials, as well as the founding of organizations such as CONSORT and the EQUATOR Network that issue guidelines for methodology and reporting. Efforts continue to reform the system of academic incentives, improve the peer review process, reduce the misuse of statistics, combat bias in scientific literature, and increase the overall quality and efficiency of the scientific process.

Presentation of methodology

Some authors have argued that insufficient communication of experimental methods is a major contributor to the reproducibility crisis and that better reporting of experimental design and statistical analyses would improve the situation. These authors tend to call for both a broad cultural change in how the scientific community regards statistics and a more coercive push from scientific journals and funding bodies. However, concerns have been raised that standards for transparency and replication may be misapplied to qualitative as well as quantitative studies.

Business and management journals that have introduced editorial policies on data accessibility, replication, and transparency include the ''Strategic Management Journal'', the ''Journal of International Business Studies'', and the ''Management and Organization Review''.

Result-blind peer review

In response to concerns in psychology about publication bias and data dredging, more than 140 psychology journals have adopted result-blind peer review. In this approach, studies are accepted not after they are completed and on the basis of their findings, but before they are conducted, on the basis of the methodological rigor of their experimental designs and the theoretical justifications for their statistical analysis techniques. Early analysis of this procedure has estimated that 61% of result-blind studies have led to null results, in contrast to an estimated 5% to 20% in earlier research.

In addition, large-scale collaborations between researchers working in multiple labs in different countries that regularly make their data openly available for different researchers to assess have become much more common in psychology.

Pre-registration of studies

Scientific publishing has begun using pre-registration reports to address the replication crisis. The registered report format requires authors to submit a description of the study methods and analyses prior to data collection. Once the method and analysis plan has been vetted through peer review, publication of the findings is provisionally guaranteed, provided that the authors follow the proposed protocol. One goal of registered reports is to circumvent the publication bias toward significant findings that can lead to the use of questionable research practices. Another is to encourage publication of studies with rigorous methods.

The journal ''Psychological Science'' has encouraged the preregistration of studies and the reporting of effect sizes and confidence intervals. The editor in chief also noted that the editorial staff will ask for replication of studies with surprising findings from small samples before allowing the manuscripts to be published.

Metadata and digital tools for tracking replications

It has been suggested that "a simple way to check how often studies have been repeated, and whether or not the original findings are confirmed" is needed.

Categorizations and ratings of reproducibility at the study or results level, as well as addition of links to and rating of third-party confirmations, could be conducted by the peer-reviewers, the scientific journal, or by readers in combination with novel digital platforms or tools.
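One way to picture such a tool is as a record that links a published finding to third-party replication attempts and derives a simple status from them. The sketch below is hypothetical throughout: the class names, fields, outcome categories and the majority rule in `status` are illustrative assumptions, not an existing platform's schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    # Hypothetical rating categories for a replication attempt
    CONFIRMED = "confirmed"
    PARTIAL = "partially confirmed"
    NOT_CONFIRMED = "not confirmed"


@dataclass
class ReplicationAttempt:
    identifier: str    # hypothetical: e.g. a DOI or URL of the replication report
    outcome: Outcome
    independent: bool  # run by a team other than the original authors?


@dataclass
class FindingRecord:
    identifier: str    # hypothetical identifier of the original finding
    claim: str
    attempts: list[ReplicationAttempt] = field(default_factory=list)

    def add_attempt(self, attempt: ReplicationAttempt) -> None:
        """Link a third-party replication attempt to this finding."""
        self.attempts.append(attempt)

    def summary(self) -> dict[str, int]:
        """Count attempts per outcome category."""
        counts = {outcome.value: 0 for outcome in Outcome}
        for attempt in self.attempts:
            counts[attempt.outcome.value] += 1
        return counts

    def status(self) -> str:
        """Illustrative rule: a majority of independent attempts must confirm."""
        independent = [a for a in self.attempts if a.independent]
        if not independent:
            return "unreplicated"
        confirmed = sum(a.outcome is Outcome.CONFIRMED for a in independent)
        return "replicated" if confirmed > len(independent) / 2 else "contested"
```

A real platform would need to decide who assigns the outcome ratings (peer reviewers, the journal, or readers) and how partial confirmations are weighted; the majority rule here only shows where such a decision would live.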

Statistical reform

Requiring smaller ''p''-values

Many publications require a ''p''-value of ''p'' < 0.05 to claim statistical significance. The paper "Redefine statistical significance", signed by a large number of scientists and mathematicians, proposes that in "fields where the threshold for defining statistical significance for new discoveries is ''p'' < 0.05, we propose a change to ''p'' < 0.005. This simple step would immediately improve the reproducibility of scientific research in many fields." Their rationale is that "a leading cause of non-reproducibility (is that the) statistical standards of evidence for claiming new discoveries in many fields of science are simply too low. Associating 'statistically significant' findings with ''p'' < 0.05 results in a high rate of false positives even in the absence of other experimental, procedural and reporting problems."

This call was subsequently criticised by another large group, who argued that "redefining" the threshold would not fix current problems, would lead to some new ones, and that in the end, all thresholds needed to be justified case-by-case instead of following general conventions.
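One practical consequence of a stricter threshold is that, to keep the same power, studies need larger samples. The sketch below uses the standard normal-approximation formula for a two-sided, two-sample comparison; the effect size of 0.5 and the target power of 0.8 are illustrative assumptions.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float, power: float = 0.8) -> int:
    """Approximate per-group sample size for a two-sided, two-sample z-test.

    Uses n = 2 * ((z_{1-alpha/2} + z_{power}) / d)^2, rounded up.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


if __name__ == "__main__":
    n_05 = n_per_group(0.5, 0.05)
    n_005 = n_per_group(0.5, 0.005)
    print(n_05, n_005, f"ratio {n_005 / n_05:.2f}")
```

Under these assumptions, moving from ''p'' < 0.05 to ''p'' < 0.005 raises the required sample from 63 to 107 per group, an increase of roughly 70%, which is part of the cost weighed in the debate over a universal threshold.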

Addressing misinterpretation of ''p''-values

Although statisticians are unanimous that the use of "''p'' < 0.05" as a standard for significance provides weaker evidence than is generally appreciated, there is a lack of unanimity about what should be done about it. Some have advocated that Bayesian methods should replace ''p''-values. This has not happened on a wide scale, partly because it is complicated and partly because many users distrust the specification of prior distributions in the absence of hard data. A simplified version of the Bayesian argument, based on testing a point null hypothesis, was suggested by pharmacologist David Colquhoun.

The logical problems of inductive inference were discussed in "The Problem with p-values" (2016).

The hazards of relying on ''p''-values arise partly because even an observation of ''p'' = 0.001 is not necessarily strong evidence against the null hypothesis. Even though the likelihood ratio in favor of the alternative hypothesis over the null is close to 100, if the hypothesis was implausible, with a prior probability of a real effect of 0.1, the observation of ''p'' = 0.001 would still have a false positive risk of 8 percent, which exceeds the 5 percent level.
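The 8 percent figure follows from Bayes' theorem in odds form: posterior odds equal the likelihood ratio times the prior odds. The sketch below takes the likelihood ratio as given (about 100, as in the text) rather than deriving it from the observed ''p''-value, which Colquhoun's own calculations do for a specific test; the function name is illustrative.

```python
def false_positive_risk(likelihood_ratio: float, prior: float) -> float:
    """Probability that an observed 'significant' result is a false positive.

    likelihood_ratio: evidence for the alternative over the null hypothesis
    for the observed result (taken as given here).
    prior: prior probability that a real effect exists.
    """
    prior_odds = prior / (1 - prior)
    # Bayes' theorem in odds form
    posterior_odds = likelihood_ratio * prior_odds
    # Posterior probability of the null hypothesis
    return 1 / (1 + posterior_odds)


if __name__ == "__main__":
    print(f"{false_positive_risk(100, 0.1):.3f}")  # implausible hypothesis
    print(f"{false_positive_risk(100, 0.5):.3f}")  # 50:50 prior
```

With a prior of 0.1 the prior odds are 1:9, the posterior odds become 100:9, and the false positive risk is 9/109, or about 0.083. With a 50:50 prior the same evidence leaves a risk of about 1 percent, which shows how strongly the conclusion depends on plausibility.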

It was recommended that the terms "significant" and "non-significant" should not be used.

''p''-values and confidence intervals should still be specified, but they should be accompanied by an indication of the false-positive risk. It was suggested that the best way to do this is to calculate the prior probability that one would need to believe in order to achieve a false positive risk of a certain level, such as 5%. The calculations can be done with various software packages.

This reverse Bayesian approach, which physicist Robert Matthews suggested in 2001, is one way to avoid the problem that the prior probability is rarely known.
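The reverse calculation solves the false-positive-risk formula for the prior instead of assuming one. The sketch below assumes, as in the example above, that the likelihood ratio for the observed result is known; the function name and the 5% default target are illustrative.

```python
def prior_needed(likelihood_ratio: float, target_fpr: float = 0.05) -> float:
    """Smallest prior probability of a real effect that keeps the false
    positive risk at or below target_fpr, given the likelihood ratio.

    Solves FPR = (1 - p) / ((1 - p) + LR * p) for p.
    """
    return (1 - target_fpr) / (1 - target_fpr + target_fpr * likelihood_ratio)


if __name__ == "__main__":
    print(f"{prior_needed(100):.3f}")
```

With a likelihood ratio of 100, one would need to believe beforehand that there was roughly a 16% chance of a real effect for the result to carry a false positive risk of 5%. Reporting this number lets readers judge whether such a prior is reasonable, without the authors having to claim to know it.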

Encouraging larger sample sizes

To improve the quality of replications, larger sample sizes than those used in the original study are often needed. Larger sample sizes are needed because estimates of effect sizes in published work are often exaggerated due to publication bias and the large sampling variability associated with small sample sizes in an original study.

Further, using significance thresholds usually leads to inflated effects, because, particularly with small sample sizes, only the largest effects will become significant.
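This inflation can be seen in a small simulation. The sketch below assumes a hypothetical true standardized effect of 0.2, normally distributed outcomes with a known standard deviation of 1, and a two-sided test at ''p'' < 0.05; each study's estimate is drawn directly from its sampling distribution rather than from simulated participants.

```python
import random
import statistics


def simulate_significance_filter(true_effect: float = 0.2,
                                 n_per_group: int = 20,
                                 n_studies: int = 20_000,
                                 seed: int = 1) -> tuple[float, float, float]:
    """Simulate many identical two-group studies of the same true effect.

    Returns (mean of all estimates, mean of the significant estimates,
    fraction of studies reaching p < 0.05). Raises StatisticsError if no
    study reaches significance.
    """
    rng = random.Random(seed)
    # Standard error of a difference in means when each group has SD 1
    se = (2 / n_per_group) ** 0.5
    z_crit = 1.96  # two-sided alpha = 0.05
    all_estimates = []
    significant = []
    for _ in range(n_studies):
        # Draw the study's estimated effect from its sampling distribution
        estimate = rng.gauss(true_effect, se)
        all_estimates.append(estimate)
        if abs(estimate) / se > z_crit:
            significant.append(estimate)
    return (statistics.mean(all_estimates),
            statistics.mean(significant),
            len(significant) / n_studies)


if __name__ == "__main__":
    for n in (20, 200):
        mean_all, mean_sig, frac = simulate_significance_filter(n_per_group=n)
        print(f"n = {n}: all {mean_all:.2f}, significant only {mean_sig:.2f}, "
              f"share significant {frac:.2f}")
```

Averaged over all studies the estimate is close to the true 0.2, but the studies that pass the threshold with 20 participants per group report an effect several times larger. With 200 per group the inflation shrinks considerably, which is why replications sized on the published effect tend to be underpowered.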

Replication efforts

Funding

In July 2016, the Netherlands Organisation for Scientific Research made €3 million available for replication studies. The funding is for replication based on reanalysis of existing data and replication by collecting and analysing new data. Funding is available in the areas of social sciences, health research and healthcare innovation.

In 2013, the Laura and John Arnold Foundation funded the launch of The Center for Open Science with a $5.25 million grant. By 2017, it had provided an additional $10 million in funding.

It also funded the launch of the Meta-Research Innovation Center at Stanford (METRICS), run by Ioannidis and medical scientist Steven Goodman, to study ways to improve scientific research.

It also provided funding for the AllTrials initiative, led in part by medical scientist Ben Goldacre.

Emphasis in post-secondary education

Based on coursework in experimental methods at MIT, Stanford, and the University of Washington, it has been suggested that methods courses in psychology and other fields should emphasize replication attempts rather than original studies. Such an approach would help students learn scientific methodology and would provide numerous independent tests of the replicability of meaningful scientific findings. Some have recommended that graduate students should be required to publish a high-quality replication attempt on a topic related to their doctoral research prior to graduation.

Final year thesis

Some institutions require undergraduate students to submit a final year thesis that consists of an original piece of research. Daniel Quintana, a psychologist at the University of Oslo in Norway, has recommended that students should be encouraged to perform replication studies in thesis projects, as well as being taught about open science.

Semi-automated

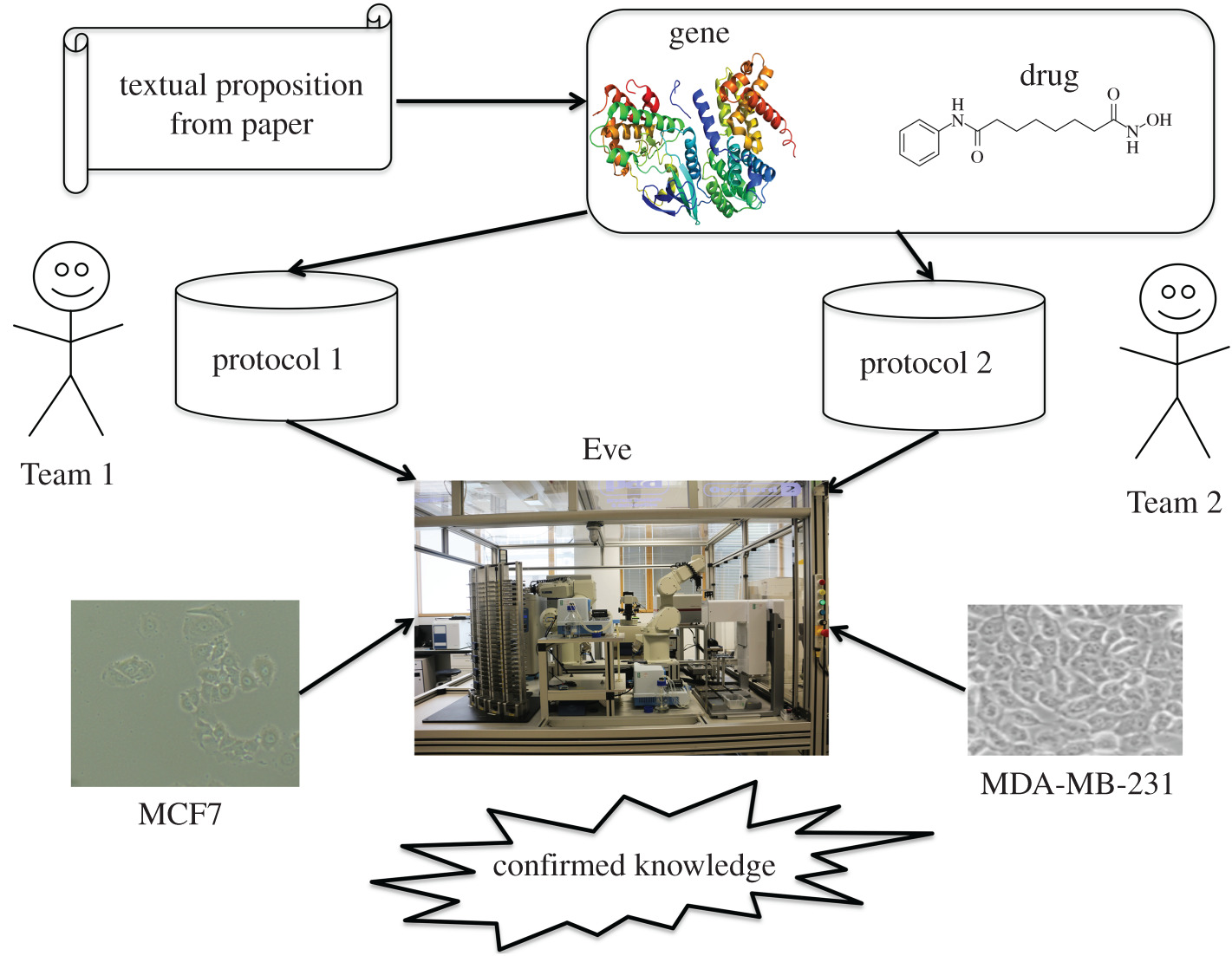

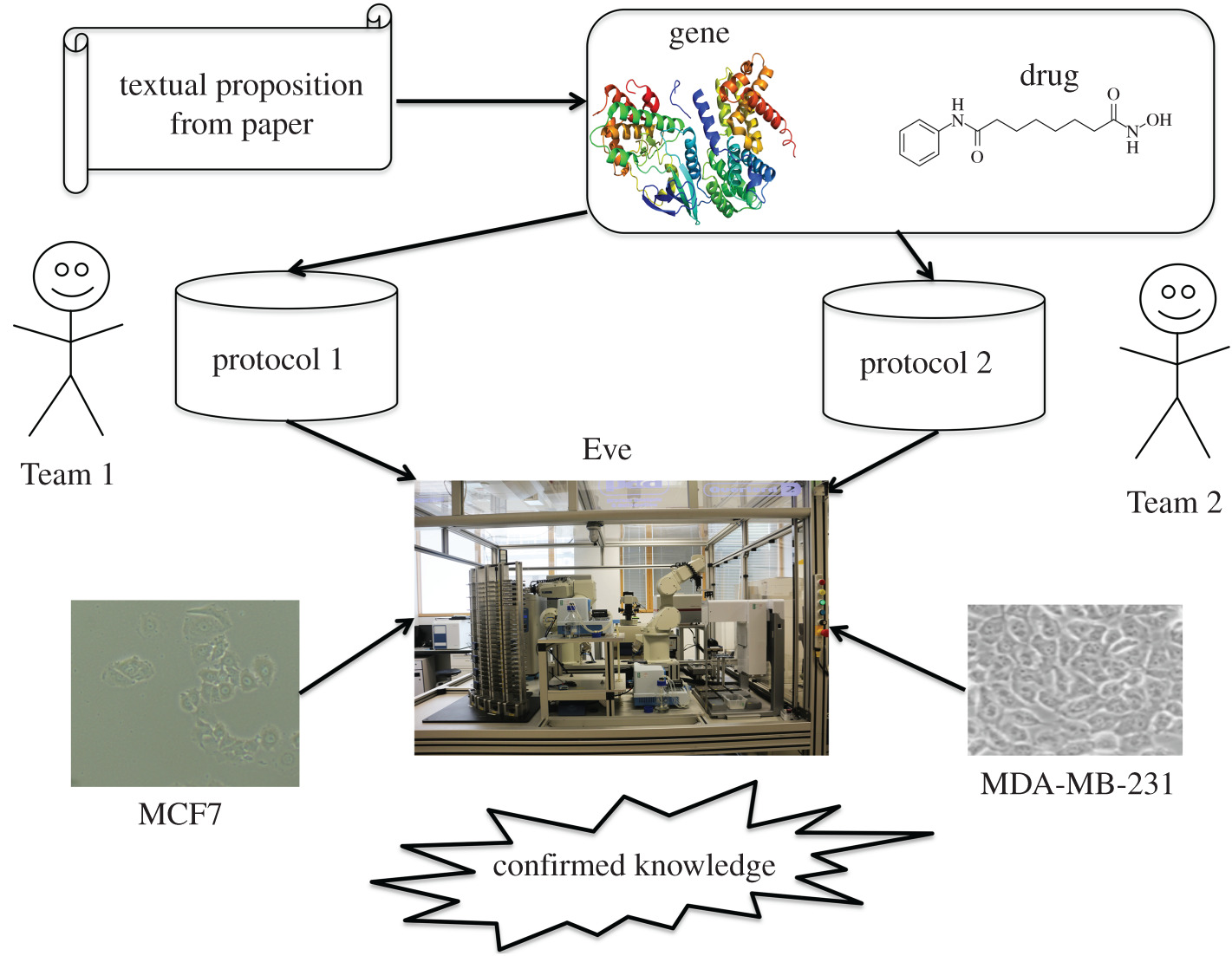


Researchers demonstrated a way of semi-automated testing for reproducibility: statements about experimental results were extracted from gene expression cancer research papers (which, as of 2022, were non-semantic) and subsequently reproduced via the robot scientist "Eve". Problems with this approach include that it may not be feasible for many areas of research and that sufficient experimental data may not be extractable from some or many papers, even if available.

Involving original authors

Psychologist Daniel Kahneman argued that, in psychology, the original authors should be involved in the replication effort because the published methods are often too vague.

Broader changes to scientific approach

Emphasize triangulation, not just replication

Psychologist Marcus R. Munafò and epidemiologist George Davey Smith argue, in a piece published by ''Nature'', that research should emphasize triangulation, not just replication, to protect against flawed ideas. They claim that,

Complex systems paradigm

The dominant scientific and statistical model of causation is the linear model.

Replication should seek to revise theories

Replication is fundamental for scientific progress to confirm original findings. However, replication alone is not sufficient to resolve the replication crisis. Replication efforts should seek not just to support or question the original findings, but also to replace them with revised, stronger theories with greater explanatory power. This approach therefore involves pruning existing theories, comparing all the alternative theories, and making replication efforts more generative and engaged in theory-building. Beyond replication, assessing the extent to which results generalise across geographical, historical and social contexts is important for several scientific fields, especially for practitioners and policy makers who rely on such analyses to guide important strategic decisions. Reproducible and replicable findings were the best predictor of generalisability beyond historical and geographical contexts, indicating that, for the social sciences, results from a certain time period and place can meaningfully indicate what is universally present in individuals.

Open science


Open data, open source software and open source hardware are all critical to enabling reproducibility in the sense of validating the original data analysis. The use of proprietary software, the failure to publish analysis software and the lack of open data prevent the replication of studies. Unless software used in research is open source, reproducing results with different software and hardware configurations is impossible. CERN has both Open Data and CERN Analysis Preservation projects for storing data, all relevant information, and all software and tools needed to preserve an analysis at the large experiments of the LHC. Aside from all software and data, preserved analysis assets include metadata that enable understanding of the analysis workflow, related software, systematic uncertainties, statistics procedures and meaningful ways to search for the analysis, as well as references to publications and to backup material. CERN software is open source and available for use outside of particle physics, and some guidance is provided to other fields on the broad approaches and strategies used for open science in contemporary particle physics.

Online repositories for research data and findings include the Registry of Research Data Repositories and Psychfiledrawer.org. Sites like Open Science Framework offer badges for using open science practices in an effort to incentivize scientists. However, there have been concerns that the researchers most likely to provide their data and code for analyses are those who are likely already the most sophisticated.

See also

* Decline effect

* Estimation statistics

* Invalid science




