A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed which can perform over 10¹⁸ FLOPS, so-called exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
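To make the orders of magnitude above concrete, the following minimal sketch compares the quoted exascale threshold with the quoted desktop range. The function names and the workload size are illustrative assumptions, and the runtime estimate is idealized: it assumes a machine sustains its full rate, which real workloads rarely do.

```python
# Orders of magnitude quoted in the text, in FLOPS.
EXASCALE = 10**18       # exascale threshold
DESKTOP_LOW = 10**11    # hundreds of gigaFLOPS
DESKTOP_HIGH = 10**13   # tens of teraFLOPS


def speedup(fast_flops, slow_flops):
    """Ratio of operations per second between two machines."""
    return fast_flops / slow_flops


def seconds_to_run(total_operations, flops):
    """Idealized runtime, assuming the machine sustains its full rate."""
    return total_operations / flops


if __name__ == "__main__":
    # An exascale system versus the top and bottom of the desktop range.
    print(speedup(EXASCALE, DESKTOP_HIGH))
    print(speedup(EXASCALE, DESKTOP_LOW))
    # Hypothetical workload: one second of exascale work (10^18 operations)
    # run on the fastest desktop in the quoted range, expressed in hours.
    print(seconds_to_run(10**18, DESKTOP_HIGH) / 3600)
```

Under these assumptions, one second of work on an exascale machine corresponds to more than a day on the fastest desktop in the quoted range.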

Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, including quantum mechanics, weather forecasting, climate research, oil and gas exploration, molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), and physical simulations (such as simulations of the early moments of the universe, airplane and spacecraft aerodynamics, the detonation of nuclear weapons, and nuclear fusion). They have been essential in the field of cryptanalysis.

Supercomputers were introduced in the 1960s, and for several decades the fastest was made by Seymour Cray at Control Data Corporation (CDC), Cray Research and subsequent companies bearing his name or monogram. The first such machines were highly tuned conventional designs that ran more quickly than their more general-purpose contemporaries. Through the decade, increasing amounts of parallelism were added, with one to four processors being typical. In the 1970s, vector processors operating on large arrays of data came to dominate. A notable example is the highly successful Cray-1 of 1976. Vector computers remained the dominant design into the 1990s. From then until today, massively parallel supercomputers with tens of thousands of off-the-shelf processors have been the norm.

The U.S. has long been a leader in the supercomputer field, initially through Cray's nearly uninterrupted dominance, and later through a variety of technology companies. Japan made significant advancements in the field during the 1980s and 1990s, while China has become increasingly active in supercomputing in recent years. Lawrence Livermore National Laboratory's El Capitan is the world's fastest supercomputer. The US has five of the top 10; Italy has two, and Japan, Finland, and Switzerland have one each. In June 2018, all combined supercomputers on the TOP500 list broke the 1 exaFLOPS mark.

History

In 1960, UNIVAC built the Livermore Atomic Research Computer (LARC), today considered among the first supercomputers, for the US Navy Research and Development Center. It still used high-speed drum memory, rather than the newly emerging disk drive technology. Also among the first supercomputers was the IBM 7030 Stretch. The IBM 7030 was built by IBM for the Los Alamos National Laboratory, which in 1955 had requested a computer 100 times faster than any existing computer. The IBM 7030 used transistors, magnetic core memory, pipelined instructions, and data prefetched through a memory controller, and included pioneering random access disk drives. The IBM 7030 was completed in 1961 and, despite not meeting the challenge of a hundredfold increase in performance, it was purchased by the Los Alamos National Laboratory. Customers in England and France also bought the computer, and it became the basis for the IBM 7950 Harvest, a supercomputer built for cryptanalysis.

The third pioneering supercomputer project in the early 1960s was the Atlas at the University of Manchester, built by a team led by Tom Kilburn. He designed the Atlas to have memory space for up to a million words of 48 bits, but because magnetic storage with such a capacity was unaffordable, the actual core memory of the Atlas was only 16,000 words, with a drum providing memory for a further 96,000 words. The Atlas Supervisor swapped data in the form of pages between the magnetic core and the drum. The Atlas operating system also introduced time-sharing to supercomputing, so that more than one program could be executed on the supercomputer at any one time. Atlas was a joint venture between Ferranti and Manchester University and was designed to operate at processing speeds approaching one microsecond per instruction, about one million instructions per second.

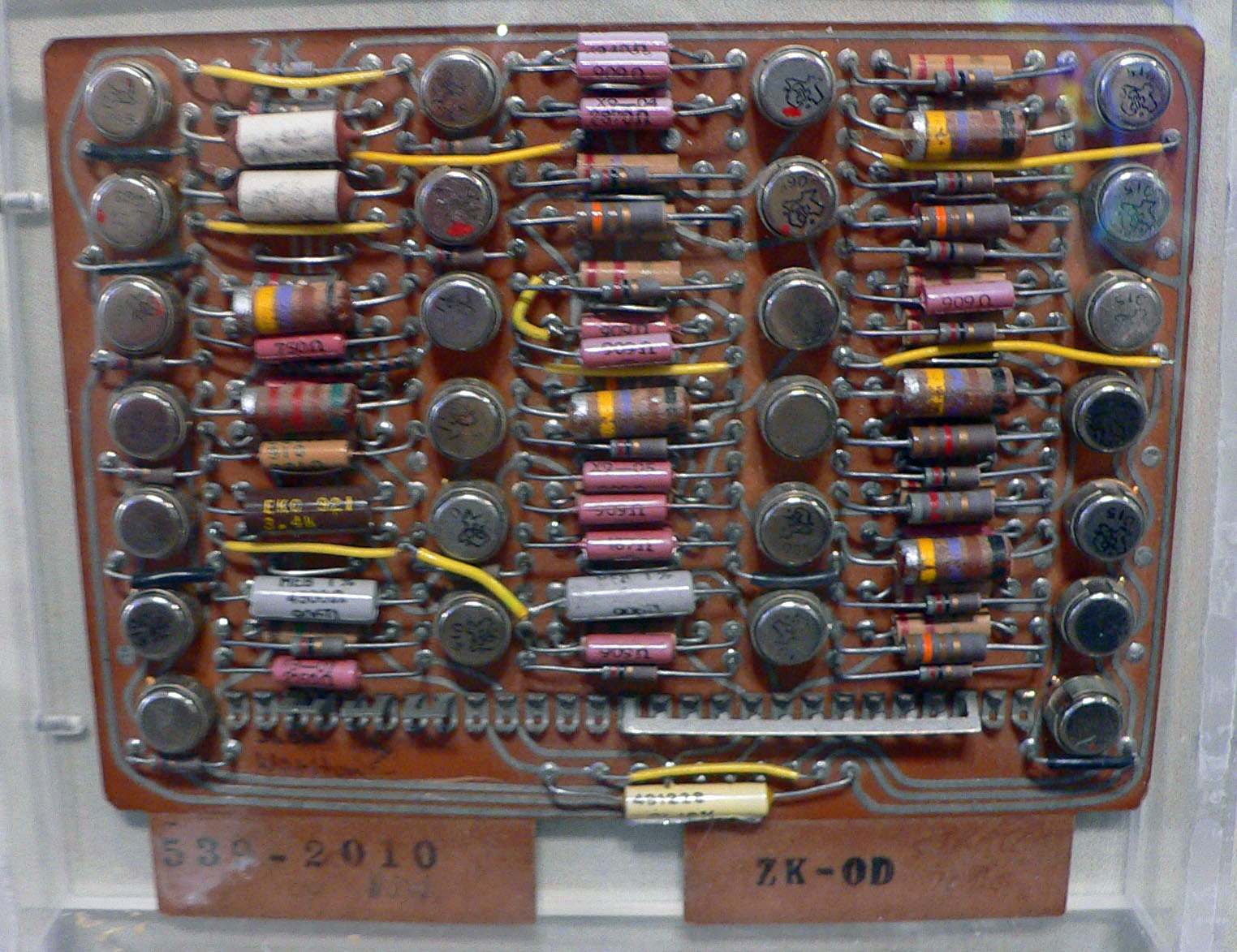

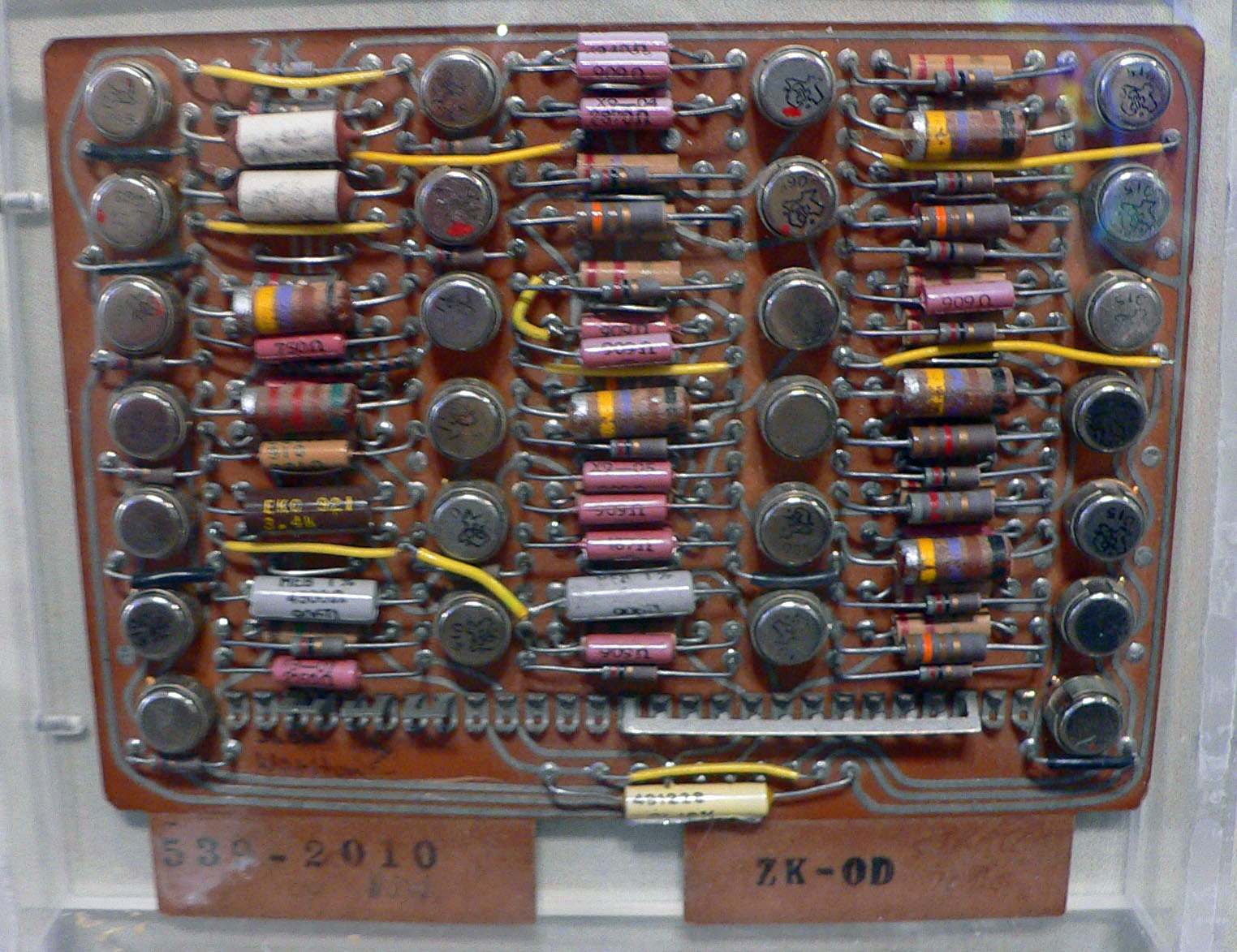

The CDC 6600, designed by Seymour Cray, was finished in 1964 and marked the transition from germanium to silicon transistors. Silicon transistors could run more quickly and the overheating problem was solved by introducing refrigeration to the supercomputer design. Thus, the CDC 6600 became the fastest computer in the world. Given that the 6600 outperformed all the other contemporary computers by about 10 times, it was dubbed a ''supercomputer'' and defined the supercomputing market, when one hundred computers were sold at $8 million each.

Cray left CDC in 1972 to form his own company, Cray Research. Four years after leaving CDC, Cray delivered the 80 MHz Cray-1 in 1976, which became one of the most successful supercomputers in history (''Readings in computer architecture'' by Mark Donald Hill, Norman Paul Jouppi, Gurindar Sohi, 1999, pages 41–48; ''Milestones in computer science and information technology'' by Edwin D. Reilly, 2003, page 65). The Cray-2 was released in 1985. It had eight central processing units (CPUs) and liquid cooling, with the electronics coolant liquid Fluorinert pumped through the supercomputer architecture. It reached 1.9 gigaFLOPS, making it the first supercomputer to break the gigaflop barrier.

Massively parallel designs

The only computer to seriously challenge the Cray-1's performance in the 1970s was the ILLIAC IV. This machine was the first realized example of a true massively parallel computer, in which many processors worked together to solve different parts of a single larger problem. In contrast with the vector systems, which were designed to run a single stream of data as quickly as possible, in this concept the computer instead feeds separate parts of the data to entirely different processors and then recombines the results. The ILLIAC's design was finalized in 1966 with 256 processors and offered speeds of up to 1 GFLOPS, compared to the 1970s Cray-1's peak of 250 MFLOPS. However, development problems led to only 64 processors being built, and the system could never operate more quickly than about 200 MFLOPS while being much larger and more complex than the Cray. Another problem was that writing software for the system was difficult, and getting peak performance from it was a matter of serious effort.
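The idea of feeding separate parts of the data to different processors and then recombining the results can be sketched in a few lines. This is only an illustration of the general pattern, not the ILLIAC IV's programming model: the function names are hypothetical, and a thread pool stands in for independent processors.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut data into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done independently by one worker on its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Distribute chunks to workers, then recombine the partial results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10)), workers=4))
```

The split and recombine steps are where much of the software difficulty mentioned above arises: the work must divide evenly, and the partial results must be combinable without changing the answer.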

But the partial success of the ILLIAC IV was widely seen as pointing the way to the future of supercomputing. Cray argued against this, famously quipping that "If you were plowing a field, which would you rather use? Two strong oxen or 1024 chickens?" But by the early 1980s, several teams were working on parallel designs with thousands of processors, notably the Connection Machine (CM) that developed from research at MIT. The CM-1 used as many as 65,536 simplified custom microprocessors connected together in a network to share data. Several updated versions followed; the CM-5 supercomputer is a massively parallel processing computer capable of many billions of arithmetic operations per second.

In 1982, Osaka University's LINKS-1 Computer Graphics System used a massively parallel processing architecture, with 514 microprocessors, including 257 Zilog Z8001 control processors and 257 iAPX 86/20 floating-point processors. It was mainly used for rendering realistic 3D computer graphics. Fujitsu's VPP500 from 1992 is unusual since, to achieve higher speeds, its processors used GaAs, a material normally reserved for microwave applications due to its toxicity. Fujitsu's Numerical Wind Tunnel supercomputer used 166 vector processors to gain the top spot in 1994 with a peak speed of 1.7 gigaFLOPS (GFLOPS) per processor. The Hitachi SR2201 obtained a peak performance of 600 GFLOPS in 1996 by using 2048 processors connected via a fast three-dimensional crossbar network. The Intel Paragon could have 1000 to 4000 Intel i860 processors in various configurations and was ranked the fastest in the world in 1993. The Paragon was a MIMD machine which connected processors via a high speed two-dimensional mesh, allowing processes to execute on separate nodes, communicating via the Message Passing Interface.
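The processor counts and peak figures quoted above are related by simple arithmetic. The sketch below works through it under one simplifying assumption: aggregate peak is taken to be the per-processor peak multiplied by the processor count, which ignores interconnect and memory limits. The function names are illustrative.

```python
def aggregate_peak_gflops(processors, gflops_per_processor):
    """Theoretical peak if every processor ran at its own peak at once."""
    return processors * gflops_per_processor


def per_processor_gflops(total_gflops, processors):
    """Average share of a machine's quoted peak per processor."""
    return total_gflops / processors


if __name__ == "__main__":
    # Numerical Wind Tunnel: 166 processors at 1.7 GFLOPS each.
    print(round(aggregate_peak_gflops(166, 1.7), 1))
    # Hitachi SR2201: 600 GFLOPS spread over 2048 processors.
    print(per_processor_gflops(600, 2048))
```

Read this way, the two machines reflect different design points: a modest number of fast vector processors versus a larger number of slower ones.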

Software development remained a problem, but the CM series sparked off considerable research into this issue. Similar designs using custom hardware were made by many companies, including the Evans & Sutherland ES-1, MasPar, nCUBE, Intel iPSC and the Goodyear MPP. But by the mid-1990s, general-purpose CPU performance had improved so much that a supercomputer could be built using them as the individual processing units, instead of using custom chips. By the turn of the 21st century, designs featuring tens of thousands of commodity CPUs were the norm, with later machines adding graphics processing units to the mix.

In 1998, David Bader developed the first Linux supercomputer using commodity parts. While at the University of New Mexico, Bader sought to build a supercomputer running Linux using consumer off-the-shelf parts and a high-speed low-latency interconnection network. The prototype utilized an Alta Technologies "AltaCluster" of eight dual 333 MHz Intel Pentium II computers running a modified Linux kernel. Bader ported a significant amount of software to provide Linux support for necessary components as well as code from members of the National Computational Science Alliance (NCSA) to ensure interoperability, as none of it had been run on Linux previously. Using the successful prototype design, he led the development of "RoadRunner," the first Linux supercomputer for open use by the national science and engineering community via the National Science Foundation's National Technology Grid. RoadRunner was put into production use in April 1999. At the time of its deployment, it was considered one of the 100 fastest supercomputers in the world. Though Linux-based clusters using consumer-grade parts, such as Beowulf, existed prior to the development of Bader's prototype and RoadRunner, they lacked the scalability, bandwidth, and parallel computing capabilities to be considered "true" supercomputers.

Systems with a massive number of processors generally take one of two paths. In the grid computing approach, the processing power of many computers, organized as distributed, diverse administrative domains, is opportunistically used whenever a computer is available. In another approach, many processors are used in proximity to each other, e.g. in a computer cluster. In such a centralized massively parallel system the speed and flexibility of the interconnect becomes very important, and modern supercomputers have used various approaches ranging from enhanced InfiniBand systems to three-dimensional torus interconnects (Knight, Will: "IBM creates world's most powerful computer", ''NewScientist.com news service'', June 2007). The use of multi-core processors combined with centralization is an emerging direction, e.g. as in the Cyclops64 system (''Analysis and performance results of computing betweenness centrality on IBM Cyclops64'' by Guangming Tan, Vugranam C. Sreedhar and Guang R. Gao, ''The Journal of Supercomputing'', Volume 56, Number 1, 1–24, September 2011).
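As a rough illustration of a three-dimensional torus interconnect, the sketch below treats each node as a coordinate in a grid whose edges wrap around in every dimension, so each node has six direct neighbours. The grid dimensions and function names are assumptions chosen for illustration and do not describe any particular machine.

```python
def torus_neighbors(node, dims):
    """Return the six direct neighbours of `node` in a 3D torus.

    `node` is an (x, y, z) coordinate and `dims` is the grid size
    (nx, ny, nz). Coordinates wrap around at the edges.
    """
    x, y, z = node
    nx, ny, nz = dims
    return [
        ((x + 1) % nx, y, z), ((x - 1) % nx, y, z),
        (x, (y + 1) % ny, z), (x, (y - 1) % ny, z),
        (x, y, (z + 1) % nz), (x, y, (z - 1) % nz),
    ]


def torus_hops(a, b, dims):
    """Minimum number of link traversals between nodes a and b."""
    total = 0
    for p, q, n in zip(a, b, dims):
        d = abs(p - q)
        # Going the other way around the ring may be shorter.
        total += min(d, n - d)
    return total


if __name__ == "__main__":
    dims = (4, 4, 4)  # hypothetical 64-node machine
    print(torus_neighbors((0, 0, 0), dims))
    print(torus_hops((0, 0, 0), (3, 3, 3), dims))
```

The wrap-around links are what distinguish a torus from a plain mesh: opposite corners of the grid end up only a few hops apart, which keeps worst-case communication distance low as the machine grows.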

As the price, performance and energy efficiency of general-purpose graphics processing units (GPGPUs) have improved, a number of petaFLOPS supercomputers such as Tianhe-I and Nebulae have started to rely on them. However, other systems such as the K computer continue to use conventional processors such as SPARC-based designs, and the overall applicability of GPGPUs in general-purpose high-performance computing applications has been the subject of debate, in that while a GPGPU may be tuned to score well on specific benchmarks, its overall applicability to everyday algorithms may be limited unless significant effort is spent to tune the application to it. However, GPUs are gaining ground, and in 2012 the Jaguar supercomputer was transformed into Titan by retrofitting CPUs with GPUs.

High-performance computers have an expected life cycle of about three years before requiring an upgrade. The Gyoukou supercomputer is unique in that it uses both a massively parallel design and liquid immersion cooling.

Special purpose supercomputers

A number of special-purpose systems have been designed, dedicated to a single problem. This allows the use of specially programmed FPGA chips or even custom ASICs, allowing better price/performance ratios by sacrificing generality. Examples of special-purpose supercomputers include Belle, Deep Blue, and Hydra for playing chess, Gravity Pipe for astrophysics, MDGRAPE-3 for protein structure prediction and molecular dynamics, and Deep Crack for breaking the DES cipher.

Energy usage and heat management

Throughout the decades, the management of heat density has remained a key issue for most centralized supercomputers (''The Supermen: Story of Seymour Cray and the Technical Wizards Behind the Supercomputer'' by Charles J. Murray, 1997, pages 133–135; ''Parallel Computational Fluid Dynamics; Recent Advances and Future Directions'' edited by Rupak Biswas, 2010, page 401). The large amount of heat generated by a system may also have other effects, e.g. reducing the lifetime of other system components (''Supercomputing Research Advances'' by Yongge Huáng, 2008, pages 313–314). There have been diverse approaches to heat management, from pumping Fluorinert through the system, to a hybrid liquid-air cooling system or air cooling with normal air conditioning temperatures (''Parallel computing for real-time signal processing and control'' by M. O. Tokhi and Mohammad Alamgir Hossain, 2003, pages 201–202). A typical supercomputer consumes large amounts of electrical power, almost all of which is converted into heat, requiring cooling. For example, Tianhe-1A consumes 4.04 megawatts (MW) of electricity. The cost to power and cool the system can be significant, e.g. 4 MW at $0.10/kWh is $400 an hour or about $3.5 million per year.
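The cost figure above can be reproduced with a few lines of arithmetic. The following is a minimal sketch, assuming a constant load running every hour of a 365-day year; the function names are illustrative and do not come from any cited source.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def hourly_cost_usd(power_mw: float, price_per_kwh: float) -> float:
    """Cost in dollars of running a constant electrical load for one hour."""
    power_kw = power_mw * 1000.0  # 1 MW = 1,000 kW
    return power_kw * price_per_kwh


def annual_cost_usd(power_mw: float, price_per_kwh: float) -> float:
    """Cost in dollars of running the same constant load for a full year."""
    return hourly_cost_usd(power_mw, price_per_kwh) * HOURS_PER_YEAR


if __name__ == "__main__":
    # The article's example: 4 MW at $0.10 per kWh.
    print(round(hourly_cost_usd(4.0, 0.10), 2))
    print(round(annual_cost_usd(4.0, 0.10)))
```

With these inputs the hourly cost comes to about $400 and the yearly cost to roughly $3.5 million, matching the figures quoted in the text.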

Heat management is a major issue in complex electronic devices and affects powerful computer systems in various ways. The thermal design power and CPU power dissipation issues in supercomputing surpass those of traditional computer cooling technologies. The supercomputing awards for green computing reflect this issue.

The packing of thousands of processors together inevitably generates significant amounts of heat density that need to be dealt with. The Cray-2 was liquid cooled, and used a Fluorinert "cooling waterfall" which was forced through the modules under pressure. However, the submerged liquid cooling approach was not practical for the multi-cabinet systems based on off-the-shelf processors, and in System X a special cooling system that combined air conditioning with liquid cooling was developed in conjunction with the Liebert company (''Computational science – ICCS 2005: 5th international conference'' edited by Vaidy S. Sunderam, 2005, pages 60–67).

In the Blue Gene system, IBM deliberately used low power processors to deal with heat density. The IBM Power 775, released in 2011, has closely packed elements that require water cooling. The IBM Aquasar system uses hot water cooling to achieve energy efficiency, the water being used to heat buildings as well.

The energy efficiency of computer systems is generally measured in terms of "FLOPS per watt". In 2008, Roadrunner by IBM operated at 376 MFLOPS/W. In November 2010, the Blue Gene/Q reached 1,684 MFLOPS/W, and in June 2011 the top two spots on the Green 500 list were occupied by Blue Gene machines in New York (one achieving 2,097 MFLOPS/W), with the DEGIMA cluster in Nagasaki placing third with 1,375 MFLOPS/W.
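To make the "FLOPS per watt" metric concrete, the sketch below converts an efficiency figure into the electrical power needed to sustain a given performance. The 1 PFLOPS target is an illustrative assumption chosen for the example, not a figure from the Green 500 list, and the function names are hypothetical.

```python
def mflops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency in MFLOPS/W for a sustained rate and power draw."""
    return flops / watts / 1e6


def watts_required(flops_target: float, efficiency_mflops_per_watt: float) -> float:
    """Power in watts needed to sustain flops_target at the given efficiency."""
    return flops_target / (efficiency_mflops_per_watt * 1e6)


if __name__ == "__main__":
    target = 1e15  # 1 PFLOPS, an assumed target for illustration only
    for label, eff in [("Roadrunner (2008)", 376.0), ("Blue Gene (June 2011)", 2097.0)]:
        megawatts = watts_required(target, eff) / 1e6
        print(f"{label}: about {megawatts:.2f} MW for 1 PFLOPS")
```

At the efficiencies quoted above, the same sustained performance would need several times less power on the 2011 machine than on the 2008 one, which is the sense in which the metric tracks progress in energy efficiency.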

Because copper wires can transfer energy into a supercomputer with much higher power densities than forced air or circulating refrigerants can remove waste heat, the ability of the cooling systems to remove waste heat is a limiting factor. Many existing supercomputers have more infrastructure capacity than the actual peak demand of the machine: designers generally conservatively design the power and cooling infrastructure to handle more than the theoretical peak electrical power consumed by the supercomputer. Designs for future supercomputers are power-limited: the thermal design power of the supercomputer as a whole, the amount that the power and cooling infrastructure can handle, is somewhat more than the expected normal power consumption, but less than the theoretical peak power consumption of the electronic hardware.

Software and system management

Operating systems

Since the end of the 20th century, supercomputer operating systems have undergone major transformations, based on the changes in supercomputer architecture (''Encyclopedia of Parallel Computing'' by David Padua, 2011, pages 426–429). While early operating systems were custom tailored to each supercomputer to gain speed, the trend has been to move away from in-house operating systems to the adaptation of generic software such as Linux (''Knowing machines: essays on technical change'' by Donald MacKenzie, 1998, pages 149–151).

Since modern massively parallel supercomputers typically separate computations from other services by using multiple types of nodes, they usually run different operating systems on different nodes, e.g. using a small and efficient lightweight kernel such as CNK or CNL on compute nodes, but a larger system such as a full Linux distribution on server and I/O nodes (''Euro-Par 2004 Parallel Processing: 10th International Euro-Par Conference'', 2004, by Marco Danelutto, Marco Vanneschi and Domenico Laforenza, page 835; ''Euro-Par 2006 Parallel Processing: 12th International Euro-Par Conference'', 2006, by Wolfgang E. Nagel, Wolfgang V. Walter and Wolfgang Lehner; ''An Evaluation of the Oak Ridge National Laboratory Cray XT3'' by Sadaf R. Alam et al., ''International Journal of High Performance Computing Applications'', February 2008, vol. 22, no. 1, pages 52–80).

While in a traditional multi-user computer system job scheduling is, in effect, a tasking problem for processing and peripheral resources, in a massively parallel system the job management system needs to manage the allocation of both computational and communication resources, as well as gracefully deal with inevitable hardware failures when tens of thousands of processors are present (''Open Job Management Architecture for the Blue Gene/L Supercomputer'' by Yariv Aridor et al., in ''Job scheduling strategies for parallel processing'' by Dror G. Feitelson, 2005, pages 95–101).

Although most modern supercomputers use Linux-based operating systems, each manufacturer has its own specific Linux distribution, and no industry standard exists, partly because differences in hardware architectures require changes to optimize the operating system for each hardware design.

Software tools and message passing

The parallel architectures of supercomputers often dictate the use of special programming techniques to exploit their speed. Software tools for distributed processing include standard APIs such as MPI and PVM, VTL, and open source software such as Beowulf.

In the most common scenario, environments such as PVM and MPI for loosely connected clusters and OpenMP for tightly coordinated shared memory machines are used. Significant effort is required to optimize an algorithm for the interconnect characteristics of the machine it will be run on; the aim is to prevent any of the CPUs from wasting time waiting on data from other nodes. GPGPUs have hundreds of processor cores and are programmed using programming models such as CUDA or OpenCL.

Moreover, it is quite difficult to debug and test parallel programs. Special techniques need to be used for testing and debugging such applications.
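The message-passing style used by environments such as MPI and PVM can be illustrated with a scatter-and-reduce pattern. The sketch below is only an analogy: it uses threads and queues from the Python standard library inside a single process, not MPI or PVM, so it shows the communication structure rather than any real speed-up. The names `worker` and `parallel_sum_of_squares` are hypothetical.

```python
import queue
import threading


def worker(rank, inbox, outbox):
    """Receive one chunk of data, compute a partial result, send it back."""
    chunk = inbox.get()  # blocking receive: the worker idles until data arrives
    outbox.put((rank, sum(x * x for x in chunk)))  # send the partial sum to the root


def parallel_sum_of_squares(data, n_workers=4):
    """Scatter data to workers, then reduce their partial sums on the root."""
    inboxes = [queue.Queue() for _ in range(n_workers)]
    results = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(rank, inboxes[rank], results))
        for rank in range(n_workers)
    ]
    for t in threads:
        t.start()

    # Scatter: strided slices give every worker a similar share of the work,
    # so no worker waits much longer than the others.
    for rank in range(n_workers):
        inboxes[rank].put(data[rank::n_workers])

    # Reduce: collect one partial sum per worker, in whatever order they arrive.
    total = 0
    for _ in range(n_workers):
        _rank, partial = results.get()
        total += partial

    for t in threads:
        t.join()
    return total


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))
```

The blocking `inbox.get()` call is the point where a worker would sit idle waiting for data, which is the waiting time that tuning for a machine's interconnect tries to minimize.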

Distributed supercomputing

Opportunistic approaches

Opportunistic supercomputing is a form of networked grid computing whereby a "super virtual computer" of many loosely coupled volunteer computing machines performs very large computing tasks. Grid computing has been applied to a number of large-scale embarrassingly parallel problems that require supercomputing performance scales. However, basic grid and cloud computing approaches that rely on volunteer computing cannot handle traditional supercomputing tasks such as fluid dynamic simulations.

The fastest grid computing system is the volunteer computing project Folding@home (F@h). F@h reported 2.5 exaFLOPS of x86 processing power. Of this, over 100 PFLOPS are contributed by clients running on various GPUs, and the rest from various CPU systems.

The Berkeley Open Infrastructure for Network Computing (BOINC) platform hosts a number of volunteer computing projects. BOINC recorded a processing power of over 166 petaFLOPS through over 762 thousand active computers (hosts) on the network.

The Great Internet Mersenne Prime Search's (GIMPS) distributed Mersenne prime search achieved about 0.313 PFLOPS through over 1.3 million computers. The PrimeNet server has supported GIMPS's grid computing approach, one of the earliest volunteer computing projects, since 1997.

Quasi-opportunistic approaches

Quasi-opportunistic supercomputing is a form of distributed computing whereby the "super virtual computer" of many networked, geographically dispersed computers performs computing tasks that demand huge processing power. Quasi-opportunistic supercomputing aims to provide a higher quality of service than opportunistic grid computing by achieving more control over the assignment of tasks to distributed resources and the use of intelligence about the availability and reliability of individual systems within the supercomputing network. However, quasi-opportunistic distributed execution of demanding parallel computing software in grids should be achieved through the implementation of grid-wise allocation agreements, co-allocation subsystems, communication topology-aware allocation mechanisms, fault tolerant message passing libraries and data pre-conditioning.

High-performance computing clouds

Cloud computing, with its recent rapid expansion and development, has grabbed the attention of high-performance computing (HPC) users and developers. Cloud computing attempts to provide HPC-as-a-service exactly like other forms of services available in the cloud, such as software as a service, platform as a service, and infrastructure as a service. HPC users may benefit from the cloud in several ways, such as scalability and resources that are on-demand, fast, and inexpensive. On the other hand, moving HPC applications to the cloud has its own set of challenges. Good examples of such challenges are virtualization overhead in the cloud, multi-tenancy of resources, and network latency issues. Much research is currently being done to overcome these challenges and make HPC in the cloud a more realistic possibility.

In 2016, Penguin Computing, Parallel Works, R-HPC, Amazon Web Services, Univa, Silicon Graphics International, Rescale, Sabalcore, and Gomput started to offer HPC cloud computing. The Penguin On Demand (POD) cloud is a bare-metal compute model to execute code, but each user is given a virtualized login node. POD computing nodes are connected via non-virtualized 10 Gbit/s Ethernet or QDR InfiniBand networks. User connectivity to the POD data center ranges from 50 Mbit/s to 1 Gbit/s. Citing Amazon's EC2 Elastic Compute Cloud, Penguin Computing argues that virtualization of compute nodes is not suitable for HPC. Penguin Computing has also criticized HPC clouds on the grounds that they may allocate computing nodes to customers that are far apart, causing latency that impairs performance for some HPC applications.

Performance measurement

Capability versus capacity

Supercomputers generally aim for the maximum in capability computing rather than capacity computing. Capability computing is typically thought of as using the maximum computing power to solve a single large problem in the shortest amount of time. Often a capability system is able to solve a problem of a size or complexity that no other computer can, e.g. a very complex weather simulation application. Capacity computing, in contrast, is typically thought of as using efficient cost-effective computing power to solve a few somewhat large problems or many small problems (''The Potential Impact of High-End Capability Computing on Four Illustrative Fields of Science and Engineering'' by Committee on the Potential Impact of High-End Computing on Illustrative Fields of Science and Engineering and National Research Council, 28 October 2008, page 9). Architectures that lend themselves to supporting many users for routine everyday tasks may have a lot of capacity but are not typically considered supercomputers, given that they do not solve a single very complex problem.

Performance metrics

In general, the speed of supercomputers is measured in FLOPS (floating-point operations per second), and not in terms of MIPS (million instructions per second), as is the case with general-purpose computers. These measurements are commonly used with an SI prefix such as tera-, combined into the shorthand TFLOPS (10^12 FLOPS, pronounced ''teraflops''), or peta-, combined into the shorthand PFLOPS (10^15 FLOPS, pronounced ''petaflops''). Petascale supercomputers can process one quadrillion (10^15, or 1000 trillion) FLOPS. Exascale is computing performance in the exaFLOPS (EFLOPS) range. An EFLOPS is one quintillion (10^18) FLOPS (one million TFLOPS). However, the performance of a supercomputer can be severely impacted by fluctuation brought on by elements like system load, network traffic, and concurrent processes, as mentioned by Brehm and Bruhwiler (2015).
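The prefix arithmetic above can be checked mechanically. The following is a small sketch, assuming only the decimal SI prefixes mentioned in this article (mega through exa); `format_flops` is a hypothetical helper name.

```python
# SI prefixes from largest to smallest, as powers of ten.
PREFIXES = [("E", 1e18), ("P", 1e15), ("T", 1e12), ("G", 1e9), ("M", 1e6)]


def format_flops(flops: float) -> str:
    """Express a raw FLOPS value using the largest prefix that fits."""
    for symbol, factor in PREFIXES:
        if flops >= factor:
            return f"{flops / factor:g} {symbol}FLOPS"
    return f"{flops:g} FLOPS"


if __name__ == "__main__":
    print(format_flops(1e18))   # an exascale machine
    print(format_flops(5e11))   # a desktop-class figure
    print(1e18 / 1e12)          # how many TFLOPS make one EFLOPS
```

The last line confirms the statement in the text that one EFLOPS equals one million TFLOPS.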

No single number can reflect the overall performance of a computer system, yet the goal of the LINPACK benchmark is to approximate how fast the computer solves numerical problems, and it is widely used in the industry. The FLOPS measurement is either quoted based on the theoretical floating point performance of a processor (derived from manufacturer's processor specifications and shown as "Rpeak" in the TOP500 lists), which is generally unachievable when running real workloads, or the achievable throughput, derived from the LINPACK benchmarks and shown as "Rmax" in the TOP500 list. The LINPACK benchmark typically performs LU decomposition of a large matrix. The LINPACK performance gives some indication of performance for some real-world problems, but does not necessarily match the processing requirements of many other supercomputer workloads, which for example may require more memory bandwidth, or may require better integer computing performance, or may need a high performance I/O system to achieve high levels of performance.
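The idea behind a LINPACK-style measurement can be sketched in a few dozen lines: solve a dense random system, time it, and divide a nominal operation count by the elapsed time. The code below is a toy illustration in pure Python, not the actual benchmark code. It uses Gaussian elimination with partial pivoting (the elimination phase is equivalent to computing an LU factorization), and it assumes the commonly quoted operation count of 2n³/3 + 2n² for an n × n solve. All function names are hypothetical.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies so the inputs stay untouched
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the largest remaining entry of column k up.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]  # multiplier (an entry of the L factor)
            for j in range(k + 1, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_like_rate(n, seed=0):
    """Return (estimated FLOPS, max residual) for one random n x n solve."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    ops = 2.0 * n**3 / 3.0 + 2.0 * n**2  # assumed nominal operation count
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return ops / elapsed, residual


if __name__ == "__main__":
    rate, residual = linpack_like_rate(100)
    print(f"about {rate / 1e6:.1f} MFLOPS, residual {residual:.2e}")
```

A measured rate of this kind plays the role of "Rmax"; comparing it with a theoretical "Rpeak" derived from hardware specifications shows how much of the nominal capacity a real solve achieves.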

The TOP500 list

Since 1993, the fastest supercomputers have been ranked on the TOP500 list according to their LINPACK benchmark results. The list does not claim to be unbiased or definitive, but it is a widely cited current definition of the "fastest" supercomputer available at any given time.

This is a list of the computers which appeared at the top of the TOP500 list since June 1993, and the "Peak speed" is given as the "Rmax" rating. In 2018, Lenovo became the world's largest provider for the TOP500 supercomputers with 117 units produced.

Legend:

* Rank: Position within the TOP500 ranking. In the TOP500 list table, the computers are ordered first by their Rmax value. In the case of equal performances (Rmax value) for different computers, the order is by Rpeak. For sites that have the same computer, the order is by memory size and then alphabetically.

* Rmax: The highest score measured using the LINPACK benchmarks suite. This is the number that is used to rank the computers. Measured in quadrillions of 64-bit floating point operations per second, i.e., petaFLOPS.

* Rpeak: This is the theoretical peak performance of the system. Computed in petaFLOPS.

* Name: Some supercomputers are unique, at least in their location, and are thus named by their owner.

* Model: The computing platform as it is marketed.

* Processor: The instruction set architecture or processor microarchitecture, alongside GPU and accelerators when available.

* Interconnect: The interconnect between computing nodes. InfiniBand is most used (38%) by performance share, while Gigabit Ethernet is most used (54%) by number of computers.

* Manufacturer: The manufacturer of the platform and hardware.

* Site: The name of the facility operating the supercomputer.

* Country: The country in which the computer is located.

* Year: The year of installation or last major update.

* Operating system: The operating system that the computer uses.
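The ordering rule described under "Rank" in the legend above amounts to a multi-key sort. The sketch below assumes that larger Rmax, Rpeak, and memory values sort first and that the final tie-break is alphabetical by name; the legend does not spell out the direction of the memory tie-break, so that part is an assumption. The `Entry` type and the sample systems are hypothetical and are not real TOP500 data.

```python
from typing import List, NamedTuple, Tuple


class Entry(NamedTuple):
    name: str
    rmax_pflops: float
    rpeak_pflops: float
    memory_gb: float


def rank(entries: List[Entry]) -> List[Tuple[int, str]]:
    """Order by Rmax, then Rpeak, then memory (all descending), then name."""
    ordered = sorted(
        entries,
        key=lambda e: (-e.rmax_pflops, -e.rpeak_pflops, -e.memory_gb, e.name),
    )
    return [(position + 1, e.name) for position, e in enumerate(ordered)]


if __name__ == "__main__":
    # Hypothetical systems, invented purely to exercise the tie-breaks.
    systems = [
        Entry("Beta", 10.0, 12.0, 1000.0),
        Entry("Alpha", 10.0, 12.0, 1000.0),
        Entry("Gamma", 10.0, 15.0, 500.0),
        Entry("Delta", 20.0, 25.0, 2000.0),
    ]
    for position, name in rank(systems):
        print(position, name)
```

Negating the numeric keys keeps the whole rule in a single sort call while leaving the name comparison in ascending order.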

Applications

The stages of supercomputer application are summarized in the following table:

The IBM Blue Gene/P computer has been used to simulate a number of artificial neurons equivalent to approximately one percent of a human cerebral cortex, containing 1.6 billion neurons with approximately 9 trillion connections. The same research group also succeeded in using a supercomputer to simulate a number of artificial neurons equivalent to the entirety of a rat's brain.

Modern weather forecasting relies on supercomputers. The National Oceanic and Atmospheric Administration uses supercomputers to crunch hundreds of millions of observations to help make weather forecasts more accurate.

In 2011, the challenges and difficulties in pushing the envelope in supercomputing were underscored by IBM's abandonment of the Blue Waters petascale project.

The Advanced Simulation and Computing Program currently uses supercomputers to maintain and simulate the United States nuclear stockpile.

In early 2020, as the COVID-19 pandemic spread worldwide, supercomputers ran different simulations to find compounds that could potentially stop the spread of the virus. These computers run for tens of hours, using multiple CPUs in parallel to model different processes.

Development and trends

In the 2010s, China, the United States, the European Union, and others competed to be the first to create a 1 exaFLOPS (10^18 or one quintillion FLOPS) supercomputer. Erik P. DeBenedictis of Sandia National Laboratories has theorized that a zettaFLOPS (10^21 or one sextillion FLOPS) computer is required to accomplish full weather modeling, which could cover a two-week time span accurately. Such systems might be built around 2030.

Many Monte Carlo simulations use the same algorithm to process a randomly generated data set; particularly, integro-differential equations describing physical transport processes, the random paths, collisions, and energy and momentum depositions of neutrons, photons, ions, electrons, etc. The next step for microprocessors may be into the third dimension; and specializing to Monte Carlo, the many layers could be identical, simplifying the design and manufacture process.

The cost of operating high performance supercomputers has risen, mainly due to increasing power consumption. In the mid-1990s a top 10 supercomputer required in the range of 100 kilowatts; in 2010 the top 10 supercomputers required between 1 and 2 megawatts. A 2010 study commissioned by DARPA identified power consumption as the most pervasive challenge in achieving exascale computing. At the time a megawatt per year in energy consumption cost about 1 million dollars. Supercomputing facilities were constructed to efficiently remove the increasing amount of heat produced by modern multi-core central processing units. Based on the energy consumption of the Green 500 list of supercomputers between 2007 and 2011, a supercomputer with 1 exaFLOPS in 2011 would have required nearly 500 megawatts. Operating systems were developed for existing hardware to conserve energy whenever possible. CPU cores not in use during the execution of a parallelized application were put into low-power states, producing energy savings for some supercomputing applications.
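
The power figures above can be checked with a little arithmetic. The Python sketch below derives the energy efficiency implied by running 1 exaFLOPS on roughly 500 megawatts and applies the rule of thumb of about 1 million dollars per megawatt per year. The function names are hypothetical, and the flat cost rate is an assumption taken from that rule of thumb, not a real electricity tariff.

```python
EXAFLOPS = 1e18  # floating-point operations per second

# Rule of thumb quoted in the text for around 2010 (assumed flat rate).
COST_PER_MEGAWATT_YEAR = 1_000_000  # dollars


def implied_gflops_per_watt(flops, power_megawatts):
    """Energy efficiency implied by a performance level and a power draw."""
    watts = power_megawatts * 1e6
    return flops / watts / 1e9


def annual_energy_cost(power_megawatts,
                       cost_per_mw_year=COST_PER_MEGAWATT_YEAR):
    """Yearly energy bill in dollars for a constant power draw."""
    return power_megawatts * cost_per_mw_year


def power_needed_megawatts(flops, gflops_per_watt):
    """Power draw in megawatts needed to sustain `flops` at a given efficiency."""
    watts = flops / (gflops_per_watt * 1e9)
    return watts / 1e6


eff_2011 = implied_gflops_per_watt(EXAFLOPS, power_megawatts=500)
cost_2011 = annual_energy_cost(power_megawatts=500)

print(f"Implied efficiency: {eff_2011:.1f} gigaFLOPS per watt")
print(f"Annual energy cost: {cost_2011 / 1e6:.0f} million dollars")
```

Under these assumptions, an exascale machine built in 2011 would have needed an efficiency of about 2 gigaFLOPS per watt just to stay within 500 megawatts, and its energy bill alone would have been on the order of 500 million dollars a year, which helps explain why power consumption was singled out as the main obstacle.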

The increasing cost of operating supercomputers has been a driving factor in a trend toward bundling of resources through a distributed supercomputer infrastructure. National supercomputing centers first emerged in the US, followed by Germany and Japan. The European Union launched the Partnership for Advanced Computing in Europe (PRACE) with the aim of creating a persistent pan-European supercomputer infrastructure with services to support scientists across the European Union in porting, scaling and optimizing supercomputing applications. Iceland built the world's first zero-emission supercomputer. Located at the Thor Data Center in Reykjavík, Iceland, this supercomputer relies on completely renewable sources for its power rather than fossil fuels. The colder climate also reduces the need for active cooling, making it one of the greenest facilities in the world of computers.

Funding supercomputer hardware also became increasingly difficult. In the mid-1990s a top 10 supercomputer cost about 10 million euros, while in 2010 the top 10 supercomputers required an investment of between 40 and 50 million euros. In the 2000s national governments put in place different strategies to fund supercomputers. In the UK the national government funded supercomputers entirely and high performance computing was put under the control of a national funding agency. Germany developed a mixed funding model, pooling local state funding and federal funding.

In fiction

Examples of supercomputers in fiction include HAL 9000, Multivac, The Machine Stops, GLaDOS, The Evitable Conflict, Vulcan's Hammer, Colossus, WarGames, WOPR, AM, and Deep Thought. A supercomputer from Thinking Machines was mentioned as the supercomputer used to sequence the DNA extracted from preserved parasites in the Jurassic Park series.

See also

* ACM/IEEE Supercomputing Conference
* ACM SIGHPC
* High-performance computing
* High-performance technical computing
* Jungle computing
* Metacomputing
* Nvidia Tesla Personal Supercomputer
* Parallel computing
* Supercomputing in China
* Supercomputing in Europe
* Supercomputing in India
* Supercomputing in Japan
* SLURM
* Testing high-performance computing applications
* Ultra Network Technologies
* Quantum computing

References

External links

* McDonnell, Marshall T. (2013). "Supercomputer Design: An Initial Effort to Capture the Environmental, Economic, and Societal Impacts". Chemical and Biomolecular Engineering Publications and Other Works.