StyleGAN is a generative adversarial network (GAN) introduced by Nvidia researchers in December 2018, and made source available in February 2019.

StyleGAN depends on Nvidia's CUDA software, GPUs, and Google's TensorFlow, or Meta AI's PyTorch, which supersedes TensorFlow as the official implementation library in later StyleGAN versions. The second version of StyleGAN, called StyleGAN2, was published on February 5, 2020. It removes some of the characteristic artifacts and improves the image quality. Nvidia introduced StyleGAN3, described as an "alias-free" version, on June 23, 2021, and made source available on October 12, 2021.

History

In December 2018, Nvidia researchers distributed a preprint with accompanying software introducing StyleGAN, a GAN for producing an unlimited number of (often convincing) portraits of fake human faces. StyleGAN was able to run on Nvidia's commodity GPU processors. In February 2019, Uber engineer Phillip Wang used the software to create *This Person Does Not Exist*, which displayed a new face on each web page reload.

Wang himself has expressed amazement that, although humans have evolved specifically to understand human faces, StyleGAN can nevertheless competitively "pick apart all the relevant features (of human faces) and recompose them in a way that's coherent."

In September 2019, a website called Generated Photos published 100,000 images as a collection of stock photos. The collection was made using a private dataset shot in a controlled environment with similar light and angles.

Similarly, two faculty members at the University of Washington's Information School used StyleGAN to create *Which Face is Real?*, which challenged visitors to differentiate between a fake and a real face side by side. The faculty stated the intention was to "educate the public" about the existence of this technology so they could be wary of it, "just like eventually most people were made aware that you can Photoshop an image".

The second version of StyleGAN, called StyleGAN2, was published on February 5, 2020. It removes some of the characteristic artifacts and improves the image quality.

In 2021, a third version was released, improving consistency between fine and coarse details in the generator. Dubbed "alias-free", this version was implemented with PyTorch.

Illicit use

In December 2019, Facebook took down a network of accounts with false identities, and mentioned that some of them had used profile pictures created with artificial intelligence.

Architecture

Progressive GAN

Progressive GAN is a method for stably training a GAN for large-scale image generation, by growing the generator from small to large scale in a pyramidal fashion. Like SinGAN, it decomposes the generator as $G = G_1 \circ G_2 \circ \cdots \circ G_N$, and the discriminator as $D = D_1 \circ D_2 \circ \cdots \circ D_N$.

During training, at first only $G_N, D_1$ are used in a GAN game to generate 4x4 images. Then $G_{N-1}, D_2$ are added to reach the second stage of the GAN game, to generate 8x8 images, and so on, until we reach a GAN game to generate 1024x1024 images.

To avoid shock between stages of the GAN game, each new layer is "blended in" (Figure 2 of the paper). For example, this is how the second stage GAN game starts:

* Just before, the GAN game consists of the pair $G_N, D_1$ generating and discriminating 4x4 images.
* Just after, the GAN game consists of the pair $((1-\alpha) + \alpha \cdot G_{N-1}) \circ u \circ G_N, D_1 \circ d \circ ((1-\alpha) + \alpha \cdot D_2)$ generating and discriminating 8x8 images.

Here, the functions $u, d$ are image up- and down-sampling functions, and $\alpha$ is a blend-in factor (much like an alpha in image compositing) that smoothly glides from 0 to 1.

StyleGAN-1

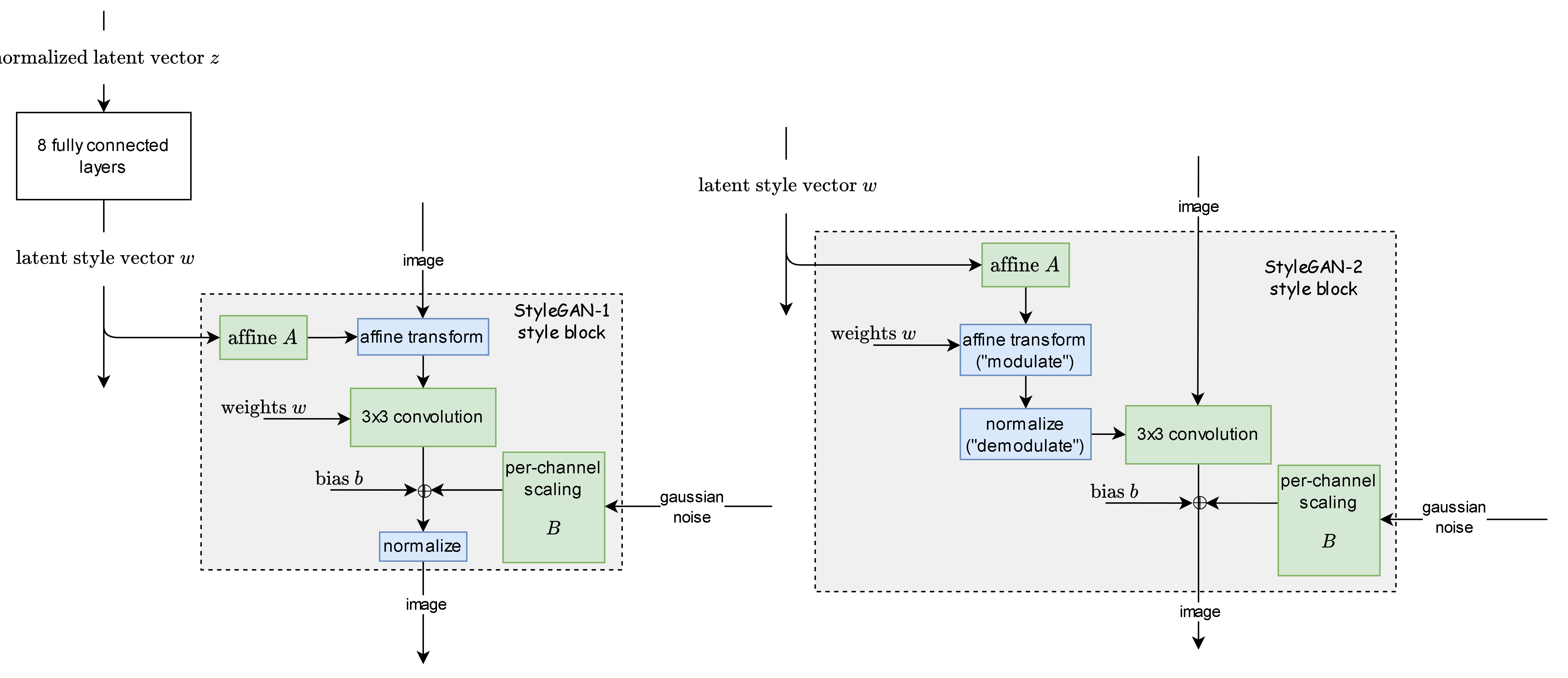

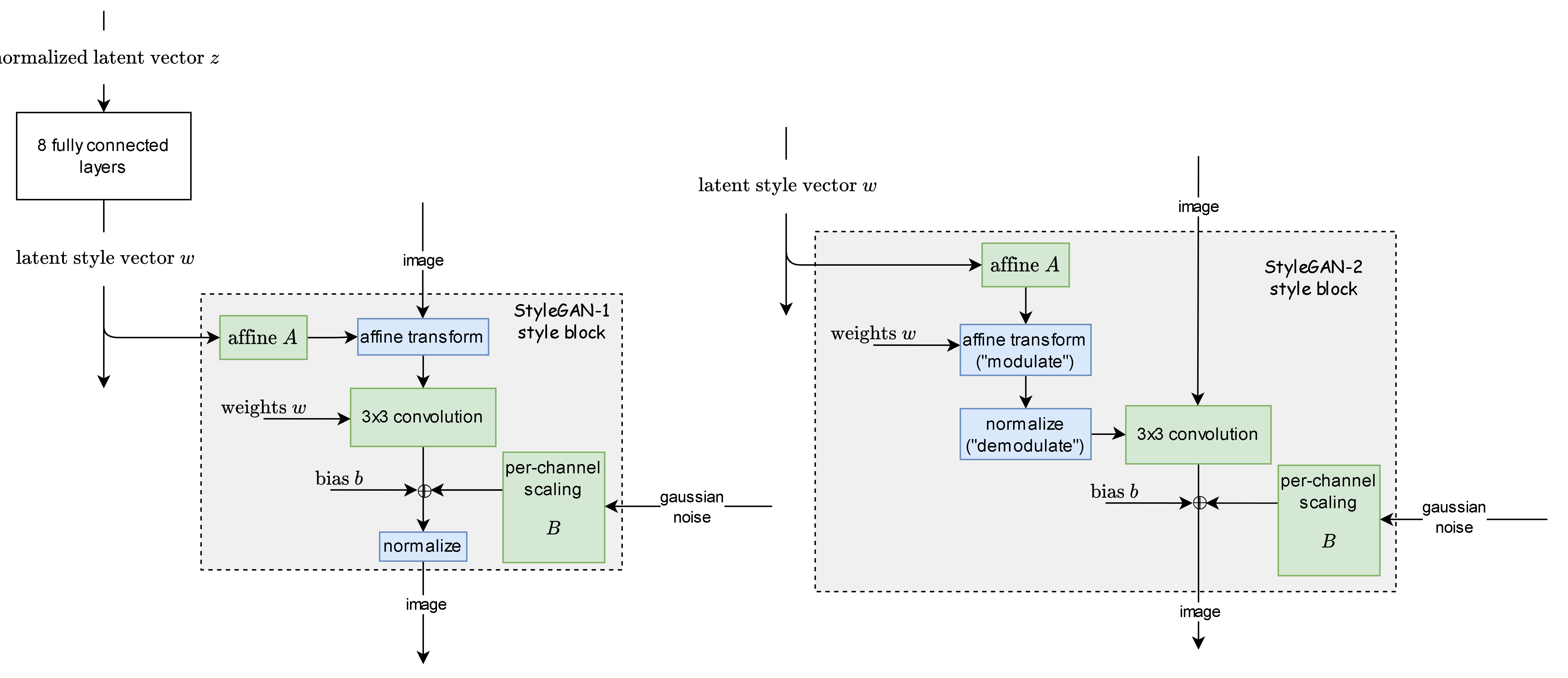

StyleGAN-1 is designed as a combination of Progressive GAN with neural style transfer.
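The Progressive GAN side of this combination is the gradual fade-in of each new resolution stage. The following is a minimal NumPy sketch of that blend on the generator side. The nearest-neighbour upsampling and the stand-in `new_stage` function are illustrative assumptions, not taken from the StyleGAN code.

```python
import numpy as np

def upsample2x(img):
    """Nearest-neighbour 2x upsampling of an (H, W) array (assumed choice of u)."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def fade_in(low_res, new_stage, alpha):
    """Blend an upsampled low-resolution image with the output of a new stage.

    alpha = 0 ignores the new stage entirely; alpha = 1 uses only the new stage.
    `new_stage` is a hypothetical callable operating at the higher resolution.
    """
    up = upsample2x(low_res)
    return (1.0 - alpha) * up + alpha * new_stage(up)

# Toy usage: a 4x4 "image" and a stand-in stage that just adds a constant.
low = np.arange(16.0).reshape(4, 4)
stage = lambda x: x + 1.0
for alpha in (0.0, 0.5, 1.0):
    out = fade_in(low, stage, alpha)
    print(alpha, out.shape, out[0, 0])
```

Gliding `alpha` from 0 to 1 during training moves the output smoothly from the old 4x4 result (upsampled) to the new 8x8 stage.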

The key architectural choice of StyleGAN-1 is a progressive growth mechanism, similar to Progressive GAN. Each generated image starts as a constant array, and is repeatedly passed through style blocks. Each style block adds noise, normalizes each feature map (subtract the mean, then divide by the standard deviation), and applies a "style latent vector" via an affine transform ("adaptive instance normalization"), similar to how neural style transfer uses the Gramian matrix.
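As a rough illustration, the noise, normalization, and style steps of one style block can be sketched in NumPy. The shapes, the parameter names (`weight`, `bias`, `noise_strength`), and the random toy inputs are assumptions made for this example. The real network learns the affine parameters and wraps these steps around convolutions.

```python
import numpy as np

def style_block_step(features, style, weight, bias, noise, noise_strength=0.1, eps=1e-8):
    """Add noise, instance-normalize, then apply a style-derived scale and shift.

    features: (C, H, W) feature maps
    style:    (D,) style latent vector
    weight:   (2C, D) and bias: (2C,) form the affine map from style to scale/shift
    noise:    (H, W) single-channel noise, broadcast over channels
    """
    c = features.shape[0]
    x = features + noise_strength * noise[None, :, :]

    # Per-channel normalization: subtract the mean, divide by the standard deviation.
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    normalized = (x - mean) / (std + eps)

    # The affine transform of the style vector yields one scale and one shift per channel.
    affine = weight @ style + bias
    scale, shift = affine[:c], affine[c:]
    return scale[:, None, None] * normalized + shift[:, None, None]

rng = np.random.default_rng(0)
features = rng.normal(size=(3, 4, 4))
style = rng.normal(size=8)
weight = rng.normal(size=(6, 8))
bias = np.zeros(6)
noise = rng.normal(size=(4, 4))

out = style_block_step(features, style, weight, bias, noise)
print(out.shape)
```

After this step, each channel's mean equals its style-derived shift and its standard deviation equals the magnitude of its scale, which is how the style vector controls the feature statistics.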

At training time, usually only one style latent vector is used per image generated, but sometimes two ("mixing regularization") in order to encourage each style block to independently perform its stylization without expecting help from other style blocks (since they might receive an entirely different style latent vector).

After training, a different style latent vector can be fed into each style block. Those fed to the lower layers control the large-scale styles, and those fed to the higher layers control the fine-detail styles.

Style-mixing between two images $x, x'$ can be performed as well. First, run a gradient descent to find $z, z'$ such that $G(z) \approx x, G(z') \approx x'$. This is called "projecting an image back to style latent space". Then, $z$ can be fed to the lower style blocks, and $z'$ to the higher style blocks, to generate a composite image that has the large-scale style of $x$, and the fine-detail style of $x'$. Multiple images can also be composed this way.
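The two steps, projection and mixing, can be sketched with a toy stand-in. Here the "generator" is a hypothetical linear map `A @ z`, chosen only so that gradient descent is easy to follow. The names `project` and `mix_styles` and the `crossover` parameter are likewise assumptions for illustration, not part of any StyleGAN API.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.normal(size=(16, 4))  # hypothetical toy generator: G(z) = A @ z

def generate(z):
    return A @ z

def project(x, steps=2000):
    """Gradient descent on ||G(z) - x||^2 to find a latent z with G(z) close to x."""
    lr = 0.5 / np.linalg.norm(A, 2) ** 2  # step size small enough to converge
    z = np.zeros(A.shape[1])
    for _ in range(steps):
        grad = 2.0 * A.T @ (generate(z) - x)
        z = z - lr * grad
    return z

def mix_styles(z_coarse, z_fine, num_blocks, crossover):
    """Assign z_coarse to blocks below `crossover` and z_fine to the rest."""
    if not 0 <= crossover <= num_blocks:
        raise ValueError("crossover must lie between 0 and num_blocks")
    return [z_coarse if i < crossover else z_fine for i in range(num_blocks)]

# Project two toy "images" back to latent space, then build a per-block style list.
z_true_a, z_true_b = rng.normal(size=4), rng.normal(size=4)
z_a = project(generate(z_true_a))
z_b = project(generate(z_true_b))
per_block = mix_styles(z_a, z_b, num_blocks=6, crossover=2)
print(len(per_block))
```

In a real style-based generator, each entry of `per_block` would be fed to the corresponding style block, so the first two blocks here would carry the large-scale style of the first image and the remaining four the fine-detail style of the second.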

StyleGAN-2

StyleGAN-2 improves upon StyleGAN-1 by using the style latent vector to transform the convolution layer's weights instead, thus solving the "blob" problem.

This was updated by StyleGAN-2-ADA ("ADA" stands for "adaptive discriminator augmentation"), which uses invertible data augmentation. It also tunes the amount of data augmentation applied by starting at zero, and gradually increasing it until an "overfitting heuristic" reaches a target level, thus the name "adaptive".

StyleGAN-3

StyleGAN-3 improves upon StyleGAN-2 by solving the "texture sticking" problem, which can be seen in the official videos. The authors analyzed the problem using the Nyquist–Shannon sampling theorem, and argued that the layers in the generator learned to exploit the high-frequency signal in the pixels they operate upon.

To solve this, they proposed imposing strict lowpass filters between the generator's layers, so that the generator is forced to operate on the pixels in a way faithful to the continuous signals they represent, rather than operate on them as merely discrete signals. They further imposed rotational and translational equivariance by using more signal filters. The resulting StyleGAN-3 is able to generate images that rotate and translate smoothly, and without texture sticking.
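The sampling-theorem argument can be illustrated in one dimension. The sketch below is a toy under stated assumptions: it uses an ideal FFT-based lowpass filter and plain 2x decimation, which is not the filter design used in StyleGAN-3. It only shows why content above the Nyquist limit must be removed before resampling.

```python
import numpy as np

def ideal_lowpass(x, cutoff_bin):
    """Zero every frequency bin at or above `cutoff_bin` (an idealized filter)."""
    spectrum = np.fft.rfft(x)
    spectrum[cutoff_bin:] = 0.0
    return np.fft.irfft(spectrum, n=len(x))

n = 64
t = np.arange(n)
low = np.sin(2 * np.pi * 4 * t / n)    # 4 cycles: representable after decimation
high = np.sin(2 * np.pi * 24 * t / n)  # 24 cycles: above the new limit of 16 cycles
signal = low + high

# Decimating by 2 leaves 32 samples, which can represent at most 16 cycles.
naive = signal[::2]
filtered = ideal_lowpass(signal, cutoff_bin=16)[::2]
target = low[::2]

naive_error = np.max(np.abs(naive - target))
filtered_error = np.max(np.abs(filtered - target))
print(naive_error, filtered_error)
```

Without the filter, the 24-cycle component folds back into the decimated signal as a spurious lower frequency, so `naive_error` is large. With the filter, only floating-point round-off remains in `filtered_error`.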

See also

* Human image synthesis

External links

* The original 2018 Nvidia StyleGAN paper 'A Style-Based Generator Architecture for Generative Adversarial Networks' at arXiv.org
* StyleGAN code at GitHub.com
* This Person Does Not Exist
