Natural user interface

In computing, a natural user interface (NUI) or natural interface is a user interface that is effectively invisible, and remains invisible as the user continuously learns increasingly complex interactions. The word "natural" is used because most computer interfaces use artificial control devices whose operation has to be learned. Examples include voice assistants such as Alexa and Siri, touch and multitouch interactions on today's mobile phones and tablets, and touch interfaces invisibly integrated into the textiles of furniture.

An NUI relies on a user being able to quickly transition from novice to expert. While the interface requires learning, that learning is eased through design which gives the user the feeling that they are instantly and continuously successful. Thus, "natural" refers to a goal in the user experience – that the interaction comes naturally, while interacting with the technology, rather than that the interface itself is natural. This is contrasted with the idea of an intuitive interface, referring to one that can be used without previous learning.

Several design strategies have been proposed which have met this goal with varying degrees of success. One strategy is the use of a "reality user interface" (RUI), also known as "reality-based interface" (RBI) methods. One example of an RUI strategy is to use a wearable computer to render real-world objects "clickable", i.e. so that the wearer can click on any everyday object so as to make it function as a hyperlink, thus merging cyberspace and the real world. Because the term "natural" is evocative of the "natural world", RBIs are often confused with NUIs, when in fact they are merely one means of achieving an NUI.

One example of a strategy for designing an NUI not based on RBI is the strict limiting of functionality and customization, so that users have very little to learn in the operation of a device. Provided that the default capabilities match the user's goals, the interface is effortless to use. This is an overarching design strategy in Apple's iOS. Because this design coincides with a direct-touch display, non-designers commonly misattribute the effortlessness of interacting with the device to that multi-touch display, and not to the design of the software where it actually resides.

History

In the 1990s, Steve Mann developed a number of user-interface strategies using natural interaction with the real world as an alternative to a command-line interface (CLI) or graphical user interface (GUI). Mann referred to this work as "natural user interfaces", "Direct User Interfaces", and "metaphor-free computing". Mann's EyeTap technology typically embodies an example of a natural user interface. Mann's use of the word "natural" refers both to action that comes naturally to human users and to the use of nature itself, i.e. physics (natural philosophy) and the natural environment. A good example of an NUI in both these senses is the hydraulophone, especially when it is used as an input device, in which touching a natural element (water) becomes a way of inputting data. More generally, a class of musical instruments called "physiphones", so named from the Greek words "physika", "physikos" (nature) and "phone" (sound), have also been proposed as "nature-based user interfaces".

In 2006, Christian Moore established an open research community with the goal of expanding discussion and development related to NUI technologies. In a 2008 conference presentation, "Predicting the Past," August de los Reyes, a Principal User Experience Director of Surface Computing at Microsoft, described the NUI as the next evolutionary phase following the shift from the CLI to the GUI. Of course, this too is an over-simplification, since NUIs necessarily include visual elements, and thus graphical user interfaces. A more accurate description of this concept would be a transition from WIMP (windows, icons, menus, pointer) to NUI.

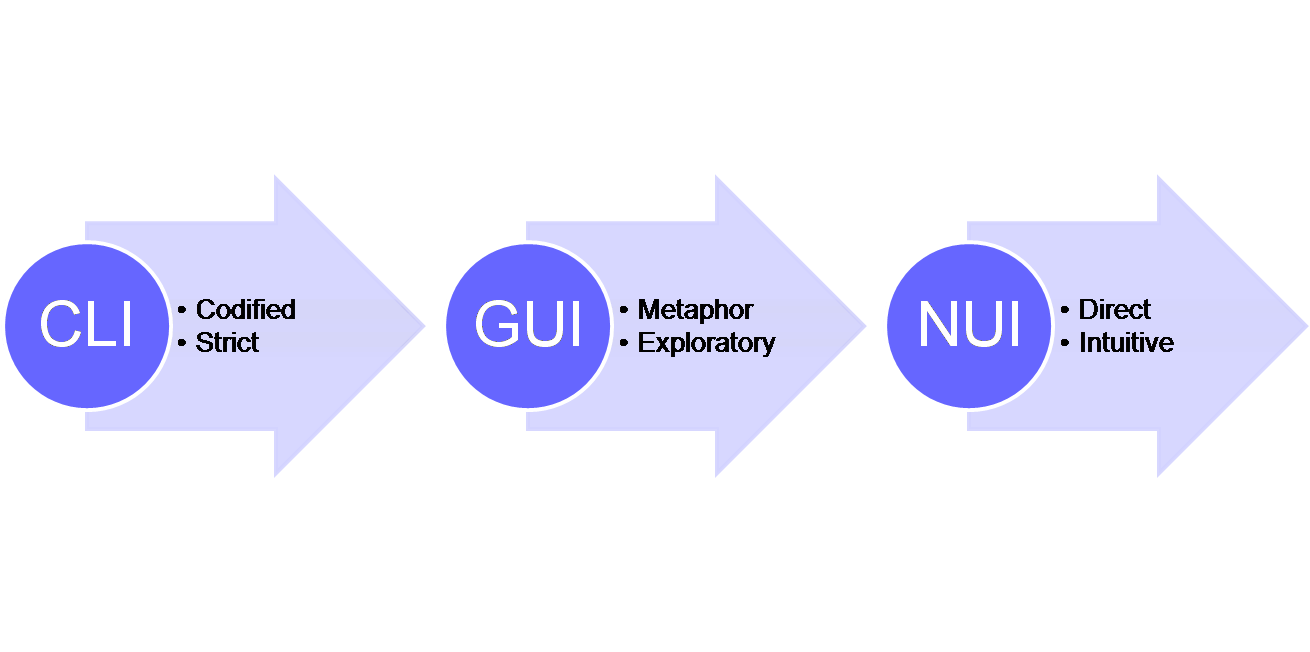

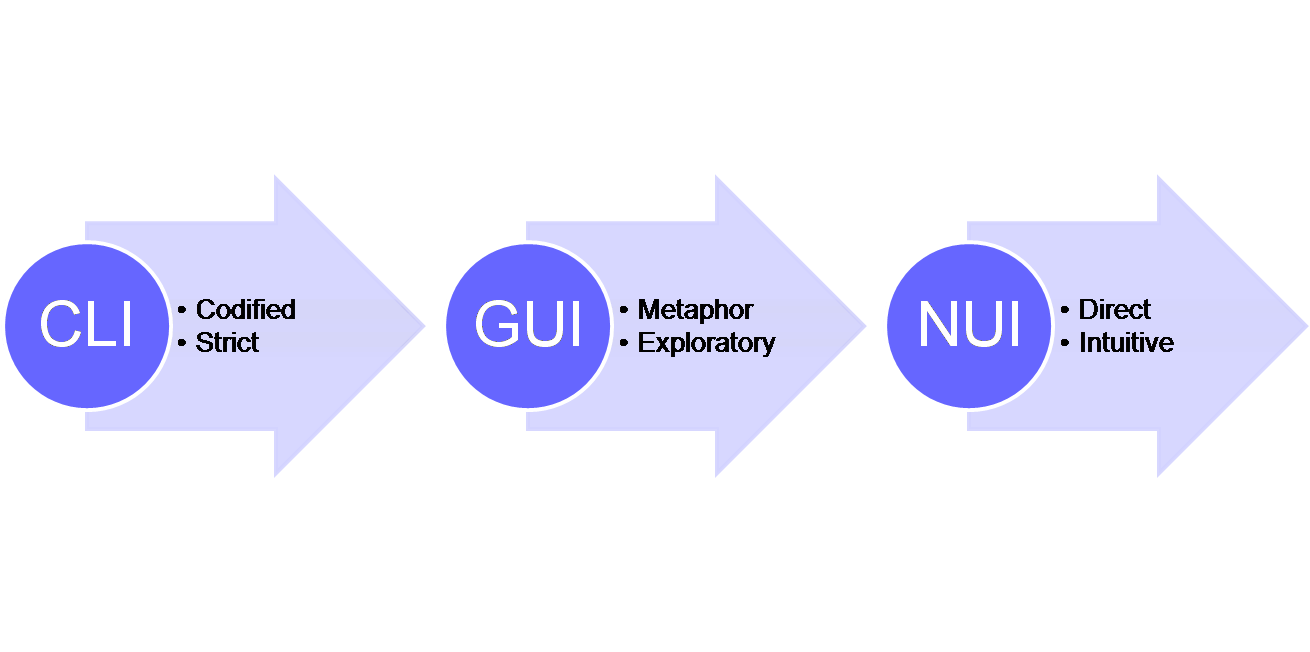

In the CLI, users had to learn an artificial means of input, the keyboard, and a series of codified inputs with strict syntax and a limited range of responses.

Then, when the mouse enabled the GUI, users could more easily learn the mouse movements and actions, and were able to explore the interface much more. The GUI relied on metaphors for interacting with on-screen content or objects. The 'desktop' and 'drag', for example, are metaphors for a visual interface that was ultimately translated back into the strict codified language of the computer.

An example of the misunderstanding of the term NUI was demonstrated at the Consumer Electronics Show in 2010. "Now a new wave of products is poised to bring natural user interfaces, as these methods of controlling electronics devices are called, to an even broader audience."

In 2010, Microsoft's Bill Buxton reiterated the importance of the NUI within Microsoft Corporation with a video discussing technologies which could be used in creating a NUI, and its future potential.

In 2010, Daniel Wigdor and Dennis Wixon provided an operationalization of building natural user interfaces in their book Brave NUI World. In it, they carefully distinguish between natural user interfaces, the technologies used to achieve them, and reality-based UI.

Types of interface

Multi-touch

When Bill Buxton was asked about the iPhone's interface, he responded "Multi-touch technologies have a long history. To put it in perspective, the original work undertaken by my team was done in 1984, the same year that the first Macintosh computer was released, and we were not the first." Multi-touch is a technology which could enable a natural user interface. Applications of animation creation with multi-touch systems have been demonstrated. However, most UI toolkits used to construct interfaces executed with such technology are traditional GUIs.

Gesture control

Hand gestures present a natural way of interacting with computer systems, especially those that involve 3D information, such as manipulating virtual reality or augmented reality objects, because hand gestures are themselves three-dimensional movements. Such hand gestures can be extracted from images collected by color or RGB-D cameras, or captured with sensor gloves. Since hand gestures from such input sources are usually noisy, solutions based on artificial intelligence have been presented to remove the sensor noise, allowing more effective control. Moreover, to tackle the computational delay in real-time applications, short-term prediction of hand gestures has enabled an improved level of interactivity.
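The two ideas in the passage above, removing sensor noise and predicting a short time ahead to hide latency, can be illustrated with a deliberately simple sketch. It does not use the AI-based methods the text refers to: it swaps in an exponential moving average for denoising and a constant-velocity extrapolation for prediction. The class name `HandTracker`, its parameters, and the sample values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, ...]  # an (x, y, z) hand position


@dataclass
class HandTracker:
    """Hypothetical helper: smooths noisy 3D hand positions and extrapolates ahead.

    This is a stand-in for the learned models mentioned in the text, not a
    reproduction of them.
    """
    alpha: float = 0.5                      # weight of the newest sample, 0 < alpha <= 1
    position: Optional[Point] = None        # current smoothed position
    velocity: Point = (0.0, 0.0, 0.0)       # estimated velocity of the smoothed position

    def update(self, sample: Point, dt: float) -> Point:
        """Blend a new noisy sample into the smoothed estimate (exponential moving average)."""
        if self.position is None:
            # First sample: nothing to smooth against yet.
            self.position = sample
            return sample
        smoothed = tuple(
            self.alpha * s + (1.0 - self.alpha) * p
            for s, p in zip(sample, self.position)
        )
        # Velocity is estimated from the change in the smoothed position over dt seconds.
        self.velocity = tuple((n - p) / dt for n, p in zip(smoothed, self.position))
        self.position = smoothed
        return smoothed

    def predict(self, lookahead: float) -> Point:
        """Extrapolate the smoothed position `lookahead` seconds ahead at constant velocity."""
        if self.position is None:
            raise ValueError("no samples received yet")
        return tuple(p + v * lookahead for p, v in zip(self.position, self.velocity))


if __name__ == "__main__":
    tracker = HandTracker(alpha=0.5)
    tracker.update((0.0, 0.0, 0.0), dt=0.1)
    print(tracker.update((1.0, 0.0, 0.0), dt=0.1))  # smoothed position
    print(tracker.predict(lookahead=0.1))           # position expected one frame later
```

A smaller `alpha` suppresses more jitter but makes the smoothed position lag further behind the hand, which is one reason a prediction step is useful alongside the filter.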

NUI software and hardware platforms

Perceptive Pixel

One example is the work done by Jefferson Han on multi-touch interfaces. In a demonstration at TED in 2006, he showed a variety of means of interacting with on-screen content using both direct manipulations and gestures. For example, to shape an on-screen glutinous mass, Han literally 'pinches' and prods and pokes it with his fingers. In a GUI for a design application, by contrast, a user would use the metaphor of 'tools' to do this, for example by selecting a prod tool, or by selecting two parts of the mass to which they then wanted to apply a 'pinch' action. Han showed that user interaction could be much more intuitive by doing away with the interaction devices that we are used to and replacing them with a screen capable of detecting a much wider range of human actions and gestures. Of course, this allows only for a very limited set of interactions which map neatly onto physical manipulation (RBI). Extending the capabilities of the software beyond physical actions requires significantly more design work.

Microsoft PixelSense

Microsoft PixelSense takes similar ideas on how users interact with content, but adds the ability for the device to optically recognize objects placed on top of it. In this way, users can trigger actions on the computer through the same gestures and motions as Jeff Han's touchscreen allowed, but objects also become a part of the control mechanisms. For example, when a user places a wine glass on the table, the computer recognizes it as such and displays content associated with that wine glass. Placing a wine glass on a table maps well onto actions taken with wine glasses and other tables, and thus maps well onto reality-based interfaces. Thus, it could be seen as an entrée to an NUI experience.

3D Immersive Touch

"3D Immersive Touch" is defined as the direct manipulation of 3D virtual environment objects using single or multi-touch surface hardware in multi-user 3D virtual environments. The term was first coined in 2007 to describe and define the 3D natural user interface learning principles associated with Edusim. The Immersive Touch natural user interface now appears to be taking on a broader focus and meaning with the broader adoption of surface and touch-driven hardware such as the iPhone, iPod touch, iPad, and a growing list of other hardware. Apple also seems to have taken a keen interest in "Immersive Touch" 3D natural user interfaces over the past few years. This work builds atop the broad academic base which has studied 3D manipulation in virtual reality environments.

Xbox Kinect

Kinect is a motion sensing input device by Microsoft for the Xbox 360 video game console and Windows PCs that uses spatial gestures for interaction instead of a game controller. According to Microsoft's page, Kinect is designed for "a revolutionary new way to play: no controller required." Again, because Kinect allows the sensing of the physical world, it shows potential for RBI designs, and thus potentially also for NUI.

See also

* Edusim

* Eye tracking

* Kinetic user interface

* Organic user interface

* Virtual assistant

* Post-WIMP

* Scratch input

* Spatial navigation

* Tangible user interface

* Touch user interface

References

* http://blogs.msdn.com/surface/archive/2009/02/25/what-is-nui.aspx

* https://www.amazon.com/Brave-NUI-World-Designing-Interfaces/dp/0123822319/ref=sr_1_1?ie=UTF8&qid=1329478543&sr=8-1 The book Brave NUI World from the creators of Microsoft Surface's NUI