The method of least squares is a mathematical optimization technique that aims to determine the best fit function by minimizing the sum of the squares of the differences between the observed values and the predicted values of the model. The method is widely used in areas such as regression analysis, curve fitting and data modeling. The least squares method can be categorized into linear and nonlinear forms, depending on the relationship between the model parameters and the observed data. The method was first proposed by Adrien-Marie Legendre in 1805 and further developed by Carl Friedrich Gauss.

History

Founding

The method of least squares grew out of the fields of astronomy and geodesy, as scientists and mathematicians sought to provide solutions to the challenges of navigating the Earth's oceans during the Age of Discovery. The accurate description of the behavior of celestial bodies was the key to enabling ships to sail in open seas, where sailors could no longer rely on land sightings for navigation.

The method was the culmination of several advances that took place during the course of the eighteenth century:

*The idea that a combination of different observations is the best estimate of the true value, with errors decreasing rather than increasing under aggregation, first appeared in Isaac Newton's work in 1671, though it went unpublished, and again in 1700. It was perhaps first expressed formally by Roger Cotes in 1722.

*The combination of different observations taken under the ''same'' conditions, as opposed to simply trying one's best to observe and record a single observation accurately. The approach was known as the method of averages. It was notably used by Newton while studying equinoxes in 1700 (he also wrote down the first of the 'normal equations' known from ordinary least squares), by Tobias Mayer while studying the librations of the Moon in 1750, and by Pierre-Simon Laplace in his work explaining the differences in motion of Jupiter and Saturn in 1788.

*The combination of different observations taken under ''different'' conditions. The method came to be known as the method of ''least absolute deviation''. It was notably performed by Roger Joseph Boscovich in his work on the shape of the Earth in 1757 and by Pierre-Simon Laplace for the same problem in 1789 and 1799.

*The development of a criterion that can be evaluated to determine when the solution with the minimum error has been achieved. Laplace tried to specify a mathematical form of the probability density for the errors and define a method of estimation that minimizes the error of estimation. For this purpose, Laplace used a symmetric two-sided exponential distribution, now called the Laplace distribution, to model the error distribution, and used the sum of absolute deviations as the error of estimation. He felt these to be the simplest assumptions he could make, and he had hoped to obtain the arithmetic mean as the best estimate. Instead, his estimator was the posterior median.

The method

The first clear and concise exposition of the method of least squares was published by Legendre in 1805. The technique is described as an algebraic procedure for fitting linear equations to data, and Legendre demonstrates the new method by analyzing the same data as Laplace for the shape of the Earth. Within ten years after Legendre's publication, the method of least squares had been adopted as a standard tool in astronomy and geodesy in France, Italy, and Prussia, which constitutes an extraordinarily rapid acceptance of a scientific technique.

In 1809 Carl Friedrich Gauss published his method of calculating the orbits of celestial bodies. In that work he claimed to have been in possession of the method of least squares since 1795. This naturally led to a priority dispute with Legendre. However, to Gauss's credit, he went beyond Legendre and succeeded in connecting the method of least squares with the principles of probability and with the normal distribution. He had managed to complete Laplace's program of specifying a mathematical form of the probability density for the observations, depending on a finite number of unknown parameters, and define a method of estimation that minimizes the error of estimation. Gauss showed that the arithmetic mean is indeed the best estimate of the location parameter by changing both the probability density and the method of estimation. He then turned the problem around by asking what form the density should have and what method of estimation should be used to get the arithmetic mean as the estimate of the location parameter. In this attempt, he invented the normal distribution.

An early demonstration of the strength of Gauss's method came when it was used to predict the future location of the newly discovered asteroid Ceres. On 1 January 1801, the Italian astronomer Giuseppe Piazzi discovered Ceres and was able to track its path for 40 days before it was lost in the glare of the Sun. Based on these data, astronomers desired to determine the location of Ceres after it emerged from behind the Sun without solving Kepler's complicated nonlinear equations of planetary motion. The only predictions that successfully allowed Hungarian astronomer Franz Xaver von Zach to relocate Ceres were those performed by the 24-year-old Gauss using least-squares analysis.

In 1810, after reading Gauss's work and proving the central limit theorem, Laplace used that theorem to give a large-sample justification for the method of least squares and the normal distribution. In 1822, Gauss was able to state that the least-squares approach to regression analysis is optimal in the sense that in a linear model where the errors have a mean of zero, are uncorrelated, normally distributed, and have equal variances, the best linear unbiased estimator of the coefficients is the least-squares estimator. An extended version of this result is known as the Gauss–Markov theorem.

The idea of least-squares analysis was also independently formulated by the American Robert Adrain in 1808. In the next two centuries workers in the theory of errors and in statistics found many different ways of implementing least squares.

Problem statement

The objective consists of adjusting the parameters of a model function to best fit a data set. A simple data set consists of ''n'' points (data pairs) $(x_i, y_i)$, ''i'' = 1, …, ''n'', where $x_i$ is an independent variable and $y_i$ is a dependent variable whose value is found by observation. The model function has the form $f(x, \boldsymbol\beta)$, where ''m'' adjustable parameters are held in the vector $\boldsymbol\beta$. The goal is to find the parameter values for the model that "best" fits the data. The fit of a model to a data point is measured by its residual, defined as the difference between the observed value of the dependent variable and the value predicted by the model:

$$r_i = y_i - f(x_i, \boldsymbol\beta).$$

The least-squares method finds the optimal parameter values by minimizing the sum of squared residuals, $S$:

$$S = \sum_{i=1}^{n} r_i^2.$$

In the simplest case $f(x_i, \boldsymbol\beta) = \beta$ and the result of the least-squares method is the arithmetic mean of the input data.
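As a brief check of this claim, assume the constant model $f(x_i, \beta) = \beta$, where $\beta$ is the notation assumed here for the single parameter and $y_i$ denotes the observed values. Setting the derivative of the sum of squares to zero then gives the mean directly:

$$\frac{dS}{d\beta} = \frac{d}{d\beta}\sum_{i=1}^{n} (y_i - \beta)^2 = -2\sum_{i=1}^{n} (y_i - \beta) = 0 \quad\Longrightarrow\quad \hat\beta = \frac{1}{n}\sum_{i=1}^{n} y_i.$$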

An example of a model in two dimensions is that of the straight line. Denoting the y-intercept as $\beta_0$ and the slope as $\beta_1$, the model function is given by $f(x, \boldsymbol\beta) = \beta_0 + \beta_1 x$. See linear least squares for a fully worked out example of this model.

A data point may consist of more than one independent variable. For example, when fitting a plane to a set of height measurements, the plane is a function of two independent variables, ''x'' and ''z'', say. In the most general case there may be one or more independent variables and one or more dependent variables at each data point.

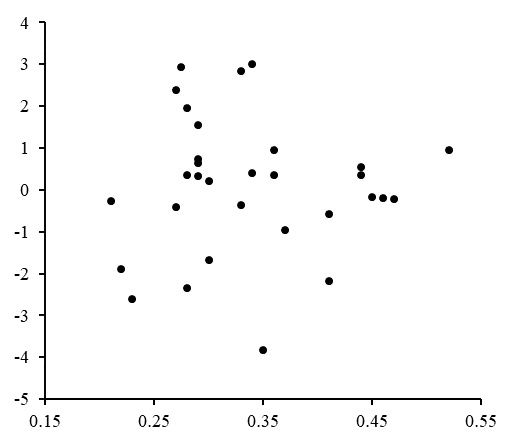

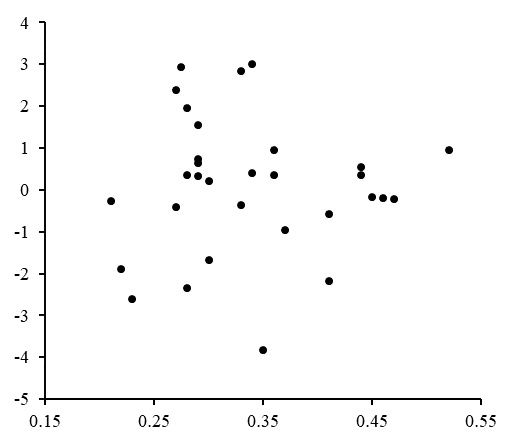

To the right is a residual plot illustrating random fluctuations about $r_i = 0$, indicating that a linear model $(Y_i = \beta_0 + \beta_1 x_i + U_i)$ is appropriate. $U_i$ is an independent, random variable.

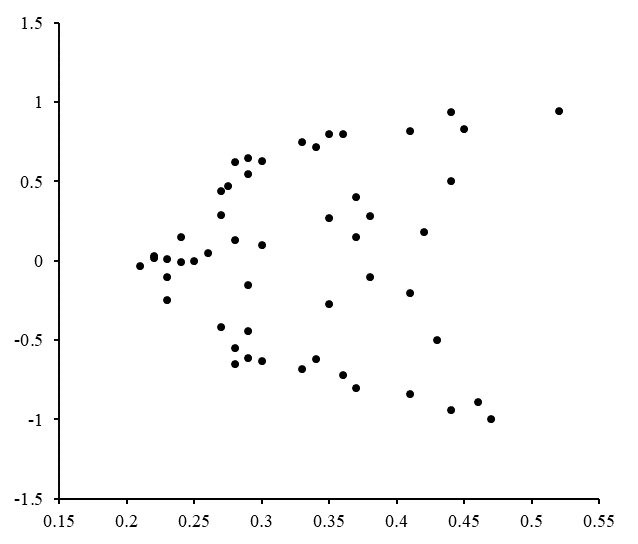

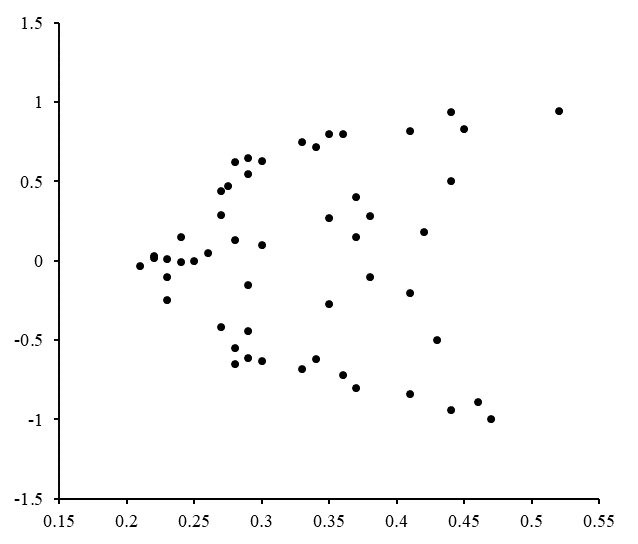

If the residual points had some sort of a shape and were not randomly fluctuating, a linear model would not be appropriate. For example, if the residual plot had a parabolic shape as seen to the right, a parabolic model $(Y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + U_i)$ would be appropriate for the data. The residuals for a parabolic model can be calculated via $r_i = y_i - \hat\beta_0 - \hat\beta_1 x_i - \hat\beta_2 x_i^2$.

Limitations

This regression formulation considers only observational errors in the dependent variable (but the alternative total least squares regression can account for errors in both variables). There are two rather different contexts with different implications:

*Regression for prediction. Here a model is fitted to provide a prediction rule for application in a situation similar to the one from which the fitting data were obtained. The dependent variables in such a future application would be subject to the same types of observation error as those in the data used for fitting. It is therefore logically consistent to use the least-squares prediction rule for such data.

*Regression for fitting a "true relationship". In standard regression analysis that leads to fitting by least squares there is an implicit assumption that errors in the independent variable are zero or strictly controlled so as to be negligible. When errors in the independent variable are non-negligible, models of measurement error can be used; such methods can lead to parameter estimates, hypothesis tests and confidence intervals that take into account the presence of observation errors in the independent variables. An alternative approach is to fit a model by total least squares; this can be viewed as taking a pragmatic approach to balancing the effects of the different sources of error in formulating an objective function for use in model-fitting.

Solving the least squares problem

The minimum of the sum of squares is found by setting the gradient to zero. Since the model contains ''m'' parameters, there are ''m'' gradient equations:

$$\frac{\partial S}{\partial \beta_j} = 2\sum_{i} r_i \frac{\partial r_i}{\partial \beta_j} = 0, \qquad j = 1, \ldots, m,$$

and since $r_i = y_i - f(x_i, \boldsymbol\beta)$, the gradient equations become

$$-2\sum_{i} r_i \frac{\partial f(x_i, \boldsymbol\beta)}{\partial \beta_j} = 0, \qquad j = 1, \ldots, m.$$

The gradient equations apply to all least squares problems. Each particular problem requires particular expressions for the model and its partial derivatives.

Linear least squares

A regression model is a linear one when the model comprises a linear combination of the parameters, i.e.,

$$f(x, \boldsymbol\beta) = \sum_{j=1}^{m} \beta_j \phi_j(x),$$

where the function $\phi_j$ is a function of $x$.

Letting $X_{ij} = \phi_j(x_i)$ and putting the independent and dependent variables in matrices $X$ and $Y$, respectively, we can compute the least squares in the following way. Note that $D$ is the set of all data.

$$L(D, \boldsymbol\beta) = \left\| Y - X\boldsymbol\beta \right\|^2 = (Y - X\boldsymbol\beta)^{\mathsf T}(Y - X\boldsymbol\beta) = Y^{\mathsf T}Y - 2\,Y^{\mathsf T}X\boldsymbol\beta + \boldsymbol\beta^{\mathsf T}X^{\mathsf T}X\boldsymbol\beta$$

The gradient of the loss is:

$$\frac{\partial L(D, \boldsymbol\beta)}{\partial \boldsymbol\beta} = -2\,X^{\mathsf T}Y + 2\,X^{\mathsf T}X\boldsymbol\beta$$

Setting the gradient of the loss to zero and solving for $\boldsymbol\beta$, we get:

$$\hat{\boldsymbol\beta} = \left(X^{\mathsf T}X\right)^{-1}X^{\mathsf T}Y$$
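The closed-form solution can be sketched numerically. The following is a minimal illustration rather than a reference implementation: it assumes NumPy is available, uses made-up data that roughly follow a straight line, and picks the basis functions 1 and x for the design matrix. All variable names are hypothetical.

```python
import numpy as np

# Hypothetical data: y is roughly 1 + 2x with small perturbations.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 6.8, 9.1])

# Design matrix with assumed basis functions phi_1(x) = 1 and phi_2(x) = x.
X = np.column_stack([np.ones_like(x), x])

# Solve the normal equations (X^T X) beta = X^T Y.
# np.linalg.solve avoids forming the explicit inverse of X^T X.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Cross-check against NumPy's general least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# The gradient -2 X^T Y + 2 X^T X beta should vanish at the solution.
gradient = -2 * X.T @ y + 2 * X.T @ X @ beta_normal

print("normal equations:", beta_normal)
print("lstsq:           ", beta_lstsq)
print("gradient at fit: ", gradient)
```

Solving the linear system is generally preferred over computing the matrix inverse, and for ill-conditioned design matrices a QR- or SVD-based solver such as `np.linalg.lstsq` tends to be more robust than the normal equations.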

Non-linear least squares

There is, in some cases, a closed-form solution to a non-linear least squares problem, but in general there is not. In the case of no closed-form solution, numerical algorithms are used to find the value of the parameters $\boldsymbol\beta$ that minimizes the objective. Most algorithms involve choosing initial values for the parameters. Then, the parameters are refined iteratively, that is, the values are obtained by successive approximation:

$$\beta_j^{k+1} = \beta_j^{k} + \Delta\beta_j,$$

where a superscript ''k'' is an iteration number, and the vector of increments $\Delta\boldsymbol\beta$ is called the shift vector. In some commonly used algorithms, at each iteration the model may be linearized by approximation to a first-order Taylor series expansion about $\boldsymbol\beta^{k}$:

$$f(x_i, \boldsymbol\beta) \approx f(x_i, \boldsymbol\beta^{k}) + \sum_{j=1}^{m} \frac{\partial f(x_i, \boldsymbol\beta^{k})}{\partial \beta_j}\left(\beta_j - \beta_j^{k}\right) = f(x_i, \boldsymbol\beta^{k}) + \sum_{j=1}^{m} J_{ij}\,\Delta\beta_j.$$

The Jacobian J is a function of constants, the independent variable ''and'' the parameters, so it changes from one iteration to the next. The residuals are given by

$$r_i = y_i - f(x_i, \boldsymbol\beta^{k}) - \sum_{j=1}^{m} J_{ij}\,\Delta\beta_j = \Delta y_i - \sum_{j=1}^{m} J_{ij}\,\Delta\beta_j, \qquad \Delta y_i = y_i - f(x_i, \boldsymbol\beta^{k}).$$

To minimize the sum of squares of $r_i$, the gradient equation is set to zero and solved for $\Delta\beta_j$:

$$-2\sum_{i=1}^{n} J_{ij}\left(\Delta y_i - \sum_{\ell=1}^{m} J_{i\ell}\,\Delta\beta_\ell\right) = 0,$$

which, on rearrangement, become ''m'' simultaneous linear equations, the normal equations:

$$\sum_{i=1}^{n}\sum_{\ell=1}^{m} J_{ij} J_{i\ell}\,\Delta\beta_\ell = \sum_{i=1}^{n} J_{ij}\,\Delta y_i \qquad (j = 1, \ldots, m).$$

The normal equations are written in matrix notation as

$$\left(\mathbf{J}^{\mathsf T}\mathbf{J}\right)\Delta\boldsymbol\beta = \mathbf{J}^{\mathsf T}\Delta\mathbf{y}.$$

These are the defining equations of the Gauss–Newton algorithm.
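The iteration can be sketched in a few lines. This is a bare illustration under stated assumptions, not a production solver: the exponential model, the noise-free synthetic data, the starting values, and the function names `model`, `jacobian` and `gauss_newton` are all hypothetical choices made here, and no step-size control or damping is included.

```python
import numpy as np

def model(x, beta):
    # Assumed example model: f(x, beta) = beta_1 * exp(beta_2 * x).
    return beta[0] * np.exp(beta[1] * x)

def jacobian(x, beta):
    # J_ij = d f(x_i, beta) / d beta_j, evaluated at the current beta.
    e = np.exp(beta[1] * x)
    return np.column_stack([e, beta[0] * x * e])

def gauss_newton(x, y, beta0, max_iter=50, tol=1e-12):
    beta = np.asarray(beta0, dtype=float)
    for _ in range(max_iter):
        delta_y = y - model(x, beta)   # Delta y at the current iterate
        J = jacobian(x, beta)
        # Normal equations (J^T J) shift = J^T Delta y for the shift vector.
        shift = np.linalg.solve(J.T @ J, J.T @ delta_y)
        beta = beta + shift
        if np.linalg.norm(shift) < tol:
            break
    return beta

# Synthetic, noise-free data generated from known parameters.
x = np.linspace(0.0, 2.0, 9)
true_beta = np.array([2.0, -1.0])
y = model(x, true_beta)

beta_hat = gauss_newton(x, y, beta0=[1.5, -0.8])
print(beta_hat)
```

The plain iteration can diverge from a poor starting point, which is why practical solvers usually add damping or a trust region (for example, the Levenberg–Marquardt modification).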

Differences between linear and nonlinear least squares

* The model function, ''f'', in LLSQ (linear least squares) is a linear combination of parameters of the form $f = X_{i1}\beta_1 + X_{i2}\beta_2 + \cdots$. The model may represent a straight line, a parabola or any other linear combination of functions. In NLLSQ (nonlinear least squares) the parameters appear as functions, such as $\beta^2$, $e^{\beta x}$ and so forth. If the derivatives $\partial f / \partial \beta_j$ are either constant or depend only on the values of the independent variable, the model is linear in the parameters. Otherwise, the model is nonlinear.

*NLLSQ requires initial values for the parameters in order to find a solution; LLSQ does not.

*Solution algorithms for NLLSQ often require that the Jacobian can be calculated, similar to LLSQ. Analytical expressions for the partial derivatives can be complicated. If analytical expressions are impossible to obtain, either the partial derivatives must be calculated by numerical approximation or an estimate must be made of the Jacobian, often via finite differences.

*Non-convergence (failure of the algorithm to find a minimum) is a common phenomenon in NLLSQ.

*The LLSQ objective function is globally convex, so non-convergence is not an issue.

*Solving NLLSQ is usually an iterative process which has to be terminated when a convergence criterion is satisfied. LLSQ solutions can be computed using direct methods, although problems with large numbers of parameters are typically solved with iterative methods, such as the Gauss–Seidel method.

*In LLSQ the solution is unique (provided the design matrix has full column rank), but in NLLSQ there may be multiple minima in the sum of squares.

*Under the condition that the errors are uncorrelated with the predictor variables, LLSQ yields unbiased estimates, but even under that condition NLLSQ estimates are generally biased.

These differences must be considered whenever the solution to a nonlinear least squares problem is being sought.

Example

Consider a simple example drawn from physics. A spring should obey Hooke's law, which states that the extension of a spring, ''y'', is proportional to the force, ''F'', applied to it.

$$y = f(F, k) = kF$$

constitutes the model, where ''F'' is the independent variable. In order to estimate the force constant, ''k'', we conduct a series of ''n'' measurements with different forces to produce a set of data, $(F_i, y_i),\ i = 1, \ldots, n$, where $y_i$ is a measured spring extension. Each experimental observation will contain some error, $\varepsilon$, and so we may specify an empirical model for our observations,

$$y_i = kF_i + \varepsilon_i.$$

There are many methods we might use to estimate the unknown parameter ''k''. Since the ''n'' equations in our data form an overdetermined system with one unknown and ''n'' equations, we estimate ''k'' using least squares. The sum of squares to be minimized is

$$S = \sum_{i=1}^{n} \left(y_i - kF_i\right)^2.$$

The least squares estimate of the force constant, ''k'', is given by

$$\hat k = \frac{\sum_i F_i y_i}{\sum_i F_i^2}.$$

We assume that applying force ''causes'' the spring to expand. After having derived the force constant by least squares fitting, we predict the extension from Hooke's law.
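The estimate can be computed directly from measurements. The sketch below assumes NumPy and uses invented force and extension values purely for illustration; the numbers and variable names are hypothetical and do not come from any real experiment.

```python
import numpy as np

# Hypothetical measurements: applied forces F_i and measured extensions y_i.
F = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([0.52, 0.98, 1.55, 2.01, 2.47])

# Least-squares estimate of the force constant: sum(F_i * y_i) / sum(F_i^2).
k_hat = np.sum(F * y) / np.sum(F ** 2)

def sum_of_squares(k):
    # Objective S(k) = sum_i (y_i - k * F_i)^2 for the one-parameter model.
    return np.sum((y - k * F) ** 2)

# Predicted extensions from Hooke's law with the fitted constant.
predicted = k_hat * F

print("k_hat =", k_hat)
print("S(k_hat) =", sum_of_squares(k_hat))
```

Evaluating `sum_of_squares` at values slightly above or below `k_hat` gives a larger result, which is a quick way to confirm that the closed-form estimate sits at the minimum.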

Uncertainty quantification

In a least squares calculation with unit weights, or in linear regression, the variance on the ''j''th parameter, denoted $\operatorname{var}(\hat\beta_j)$, is usually estimated with

$$\operatorname{var}(\hat\beta_j) = \sigma^2 \left(\left[X^{\mathsf T}X\right]^{-1}\right)_{jj} \approx \hat\sigma^2 C_{jj},$$

$$\hat\sigma^2 \approx \frac{S}{n - m},$$

$$C = \left(X^{\mathsf T}X\right)^{-1},$$

where the true error variance $\sigma^2$ is replaced by an estimate, the reduced chi-squared statistic, based on the minimized value of the residual sum of squares (objective function), ''S''. The denominator, ''n'' − ''m'', is the statistical degrees of freedom; see effective degrees of freedom for generalizations. ''C'' is the covariance matrix.
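These quantities can be computed in a few lines for a straight-line fit. The sketch below assumes NumPy, unit weights, and invented data; the variable names are hypothetical, and the explicit inverse is formed only because the diagonal of ''C'' is needed.

```python
import numpy as np

# Hypothetical data for a straight-line fit with unit weights.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([0.9, 3.1, 5.0, 7.2, 8.9, 11.1])

X = np.column_stack([np.ones_like(x), x])  # columns: intercept, slope
n, m = X.shape                             # n observations, m parameters

# C = (X^T X)^{-1}; its diagonal scales the parameter variances.
C = np.linalg.inv(X.T @ X)
beta_hat = C @ X.T @ y

# Minimized residual sum of squares S and the variance estimate S / (n - m).
residuals = y - X @ beta_hat
S = residuals @ residuals
sigma2_hat = S / (n - m)

# Estimated variance and standard error of each parameter.
var_beta = sigma2_hat * np.diag(C)
std_err = np.sqrt(var_beta)

print("beta_hat:", beta_hat)
print("std_err: ", std_err)
```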

Statistical testing

If the probability distribution of the parameters is known or an asymptotic approximation is made, confidence limits can be found. Similarly, statistical tests on the residuals can be conducted if the probability distribution of the residuals is known or assumed. We can derive the probability distribution of any linear combination of the dependent variables if the probability distribution of experimental errors is known or assumed. Inference is straightforward when the errors are assumed to follow a normal distribution, which implies that the parameter estimates and residuals will also be normally distributed conditional on the values of the independent variables.

It is necessary to make assumptions about the nature of the experimental errors to test the results statistically. A common assumption is that the errors belong to a normal distribution. The central limit theorem supports the idea that this is a good approximation in many cases.

* The Gauss–Markov theorem. In a linear model in which the errors have expectation zero conditional on the independent variables, are uncorrelated and have equal variances, the best linear unbiased estimator of any linear combination of the observations is its least-squares estimator. "Best" means that the least squares estimators of the parameters have minimum variance. The assumption of equal variance is valid when the errors all belong to the same distribution.

*If the errors belong to a normal distribution, the least-squares estimators are also the maximum likelihood estimators in a linear model.

However, suppose the errors are not normally distributed. In that case, a central limit theorem often nonetheless implies that the parameter estimates will be approximately normally distributed so long as the sample is reasonably large. For this reason, given the important property that the error mean is independent of the independent variables, the distribution of the error term is not an important issue in regression analysis. Specifically, it is not typically important whether the error term follows a normal distribution.

Weighted least squares

A special case of generalized least squares called weighted least squares occurs when all the off-diagonal entries of Ω (the correlation matrix of the residuals) are null; the variances of the observations (along the covariance matrix diagonal) may still be unequal (heteroscedasticity). In simpler terms, heteroscedasticity is when the variance of $Y_i$ depends on the value of $x_i$, which causes the residual plot to create a "fanning out" effect towards larger $Y_i$ values as seen in the residual plot to the right. On the other hand, homoscedasticity is assuming that the variance of $Y_i$ and variance of $U_i$ are equal.

Relationship to principal components

The first principal component about the mean of a set of points can be represented by that line which most closely approaches the data points (as measured by squared distance of closest approach, i.e. perpendicular to the line). In contrast, linear least squares tries to minimize the distance in the $y$ direction only. Thus, although the two use a similar error metric, linear least squares is a method that treats one dimension of the data preferentially, while PCA treats all dimensions equally.

Relationship to measure theory

Notable statistician Sara van de Geer used empirical process theory and the Vapnik–Chervonenkis dimension to prove that a least-squares estimator can be interpreted as a measure on the space of square-integrable functions.

Regularization

Tikhonov regularization

In some contexts, a regularized version of the least squares solution may be preferable. Tikhonov regularization (or ridge regression) adds a constraint that $\|\beta\|_2^2$, the squared $\ell_2$-norm of the parameter vector $\beta$, is not greater than a given value to the least squares formulation, leading to a constrained minimization problem. This is equivalent to the unconstrained minimization problem in which the objective function is the residual sum of squares plus a penalty term $\alpha\|\beta\|_2^2$, where $\alpha$ is a tuning parameter (this is the Lagrangian form of the constrained minimization problem). In a Bayesian context, this is equivalent to placing a zero-mean normally distributed prior on the parameter vector.
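For a linear model, the penalised form has a closed-form minimiser, $\hat\beta = (X^{\mathsf T}X + \alpha I)^{-1}X^{\mathsf T}y$. The following is a minimal sketch of that formula, assuming a design matrix `X` without an intercept column and synthetic data; the function name `ridge_fit`, the data and the value of `alpha` are chosen purely for illustration.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Minimise ||y - X b||^2 + alpha * ||b||_2^2 for a linear model."""
    n_features = X.shape[1]
    # Normal equations with the penalty added to the diagonal.
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (assumed for the example).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
beta_true = np.array([1.5, -2.0, 0.5])
y = X @ beta_true + 0.1 * rng.normal(size=50)

beta_ols = ridge_fit(X, y, alpha=0.0)     # no penalty: ordinary least squares
beta_ridge = ridge_fit(X, y, alpha=10.0)  # penalised: coefficients shrink

print("OLS coefficients:  ", beta_ols)
print("ridge coefficients:", beta_ridge)
```

With `alpha=0.0` the function reduces to ordinary least squares; increasing `alpha` shrinks the norm of the estimated parameter vector.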

Lasso method

An alternative regularized version of least squares is Lasso (least absolute shrinkage and selection operator), which uses the constraint that $\|\beta\|_1$, the L1-norm of the parameter vector, is no greater than a given value. (One can show, as above, using Lagrange multipliers that this is equivalent to an unconstrained minimization of the least-squares penalty with the term $\alpha\|\beta\|_1$ added, where $\alpha$ is the tuning parameter.) In a Bayesian context, this is equivalent to placing a zero-mean Laplace prior distribution on the parameter vector. The optimization problem may be solved using quadratic programming or more general convex optimization methods, as well as by specific algorithms such as the least angle regression algorithm.
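As one illustration of a convex optimization approach, the sketch below applies proximal gradient descent (ISTA) to the unconstrained form $\|y - X\beta\|_2^2 + \alpha\|\beta\|_1$. It is not the least angle regression algorithm mentioned above; the function names, the synthetic data, the iteration count and the value of `alpha` are assumptions made for the example.

```python
import numpy as np

def soft_threshold(v, threshold):
    """Shrink each entry of v towards zero by `threshold`, clipping at zero."""
    return np.sign(v) * np.maximum(np.abs(v) - threshold, 0.0)

def lasso_ista(X, y, alpha, n_iter=500):
    """Minimise ||y - X b||^2 + alpha * ||b||_1 by proximal gradient steps."""
    # Step size 1/L, where L = 2 * sigma_max(X)^2 is the Lipschitz constant
    # of the gradient of the squared-error term.
    step = 1.0 / (2.0 * np.linalg.norm(X, 2) ** 2)
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * X.T @ (X @ beta - y)  # gradient of the squared error
        beta = soft_threshold(beta - step * grad, step * alpha)
    return beta

# Synthetic data with a sparse true parameter vector (assumed for the example).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
beta_true = np.array([3.0, 0.0, 0.0, -2.0, 0.0])
y = X @ beta_true + 0.1 * rng.normal(size=200)

beta_lasso = lasso_ista(X, y, alpha=50.0)
print("lasso coefficients:", beta_lasso)
```

The soft-thresholding step is what sets coefficients exactly to zero rather than merely making them small.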

One of the prime differences between Lasso and ridge regression is that in ridge regression, as the penalty is increased, all parameters are reduced while still remaining non-zero, while in Lasso, increasing the penalty will cause more and more of the parameters to be driven to zero. This is an advantage of Lasso over ridge regression, as driving parameters to zero deselects the features from the regression. Thus, Lasso automatically selects more relevant features and discards the others, whereas ridge regression never fully discards any features. Some feature selection techniques are developed based on the Lasso, including Bolasso, which bootstraps samples, and FeaLect, which analyzes the regression coefficients corresponding to different values of $\alpha$ to score all the features.
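The difference in behaviour is easiest to see in the special case of an orthonormal design ($X^{\mathsf T}X = I$), where both penalised problems have closed-form solutions in terms of the ordinary least squares estimate. The sketch below assumes the objectives $\|y - X\beta\|_2^2 + \alpha\|\beta\|_2^2$ and $\|y - X\beta\|_2^2 + \alpha\|\beta\|_1$; the function names and the example coefficients are hypothetical.

```python
import numpy as np

def ridge_orthonormal(beta_ols, alpha):
    """Ridge solution when X^T X = I: uniform shrinkage, never exactly zero."""
    return beta_ols / (1.0 + alpha)

def lasso_orthonormal(beta_ols, alpha):
    """Lasso solution when X^T X = I: soft-thresholding at alpha / 2."""
    return np.sign(beta_ols) * np.maximum(np.abs(beta_ols) - alpha / 2.0, 0.0)

# Hypothetical ordinary least squares estimates.
beta_ols = np.array([3.0, -1.0, 0.4, -0.1])

for alpha in (0.5, 1.0, 4.0):
    ridge = ridge_orthonormal(beta_ols, alpha)
    lasso = lasso_orthonormal(beta_ols, alpha)
    print(f"alpha={alpha}: ridge non-zero={np.count_nonzero(ridge)}, "
          f"lasso non-zero={np.count_nonzero(lasso)}")
```

Ridge rescales every coefficient by the same factor, so none reaches zero, whereas Lasso subtracts a fixed amount from each magnitude and zeroes any coefficient smaller than the threshold.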

The L1-regularized formulation is useful in some contexts due to its tendency to prefer solutions where more parameters are zero, which gives solutions that depend on fewer variables. For this reason, the Lasso and its variants are fundamental to the field of compressed sensing. An extension of this approach is elastic net regularization.

See also

* Least-squares adjustment

* Bayesian MMSE estimator

* Best linear unbiased estimator (BLUE)

* Best linear unbiased prediction (BLUP)

* Gauss–Markov theorem

* L2 norm

* Least absolute deviations

* Least-squares spectral analysis

* Measurement uncertainty

* Orthogonal projection

* Proximal gradient methods for learning

* Quadratic loss function

* Root mean square

* Squared deviations from the mean
