Information gain in decision trees on:

[Wikipedia]

[Google]

[Amazon]

In the context of

Now, it is clear that information gain is the measure of how much information a feature provides about a class. Let's visualize information gain in a decision tree as shown in the right:

The node ''t'' is the parent node, and the sub-nodes ''tL'' and ''tR'' are child nodes. In this case, the parent node ''t'' has a collection of cancer and non-cancer samples denoted as C and NC respectively. We can use information gain to determine how good the splitting of nodes is in a decision tree. In terms of entropy, information gain is defined as:

To understand this idea, let's start by an example in which we create a simple dataset and want to see if gene

Now, it is clear that information gain is the measure of how much information a feature provides about a class. Let's visualize information gain in a decision tree as shown in the right:

The node ''t'' is the parent node, and the sub-nodes ''tL'' and ''tR'' are child nodes. In this case, the parent node ''t'' has a collection of cancer and non-cancer samples denoted as C and NC respectively. We can use information gain to determine how good the splitting of nodes is in a decision tree. In terms of entropy, information gain is defined as:

To understand this idea, let's start by an example in which we create a simple dataset and want to see if gene  Using this same set of formulae with the other three mutations leads to a table of the candidate splits, ranked by their information gain:

The mutation that provides the most useful information would be Mutation 3, so that will be used to split the root node of the decision tree. The root can be split and all the samples can be passed though and appended to the child nodes. A tree describing the split is shown on the left.

The samples that are on the left node of the tree would be classified as cancerous by the tree, while those on the right would be non-cancerous. This tree is relatively accurate at classifying the samples that were used to build it (which is a case of

Using this same set of formulae with the other three mutations leads to a table of the candidate splits, ranked by their information gain:

The mutation that provides the most useful information would be Mutation 3, so that will be used to split the root node of the decision tree. The root can be split and all the samples can be passed though and appended to the child nodes. A tree describing the split is shown on the left.

The samples that are on the left node of the tree would be classified as cancerous by the tree, while those on the right would be non-cancerous. This tree is relatively accurate at classifying the samples that were used to build it (which is a case of  By following the tree, NC10 was classified correctly, but C15 was classified as NC. For other samples, this tree would not be 100% accurate anymore. It could be possible to improve this though, with options such as increasing the depth of the tree or increasing the size of the training set.

By following the tree, NC10 was classified correctly, but C15 was classified as NC. For other samples, this tree would not be 100% accurate anymore. It could be possible to improve this though, with options such as increasing the depth of the tree or increasing the size of the training set.

decision tree

A decision tree is a decision support system, decision support recursive partitioning structure that uses a Tree (graph theory), tree-like Causal model, model of decisions and their possible consequences, including probability, chance event ou ...

s in information theory

Information theory is the mathematical study of the quantification (science), quantification, Data storage, storage, and telecommunications, communication of information. The field was established and formalized by Claude Shannon in the 1940s, ...

and machine learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task ( ...

, information gain refers to the conditional expected value of the Kullback–Leibler divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution is diff ...

of the univariate probability distribution

In probability theory and statistics, a probability distribution is a Function (mathematics), function that gives the probabilities of occurrence of possible events for an Experiment (probability theory), experiment. It is a mathematical descri ...

of one variable from the conditional distribution

Conditional (if then) may refer to:

* Causal conditional, if X then Y, where X is a cause of Y

*Conditional probability, the probability of an event A given that another event B

* Conditional proof, in logic: a proof that asserts a conditional, ...

of this variable given the other one. (In broader contexts, ''information gain'' can also be used as a synonym for either Kullback–Leibler divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution is diff ...

or mutual information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual Statistical dependence, dependence between the two variables. More specifically, it quantifies the "Information conten ...

, but the focus of this article is on the more narrow meaning below.)

Explicitly, the ''information

Information is an Abstraction, abstract concept that refers to something which has the power Communication, to inform. At the most fundamental level, it pertains to the Interpretation (philosophy), interpretation (perhaps Interpretation (log ...

gain'' of a random variable

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a Mathematics, mathematical formalization of a quantity or object which depends on randomness, random events. The term 'random variable' in its mathema ...

obtained from an observation of a random variable taking value is defined as:

In other words, it is the Kullback–Leibler divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution is diff ...

of (the prior distribution

A prior probability distribution of an uncertain quantity, simply called the prior, is its assumed probability distribution before some evidence is taken into account. For example, the prior could be the probability distribution representing the ...

for ) from (the posterior distribution

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior ...

for given ).

The expected value

In probability theory, the expected value (also called expectation, expectancy, expectation operator, mathematical expectation, mean, expectation value, or first Moment (mathematics), moment) is a generalization of the weighted average. Informa ...

of the information gain is the mutual information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual Statistical dependence, dependence between the two variables. More specifically, it quantifies the "Information conten ...

:

i.e. the reduction in the entropy

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the micros ...

of achieved by learning the state of the random variable .

In machine learning, this concept can be used to define a preferred sequence of attributes to investigate to most rapidly narrow down the state of ''X''. Such a sequence (which depends on the outcome of the investigation of previous attributes at each stage) is called a decision tree

A decision tree is a decision support system, decision support recursive partitioning structure that uses a Tree (graph theory), tree-like Causal model, model of decisions and their possible consequences, including probability, chance event ou ...

, and when applied in the area of machine learning is known as decision tree learning

Decision tree learning is a supervised learning approach used in statistics, data mining and machine learning. In this formalism, a classification or regression decision tree is used as a predictive model to draw conclusions about a set of obser ...

. Usually an attribute with high mutual information should be preferred to other attributes.

General definition

In general terms, the expected information gain is the reduction ininformation entropy

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed ...

from a prior state to a state that takes some information as given:

:

where is the conditional entropy of given the value of attribute .

This is intuitively plausible when interpreting entropy as a measure of uncertainty of a random variable : by learning (or assuming) about , our uncertainty about is reduced (i.e. is positive), unless of course is independent of , in which case , meaning .

Formal definition

Let denote a set of training examples, each of the form where is the value of the attribute orfeature

Feature may refer to:

Computing

* Feature recognition, could be a hole, pocket, or notch

* Feature (computer vision), could be an edge, corner or blob

* Feature (machine learning), in statistics: individual measurable properties of the phenome ...

of example

Example may refer to:

* ''exempli gratia'' (e.g.), usually read out in English as "for example"

* .example, reserved as a domain name that may not be installed as a top-level domain of the Internet

** example.com, example.net, example.org, an ...

and is the corresponding class label. The information gain for an attribute is defined in terms of Shannon entropy

Shannon may refer to:

People

* Shannon (given name)

* Shannon (surname)

* Shannon (American singer), stage name of singer Brenda Shannon Greene (born 1958)

* Shannon (South Korean singer), British-South Korean singer and actress Shannon Arrum ...

as follows. For a value taken by attribute , let be defined as the set

Set, The Set, SET or SETS may refer to:

Science, technology, and mathematics Mathematics

*Set (mathematics), a collection of elements

*Category of sets, the category whose objects and morphisms are sets and total functions, respectively

Electro ...

of training inputs of for which attribute is equal to . Then the information gain of for attribute is the difference between the a priori Shannon entropy of the training set and the conditional entropy .

:

The mutual information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual Statistical dependence, dependence between the two variables. More specifically, it quantifies the "Information conten ...

is equal to the total entropy for an attribute if for each of the attribute values a unique classification

Classification is the activity of assigning objects to some pre-existing classes or categories. This is distinct from the task of establishing the classes themselves (for example through cluster analysis). Examples include diagnostic tests, identif ...

can be made for the result attribute. In this case, the relative entropies subtracted from the total entropy are 0. In particular, the values defines a partition of the training set data into mutually exclusive

In logic and probability theory, two events (or propositions) are mutually exclusive or disjoint if they cannot both occur at the same time. A clear example is the set of outcomes of a single coin toss, which can result in either heads or tails ...

and all-inclusive subset

In mathematics, a Set (mathematics), set ''A'' is a subset of a set ''B'' if all Element (mathematics), elements of ''A'' are also elements of ''B''; ''B'' is then a superset of ''A''. It is possible for ''A'' and ''B'' to be equal; if they a ...

s, inducing a categorical probability distribution on the values of attribute . The distribution is given . In this representation, the information gain of given can be defined as the difference between the unconditional Shannon entropy of and the expected entropy of conditioned on , where the expectation value

In probability theory, the expected value (also called expectation, expectancy, expectation operator, mathematical expectation, mean, expectation value, or first moment) is a generalization of the weighted average. Informally, the expected va ...

is taken with respect to the induced distribution on the values of .

Another Take on Information Gain, with Example

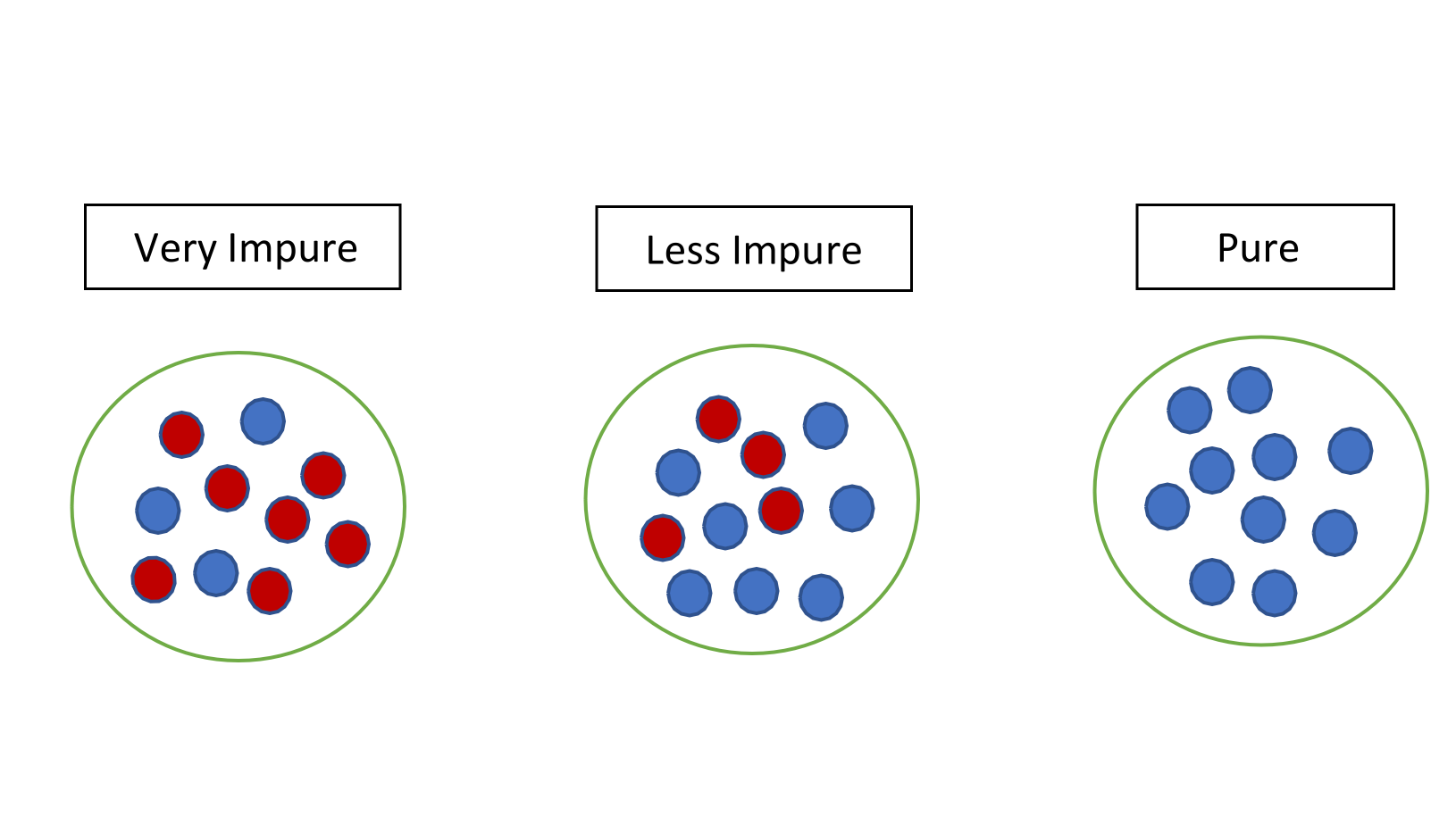

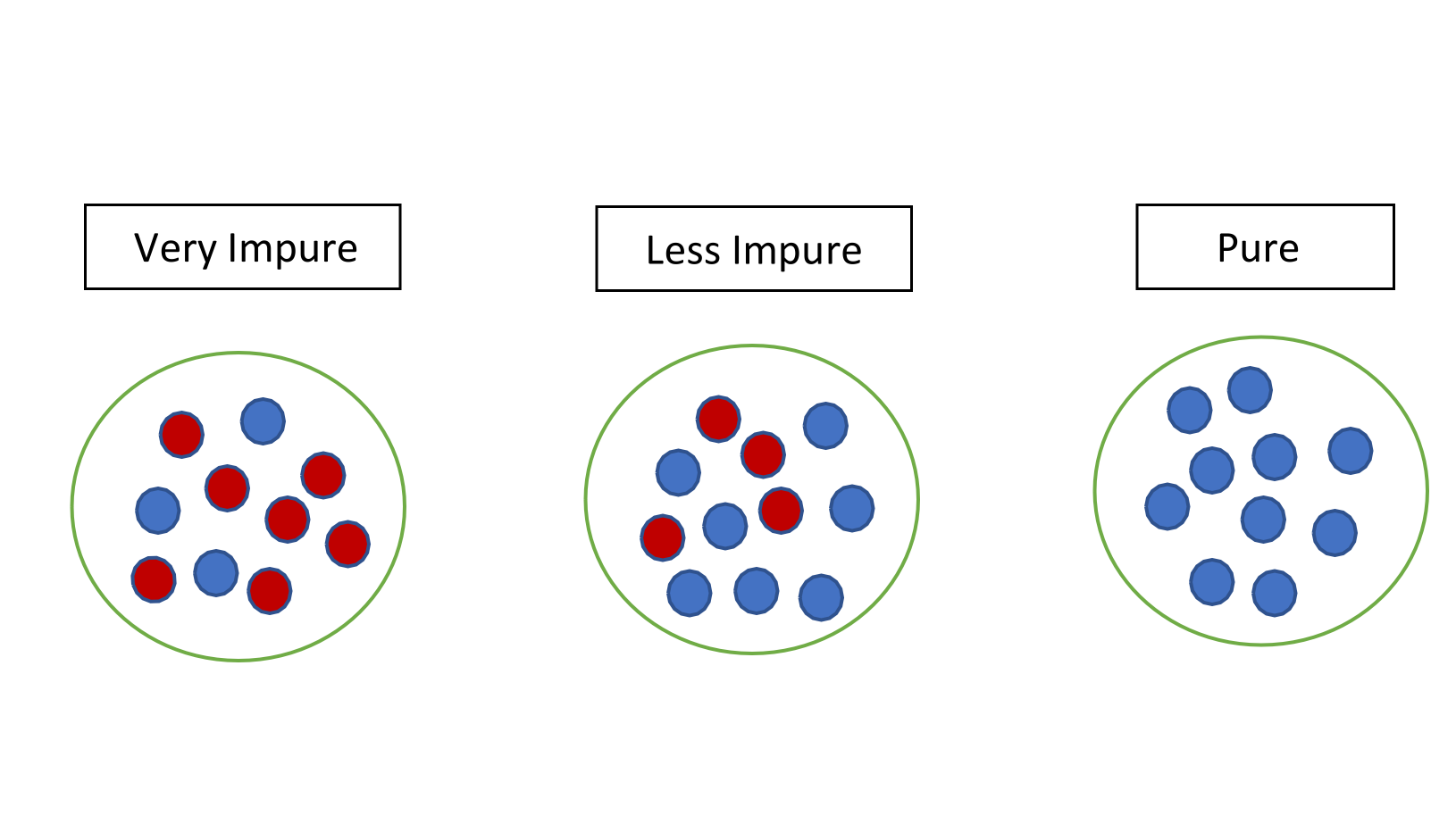

For a better understanding of information gain, let us break it down. As we know, information gain is the reduction in information entropy, what is entropy? Basically, entropy is the measure of impurity or uncertainty in a group of observations. In engineering applications, information is analogous to signal, and entropy is analogous to noise. It determines how a decision tree chooses to split data. The leftmost figure below is very impure and has high entropy corresponding to higher disorder and lower information value. As we go to the right, the entropy decreases, and the information value increases.

Now, it is clear that information gain is the measure of how much information a feature provides about a class. Let's visualize information gain in a decision tree as shown in the right:

The node ''t'' is the parent node, and the sub-nodes ''tL'' and ''tR'' are child nodes. In this case, the parent node ''t'' has a collection of cancer and non-cancer samples denoted as C and NC respectively. We can use information gain to determine how good the splitting of nodes is in a decision tree. In terms of entropy, information gain is defined as:

To understand this idea, let's start by an example in which we create a simple dataset and want to see if gene

Now, it is clear that information gain is the measure of how much information a feature provides about a class. Let's visualize information gain in a decision tree as shown in the right:

The node ''t'' is the parent node, and the sub-nodes ''tL'' and ''tR'' are child nodes. In this case, the parent node ''t'' has a collection of cancer and non-cancer samples denoted as C and NC respectively. We can use information gain to determine how good the splitting of nodes is in a decision tree. In terms of entropy, information gain is defined as:

To understand this idea, let's start by an example in which we create a simple dataset and want to see if gene mutation

In biology, a mutation is an alteration in the nucleic acid sequence of the genome of an organism, virus, or extrachromosomal DNA. Viral genomes contain either DNA or RNA. Mutations result from errors during DNA or viral replication, ...

s could be related to patients with cancer. Given four different gene mutations, as well as seven samples, the training set

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from ...

for a decision can be created as follows:

In this dataset, a 1 means the sample has the mutation (True), while a 0 means the sample does not (False). A sample with C denotes that it has been confirmed to be cancerous, while NC means it is non-cancerous. Using this data, a decision tree can be created with information gain used to determine the candidate splits for each node.

For the next step, the entropy at parent node ''t'' of the above simple decision tree is computed as:H(''t'') = − 'pC,t'' log2(''pC,t'') + ''pNC,t'' log2(''pNC,t'') where, probability of selecting a class ‘C’ sample at node ''t, pC,t'' = ''n''(''t,'' C) / ''n''(''t''), probability of selecting a class ‘NC’ sample at node ''t, pNC,t'' = ''n''(''t,'' NC) / ''n''(''t''), ''n''(''t''), ''n''(''t,'' C), and ''n''(''t,'' NC) are the number of total samples, ‘C’ samples and ‘NC’ samples at node ''t'' respectively''.''Using this with the example training set, the process for finding information gain beginning with for Mutation 1 is as follows: : ''pC, t'' = 4/7 : ''pNC, t'' = 3/7 : = −(4/7log2(4/7) + 3/7log2(3/7)) = 0.985 Note: will be the same for all mutations at the root. The relatively high value of entropy (1 is the optimal value) suggests that the root node is highly impure and the constituents of the input at the root node would look like the leftmost figure in the above ''Entropy Diagram''. However, such a set of data is good for learning the attributes of the mutations used to split the node. At a certain node, when the homogeneity of the constituents of the input occurs (as shown in the rightmost figure in the above ''Entropy Diagram),'' the dataset would no longer be good for learning. Moving on, the entropy at left and right child nodes of the above decision tree is computed using the formulae:

H(''tL'') = − 'pC,L'' log2(''pC,L'') + ''pNC,L'' log2(''pNC,L'')ref name=":2" />

H(''tR'') = − 'pC,R'' log2(''pC,R'') + ''pNC,R'' log2(''pNC,R'')''where, probability of selecting a class ‘C’ sample at the left child node'', pC,L'' = ''n''(''tL,'' C) / ''n''(''tL''), probability of selecting a class ‘NC’ sample at the left child node'', pNC,L'' = ''n''(''tL,'' NC) / ''n''(''tL''), probability of selecting a class ‘C’ sample at the right child node'', pC,R'' = ''n''(''tR,'' C) / ''n''(''tR''), probability of selecting a class ‘NC’ sample at the right child node'', pNC,R'' = ''n''(''tR,'' NC) / ''n''(''tR''), ''n''(''tL''), ''n''(''tL,'' C), and ''n''(''tL,'' NC) are the total number of samples, ‘C’ samples and ‘NC’ samples at the left child node respectively, ''n''(''tR''), ''n''(''tR,'' C), and ''n''(''tR,'' NC) are the total number of samples, ‘C’ samples and ‘NC’ samples at the right child node respectively''.'' Using these formulae, the H(tL) and H(tR) for Mutation 1 is shown below: : H(''tL'') = −(3/4log2(3/4) + 1/4log2(1/4)) = 0.811 : H(''tR'') = −(1/3log2(1/3) + 2/3log2(2/3)) = 0.918 Following this, average entropy of the child nodes due to the split at node ''t'' of the above decision tree is computed as:

H(''s'',''t'') = ''PLH''(''tL'') + ''PRH''(''tR'') where, probability of samples at the left child, ''PL'' = ''n''(''tL'') / ''n''(''t''), probability of samples at the right child, ''PR'' = ''n''(''tR'') / ''n''(''t''),Finally, H(s,t) along with ''PL'' and ''PR'' for Mutation 1 is as follows: : ''PL'' = 4/7 : ''PR'' = 3/7 : H(''s, t'') = (4/70.811) + (3/70.918) = 0.857 Thus, by definition from equation (i):

(Information gain) = H(''t'') - H(''s'',''t'')After all the steps, gain(''s''), where ''s'' is a candidate split for the example is: :gain(''s'') = 0.985 – 0.857 = 0.128

Using this same set of formulae with the other three mutations leads to a table of the candidate splits, ranked by their information gain:

The mutation that provides the most useful information would be Mutation 3, so that will be used to split the root node of the decision tree. The root can be split and all the samples can be passed though and appended to the child nodes. A tree describing the split is shown on the left.

The samples that are on the left node of the tree would be classified as cancerous by the tree, while those on the right would be non-cancerous. This tree is relatively accurate at classifying the samples that were used to build it (which is a case of

Using this same set of formulae with the other three mutations leads to a table of the candidate splits, ranked by their information gain:

The mutation that provides the most useful information would be Mutation 3, so that will be used to split the root node of the decision tree. The root can be split and all the samples can be passed though and appended to the child nodes. A tree describing the split is shown on the left.

The samples that are on the left node of the tree would be classified as cancerous by the tree, while those on the right would be non-cancerous. This tree is relatively accurate at classifying the samples that were used to build it (which is a case of overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfi ...

), but it would still classify sample C2 incorrectly. To remedy this, the tree can be split again at the child nodes to possibly achieve something even more accurate.

To split the right node, information gain must again be calculated for all the possible candidate splits that were not used for previous nodes. So, the only options this time are Mutations 1, 2, and 4.

Note: is different this time around since there are only four samples at the right child.

: ''PC, t'' = 1/4

: ''PNC, t'' = 3/4

: = -(1/4log2(1/4) + 3/4log2(3/4)) = 0.811

From this new , the candidate splits can be calculated using the same formulae as the root node:

Thus, the right child will be split with Mutation 4. All the samples that have the mutation will be passed to the left child and the ones that lack it will be passed to the right child.

To split the left node, the process would be the same, except there would only be 3 samples to check. Sometimes a node may not need to be split at all if it is a pure set, where all samples at the node are just cancerous or non-cancerous. Splitting the node may lead to the tree being more inaccurate and in this case it will not be split.

The tree would now achieve 100% accuracy if the samples that were used to build it are tested. This isn't a good idea, however, since the tree would overfit the data. The best course of action is to try testing the tree on other samples, of which are not part of the original set. Two outside samples are below: By following the tree, NC10 was classified correctly, but C15 was classified as NC. For other samples, this tree would not be 100% accurate anymore. It could be possible to improve this though, with options such as increasing the depth of the tree or increasing the size of the training set.

By following the tree, NC10 was classified correctly, but C15 was classified as NC. For other samples, this tree would not be 100% accurate anymore. It could be possible to improve this though, with options such as increasing the depth of the tree or increasing the size of the training set.

Advantages

Information gain is the basic criterion to decide whether a feature should be used to split a node or not. The feature with the optimal split i.e., the highest value of information gain at a node of a decision tree is used as the feature for splitting the node. The concept of information gain function falls under the C4.5 algorithm for generating the decision trees and selecting the optimal split for a decision tree node. Some of its advantages include: * It can work with both continuous and discrete variables. * Due to the factor – ∗ log(p)in the entropy definition as given above, leaf nodes with a small number of instances are assigned less weight and it favors dividing rest of the data into bigger but homogeneous groups. And thus, as we dig deeper into the depth of the tree, the dataset becomes more homogeneous. This approach is usually more stable and chooses the most impactful features on the nodes.Drawbacks and Solutions

Although information gain is usually a good measure for deciding therelevance

Relevance is the connection between topics that makes one useful for dealing with the other. Relevance is studied in many different fields, including cognitive science, logic, and library and information science. Epistemology studies it in gener ...

of an attribute, it is not perfect. A notable problem occurs when information gain is applied to attributes that can take on a large number of distinct values. For example, suppose that one is building a decision tree for some data describing the customers of a business. Information gain is often used to decide which of the attributes are the most relevant, so they can be tested near the root of the tree. One of the input attributes might be the customer's membership number, if they are a member of the business's membership program. This attribute has a high mutual information, because it uniquely identifies each customer, but we do ''not'' want to include it in the decision tree. Deciding how to treat a customer based on their membership number is unlikely to generalize to customers we haven't seen before (overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfi ...

). This issue can also occur if the samples that are being tested have multiple attributes with many distinct values. In this case, it can cause the information gain of each of these attributes to be much higher than those without as many distinct values.

To counter this problem, Ross Quinlan proposed to instead choose the attribute with highest information gain ratio from among the attributes whose information gain is average or higher. This biases the decision tree against considering attributes with a large number of distinct values, while not giving an unfair advantage to attributes with very low information value, as the information value is higher or equal to the information gain.

See also

*Information gain

Information is an abstract concept that refers to something which has the power to inform. At the most fundamental level, it pertains to the interpretation (perhaps formally) of that which may be sensed, or their abstractions. Any natur ...

more broadly

* Decision tree learning

Decision tree learning is a supervised learning approach used in statistics, data mining and machine learning. In this formalism, a classification or regression decision tree is used as a predictive model to draw conclusions about a set of obser ...

* Information content

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative w ...

, the starting point of information theory

Information theory is the mathematical study of the quantification (science), quantification, Data storage, storage, and telecommunications, communication of information. The field was established and formalized by Claude Shannon in the 1940s, ...

and the basis of Shannon entropy

Shannon may refer to:

People

* Shannon (given name)

* Shannon (surname)

* Shannon (American singer), stage name of singer Brenda Shannon Greene (born 1958)

* Shannon (South Korean singer), British-South Korean singer and actress Shannon Arrum ...

* Information gain ratio

* ID3 algorithm

** C4.5 algorithm

* Surprisal analysis

References

Further reading

* * * {{DEFAULTSORT:Information Gain In Decision Trees Decision trees Classification algorithms