Precursors

Mythical, fictional, and speculative precursors

Myth and legend

Medieval legends of artificial beings

In ''Of the Nature of Things'', the Swiss alchemist Paracelsus describes a procedure that he claims can fabricate an "artificial man". By placing the "sperm of a man" in horse dung, and feeding it the "Arcanum of Mans blood" after 40 days, the concoction will become a living infant.

The earliest written account regarding golem-making is found in the writings of Eleazar ben Judah of Worms in the early 13th century. During the Middle Ages, it was believed that the animation of a

Modern fiction

By the 19th century, ideas about artificial men and thinking machines became a popular theme in fiction.

Automata

Realistic humanoid automata were built by craftsmen from many civilizations, including Yan Shi, Hero of Alexandria, Al-Jazari, Haroun al-Rashid, Jacques de Vaucanson, Leonardo Torres y Quevedo, Pierre Jaquet-Droz and Wolfgang von Kempelen.

The oldest known automata were the sacred statues of

Formal reasoning

Artificial intelligence is based on the assumption that the process of human thought can be mechanized. The study of mechanical (or "formal") reasoning has a long history. Chinese, Indian and Greek philosophers all developed structured methods of formal deduction by the first millennium BCE. Their ideas were developed over the centuries by philosophers such as

Their answer was surprising in two ways. First, they proved that there were, in fact, limits to what mathematical logic could accomplish. But second (and more important for AI) their work suggested that, within these limits, ''any'' form of mathematical reasoning could be mechanized. The Church-Turing thesis implied that a mechanical device, shuffling symbols as simple as ''0'' and ''1'', could imitate any conceivable process of mathematical deduction. The key insight was the

Computer science

Calculating machines were designed or built in antiquity and throughout history by many people.

Birth of artificial intelligence (1941–56)

The earliest research into thinking machines was inspired by a confluence of ideas that became prevalent in the late 1930s, 1940s, and early 1950s. Recent research in

Turing Test

In 1950 Turing published a landmark paper.

Neuroscience and Hebbian theory

Donald Hebb was a Canadian psychologist whose work laid the foundation for modern neuroscience, particularly in understanding learning, memory, and neural plasticity. His most influential book, The Organization of Behavior (1949), introduced the concept of Hebbian learning, often summarized as "cells that fire together wire together." Hebb began formulating the foundational ideas for this book in the early 1940s, particularly during his time at the Yerkes Laboratories of Primate Biology from 1942 to 1947. He made extensive notes between June 1944 and March 1945 and sent a complete draft to his mentor Karl Lashley in 1946. The manuscript for The Organization of Behavior wasn’t published until 1949. The delay was due to various factors, including World War II and shifts in academic focus. By the time it was published, several of his peers had already published related ideas, making Hebb’s work seem less groundbreaking at first glance. However, his synthesis of psychological and neurophysiological principles became a cornerstone of neuroscience and machine learning.

Artificial neural networks
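Hebb's principle, described in the previous section, was later adopted as a learning rule for artificial neural networks. Hebb stated it in words; the sketch below assumes the common textbook form Δw_i = η · x_i · y, in which the weight w_i of a connection grows in proportion to the product of its input activity x_i and the output activity y, scaled by a learning rate η. The function name `hebbian_update` and the learning rate of 0.25 are illustrative choices, not taken from Hebb's book.

```python
def hebbian_update(weights: list[float], inputs: list[float], output: float,
                   eta: float = 0.25) -> list[float]:
    """Return new weights after one Hebbian step: w_i += eta * x_i * y."""
    return [w + eta * x * output for w, x in zip(weights, inputs)]


weights = [0.0, 0.0, 0.0]

# Inputs 0 and 1 fire together with the output neuron four times;
# input 2 stays silent, so its connection is never strengthened.
for _ in range(4):
    weights = hebbian_update(weights, [1.0, 1.0, 0.0], output=1.0)

# If the output neuron is silent (y = 0), no weight changes,
# whatever the inputs are doing.
weights = hebbian_update(weights, [1.0, 0.0, 1.0], output=0.0)

print(weights)  # [1.0, 1.0, 0.0]
```

Because this plain form can only strengthen co-active connections, repeated updates make the weights grow without bound; later variants such as Oja's rule add a normalizing term for that reason.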

Walter Pitts and Warren McCulloch analyzed networks of idealized artificial neurons and showed how they might perform simple logical functions in 1943. They were the first to describe what later researchers would call a

Cybernetic robots

Experimental robots such as W. Grey Walter's turtles and the Johns Hopkins Beast were built in the 1950s. These machines did not use computers, digital electronics or symbolic reasoning; they were controlled entirely by analog circuitry.

Game AI

In 1951, using the Ferranti Mark 1 machine of the

Symbolic reasoning and the Logic Theorist

When access to digital computers became possible in the mid-fifties, a few scientists instinctively recognized that a machine that could manipulate numbers could also manipulate symbols and that the manipulation of symbols could well be the essence of human thought. This was a new approach to creating thinking machines.

In 1955, Allen Newell and future Nobel Laureate Herbert A. Simon created the "Logic Theorist", with help from J. C. Shaw. The program would eventually prove 38 of the first 52 theorems in Russell and Whitehead's

Dartmouth Workshop

The Dartmouth workshop of 1956 was a pivotal event that marked the formal inception of AI as an academic discipline. It was organized by Marvin Minsky and John McCarthy, with the support of two senior scientists.

Cognitive revolution

In the autumn of 1956, Newell and Simon also presented the Logic Theorist at a meeting of the Special Interest Group in Information Theory at the

Early successes (1956–1974)

The programs developed in the years after the Dartmouth Workshop were, to most people, simply "astonishing": computers were solving algebra word problems, proving theorems in geometry and learning to speak English. Few at the time would have believed that such "intelligent" behavior by machines was possible at all. Researchers expressed an intense optimism in private and in print, predicting that a fully intelligent machine would be built in less than 20 years. Government agencies like the Defense Advanced Research Projects Agency (DARPA, then known as "ARPA") poured money into the field. Artificial intelligence laboratories were set up at a number of British and US universities in the late 1950s and early 1960s.

Approaches

There were many successful programs and new directions in the late 50s and 1960s. Among the most influential were these:

Reasoning, planning and problem solving as search

Many early AI programs used the same basic

Natural language

Micro-worlds

In the late 60s, Marvin Minsky and Seymour Papert of the MIT AI Laboratory proposed that AI research should focus on artificially simple situations known as micro-worlds. They pointed out that in successful sciences like physics, basic principles were often best understood using simplified models like frictionless planes or perfectly rigid bodies. Much of the research focused on a "blocks world," which consists of colored blocks of various shapes and sizes arrayed on a flat surface. This paradigm led to innovative work in

Perceptrons and early neural networks

In the 1960s, funding was primarily directed towards laboratories researching symbolic AI; however, several people still pursued research in neural networks.

Optimism

The first generation of AI researchers made these predictions about their work:

* 1958, H. A. Simon and Allen Newell: "within ten years a digital computer will be the world's chess champion" and "within ten years a digital computer will discover and prove an important new mathematical theorem."
* 1965, H. A. Simon: "machines will be capable, within twenty years, of doing any work a man can do."
* 1967, Marvin Minsky: "Within a generation... the problem of creating 'artificial intelligence' will substantially be solved."
* 1970, Marvin Minsky (in ''Life'' magazine): "In from three to eight years we will have a machine with the general intelligence of an average human being."

Financing

In June 1963, MIT received a $2.2 million grant from the newly created Advanced Research Projects Agency (ARPA, later known as

First AI Winter (1974–1980)

In the 1970s, AI was subject to critiques and financial setbacks. AI researchers had failed to appreciate the difficulty of the problems they faced. Their tremendous optimism had raised public expectations impossibly high, and when the promised results failed to materialize, funding targeted at AI was severely reduced. The lack of success indicated that the techniques being used by AI researchers at the time were insufficient to achieve their goals. These setbacks did not affect the growth and progress of the field, however. The funding cuts only impacted a handful of major laboratories and the critiques were largely ignored. General public interest in the field continued to grow, the number of researchers increased dramatically, and new ideas were explored in logic programming, commonsense reasoning and many other areas. Historian Thomas Haigh argued in 2023 that there was no winter, and AI researcher Nils Nilsson described this period as the most "exciting" time to work in AI.

Problems

In the early seventies, the capabilities of AI programs were limited. Even the most impressive could only handle trivial versions of the problems they were supposed to solve; all the programs were, in some sense, "toys". AI researchers had begun to run into several limits; some would only be conquered decades later, and others still stymie the field in the 2020s:

* Limited computer power: There was not enough memory or processing speed to accomplish anything truly useful. For example, Ross Quillian's successful work on natural language was demonstrated with a vocabulary of only 20 words, because that was all that would fit in memory. Hans Moravec argued in 1976 that computers were still millions of times too weak to exhibit intelligence. He suggested an analogy: artificial intelligence requires computer power in the same way that aircraft require

Decrease in funding

The agencies which funded AI research, such as the British government,

Philosophical and ethical critiques

Several philosophers had strong objections to the claims being made by AI researchers. One of the earliest was John Lucas, who argued that Gödel's incompleteness theorem showed that a

Logic at Stanford, CMU and Edinburgh

Logic was introduced into AI research as early as 1958, by John McCarthy in his Advice Taker proposal. In 1963, J. Alan Robinson discovered a simple method to implement deduction on computers, the resolution and unification algorithm. However, straightforward implementations, like those attempted by McCarthy and his students in the late 1960s, were especially intractable: the programs required astronomical numbers of steps to prove simple theorems. A more fruitful approach to logic was developed in the 1970s by Robert Kowalski at the

MIT's "anti-logic" approach

Among the critics of McCarthy's approach were his colleagues across the country at MIT. Marvin Minsky, Seymour Papert and Roger Schank were trying to solve problems like "story understanding" and "object recognition" that required a machine to think like a person. In order to use ordinary concepts like "chair" or "restaurant", they had to make all the same illogical assumptions that people normally made. Unfortunately, imprecise concepts like these are hard to represent in logic. MIT chose instead to focus on writing programs that solved a given task without using high-level abstract definitions or general theories of cognition, and measured performance by iterative testing, rather than arguments from first principles. Schank described their "anti-logic" approaches as ''scruffy'', as opposed to the ''neat'' paradigm used by McCarthy, Kowalski, Feigenbaum, Newell and Simon. In 1975, in a seminal paper, Minsky noted that many of his fellow researchers were using the same kind of tool: a framework that captures all our common sense assumptions about something. For example, if we use the concept of a bird, there is a constellation of facts that immediately come to mind: we might assume that it flies, eats worms and so on (none of which are true for all birds). Minsky associated these assumptions with the general category, and they could be ''inherited'' by the frames for subcategories and individuals, or overridden as necessary. He called these structures ''frames''. Schank used a version of frames he called "scripts" to successfully answer questions about short stories in English. Frames would eventually be widely used in

Boom (1980–1987)

In the 1980s, a form of AI program called "expert systems" was adopted by corporations around the world.

Expert systems become widely used

An expert system is a program that answers questions or solves problems about a specific domain of knowledge, using logical

Government funding increases

In 1981, the Japanese Ministry of International Trade and Industry set aside $850 million for the Fifth generation computer project. Their objectives were to write programs and build machines that could carry on conversations, translate languages, interpret pictures, and reason like human beings. Much to the chagrin of scruffies, they initially chose

Knowledge revolution

The power of expert systems came from the expert knowledge they contained. They were part of a new direction in AI research that had been gaining ground throughout the 70s. "AI researchers were beginning to suspect—reluctantly, for it violated the scientific canon of parsimony—that intelligence might very well be based on the ability to use large amounts of diverse knowledge in different ways," writes McCorduck.{{sfn, McCorduck, 2004, p=299

New directions in the 1980s

Although symbolic

Revival of neural networks: "connectionism"

Robotics and embodied reason

{{Main, Nouvelle AI, behavior-based AI, situated AI, embodied cognitive science Rodney Brooks, Hans Moravec and others argued that, in order to show real intelligence, a machine needs to have a body: it needs to perceive, move, survive and deal with the world.{{sfn, McCorduck, 2004, pp=454–462 Sensorimotor skills are essential to higher-level skills such as commonsense reasoning. They can't be efficiently implemented using abstract symbolic reasoning, so AI should solve the problems of perception, mobility, manipulation and survival without using symbolic representation at all. These robotics researchers advocated building intelligence "from the bottom up".{{efn, Hans Moravec wrote: "I am confident that this bottom-up route to artificial intelligence will one day meet the traditional top-down route more than half way, ready to provide the real world competence and the commonsense knowledge that has been so frustratingly elusive in reasoning programs. Fully intelligent machines will result when the metaphorical golden spike is driven uniting the two efforts."{{sfn, Moravec, 1988, p=20 A precursor to this idea was David Marr, who had come to MIT in the late 1970s from a successful background in theoretical neuroscience to lead the group studying

Soft computing and probabilistic reasoning

Soft computing uses methods that work with incomplete and imprecise information. They do not attempt to give precise, logical answers, but give results that are only "probably" correct. This allowed them to solve problems that precise symbolic methods could not handle. Press accounts often claimed these tools could "think like a human".{{sfn, Pollack, 1984{{sfn, Pollack, 1989 Judea Pearl's ''Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference'', an influential 1988 book,{{sfn, Pearl, 1988 brought probability and decision theory into AI.{{sfn, Russell, Norvig, 2021, p=25 Fuzzy logic, developed by Lotfi Zadeh in the 60s, began to be more widely used in AI and robotics. Evolutionary computation and artificial neural networks also handle imprecise information, and are classified as "soft". In the 90s and early 2000s many other soft computing tools were developed and put into use, including Bayesian networks,{{sfn, Russell, Norvig, 2021, p=25 hidden Markov models,{{sfn, Russell, Norvig, 2021, p=25

Reinforcement learning

Reinforcement learning{{sfn, Russell, Norvig, 2021, loc=Section 23 gives an agent a reward every time it performs a desired action well, and may give negative rewards (or "punishments") when it performs poorly. It was described in the first half of the twentieth century by psychologists using animal models, such as Edward Thorndike,{{sfn, Christian, 2020, pp=120-124{{sfn, Russell, Norvig, 2021, p=819 Ivan Pavlov{{sfn, Christian, 2020, p=124 and B.F. Skinner.{{sfn, Christian, 2020, pp=152-156 In the 1950s,

Second AI winter

The business community's fascination with AI rose and fell in the 1980s in the classic pattern of an economic bubble. As dozens of companies failed, the perception in the business world was that the technology was not viable.{{sfn, Newquist, 1994, pp=501, 511 The damage to AI's reputation would last into the 21st century. Inside the field there was little agreement on the reasons for AI's failure to fulfill the dream of human-level intelligence that had captured the imagination of the world in the 1960s. Together, all these factors helped to fragment AI into competing subfields focused on particular problems or approaches, sometimes even under new names that disguised the tarnished pedigree of "artificial intelligence".{{sfn, McCorduck, 2004, p=424 Over the next 20 years, AI consistently delivered working solutions to specific isolated problems. By the late 1990s, it was being used throughout the technology industry, although somewhat behind the scenes. The success was due to increasing computer power, collaboration with other fields (such as mathematical optimization and statistics) and the use of the highest standards of scientific accountability. By 2000, AI had achieved some of its oldest goals. The field was both more cautious and more successful than it had ever been.

AI winter

The term "AI winter" was coined by researchers who had survived the funding cuts of 1974 when they became concerned that enthusiasm for expert systems had spiraled out of control and that disappointment would certainly follow.{{efn, AI winter was first used as the title of a seminar on the subject for the Association for the Advancement of Artificial Intelligence.{{sfn, Crevier, 1993, p=203 Their fears were well founded: in the late 1980s and early 1990s, AI suffered a series of financial setbacks.{{sfn, Russell, Norvig, 2021, p=24 The first indication of a change in weather was the sudden collapse of the market for specialized AI hardware in 1987. Desktop computers from Apple and

AI behind the scenes

In the 1990s, algorithms originally developed by AI researchers began to appear as parts of larger systems. AI had solved a lot of very difficult problems{{efn, See {{slink, Applications of artificial intelligence, Computer science and their solutions proved to be useful throughout the technology industry,{{sfn, NRC, 1999, loc=Artificial Intelligence in the 90s{{sfn, Kurzweil, 2005, p=264 such as data mining, industrial robotics, logistics, speech recognition,{{sfn, The Economist, 2007 banking software,{{sfn, CNN, 2006 medical diagnosis{{sfn, CNN, 2006 and Google's search engine.{{sfn, Olsen, 2004{{sfn, Olsen, 2006 The field of AI received little or no credit for these successes in the 1990s and early 2000s. Many of AI's greatest innovations have been reduced to the status of just another item in the tool chest of computer science. Nick Bostrom explains: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."{{sfn, CNN, 2006 Many researchers in AI in the 1990s deliberately called their work by other names, such as informatics, knowledge-based systems, "cognitive systems" or computational intelligence. In part, this may have been because they considered their field to be fundamentally different from AI, but the new names also helped to procure funding.{{sfn, The Economist, 2007{{sfn, Tascarella, 2006{{sfn, Newquist, 1994, p=532 In the commercial world at least, the failed promises of the AI Winter continued to haunt AI research into the 2000s, as the ''New York Times'' reported in 2005: "Computer scientists and software engineers avoided the term artificial intelligence for fear of being viewed as wild-eyed dreamers."{{sfn, Markoff, 2005

Mathematical rigor, greater collaboration and a narrow focus

AI researchers began to develop and use sophisticated mathematical tools more than they ever had in the past.{{sfn, McCorduck, 2004, pp=486–487{{sfn, Russell, Norvig, 2021, pp=24–25 Most of the new directions in AI relied heavily on mathematical models, including artificial neural networks, probabilistic reasoning, soft computing and reinforcement learning. In the 90s and 2000s, many other highly mathematical tools were adapted for AI. These tools were applied to machine learning, perception and mobility. There was a widespread realization that many of the problems that AI needed to solve were already being worked on by researchers in fields like statistics, mathematics, electrical engineering, economics or operations research. The shared mathematical language allowed both a higher level of collaboration with more established and successful fields and the achievement of results which were measurable and provable; AI had become a more rigorous "scientific" discipline. Another key reason for the success in the 90s was that AI researchers focused on specific problems with verifiable solutions (an approach later derided as ''narrow AI''). This provided useful tools in the present, rather than speculation about the future.

Intelligent agents

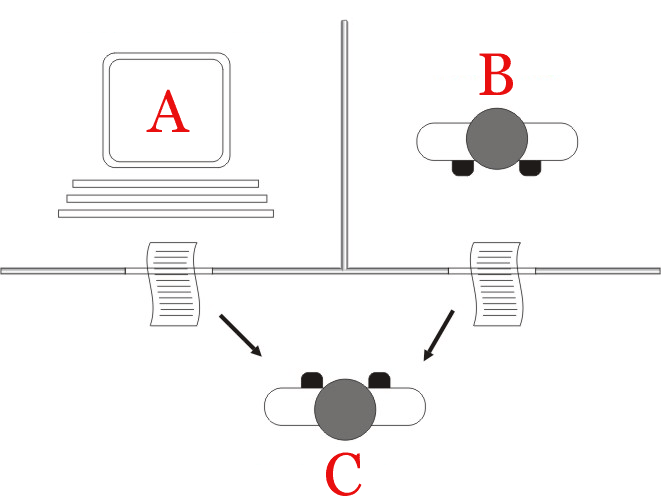

A new paradigm called "intelligent agents" became widely accepted during the 1990s.{{sfn, McCorduck, 2004, pp=471–478{{sfn, Russell, Norvig, 2021, loc=chpt. 2{{efn, Russell and Norvig wrote "The whole-agent view is now widely accepted."{{sfn, Russell, Norvig, 2021, p=61 Although earlier researchers had proposed modular "divide and conquer" approaches to AI,{{efn, Carl Hewitt's Actor model anticipated the modern definition of intelligent agents. {{Harv, Hewitt, Bishop, Steiger, 1973 Both John Doyle {{Harv, Doyle, 1983 and Marvin Minsky's popular classic ''The Society of Mind'' {{Harv, Minsky, 1986 used the word "agent". Other "modular" proposals included Rodney Brooks's subsumption architecture,

Milestones and Moore's law

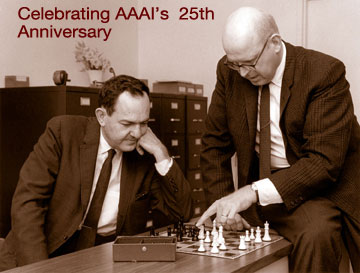

On May 11, 1997, Deep Blue became the first computer chess-playing system to beat a reigning world chess champion, Garry Kasparov.{{sfn, McCorduck, 2004, pp=480–483 In 2005, a Stanford robot won the DARPA Grand Challenge by driving autonomously for 131 miles along an unrehearsed desert trail. Two years later, a team from CMU won the DARPA Urban Challenge by autonomously navigating 55 miles in an urban environment while responding to traffic hazards and adhering to traffic laws.{{sfn, Russell, Norvig, 2021, p=28 These successes were not due to some revolutionary new paradigm, but mostly to the tedious application of engineering skill and to the tremendous increase in the speed and capacity of computers by the 90s.{{efn, Ray Kurzweil wrote that the improvement in computer chess "is governed only by the brute force expansion of computer hardware."{{sfn, Kurzweil, 2005, p=274 In fact, Deep Blue's computer was 10 million times faster than the Ferranti Mark 1 that Christopher Strachey taught to play checkers in 1951.{{efn, Cycle time of Ferranti Mark 1 was 1.2 milliseconds, which is arguably equivalent to about 833 flops. Deep Blue ran at 11.38 gigaflops (and this does not even take into account Deep Blue's special-purpose hardware for chess). ''Very'' approximately, these differ by a factor of 10⁷.{{citation needed, date=August 2024 This dramatic increase is measured by Moore's law, which predicts that the speed and memory capacity of computers double every two years. The fundamental problem of "raw computer power" was slowly being overcome.

Big data, deep learning, AGI (2005–2017)

In the first decades of the 21st century, access to large amounts of data (known as "big data"), Moore's law, cheaper and faster computers and advanced

Big data and big machines

{{See also, List of datasets for machine-learning research The success of machine learning in the 2000s depended on the availability of vast amounts of training data and faster computers.{{sfn, Russell, Norvig, 2021, pp=26-27 Russell and Norvig wrote that the "improvement in performance obtained by increasing the size of the data set by two or three orders of magnitude outweighs any improvement that can be made by tweaking the algorithm."{{sfn, Russell, Norvig, 2021, p=26 Geoffrey Hinton recalled that back in the 90s, the problem was that "our labeled datasets were thousands of times too small. [And] our computers were millions of times too slow." This was no longer true by 2010. The most useful data in the 2000s came from curated, labeled data sets created specifically for machine learning and AI. In 2007, a group at UMass Amherst released Labeled Faces in the Wild, an annotated set of images of faces that was widely used to train and test face recognition systems in the years that followed.{{sfn, Christian, 2020, p=31 Fei-Fei Li developed ImageNet, a database of three million images captioned by volunteers using the Amazon Mechanical Turk. Released in 2009, it was a useful body of training data and a benchmark for testing for the next generation of image processing systems.{{sfn, Christian, 2020, pp=22-23{{sfn, Russell, Norvig, 2021, p=26 Google released word2vec in 2013 as an open source resource. It used large amounts of text scraped from the internet and word embedding to create a numeric vector to represent each word. Users were surprised at how well it was able to capture word meanings; for example, ordinary vector addition would give equivalences like China + River = Yangtze, London - England + France = Paris.{{sfn, Christian, 2020, p=6 Word2vec in particular would be essential for the development of large language models in the late 2010s.
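The analogy arithmetic mentioned above (London - England + France ≈ Paris) comes down to adding and subtracting word vectors and then looking for the word whose vector points in the most similar direction, usually measured by cosine similarity. The sketch below shows only that last step. The four-dimensional vectors are hand-made, hypothetical stand-ins chosen for readability; real word2vec vectors are learned from text and typically have a few hundred dimensions. The names `EMBEDDINGS`, `cosine` and `analogy` are illustrative.

```python
import math

# Hypothetical, hand-made 4-dimensional vectors (NOT real word2vec output).
# Rough meaning of the axes: [is a capital city, UK, France, Germany].
EMBEDDINGS: dict[str, list[float]] = {
    "London":  [0.9, 1.0, 0.1, 0.0],
    "England": [0.1, 0.9, 0.0, 0.1],
    "France":  [0.0, 0.1, 1.0, 0.1],
    "Paris":   [0.95, 0.1, 0.9, 0.0],
    "Berlin":  [0.9, 0.0, 0.1, 1.0],
    "Germany": [0.1, 0.0, 0.1, 0.95],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a: str, b: str, c: str,
            vocab: dict[str, list[float]] = EMBEDDINGS) -> str:
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    query = [x - y + z for x, y, z in zip(vocab[a], vocab[b], vocab[c])]
    candidates = [w for w in vocab if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(query, vocab[w]))


print(analogy("London", "England", "France"))  # Paris
print(analogy("Paris", "France", "Germany"))   # Berlin
```

Excluding the three query words is a common convention when evaluating analogies this way; without it, the nearest vector is often one of the inputs themselves.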
The explosive growth of the internet gave machine learning programs access to billions of pages of text and images that could be scraped. And, for specific problems, large privately held databases contained the relevant data. McKinsey Global Institute reported that "by 2009, nearly all sectors in the US economy had at least an average of 200 terabytes of stored data".{{sfn, McKinsey & Co, 2011 This collection of information was known in the 2000s as ''big data''. In a ''Jeopardy!'' exhibition match in February 2011,

Deep learning

{{Main, Deep learning In 2012, AlexNet, a

The alignment problem

It became fashionable in the 2000s to begin talking about the future of AI again, and several popular books considered the possibility of superintelligent machines and what they might mean for human society. Some of this was optimistic (such as Ray Kurzweil's ''The Singularity is Near''), but others, such as Nick Bostrom and Eliezer Yudkowsky, warned that a sufficiently powerful AI was an existential threat to humanity.{{sfn, Russell, Norvig, 2021, pp=33, 1004 The topic became widely covered in the press and many leading intellectuals and politicians commented on the issue. AI programs in the 21st century are defined by their goals: the specific measures that they are designed to optimize. Nick Bostrom's influential 2014 book ''Superintelligence'' argued that, if one isn't careful about defining these goals, the machine may cause harm to humanity in the process of achieving a goal. Stuart J. Russell used the example of an intelligent robot that kills its owner to prevent itself from being unplugged, reasoning "you can't fetch the coffee if you're dead".{{sfn, Russell, 2020 (This problem is known by the technical term "instrumental convergence".) The proposed solution is to align the machine's goal function with the goals of its owner and humanity in general. Thus, the problem of mitigating the risks and unintended consequences of AI became known as "the value alignment problem" or AI alignment.{{sfn, Russell, Norvig, 2021, pp=5, 33, 1002-1003 At the same time, machine learning systems had begun to have disturbing unintended consequences.
Cathy O'Neil explained how statistical algorithms had been among the causes of the 2008 economic crash,{{sfn, O'Neill, 2016 Julia Angwin of ProPublica argued that the COMPAS system used by the criminal justice system exhibited racial bias under some measures,{{sfn, Christian, 2020, pp=60-61{{efn, Later research showed that there was no way for the system to avoid a measurable racial bias: fixing one form of bias would necessarily introduce another.{{sfn, Christian, 2020, pp=67-70 others showed that many machine learning systems exhibited some form of racial bias,{{sfn, Christian, 2020, pp=6-7, 25 and there were many other examples of dangerous outcomes that had resulted from machine learning systems.{{efn, A short summary of topics would include privacy, surveillance, copyright, misinformation and deep fakes, filter bubbles and partisanship, algorithmic bias, misleading results that go undetected without algorithmic transparency, the right to an explanation, misuse of autonomous weapons and technological unemployment. See {{section link, Artificial intelligence, Ethics In 2016, the election of Donald Trump and the controversy over the COMPAS system illuminated several problems with the current technological infrastructure, including misinformation, social media algorithms designed to maximize engagement, the misuse of personal data and the trustworthiness of predictive models.{{sfn, Christian, 2020, p=67 Issues of fairness and unintended consequences became significantly more popular at AI conferences, publications vastly increased, funding became available, and many researchers refocused their careers on these issues.
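The finding mentioned above, that fixing one form of bias would necessarily introduce another, can be illustrated with an identity that follows from the definitions of a binary classifier's confusion matrix. For a group with base rate (prevalence) p, positive predictive value PPV and false negative rate FNR, the false positive rate is FPR = p / (1 - p) · (1 - PPV) / PPV · (1 - FNR). So if two groups have different base rates, a score that gives them equal PPV and equal FNR must, short of being error-free, give them unequal false positive rates. The function name `false_positive_rate` and all the numbers below are hypothetical; they are not COMPAS data.

```python
def false_positive_rate(prevalence: float, ppv: float, fnr: float) -> float:
    """False positive rate implied by prevalence p, PPV and FNR.

    Derived by counting outcomes per unit of population:
    TP = p * (1 - FNR); since PPV = TP / (TP + FP), FP = TP * (1 - PPV) / PPV;
    and FPR = FP / (number of actual negatives) = FP / (1 - p).
    """
    true_positives = prevalence * (1 - fnr)
    false_positives = true_positives * (1 - ppv) / ppv
    return false_positives / (1 - prevalence)


# Two hypothetical groups scored with the same PPV (0.6) and the same FNR (0.3),
# but with different base rates of the predicted outcome.
fpr_a = false_positive_rate(prevalence=0.5, ppv=0.6, fnr=0.3)
fpr_b = false_positive_rate(prevalence=0.3, ppv=0.6, fnr=0.3)
print(round(fpr_a, 3), round(fpr_b, 3))  # 0.467 0.2
```

Equalizing the false positive rates instead would force PPV or FNR apart, which is the trade-off the sentence above describes.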
The value alignment problem became a serious field of academic study.{{sfn, Christian, 2020, pp=67, 73, 117{{efn, Brian Christian wrote "ProPublica's study [of COMPAS in 2016] legitimated concepts like fairness as valid topics for research"{{sfn, Christian, 2020, p=73

Artificial general intelligence research

In the early 2000s, several researchers became concerned that mainstream AI was too focused on "measurable performance in specific applications"{{sfn, Russell, Norvig, 2021, p=32 (known as "narrow AI") and had abandoned AI's original goal of creating versatile, fully intelligent machines. An early critic was Nils Nilsson in 1995, and similar opinions were published by AI elder statesmen John McCarthy, Marvin Minsky, and Patrick Winston in 2007–2009. Minsky organized a symposium on "human-level AI" in 2004.{{sfn, Russell, Norvig, 2021, p=32 Ben Goertzel adopted the term "artificial general intelligence" for the new sub-field, founding a journal and holding conferences beginning in 2008.{{sfn, Russell, Norvig, 2021, p=33 The new field grew rapidly, buoyed by the continuing success of artificial neural networks and the hope that they were the key to AGI. Several competing companies, laboratories and foundations were founded to develop AGI in the 2010s. DeepMind was founded in 2010 by Demis Hassabis, Shane Legg and Mustafa Suleyman, with funding from Peter Thiel and later Elon Musk. The founders and financiers were deeply concerned about AI safety and the existential risk of AI. DeepMind's founders had a personal connection with Eliezer Yudkowsky, and Musk was among those who were actively raising the alarm.{{sfn, Metz, Weise, Grant, Isaac, 2023 Hassabis was both worried about the dangers of AGI and optimistic about its power; he hoped they could "solve AI, then solve everything else."{{sfn, Russell, Norvig, 2021, p=31 The New York Times wrote in 2023 "At the heart of this competition is a brain-stretching paradox. The people who say they are most worried about AI are among the most determined to create it and enjoy its riches. 
They have justified their ambition with their strong belief that they alone can keep AI from endangering Earth."{{sfn, Metz, Weise, Grant, Isaac, 2023 In 2012, Geoffrey Hinton (who had been leading neural network research since the 1980s) was approached by Baidu, which wanted to hire him and all his students for an enormous sum. Hinton decided to hold an auction, and at an AI conference at Lake Tahoe, he and his students sold themselves to Google for $44 million. Hassabis took notice and sold DeepMind to Google in 2014, on the condition that it would not accept military contracts and would be overseen by an ethics board.{{sfn, Metz, Weise, Grant, Isaac, 2023 Larry Page of Google, unlike Musk and Hassabis, was an optimist about the future of AI. Musk and Page became embroiled in an argument about the risk of AGI at Musk's 2015 birthday party. They had been friends for decades but stopped speaking to each other shortly afterwards. Musk attended the one and only meeting of DeepMind's ethics board, where it became clear that Google was uninterested in mitigating the harm of AGI. Frustrated by his lack of influence, he founded OpenAI in 2015, enlisting Sam Altman to run it and hiring top scientists. OpenAI began as a non-profit, "free from the economic incentives that were driving Google and other corporations."{{sfn, Metz, Weise, Grant, Isaac, 2023 Musk became frustrated again and left the company in 2018. OpenAI turned to Microsoft for continued financial support, and Altman and OpenAI formed a for-profit version of the company with more than $1 billion in financing.{{sfn, Metz, Weise, Grant, Isaac, 2023 In 2021, Dario Amodei and 14 other scientists left OpenAI over concerns that the company was putting profits above safety. They formed Anthropic, which soon had $6 billion in financing from Microsoft and Google.{{sfn, Metz, Weise, Grant, Isaac, 2023

Large language models, AI boom (2017–present)

{{Main, AI boom
The AI boom started with the initial development of key architectures and algorithms such as the transformer architecture in 2017, leading to the scaling and development of large language models exhibiting human-like traits of knowledge, attention and creativity. The new AI era began around 2020 and accelerated with the public release of scaled large language models (LLMs) such as ChatGPT in 2022.

Transformer architecture and large language models

{{Main, Large language models
In 2017, the transformer architecture was proposed by Google researchers. It exploits an attention mechanism and became widely used in large language models.{{sfn, Murgia, 2023 Large language models, based on the transformer, were developed by AGI companies: OpenAI released GPT-3 in 2020, and DeepMind released Gato in 2022. These are foundation models: they are trained on vast quantities of unlabeled data and can be adapted to a wide range of downstream tasks.{{citation needed, date=August 2024 These models can discuss a huge number of topics and display general knowledge. The question naturally arises: are these models an example of artificial general intelligence? Bill Gates was skeptical of the new technology and the hype that surrounded AGI. However, Altman presented him with a live demo of GPT-4 passing an advanced biology test, and Gates was convinced.{{sfn, Metz, Weise, Grant, Isaac, 2023 In 2023, Microsoft Research tested the model with a large variety of tasks, and concluded that "it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system".{{sfn, Bubeck, Chandrasekaran, Eldan, Gehrke, 2023 In 2024, OpenAI announced o3, an advanced reasoning model. On the Abstraction and Reasoning Corpus for Artificial General Intelligence (ARC-AGI) benchmark, developed by François Chollet in 2019, the model achieved an unofficial score of 87.5% on the semi-private test, surpassing the typical human score of 84%. The benchmark is intended as a necessary, but not sufficient, test for AGI. Speaking of the benchmark, Chollet has said "You’ll know AGI is here when the exercise of creating tasks that are easy for regular humans but hard for AI becomes simply impossible."

AI boom

{{Main, AI boom
Investment in AI grew exponentially after 2020, with venture capital funding for generative AI companies increasing dramatically. Total AI investments rose from $18 billion in 2014 to $119 billion in 2021, with generative AI accounting for approximately 30% of investments by 2023. According to metrics from 2017 to 2021, the United States outranked the rest of the world in terms of venture capital funding, number of startups, and AI patents granted.{{Cite web , last=Frank , first=Michael , date=September 22, 2023 , title=US Leadership in Artificial Intelligence Can Shape the 21st Century Global Order , url=https://thediplomat.com/2023/09/us-leadership-in-artificial-intelligence-can-shape-the-21st-century-global-order/ , access-date=2023-12-08 , website=The Diplomat , language=en-US The commercial AI scene became dominated by American Big Tech companies, whose investments in this area surpassed those from U.S.-based venture capitalists. OpenAI's valuation reached $86 billion by early 2024, while NVIDIA's market capitalization surpassed $3.3 trillion by mid-2024, making it the world's largest company by market capitalization as demand for AI-capable GPUs surged. 15.ai, launched in March 2020 by an anonymous MIT researcher, was one of the earliest examples of generative AI gaining widespread public attention during the initial stages of the AI boom. The free web application demonstrated the ability to clone character voices using neural networks with minimal training data, requiring as little as 15 seconds of audio to reproduce a voice, a capability later corroborated by OpenAI in 2024. The service went viral on social media platforms in early 2021, allowing users to generate speech for characters from popular media franchises, and became particularly notable for its pioneering role in popularizing AI voice synthesis for creative content and memes. 
{{Quote box , quote=Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system’s potential effects. OpenAI’s recent statement regarding artificial general intelligence, states that "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models." We agree. That point is now. Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium. , author=''Pause Giant AI Experiments: An Open Letter'' , source= , align=right , width=500px

Advent of AI for public use

ChatGPT was launched on November 30, 2022, marking a pivotal moment in artificial intelligence's public adoption. Within days of its release, it went viral, gaining over 100 million users in two months and becoming the fastest-growing consumer software application in history.{{Cite news , last=Milmo , first=Dan , date=December 2, 2023 , title=ChatGPT reaches 100 million users two months after launch , language=en-GB , work=The Guardian The chatbot's ability to engage in human-like conversations, write code, and generate creative content captured public imagination and led to rapid adoption across various sectors, including education, business, and research. ChatGPT's success prompted unprecedented responses from major technology companies: Google declared a "code red" and rapidly launched Bard (later renamed Gemini), while Microsoft incorporated the technology into Bing Chat. The rapid adoption of these AI technologies sparked intense debate about their implications. Notable AI researchers and industry leaders voiced both optimism and concern about the accelerating pace of development. In March 2023, over 20,000 signatories, including computer scientist Yoshua Bengio, Elon Musk, and Apple co-founder Steve Wozniak, signed "Pause Giant AI Experiments: An Open Letter", which called for a pause in advanced AI development, citing "profound risks to society and humanity." 
However, other prominent researchers like Juergen Schmidhuber took a more optimistic view, emphasizing that the majority of AI research aims to make "human lives longer and healthier and easier."{{cite news, url=https://www.theguardian.com/technology/2023/may/07/rise-of-artificial-intelligence-is-inevitable-but-should-not-be-feared-father-of-ai-says, title=Rise of artificial intelligence is inevitable but should not be feared, 'father of AI' says, last1=Taylor, first1=Josh, date=May 7, 2023, work=The Guardian By mid-2024, however, the financial sector began to scrutinize AI companies more closely, particularly questioning their capacity to produce a return on investment commensurate with their massive valuations. Some prominent investors raised concerns about market expectations becoming disconnected from fundamental business realities. Jeremy Grantham, co-founder of GMO LLC, warned investors to "be quite careful" and drew parallels to previous technology-driven market bubbles. Similarly, Jeffrey Gundlach, CEO of DoubleLine Capital, explicitly compared the AI boom to the dot-com bubble of the late 1990s, suggesting that investor enthusiasm might be outpacing realistic near-term capabilities and revenue potential. These concerns were amplified by the substantial market capitalizations of AI-focused companies, many of which had yet to demonstrate sustainable profitability models. In March 2024, Anthropic released the Claude 3 family of large language models, comprising Claude 3 Haiku, Sonnet, and Opus. The models demonstrated significant improvements in capabilities across various benchmarks, with Claude 3 Opus notably outperforming leading models from OpenAI and Google on several of them. In June 2024, Anthropic released Claude 3.5 Sonnet, which demonstrated improved performance compared to the larger Claude 3 Opus, particularly in areas such as coding, multistep workflows, and image analysis.

2024 Nobel Prizes

In 2024, the Royal Swedish Academy of Sciences awarded Nobel Prizes in recognition of groundbreaking contributions to artificial intelligence. The recipients included:
* In physics: John Hopfield for his work on physics-inspired Hopfield networks, and Geoffrey Hinton for foundational contributions to Boltzmann machines and

Further study and development of AI

In January 2025, OpenAI announced ChatGPT Gov, a version of ChatGPT specifically designed for US government agencies to use securely.{{Cite web , title=ChatGPT Gov is designed to streamline government agencies’ access to OpenAI’s frontier models , website=OpenAI OpenAI said that agencies could deploy ChatGPT Gov in a Microsoft Azure cloud or Azure Government cloud, "on top of Microsoft’s Azure’s OpenAI Service." OpenAI's announcement stated that "Self-hosting ChatGPT Gov enables agencies to more easily manage their own security, privacy, and compliance requirements, such as stringent cybersecurity frameworks (IL5, CJIS, ITAR, FedRAMP High). Additionally, we believe this infrastructure will expedite internal authorization of OpenAI’s tools for the handling of non-public sensitive data."

Robotic Integration and Practical Applications of Artificial Intelligence (2025–present)

Advanced artificial intelligence (AI) systems, capable of understanding and responding to human dialogue with high accuracy, have matured enough to enable integration with robotics, transforming industries such as manufacturing, household automation, healthcare, public services, and materials research. AI also accelerates scientific research through advanced data analysis and hypothesis generation. Countries including China, the United States, and Japan have invested significantly in policies and funding to deploy AI-powered robots, addressing labor shortages, boosting innovation, and enhancing efficiency, while implementing regulatory frameworks to ensure ethical and safe development.

China

Some observers have called 2025 the "Year of AI Robotics," a pivotal moment in the integration of artificial intelligence (AI) and robotics. In 2025, China invested approximately 730 billion yuan (roughly US$100 billion) to advance AI and robotics in smart manufacturing and healthcare. The "14th Five-Year Plan" (2021–2025) prioritized service robots, with AI systems enabling robots to perform complex tasks such as assisting in surgeries or automating factory assembly lines. For example, AI-powered humanoid robots in Chinese hospitals can interpret patient requests, deliver supplies, and assist nurses with routine tasks, suggesting that existing AI conversational capabilities are robust enough for practical robotic applications. Starting in September 2025, China mandated labeling of AI-generated content to promote transparency and public trust in these technologies.

United States

In January 2025, a significant development in AI infrastructure investment occurred with the formation of Stargate LLC. The joint venture, created by OpenAI, SoftBank, Oracle, and MGX, announced plans to invest US$500 billion in AI infrastructure across the United States by 2029, starting with US$100 billion, in order to support the re-industrialization of the United States and provide a strategic capability to protect the national security of America and its allies. The venture was formally announced by U.S. President Donald Trump on January 21, 2025, with SoftBank CEO Masayoshi Son appointed as chairman.{{Cite news , title=OpenAI, SoftBank, Oracle to invest US$500 BILLION in AI, Trump says. , url=https://www.reuters.com/technology/artificial-intelligence/openai-softbank-oracle-invest-500-bln-ai-trump-says-2025-01-21/ , access-date=January 22, 2025 , work=Reuters In 2025, the U.S. government allocated approximately $2 billion to integrate AI and robotics into manufacturing and logistics, leveraging AI's ability to process natural language and execute user instructions. State governments supplemented this with funding for service robots, such as those deployed in warehouses to fulfill verbal commands for inventory management or in eldercare facilities to respond to residents' requests for assistance. These applications suggest that merging advanced AI, already proficient in human interaction, with robotic hardware is a practical step forward. Some funds were directed to defense, including lethal autonomous weapons and military robots. In January 2025, Executive Order 14179 directed the development of an "AI Action Plan" to accelerate innovation and deployment of these technologies.

Impact

In the 2020s, increased investment in AI by governments and organizations worldwide has accelerated the advancement of artificial intelligence, driving scientific breakthroughs, boosting workforce productivity, and transforming industries through the automation of complex tasks.{{Cite web , url=https://www.reuters.com/world/china/chinas-ai-powered-humanoid-robots-aim-transform-manufacturing-2025-05-13/ , title=China's AI-powered humanoid robots aim to transform manufacturing , publisher=Reuters , date=2025-05-13 , access-date=2025-05-30 , language=en As advanced AI systems are integrated into more sectors, these developments are expected to reshape smart manufacturing and service industries, and potentially everyday life.

See also

* History of artificial neural networks
* History of knowledge representation and reasoning
* History of natural language processing
* Outline of artificial intelligence
* Progress in artificial intelligence
* Timeline of artificial intelligence
* Timeline of machine learning

Notes

{{notelist
{{Reflist

References