Convex Optimization on:

[Wikipedia]

[Google]

[Amazon]

Convex optimization is a subfield of

*

*

Introductory Lectures on Convex Optimization

', Kluwer Academic Publishers * * * Schmit, L.A.; Fleury, C. 1980: ''Structural synthesis by combining approximation concepts and dual methods''. J. Amer. Inst. Aeronaut. Astronaut 18, 1252-1260

EE364a: Convex Optimization I

an

EE364b: Convex Optimization II

Stanford course homepages

6.253: Convex Analysis and Optimization

an MIT OCW course homepage * Brian Borchers

An overview of software for convex optimizationConvex Optimization Book by Lieven Vandenberghe and Stephen P. Boyd

{{Convex analysis and variational analysis Convex analysis Mathematical optimization

mathematical optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfiel ...

that studies the problem of minimizing convex function

In mathematics, a real-valued function is called convex if the line segment between any two distinct points on the graph of a function, graph of the function lies above or on the graph between the two points. Equivalently, a function is conve ...

s over convex set

In geometry, a set of points is convex if it contains every line segment between two points in the set.

For example, a solid cube (geometry), cube is a convex set, but anything that is hollow or has an indent, for example, a crescent shape, is n ...

s (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard

In computational complexity theory, a computational problem ''H'' is called NP-hard if, for every problem ''L'' which can be solved in non-deterministic polynomial-time, there is a polynomial-time reduction from ''L'' to ''H''. That is, assumi ...

.

Definition

Abstract form

A convex optimization problem is defined by two ingredients: * The ''objective function'', which is a real-valuedconvex function

In mathematics, a real-valued function is called convex if the line segment between any two distinct points on the graph of a function, graph of the function lies above or on the graph between the two points. Equivalently, a function is conve ...

of ''n'' variables, ;

* The ''feasible set'', which is a convex subset

In geometry, a set of points is convex if it contains every line segment between two points in the set.

For example, a solid cube (geometry), cube is a convex set, but anything that is hollow or has an indent, for example, a crescent shape, is n ...

.

The goal of the problem is to find some attaining

:.

In general, there are three options regarding the existence of a solution:

* If such a point ''x''* exists, it is referred to as an ''optimal point'' or ''solution''; the set of all optimal points is called the ''optimal set''; and the problem is called ''solvable''.

* If is unbounded below over , or the infimum is not attained, then the optimization problem is said to be ''unbounded''.

* Otherwise, if is the empty set, then the problem is said to be ''infeasible''.

Standard form

A convex optimization problem is in ''standard form'' if it is written as : where: * is the vector of optimization variables; * The objective function is aconvex function

In mathematics, a real-valued function is called convex if the line segment between any two distinct points on the graph of a function, graph of the function lies above or on the graph between the two points. Equivalently, a function is conve ...

;

* The inequality constraint functions , , are convex functions;

* The equality constraint functions , , are affine transformation

In Euclidean geometry, an affine transformation or affinity (from the Latin, '' affinis'', "connected with") is a geometric transformation that preserves lines and parallelism, but not necessarily Euclidean distances and angles.

More general ...

s, that is, of the form: , where is a vector and is a scalar.

The feasible set of the optimization problem consists of all points satisfying the inequality and the equality constraints. This set is convex because is convex, the sublevel sets of convex functions are convex, affine sets are convex, and the intersection of convex sets is convex.

Many optimization problems can be equivalently formulated in this standard form. For example, the problem of maximizing a concave function

In mathematics, a concave function is one for which the function value at any convex combination of elements in the domain is greater than or equal to that convex combination of those domain elements. Equivalently, a concave function is any funct ...

can be re-formulated equivalently as the problem of minimizing the convex function . The problem of maximizing a concave function over a convex set is commonly called a convex optimization problem.

Epigraph form (standard form with linear objective)

In the standard form it is possible to assume, without loss of generality, that the objective function ''f'' is alinear function

In mathematics, the term linear function refers to two distinct but related notions:

* In calculus and related areas, a linear function is a function whose graph is a straight line, that is, a polynomial function of degree zero or one. For di ...

. This is because any program with a general objective can be transformed into a program with a linear objective by adding a single variable t and a single constraint, as follows:

:

Conic form

Every convex program can be presented in a ''conic form'', which means minimizing a linear objective over the intersection of an affine plane and a convex cone: : where K is a closed pointed convex cone, L is alinear subspace

In mathematics, the term ''linear'' is used in two distinct senses for two different properties:

* linearity of a ''function (mathematics), function'' (or ''mapping (mathematics), mapping'');

* linearity of a ''polynomial''.

An example of a li ...

of R''n'', and b is a vector in R''n''. A linear program in standard form is the special case in which K is the nonnegative orthant of R''n''.

Eliminating linear equality constraints

It is possible to convert a convex program in standard form, to a convex program with no equality constraints. Denote the equality constraints ''hi''(''x'')=0 as ''Ax''=''b'', where ''A'' has ''n'' columns. If ''Ax''=''b'' is infeasible, then of course the original problem is infeasible. Otherwise, it has some solution ''x''0 , and the set of all solutions can be presented as: ''Fz''+''x''0, where ''z'' is in ''Rk'', ''k''=''n''-rank(''A''), and ''F'' is an ''n''-by-''k'' matrix. Substituting ''x'' = ''Fz''+''x''0 in the original problem gives: where the variables are z. Note that there are rank(''A'') fewer variables. This means that, in principle, one can restrict attention to convex optimization problems without equality constraints. In practice, however, it is often preferred to retain the equality constraints, since they might make some algorithms more efficient, and also make the problem easier to understand and analyze.Special cases

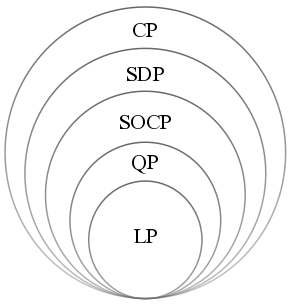

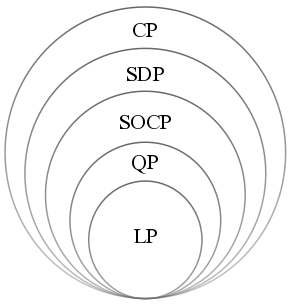

The following problem classes are all convex optimization problems, or can be reduced to convex optimization problems via simple transformations: *

*Linear programming

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements and objective are represented by linear function#As a polynomia ...

problems are the simplest convex programs. In LP, the objective and constraint functions are all linear.

* Quadratic programming are the next-simplest. In QP, the constraints are all linear, but the objective may be a convex quadratic function.

* Second order cone programming are more general.

* Semidefinite programming are more general.

* Conic optimization are even more general - see figure to the right,

Other special cases include;

* Least squares

* Quadratic minimization with convex quadratic constraints

* Geometric programming

* Entropy maximization with appropriate constraints.Properties

The following are useful properties of convex optimization problems: * every point that is local minimum is a also a global minimum; * the optimal set is convex; * if the objective function is ''strictly'' convex, then the problem has at most one optimal point. These results are used by the theory of convex minimization along with geometric notions fromfunctional analysis

Functional analysis is a branch of mathematical analysis, the core of which is formed by the study of vector spaces endowed with some kind of limit-related structure (for example, Inner product space#Definition, inner product, Norm (mathematics ...

(in Hilbert spaces) such as the Hilbert projection theorem, the separating hyperplane theorem, and Farkas' lemma.

Algorithms

Unconstrained and equality-constrained problems

The convex programs easiest to solve are the ''unconstrained'' problems, or the problems with only equality constraints. As the equality constraints are all linear, they can be eliminated withlinear algebra

Linear algebra is the branch of mathematics concerning linear equations such as

:a_1x_1+\cdots +a_nx_n=b,

linear maps such as

:(x_1, \ldots, x_n) \mapsto a_1x_1+\cdots +a_nx_n,

and their representations in vector spaces and through matrix (mathemat ...

and integrated into the objective, thus converting an equality-constrained problem into an unconstrained one.

In the class of unconstrained (or equality-constrained) problems, the simplest ones are those in which the objective is quadratic. For these problems, the KKT conditions (which are necessary for optimality) are all linear, so they can be solved analytically.

For unconstrained (or equality-constrained) problems with a general convex objective that is twice-differentiable, Newton's method

In numerical analysis, the Newton–Raphson method, also known simply as Newton's method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a ...

can be used. It can be seen as reducing a general unconstrained convex problem, to a sequence of quadratic problems.Newton's method can be combined with line search for an appropriate step size, and it can be mathematically proven to converge quickly.

Other efficient algorithms for unconstrained minimization are gradient descent (a special case of steepest descent

Gradient descent is a method for unconstrained mathematical optimization. It is a :First order methods, first-order Iterative algorithm, iterative algorithm for minimizing a differentiable function, differentiable multivariate function.

The ide ...

).

General problems

The more challenging problems are those with inequality constraints. A common way to solve them is to reduce them to unconstrained problems by adding a barrier function, enforcing the inequality constraints, to the objective function. Such methods are called interior point methods.They have to be initialized by finding a feasible interior point using by so-called ''phase I'' methods, which either find a feasible point or show that none exist. Phase I methods generally consist of reducing the search in question to a simpler convex optimization problem. Convex optimization problems can also be solved by the following contemporary methods: * Bundle methods (Wolfe, Lemaréchal, Kiwiel), and * Subgradient projection methods (Polyak), * Interior-point methods, which make use of self-concordant barrier functions and self-regular barrier functions. *Cutting-plane methods

In mathematics, mathematical Optimization (mathematics), optimization, the cutting-plane method is any of a variety of optimization methods that iteratively refine a feasible set or objective function by means of linear inequalities, termed ''cut ...

*Ellipsoid method

In mathematical optimization, the ellipsoid method is an iterative method for convex optimization, minimizing convex functions over convex sets. The ellipsoid method generates a sequence of ellipsoids whose volume uniformly decreases at every ste ...

* Subgradient method

* Dual subgradients and the drift-plus-penalty method

Subgradient methods can be implemented simply and so are widely used. Dual subgradient methods are subgradient methods applied to a dual problem

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then th ...

. The drift-plus-penalty method is similar to the dual subgradient method, but takes a time average of the primal variables.

Lagrange multipliers

Consider a convex minimization problem given in standard form by a cost function and inequality constraints for . Then the domain is: : The Lagrangian function for the problem is : For each point in that minimizes over , there exist real numbers called Lagrange multipliers, that satisfy these conditions simultaneously: # minimizes over all # with at least one # (complementary slackness). If there exists a "strictly feasible point", that is, a point satisfying : then the statement above can be strengthened to require that . Conversely, if some in satisfies (1)–(3) for scalars with then is certain to minimize over .Software

There is a large software ecosystem for convex optimization. This ecosystem has two main categories: ''solvers'' on the one hand and ''modeling tools'' (or ''interfaces'') on the other hand. Solvers implement the algorithms themselves and are usually written in C. They require users to specify optimization problems in very specific formats which may not be natural from a modeling perspective. Modeling tools are separate pieces of software that let the user specify an optimization in higher-level syntax. They manage all transformations to and from the user's high-level model and the solver's input/output format. Below are two tables. The first shows shows modelling tools (such as CVXPY and JuMP.jl) and the second solvers (such as SCS and MOSEK). They are by no means exhaustive.Applications

Convex optimization can be used to model problems in a wide range of disciplines, such as automaticcontrol systems

A control system manages, commands, directs, or regulates the behavior of other devices or systems using control loops. It can range from a single home heating controller using a thermostat controlling a domestic boiler to large industrial co ...

, estimation and signal processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing ''signals'', such as audio signal processing, sound, image processing, images, Scalar potential, potential fields, Seismic tomograph ...

, communications and networks, electronic circuit design

In electrical engineering, the process of circuit design can cover systems ranging from complex electronic systems down to the individual transistors within an integrated circuit. One person can often do the design process without needing a pl ...

, data analysis and modeling, finance

Finance refers to monetary resources and to the study and Academic discipline, discipline of money, currency, assets and Liability (financial accounting), liabilities. As a subject of study, is a field of Business administration, Business Admin ...

, statistics

Statistics (from German language, German: ', "description of a State (polity), state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a s ...

( optimal experimental design), and structural optimization, where the approximation concept has proven to be efficient. Convex optimization can be used to model problems in the following fields:

* Portfolio optimization.

* Worst-case risk analysis.

* Optimal advertising.

* Variations of statistical regression (including regularization and quantile regression).

* Model fitting (particularly multiclass classification).

* Electricity generation

Electricity generation is the process of generating electric power from sources of primary energy. For electric utility, utilities in the electric power industry, it is the stage prior to its Electricity delivery, delivery (Electric power transm ...

optimization.

* Combinatorial optimization

Combinatorial optimization is a subfield of mathematical optimization that consists of finding an optimal object from a finite set of objects, where the set of feasible solutions is discrete or can be reduced to a discrete set. Typical combina ...

.

* Non-probabilistic modelling of uncertainty

Uncertainty or incertitude refers to situations involving imperfect or unknown information. It applies to predictions of future events, to physical measurements that are already made, or to the unknown, and is particularly relevant for decision ...

.

* Localization using wireless signals Ahmad Bazzi, Dirk TM Slock, and Lisa Meilhac. "Online angle of arrival estimation in the presence of mutual coupling." 2016 IEEE Statistical Signal Processing Workshop (SSP). IEEE, 2016.

Extensions

Extensions of convex optimization include the optimization of biconvex, pseudo-convex, and quasiconvex functions. Extensions of the theory ofconvex analysis

Convex analysis is the branch of mathematics devoted to the study of properties of convex functions and convex sets, often with applications in convex optimization, convex minimization, a subdomain of optimization (mathematics), optimization theor ...

and iterative methods for approximately solving non-convex minimization problems occur in the field of generalized convexity, also known as abstract convex analysis.

See also

* Duality * Karush–Kuhn–Tucker conditions *Optimization problem

In mathematics, engineering, computer science and economics

Economics () is a behavioral science that studies the Production (economics), production, distribution (economics), distribution, and Consumption (economics), consumption of goo ...

* Proximal gradient method

* Algorithmic problems on convex sets

Notes

References

* * * * * * Hiriart-Urruty, Jean-Baptiste, and Lemaréchal, Claude. (2004). ''Fundamentals of Convex analysis''. Berlin: Springer. * * * * * * Nesterov, Yurii. (2004).Introductory Lectures on Convex Optimization

', Kluwer Academic Publishers * * * Schmit, L.A.; Fleury, C. 1980: ''Structural synthesis by combining approximation concepts and dual methods''. J. Amer. Inst. Aeronaut. Astronaut 18, 1252-1260

External links

EE364a: Convex Optimization I

an

EE364b: Convex Optimization II

Stanford course homepages

6.253: Convex Analysis and Optimization

an MIT OCW course homepage * Brian Borchers

An overview of software for convex optimization

{{Convex analysis and variational analysis Convex analysis Mathematical optimization