
x87

x87 is a floating-point-related subset of the x86 architecture instruction set. It originated as an extension of the 8086 instruction set in the form of optional floating-point coprocessors that work in tandem with corresponding x86 CPUs. These microchips have names ending in "87", and the extension is also known as the NPX (numeric processor extension). Like other extensions to the basic instruction set, x87 instructions are not strictly needed to construct working programs, but they provide hardware and microcode implementations of common numerical tasks, allowing these tasks to be performed much faster than corresponding machine code routines can. The x87 instruction set includes instructions for basic floating-point operations such as addition, subtraction and comparison, as well as for more complex numerical operations, such as the computation of the tangent function and its inverse. Most x86 processors since the Intel 80486 have had these x87 instructions implemented in the main CP ...

Extended Precision

Extended precision refers to floating-point number formats that provide greater precision than the basic floating-point formats. Extended-precision formats support a basic format by minimizing roundoff and overflow errors in intermediate values of expressions on the base format. In contrast to extended precision, arbitrary-precision arithmetic refers to implementations of much larger numeric types (with a storage count that usually is not a power of two) using special software (or, rarely, hardware).

Extended-precision implementations

There is a long history of extended floating-point formats reaching back nearly to the middle of the last century. Various manufacturers have used different formats for extended precision for different machines. In many cases the format of the extended precision is not quite the same as a scale-up of the ordinary single- and double-precision formats it is meant to extend. In a few cases the implementation was merely a software-based change i ...

Floating-point

In computing, floating-point arithmetic (FP) is arithmetic on subsets of real numbers formed by a *significand* (a signed sequence of a fixed number of digits in some base) multiplied by an integer power of that base. Numbers of this form are called floating-point numbers. For example, the number 2469/200 is a floating-point number in base ten with five digits:

$$2469/200 = 12.345 = \underbrace{12345}_{\text{significand}} \times \underbrace{10}_{\text{base}}{}^{\overbrace{-3}^{\text{exponent}}}$$

However, 7716/625 = 12.3456 is not a floating-point number in base ten with five digits; it needs six digits. The nearest floating-point number with only five digits is 12.346. And 1/3 = 0.3333… is not a floating-point number in base ten with any finite number of digits. In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. Floating-point arithmetic operations, such as addition and division, approximate the correspond ...
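The five-digit base-ten examples above can be reproduced with a short sketch. It assumes Python's standard `decimal` module with a context precision of 5 and round-half-even rounding; the helper name `to_five_digits` is hypothetical and chosen only for illustration.

```python
from decimal import Context, Decimal, ROUND_HALF_EVEN
from fractions import Fraction

# A base-ten floating-point system with five significant digits (assumed setup).
FIVE_DIGITS = Context(prec=5, rounding=ROUND_HALF_EVEN)

def to_five_digits(value: Fraction) -> Decimal:
    """Round an exact rational to the nearest five-digit decimal float."""
    return FIVE_DIGITS.divide(Decimal(value.numerator), Decimal(value.denominator))

a = to_five_digits(Fraction(2469, 200))  # 12.345 fits in five digits exactly
b = to_five_digits(Fraction(7716, 625))  # 12.3456 needs six digits, so it is rounded
c = to_five_digits(Fraction(1, 3))       # 1/3 has no finite decimal expansion

print(a, b, c)
# Only the first value is represented without error.
print(Fraction(a) == Fraction(2469, 200), Fraction(b) == Fraction(7716, 625))
```

Using `Fraction` for the inputs keeps the "true" value exact, so any difference after conversion comes only from the five-digit limit.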

Superscalar

A superscalar processor (or multiple-issue processor) is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. In contrast to a scalar processor, which can execute at most one instruction per clock cycle, a superscalar processor can execute or start executing more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to different execution units on the processor. It therefore allows more throughput (the number of instructions that can be executed in a unit of time, which can even be less than 1) than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor (or a core if the processor is a multi-core processor), but an execution resource within a single CPU, such as an arithmetic logic unit. While a superscalar CPU is typically also pipelined, superscalar execution and pipelining are considered different performance enhancement techni ...

Second

The second (symbol: s) is a unit of time derived from the division of the day first into 24 hours, then into 60 minutes, and finally into 60 seconds each (24 × 60 × 60 = 86400). The current and formal definition in the International System of Units (SI) is more precise: The second [...] is defined by taking the fixed numerical value of the caesium frequency, ΔνCs, the unperturbed ground-state hyperfine transition frequency of the caesium 133 atom, to be 9192631770 when expressed in the unit Hz, which is equal to s⁻¹. This current definition was adopted in 1967 when it became feasible to define the second based on fundamental properties of nature with caesium clocks. As the speed of Earth's rotation varies and is slowing ever so slightly, a leap second is added at irregular intervals to civil time to keep clocks in sync with Earth's rotation. The definition that is based on 1/86400 of a rotation of the Earth is still used by the Universal Time 1 (UT1) system.

Etymology

"Minute" ...

Arithmetic Precision

Significant figures, also referred to as significant digits, are specific digits within a number that is written in positional notation that carry both reliability and necessity in conveying a particular quantity. When presenting the outcome of a measurement (such as length, pressure, volume, or mass), if the number of digits exceeds what the measurement instrument can resolve, only the digits that are determined by the resolution are dependable and therefore considered significant. For instance, if a length measurement yields 114.8 mm, using a ruler with the smallest interval between marks at 1 mm, the first three digits (1, 1, and 4, representing 114 mm) are certain and constitute significant figures. Further, digits that are uncertain yet meaningful are also included in the significant figures. In this example, the last digit (8, contributing 0.8 mm) is likewise considered significant despite its uncertainty. Therefore, this measurement contains f ...

Significand

The significand (also coefficient, sometimes argument, or more ambiguously mantissa, fraction, or characteristic) is the first (left) part of a number in scientific notation or related concepts in floating-point representation, consisting of its significant digits. For negative numbers, it does not include the initial minus sign. Depending on the interpretation of the exponent, the significand may represent an integer or a fractional number, which may cause the term "mantissa" to be misleading, since the *mantissa* of a logarithm is always its fractional part. Although the other names mentioned are common, *significand* is the word used by IEEE 754, an important technical standard for floating-point arithmetic. In mathematics, the term "argument" may also be ambiguous, since "the argument of a number" sometimes refers to the length of a circular arc from 1 to a number on the unit circle in the complex plane.

Example

The number 123.45 can be represented as a decimal floati ...
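As an illustration of the integer interpretation of the significand, the following minimal sketch splits a decimal literal into sign, integer significand, and exponent. It relies on `Decimal.as_tuple()` from Python's standard library; the function name `split_decimal` is hypothetical, and the sketch assumes finite inputs only.

```python
from decimal import Decimal

def split_decimal(text: str) -> tuple[int, int, int]:
    """Return (sign, integer significand, base-ten exponent) for a finite decimal."""
    sign, digits, exponent = Decimal(text).as_tuple()
    # The digits tuple holds the significant digits; the minus sign is kept separately.
    significand = int("".join(str(d) for d in digits))
    return sign, significand, exponent

sign, significand, exponent = split_decimal("123.45")
# 123.45 is stored as 12345 scaled by ten to the power -2.
print(sign, significand, exponent)
```

With this interpretation the significand is an integer (12345) and the exponent is negative; a normalized fractional form such as 1.2345 × 10² describes the same value with a different exponent.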

64-bit

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer. From the software perspective, 64-bit computing means the use of machine code with 64-bit virtual memory addresses. However, not all 64-bit instruction sets support full 64-bit virtual memory addresses; x86-64 and AArch64, for example, support only 48 bits of virtual address, with the remaining 16 bits of the virtual address required to be all zeros (000...) or all ones (111...), and several 64-bit instruction sets support fewer than 64 bits of physical memory address. The term *64-bit* also describes a generation of computers in which 64-bit processors are the norm. 64 bits is a word size that defines certain classes of computer archi ...
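The 48-bit restriction can be made concrete with a small check. This is a sketch under one stated assumption: it follows the x86-64 "canonical address" convention, in which the upper 16 bits must be copies of bit 47, so bits 47 through 63 are either all zeros or all ones. The function name `is_canonical_48` is hypothetical.

```python
def is_canonical_48(addr: int) -> bool:
    """True if a 64-bit value is a valid 48-bit virtual address (assumed x86-64 rule)."""
    if not 0 <= addr < 2**64:
        raise ValueError("address must fit in 64 bits")
    upper = addr >> 47  # bits 47..63, seventeen bits in total
    return upper == 0 or upper == (1 << 17) - 1

print(is_canonical_48(0x0000_7FFF_FFFF_FFFF))  # top of the lower half
print(is_canonical_48(0xFFFF_8000_0000_0000))  # bottom of the upper half
print(is_canonical_48(0x0000_8000_0000_0000))  # falls in the unusable gap
```

Checking bit 47 together with the upper 16 bits is what separates the two usable halves of the address space from the gap between them.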

Round-off Error

In computing, a roundoff error, also called rounding error, is the difference between the result produced by a given algorithm using exact arithmetic and the result produced by the same algorithm using finite-precision, rounded arithmetic. Rounding errors are due to inexactness in the representation of real numbers and the arithmetic operations done with them. This is a form of quantization error. When using approximation equations or algorithms, especially when using finitely many digits to represent real numbers (which in theory have infinitely many digits), one of the goals of numerical analysis is to estimate computation errors. Computation errors, also called numerical errors, include both truncation errors and roundoff errors. When a sequence of calculations with an input involving any roundoff error is made, errors may accumulate, sometimes dominating the calculation. In ill-conditioned problems, significant error may accumulate. In short, there are two major facets ...
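The definition above, exact result versus finite-precision result of the same algorithm, can be sketched by running one summation twice: once with exact rationals and once with binary floating point. The choice of summing one tenth ten times is only an illustrative assumption; the variable names are hypothetical.

```python
from fractions import Fraction

# Same algorithm (repeated addition), two kinds of arithmetic.
exact_sum = sum(Fraction(1, 10) for _ in range(10))  # exact rational arithmetic
float_sum = sum(0.1 for _ in range(10))              # rounded binary64 arithmetic

# Roundoff error: rounded result minus exact result, itself computed exactly.
roundoff_error = Fraction(float_sum) - exact_sum

print(exact_sum)              # the exact answer
print(float_sum == 1.0)       # the rounded answer misses it
print(float(roundoff_error))  # tiny, but not zero
```

The error here has two sources named in the text: 0.1 is not exactly representable in base two, and each addition rounds its result again, so the errors accumulate across the sequence of operations.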

SSE2

SSE2 (Streaming SIMD Extensions 2) is one of the Intel SIMD (Single Instruction, Multiple Data) processor supplementary instruction sets introduced by Intel with the initial version of the Pentium 4 in 2000. SSE2 instructions allow the use of XMM (SIMD) registers on x86 instruction set architecture processors. These registers can load up to 128 bits of data and perform instructions, such as vector addition and multiplication, simultaneously. SSE2 introduced double-precision floating-point instructions in addition to the single-precision floating-point and integer instructions found in SSE. SSE2 extends the earlier SSE instruction set by adding 144 new instructions to the previous 70 instructions. SSE2 is intended to fully replace MMX, a SIMD instruction set found on IA-32 architecture processors. Competing chip-maker AMD added support for SSE2 with the introduction of their Opteron and Athlon 64 ranges of AMD64 64-bit CPUs in 2003. SSE2 was extended to create SSE3 in 2004, and e ...

ACM Transactions On Programming Languages And Systems

The *ACM Transactions on Programming Languages and Systems* (*TOPLAS*) is a bimonthly, open access, peer-reviewed scientific journal on the topic of programming languages published by the Association for Computing Machinery.

Background

Published since 1979, the journal's scope includes programming language design, implementation, and semantics of programming languages, compilers and interpreters, run-time systems, storage allocation and garbage collection, and formal specification, testing, and verification of software. It is indexed in Scopus and SCImago. The editor-in-chief is Andrew Myers (Cornell University). According to the *Journal Citation Reports*, the journal had a 2020 impact factor of 0.410.

IEEE 754

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a technical standard for floating-point arithmetic originally established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard. The standard defines:

* *arithmetic formats:* sets of binary and decimal floating-point data, which consist of finite numbers (including signed zeros and subnormal numbers), infinities, and special "not a number" values (NaNs)
* *interchange formats:* encodings (bit strings) that may be used to exchange floating-point data in an efficient and compact form
* *rounding rules:* properties to be satisfied when rounding numbers during arithmetic and conversions
* *operations:* arithmetic and other operatio ...

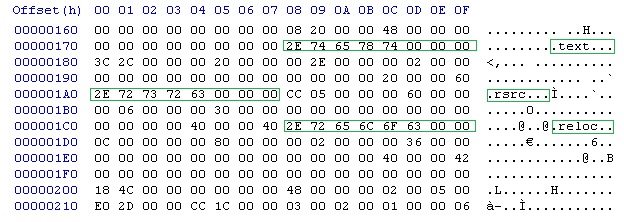

IEEE 754-1985

IEEE 754-1985 is a historic industry standard for representing floating-point numbers in computers, officially adopted in 1985 and superseded in 2008 by IEEE 754-2008, and then again in 2019 by the minor revision IEEE 754-2019. During its 23 years, it was the most widely used format for floating-point computation. It was implemented in software, in the form of floating-point libraries, and in hardware, in the instructions of many CPUs and FPUs. The first integrated circuit to implement the draft of what was to become IEEE 754-1985 was the Intel 8087. IEEE 754-1985 represents numbers in binary, providing definitions for four levels of precision, of which the two most commonly used are single precision and double precision. The standard also defines representations for positive and negative infinity, a "negative zero", five exceptions to handle invalid results like division by zero, special values called NaNs for representing those exceptions, denormal numbers to represent numbers smaller than the smallest normal numbers, a ...
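The special values listed above can be told apart by looking at the bit fields of a stored number. The sketch below assumes the double-precision layout (1 sign bit, 11 exponent bits, 52 fraction bits) and uses Python's standard `struct` module to reach the raw bits; the helper names `decode_binary64` and `classify` are hypothetical.

```python
import struct

def decode_binary64(x: float) -> tuple[int, int, int]:
    """Split a double into (sign, biased exponent, fraction) bit fields."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63
    exponent = (bits >> 52) & 0x7FF        # 11 exponent bits
    fraction = bits & ((1 << 52) - 1)      # 52 fraction bits
    return sign, exponent, fraction

def classify(x: float) -> str:
    """Name the kind of value encoded by the exponent and fraction fields."""
    _, exponent, fraction = decode_binary64(x)
    if exponent == 0x7FF:                  # all-ones exponent: infinity or NaN
        return "infinity" if fraction == 0 else "NaN"
    if exponent == 0:                      # all-zeros exponent: zero or denormal
        return "zero" if fraction == 0 else "denormal"
    return "normal"

for value in (1.0, -0.0, float("inf"), float("nan"), 5e-324):
    print(value, decode_binary64(value), classify(value))
```

Negative zero shows up as a set sign bit with every other bit clear, which is why it compares equal to positive zero yet remains a distinct encoding.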