|

Write Barrier

In operating systems, write barrier is a mechanism for enforcing a particular ordering in a sequence of writes to a storage system in a computer system. For example, a write barrier in a file system is a mechanism (program logic) that ensures that in-memory file system state is written out to persistent storage in the correct order. In Garbage collection A write barrier in a garbage collector is a fragment of code emitted by the compiler immediately before every store operation to ensure that (e.g.) generational invariants are maintained. In Computer storage A write barrier in a memory system, also known as a memory barrier, is a hardware-specific compiler intrinsic that ensures that all preceding memory operations "happen before" all subsequent ones. See also * Native Command Queuing In computing, Native Command Queuing (NCQ) is an extension of the Serial ATA protocol allowing hard disk drives to internally optimize the order in which received read and write comman ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Operating System

An operating system (OS) is system software that manages computer hardware, software resources, and provides common daemon (computing), services for computer programs. Time-sharing operating systems scheduler (computing), schedule tasks for efficient use of the system and may also include accounting software for cost allocation of Scheduling (computing), processor time, mass storage, printing, and other resources. For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computer from cellular phones and video game consoles to web servers and supercomputers. The dominant general-purpose personal computer operating system is Microsoft Windows with a market share of aroun ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

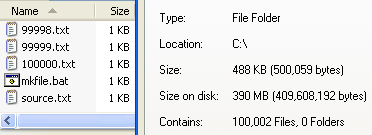

File System

In computing, file system or filesystem (often abbreviated to fs) is a method and data structure that the operating system uses to control how data is stored and retrieved. Without a file system, data placed in a storage medium would be one large body of data with no way to tell where one piece of data stopped and the next began, or where any piece of data was located when it was time to retrieve it. By separating the data into pieces and giving each piece a name, the data are easily isolated and identified. Taking its name from the way a paper-based data management system is named, each group of data is called a " file". The structure and logic rules used to manage the groups of data and their names is called a "file system." There are many kinds of file systems, each with unique structure and logic, properties of speed, flexibility, security, size and more. Some file systems have been designed to be used for specific applications. For example, the ISO 9660 file system is desig ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Garbage Collection (computer Science)

In computer science, garbage collection (GC) is a form of automatic memory management. The ''garbage collector'' attempts to reclaim memory which was allocated by the program, but is no longer referenced; such memory is called '' garbage''. Garbage collection was invented by American computer scientist John McCarthy around 1959 to simplify manual memory management in Lisp. Garbage collection relieves the programmer from doing manual memory management, where the programmer specifies what objects to de-allocate and return to the memory system and when to do so. Other, similar techniques include stack allocation, region inference, and memory ownership, and combinations thereof. Garbage collection may take a significant proportion of a program's total processing time, and affect performance as a result. Resources other than memory, such as network sockets, database handles, windows, file descriptors, and device descriptors, are not typically handled by garbage collection, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Hierarchy

In computer architecture, the memory hierarchy separates computer storage into a hierarchy based on response time. Since response time, complexity, and capacity are related, the levels may also be distinguished by their performance and controlling technologies. Memory hierarchy affects performance in computer architectural design, algorithm predictions, and lower level programming constructs involving locality of reference. Designing for high performance requires considering the restrictions of the memory hierarchy, i.e. the size and capabilities of each component. Each of the various components can be viewed as part of a hierarchy of memories (m1, m2, ..., mn) in which each member mi is typically smaller and faster than the next highest member mi+1 of the hierarchy. To limit waiting by higher levels, a lower level will respond by filling a buffer and then signaling for activating the transfer. There are four major storage levels. * ''Internal'' – Processor registers a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Barrier

In computing, a memory barrier, also known as a membar, memory fence or fence instruction, is a type of barrier instruction that causes a central processing unit (CPU) or compiler to enforce an ordering constraint on memory operations issued before and after the barrier instruction. This typically means that operations issued prior to the barrier are guaranteed to be performed before operations issued after the barrier. Memory barriers are necessary because most modern CPUs employ performance optimizations that can result in out-of-order execution. This reordering of memory operations (loads and stores) normally goes unnoticed within a single thread of execution, but can cause unpredictable behavior in concurrent programs and device drivers unless carefully controlled. The exact nature of an ordering constraint is hardware dependent and defined by the architecture's memory ordering model. Some architectures provide multiple barriers for enforcing different ordering constrai ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Intrinsic Function

In computer software, in compiler theory, an intrinsic function (or built-in function) is a function (subroutine) available for use in a given programming language whose implementation is handled specially by the compiler. Typically, it may substitute a sequence of automatically generated instructions for the original function call, similar to an inline function. Unlike an inline function, the compiler has an intimate knowledge of an intrinsic function and can thus better integrate and optimize it for a given situation. Compilers that implement intrinsic functions generally enable them only when a program requests optimization, otherwise falling back to a default implementation provided by the language runtime system (environment). Intrinsic functions are often used to explicitly implement vectorization and parallelization in languages which do not address such constructs. Some application programming interfaces (API), for example, AltiVec and OpenMP, use intrinsic functio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Happened-before

In computer science, the happened-before relation (denoted: \to \;) is a relation between the result of two events, such that if one event should happen before another event, the result must reflect that, even if those events are in reality executed out of order (usually to optimize program flow). This involves ordering events based on the potential causal relationship of pairs of events in a concurrent system, especially asynchronous distributed systems. It was formulated by Leslie Lamport. The happened-before relation is formally defined as the least strict partial order on events such that: * If events a \; and b \; occur on the same process, a \to b\; if the occurrence of event a \; preceded the occurrence of event b \;. * If event a \; is the sending of a message and event b \; is the reception of the message sent in event a \;, a \to b\;. If two events happen in different isolated processes (that do not exchange messages directly or indirectly via third-party processes), then ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Native Command Queuing

In computing, Native Command Queuing (NCQ) is an extension of the Serial ATA protocol allowing hard disk drives to internally optimize the order in which received read and write commands are executed. This can reduce the amount of unnecessary drive head movement, resulting in increased performance (and slightly decreased wear of the drive) for workloads where multiple simultaneous read/write requests are outstanding, most often occurring in server-type applications. History Native Command Queuing was preceded by Parallel ATA's version of Tagged Command Queuing (TCQ). ATA's attempt at integrating TCQ was constrained by the requirement that ATA host bus adapters use ISA bus device protocols to interact with the operating system. The resulting high CPU overhead and negligible performance gain contributed to a lack of market acceptance for TCQ. NCQ differs from TCQ in that, with NCQ, each command is of equal importance, but NCQ's host bus adapter also programs its own first part ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compilers

In computing, a compiler is a computer program that translates computer code written in one programming language (the ''source'' language) into another language (the ''target'' language). The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language (e.g. assembly language, object code, or machine code) to create an executable program. Compilers: Principles, Techniques, and Tools by Alfred V. Aho, Ravi Sethi, Jeffrey D. Ullman - Second Edition, 2007 There are many different types of compilers which produce output in different useful forms. A ''cross-compiler'' produces code for a different CPU or operating system than the one on which the cross-compiler itself runs. A ''bootstrap compiler'' is often a temporary compiler, used for compiling a more permanent or better optimised compiler for a language. Related software include, a program that translates from a low-level language to a hi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |