Trace Cache

In computer architecture, a trace cache or execution trace cache is a specialized instruction cache that stores the dynamic stream of instructions known as a trace. It increases instruction fetch bandwidth and, in the case of the Intel Pentium 4, reduces power consumption by storing traces of instructions that have already been fetched and decoded. A trace processor is an architecture designed around the trace cache that processes instructions at trace-level granularity. The formal mathematical theory of traces is described by trace monoids.

Background

The earliest academic publication on the trace cache was "Trace Cache: a Low Latency Approach to High Bandwidth Instruction Fetching". This widely acknowledged paper was presented by Eric Rotenberg, Steve Bennett, and Jim Smith at the 1996 International Symposium on Microarchitecture (MICRO). An earlier publication is US patent 5381533, by Alex Peleg and Uri Weiser of Intel, "Dynamic flow instruction cache me ...
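As a rough illustration of storing and reusing already-decoded traces, here is a minimal software sketch. It assumes a trace is identified by its starting address together with the outcomes of the branches inside it; the class name `TraceCache`, its FIFO eviction, and the string form of the decoded instructions are illustrative assumptions, not a description of any real hardware design.

```python
class TraceCache:
    """Toy trace cache (hypothetical model, not a real hardware design)."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.lines = {}  # (start_pc, branch outcomes) -> decoded instructions

    def lookup(self, start_pc, outcomes):
        """Return the stored decoded trace, or None on a miss."""
        return self.lines.get((start_pc, tuple(outcomes)))

    def fill(self, start_pc, outcomes, decoded):
        """Store a trace after it has been fetched and decoded once."""
        key = (start_pc, tuple(outcomes))
        if key not in self.lines and len(self.lines) >= self.capacity:
            # Assumed policy: evict the oldest inserted trace (FIFO).
            self.lines.pop(next(iter(self.lines)))
        self.lines[key] = list(decoded)


tc = TraceCache(capacity=2)
miss = tc.lookup(0x400, [True, False])
tc.fill(0x400, [True, False], ["uop_a", "uop_b", "uop_c"])
hit = tc.lookup(0x400, [True, False])
```

On a hit, the whole decoded sequence comes back in one lookup, which is the sense in which a trace cache can raise fetch bandwidth and skip repeated decoding.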

Cache Associativity

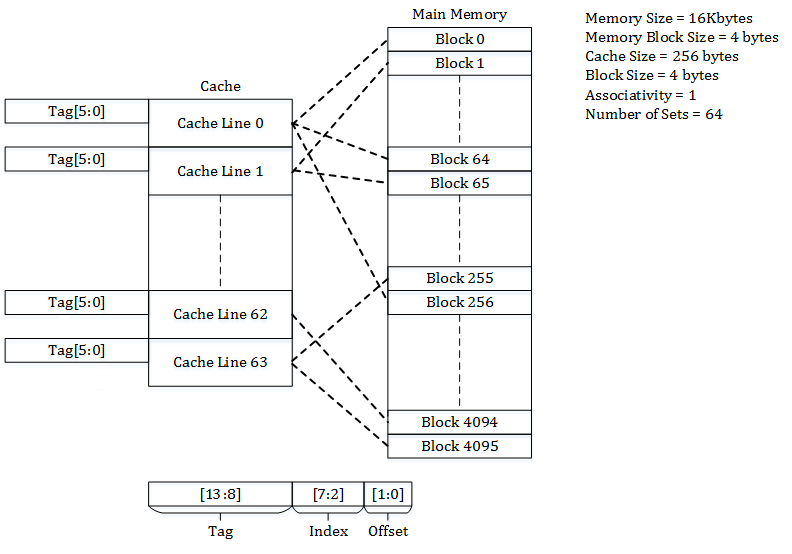

Cache placement policies determine where a particular memory block can be placed when it goes into a CPU cache. A block of memory cannot necessarily be placed at an arbitrary location in the cache; it may be restricted to a particular cache line or a set of cache lines by the cache's placement policy. There are three policies for placing a memory block in the cache: direct-mapped, fully associative, and set-associative. Originally this space of cache organizations was described using the term "congruence mapping".

Direct-mapped cache

In a direct-mapped cache structure, the cache is organized into multiple sets with a single cache line per set. Based on its address, a memory block can occupy only a single cache line. The cache can be framed as a column matrix. To place a block in the cache:

* The set is determined by the index bits derived from the address of the memory block.
* The memory block is placed in the set identif ...
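The index-bit selection described for a direct-mapped cache can be sketched as follows. The block size of 64 bytes, the 256 sets, and the names `split_address` and `DirectMappedCache` are assumptions chosen for illustration; both sizes are assumed to be powers of two.

```python
def split_address(addr, block_size=64, num_sets=256):
    """Split an address into (tag, index, offset); sizes must be powers of two."""
    offset_bits = block_size.bit_length() - 1
    index_bits = num_sets.bit_length() - 1
    offset = addr & (block_size - 1)
    index = (addr >> offset_bits) & (num_sets - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


class DirectMappedCache:
    """One cache line per set: a block can live in exactly one place."""

    def __init__(self, block_size=64, num_sets=256):
        self.block_size = block_size
        self.num_sets = num_sets
        self.tags = [None] * num_sets  # stored tag per set, None = empty

    def access(self, addr):
        """Return True on a hit; on a miss, replace the line in that set."""
        tag, index, _ = split_address(addr, self.block_size, self.num_sets)
        hit = self.tags[index] == tag
        self.tags[index] = tag
        return hit


parts = split_address(0x12345)   # (4, 141, 5)
cache = DirectMappedCache()
results = [cache.access(a) for a in (0x0, 0x0, 0x4000, 0x0)]
```

Addresses `0x0` and `0x4000` share index 0 but differ in tag, so they evict each other: the access sequence above yields miss, hit, miss, miss.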

Instruction Cycle

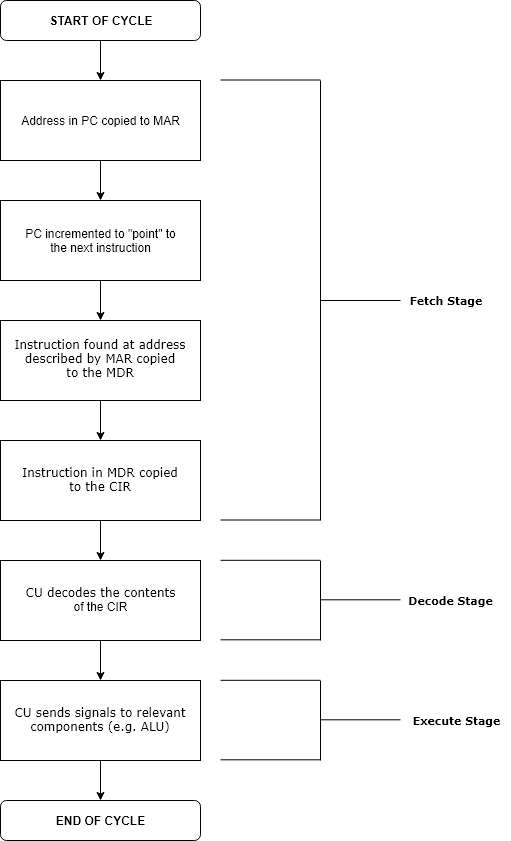

The instruction cycle (also known as the fetch–decode–execute cycle, or simply the fetch–execute cycle) is the cycle that the central processing unit (CPU) follows from boot-up until the computer has shut down in order to process instructions. It is composed of three main stages: the fetch stage, the decode stage, and the execute stage. In simpler CPUs, the instruction cycle is executed sequentially, each instruction being processed before the next one is started. In most modern CPUs, the instruction cycles are instead executed concurrently, and often in parallel, through an instruction pipeline: the next instruction starts being processed before the previous instruction has finished, which is possible because the cycle is broken up into separate steps.

Role of components

Program counter

The program counter (PC) is a register that holds the memory address of the next instruction to be executed. After each instruction copy to the memory address register (MAR), the PC can ...
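The sequential form of the cycle can be sketched with a toy interpreter. The three-instruction set (`LOAD`, `ADD`, `HALT`), the two registers, and the function name `run` are invented for illustration and do not correspond to any real instruction set.

```python
def run(program):
    """Execute a toy program one instruction at a time (no pipelining)."""
    pc = 0                      # program counter: index of next instruction
    regs = {"A": 0, "B": 0}
    while True:
        instr = program[pc]     # fetch the instruction the PC points at
        pc += 1                 # PC now points at the following instruction
        op, *args = instr       # decode into opcode and operands
        if op == "LOAD":        # execute: load an immediate into a register
            regs[args[0]] = args[1]
        elif op == "ADD":       # execute: add the second register to the first
            regs[args[0]] += regs[args[1]]
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode: {op}")


final = run([("LOAD", "A", 2), ("LOAD", "B", 3), ("ADD", "A", "B"), ("HALT",)])
```

Each loop iteration finishes one instruction before the next begins, which is the simple sequential case; a pipelined CPU would overlap these stages across instructions.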

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), which in modern CPUs accounts for by far the largest share of chip area. However, SRAM is not always used for all levels (of I- or D-cache), or even for any level; sometimes the later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB), which is part of the memory management unit (M ...

Branch Trace

Branch trace is a computer program debugging tool or analysis technique. It is an abbreviated instruction trace in which only the successful branch instructions are recorded. On IBM System/360 this was implemented as part of Program-Event Recording (PER) but was seldom used at the application programming level. Because it relied on the Program Event Recording hardware and carried significant overhead, the tool was removed from customer-available MVS systems. Branch tracing is also available on Pentium 4, Xeon and later Intel processors. There are dedicated processor commands to enable branch tracing and save executed branches into a special Intel Branch Trace Store (BTS) area of resident memory. The Branch Trace Store can also be configured as a circular buffer, so that the most recently executed branches are recorded. Branch tracing on Intel processors using the Branch Trace Store can slow application run time by 40x. For the Intel Core M and the 5th ...
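The circular-buffer behaviour, where only the most recent branches survive, can be modelled in software as below. This is a sketch of the idea only: the class name `BranchTraceBuffer` and the `(source, target)` record layout are assumptions and do not reflect the actual BTS record format.

```python
from collections import deque


class BranchTraceBuffer:
    """Fixed-size buffer that keeps only the last `capacity` taken branches."""

    def __init__(self, capacity):
        # A deque with maxlen drops the oldest entry once it is full.
        self.records = deque(maxlen=capacity)

    def record(self, source, target):
        """Log one taken branch as a (source address, target address) pair."""
        self.records.append((source, target))

    def snapshot(self):
        """Return the retained branches, oldest first."""
        return list(self.records)


bts = BranchTraceBuffer(capacity=3)
for i in range(5):
    bts.record(0x1000 + i, 0x2000 + i)
last = bts.snapshot()
```

After five recorded branches with a capacity of three, the snapshot holds only the third, fourth, and fifth entries.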

Sandy Bridge

Sandy Bridge is the codename for Intel's 32 nm microarchitecture used in the second generation of the Intel Core processors (Core i7, i5, i3). The Sandy Bridge microarchitecture is the successor to the Nehalem and Westmere microarchitectures. Intel demonstrated an A1 stepping Sandy Bridge processor in 2009 during Intel Developer Forum (IDF), and released the first products based on the architecture in January 2011 under the Core brand. Sandy Bridge is manufactured in the 32 nm process and has a soldered contact between the die and the IHS (integrated heat spreader), while Intel's subsequent generation, Ivy Bridge, uses a 22 nm die shrink and a TIM (thermal interface material) between the die and the IHS.

Technology

Intel demonstrated a S ...

Micro-operation

In computer central processing units, micro-operations (also known as micro-ops or μops, historically also as micro-actions) are detailed low-level instructions used in some designs to implement complex machine instructions (sometimes termed macro-instructions in this context). Usually, micro-operations perform basic operations on data stored in one or more registers, including transferring data between registers or between registers and external buses of the central processing unit (CPU), and performing arithmetic or logical operations on registers. In a typical fetch-decode-execute cycle, each step of a macro-instruction is decomposed during its execution so the CPU determines and steps through a series of micro-operations. The execution of micro-operations is performed under control of the CPU's control unit, which decides on their execution while performing various optimizations such as reordering, fusion and caching.

Optimizations

V ...
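To make the decomposition of a macro-instruction concrete, here is a toy sketch. The operand notation (`"[x]"` for a memory operand), the temporary names `tmp0` and `tmp1`, and the function `decompose` are hypothetical; real decoders are far more involved and differ between microarchitectures.

```python
def decompose(op, dst, src):
    """Split a two-operand toy macro-instruction into simple micro-ops.

    Operands written like "[x]" are memory locations; anything else is a
    register. Each micro-op touches memory or does arithmetic, never both.
    """
    def is_mem(operand):
        return operand.startswith("[")

    uops = []
    a, b = dst, src
    if is_mem(src):
        uops.append(("load", "tmp1", src))   # bring source into a temporary
        b = "tmp1"
    if is_mem(dst):
        uops.append(("load", "tmp0", dst))   # bring destination into a temporary
        a = "tmp0"
    uops.append((op, a, b))                  # register-only arithmetic: a = a op b
    if is_mem(dst):
        uops.append(("store", dst, a))       # write the result back to memory
    return uops


mem_form = decompose("add", "[x]", "r1")
reg_form = decompose("add", "r0", "r1")
```

The register-only form needs a single micro-op, while the memory-destination form expands into a load, the arithmetic operation, and a store.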

NetBurst

The NetBurst microarchitecture, called P68 inside Intel, was the successor to the P6 microarchitecture in the x86 family of central processing units (CPUs) made by Intel. The first CPU to use this architecture was the Willamette-core Pentium 4, released on November 20, 2000, which was also the first of the Pentium 4 CPUs; all subsequent Pentium 4 and Pentium D variants have also been based on NetBurst. In mid-2001, Intel released the Foster core, which was also based on NetBurst, thus switching the Xeon CPUs to the new architecture as well. Pentium 4-based Celeron CPUs also use the NetBurst architecture. NetBurst was replaced with the Core microarchitecture based on P6, released in July 2006.

Technology

The NetBurst microarchitecture includes features such as Hyper-threading, Hyper Pipelined Technology, Rapid Execution Engine, Execution Trace Cache, and a replay system, all of which were introduced for the first time in this particular microarchitecture, and some never appeared ...

Agner Fog

Agner Fog is a Danish evolutionary anthropologist and computer scientist. He is currently an associate professor of computer science at the Technical University of Denmark (DTU), and has been at DTU since 1995. He is best known for coining the term "Regality Theory" and for writing extensive optimization manuals for machines running the x86 architecture.

Social sciences

Agner Fog is the main investigator of Regality Theory, the proposition that the environment a group is in selects for certain psychological traits. As a result, a harsher environment selects for more regal (warlike) social structures while a safer environment selects for more kungic (peaceful) ones.

Programming and mathematics

Optimization

Agner Fog is known as a "CPU analyst" to tech websites covering x86 CPUs. He maintains a five-volume manual for optimizing code for x86 CPUs, with details on the instruction timing and other features of individual microarchitectures. He also maintains a Vector Cl ...

X86 Instruction Listings

The x86 instruction set refers to the set of instructions that x86-compatible microprocessors support. The instructions are usually part of an executable program, often stored as a computer file and executed on the processor. The x86 instruction set has been extended several times, introducing wider registers and datatypes as well as new functionality.

x86 integer instructions

Below is the full 8086/8088 instruction set of Intel (81 instructions total). These instructions are also available in 32-bit mode, in which they operate on 32-bit registers (eax, ebx, etc.) and values instead of their 16-bit (ax, bx, etc.) counterparts. The updated instruction set is grouped according to architecture (i186, i286, i386, i486, i586/i686) and is referred to as (32-bit) x86 and (64-bit) x86-64 (also known as AMD64).

Original 8086/8088 instructions

This is the original instruction set. In the 'Notes' column, *r* means *register*, *m* means *memory address* and *imm* means ...
