
Semantic Triple

A semantic triple, or RDF triple or simply triple, is the atomic data entity in the Resource Description Framework (RDF) data model. As its name indicates, a triple is a set of three entities that codifies a statement about semantic data in the form of subject–predicate–object expressions (e.g., "Bob is 35", or "Bob knows John"). Subject, predicate and object: This format enables knowledge to be represented in a machine-readable way. In particular, every part of an RDF triple is individually addressable via unique URIs; for example, the statement "Bob knows John" might be represented in RDF as: http://example.name#BobSmith12 http://xmlns.com/foaf/0.1/knows http://example.name#JohnDoe34. Given this precise representation, semantic data can be unambiguously queried and reasoned about. The components of a triple, such as the statement "The sky has the color blue", consist of a subject ("the sky"), a predicate ("has the color"), and an object ("blue"). This is similar to ...
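
As a rough illustration of the subject–predicate–object form, the sketch below stores triples as plain Python tuples and queries them by pattern. The `Triple` type, the `match` helper, and the use of the FOAF `age` property for "Bob is 35" are assumptions made for this example, not part of any RDF library.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One statement: subject, predicate, object."""
    subject: str
    predicate: str
    obj: str


FOAF_KNOWS = "http://xmlns.com/foaf/0.1/knows"
FOAF_AGE = "http://xmlns.com/foaf/0.1/age"  # assumed property for "Bob is 35"
BOB = "http://example.name#BobSmith12"
JOHN = "http://example.name#JohnDoe34"

triples = {
    Triple(BOB, FOAF_KNOWS, JOHN),  # "Bob knows John"
    Triple(BOB, FOAF_AGE, "35"),    # "Bob is 35"; literal kept as a plain string here
}


def match(store, subject=None, predicate=None, obj=None):
    """Return the triples that fit the pattern, sorted; None acts as a wildcard."""
    return sorted(
        t for t in store
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    )


# Who does Bob know?
known_by_bob = [t.obj for t in match(triples, subject=BOB, predicate=FOAF_KNOWS)]
```

Because each position holds a full URI, the query for "whom does Bob know" cannot be confused with a different person named Bob or a different sense of "knows".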

Resource Description Framework

The Resource Description Framework (RDF) is a World Wide Web Consortium (W3C) standard originally designed as a data model for metadata. It has come to be used as a general method for description and exchange of graph data. RDF provides a variety of syntax notations and data serialization formats with Turtle (Terse RDF Triple Language) currently being the most widely used notation. RDF is a directed graph composed of triple statements. An RDF graph statement is represented by: 1) a node for the subject, 2) an arc that goes from a subject to an object for the predicate, and 3) a node for the object. Each of the three parts of the statement can be identified by a URI. An object can also be a literal value. This simple, flexible data model has a lot of expressive power to represent complex situations, relationships, and other things of interest, while also being appropriately abstract. RDF was adopted as a W3C recommendation in 1999. The RDF 1.0 specification was published in 2004, th ...
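
The node-and-arc description above can be sketched in a few lines of Python. This is a minimal illustration only: the example URIs, the `is_literal` flag, and the helper names `build_graph` and `to_ntriples` are assumptions for this sketch. The serializer emits simple N-Triples-style lines (a line-based syntax that is also valid Turtle) and handles only basic string escaping.

```python
from collections import defaultdict

# Each statement: (subject, predicate, object, object_is_literal).
statements = [
    ("http://example.name#BobSmith12", "http://xmlns.com/foaf/0.1/knows",
     "http://example.name#JohnDoe34", False),
    ("http://example.name#BobSmith12", "http://xmlns.com/foaf/0.1/age",
     "35", True),
]


def build_graph(stmts):
    """Return (nodes, arcs): subjects and objects become nodes,
    each predicate becomes a labeled arc from subject to object."""
    nodes = set()
    arcs = defaultdict(list)  # subject -> [(predicate, object), ...]
    for subject, predicate, obj, _is_literal in stmts:
        nodes.update((subject, obj))
        arcs[subject].append((predicate, obj))
    return nodes, dict(arcs)


def to_ntriples(stmts):
    """Serialize statements as N-Triples-style lines (simplified escaping)."""
    lines = []
    for subject, predicate, obj, is_literal in stmts:
        if is_literal:
            escaped = obj.replace("\\", "\\\\").replace('"', '\\"')
            obj_term = f'"{escaped}"'
        else:
            obj_term = f"<{obj}>"
        lines.append(f"<{subject}> <{predicate}> {obj_term} .")
    return "\n".join(lines)


nodes, arcs = build_graph(statements)
document = to_ntriples(statements)
```

Here the literal `"35"` becomes a node just like a URI does, but it can only ever appear at the object end of an arc.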

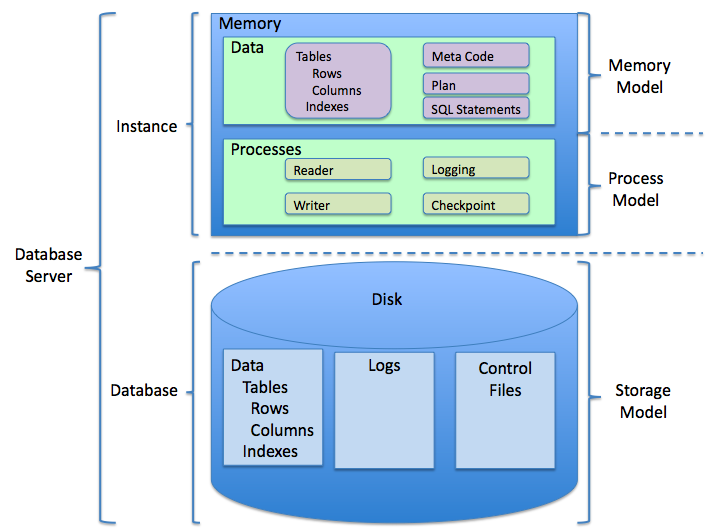

Relational Database

A relational database is a (most commonly digital) database based on the relational model of data, as proposed by E. F. Codd in 1970. A system used to maintain relational databases is a relational database management system (RDBMS). Many relational database systems are equipped with the option of using SQL (Structured Query Language) for querying and maintaining the database. History: The term "relational database" was first defined by E. F. Codd at IBM in 1970. Codd introduced the term in his research paper "A Relational Model of Data for Large Shared Data Banks". In this paper and later papers, he defined what he meant by "relational". One well-known definition of what constitutes a relational database system is composed of Codd's 12 rules. However, no commercial implementations of the relational model conform to all of Codd's rules, so the term has gradually come to describe a broader class of database systems, which at a minimum:
1. Present the data to the user as re ...

World Wide Web Consortium

The World Wide Web Consortium (W3C) is the main international standards organization for the World Wide Web. Founded in 1994 and led by Tim Berners-Lee, the consortium is made up of member organizations that maintain full-time staff working together in the development of standards for the World Wide Web. W3C had 459 members. W3C also engages in education and outreach, develops software and serves as an open forum for discussion about the Web. History: The World Wide Web Consortium (W3C) was founded in 1994 by Tim Berners-Lee after he left the European Organization for Nuclear Research (CERN) in October 1994. It was founded at the Massachusetts Institute of Technology (MIT) Laboratory for Computer Science with support from the European Commission and the Defense Advanced Research Projects Agency, which had pioneered the ARPANET, one of the predecessors to the Internet. It was located in Technology Square until 2004, when it moved, with the MIT Computer Science and Artif ...

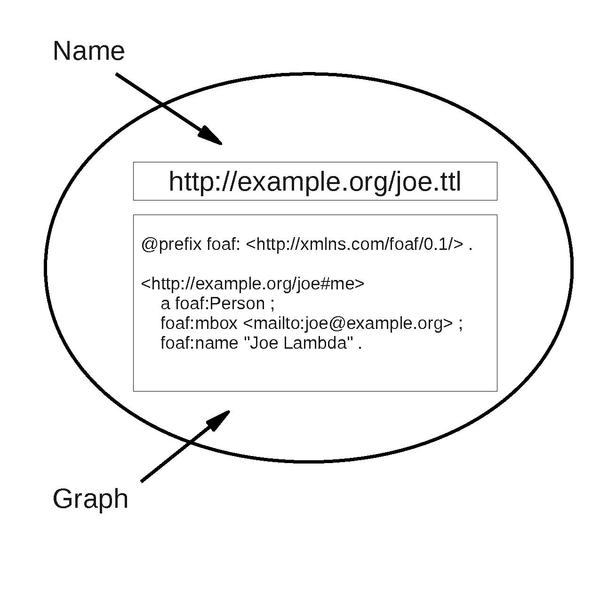

Named Graph

Named graphs are a key concept of Semantic Web architecture in which a set of Resource Description Framework statements (a graph) is identified using a URI, allowing descriptions to be made of that set of statements, such as context, provenance information or other such metadata. Named graphs are a simple extension of the RDF data model through which graphs can be created, but the model lacks an effective means of distinguishing between them once published on the Web at large. Named graphs and HTTP: One conceptualization of the Web is as a graph of document nodes identified with URIs and connected by hyperlink arcs which are expressed within the HTML documents. By doing an HTTP GET on a URI (usually via a Web browser), a somehow-related document may be retrieved. This "follow your nose" approach also applies to RDF documents on the Web in the form of Linked Data, where typically an RDF syntax is used to express data as a series of st ...
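
One way to picture a named graph is as a set of triples filed under a URI, so that the URI itself can appear as the subject of further statements. The sketch below is a toy in-memory model under that assumption; the `Dataset` class, the graph names under `http://example.org/graphs/`, and the choice of the Dublin Core `creator` property for provenance are all hypothetical choices for illustration.

```python
from collections import defaultdict

FOAF_KNOWS = "http://xmlns.com/foaf/0.1/knows"
DC_CREATOR = "http://purl.org/dc/terms/creator"

# Hypothetical graph names, chosen only for this example.
GRAPH_BOB = "http://example.org/graphs/bob"
GRAPH_META = "http://example.org/graphs/meta"

BOB = "http://example.name#BobSmith12"
JOHN = "http://example.name#JohnDoe34"


class Dataset:
    """Toy store: each graph URI maps to its own set of triples."""

    def __init__(self):
        self.graphs = defaultdict(set)

    def add(self, graph_uri, subject, predicate, obj):
        self.graphs[graph_uri].add((subject, predicate, obj))

    def graphs_containing(self, subject, predicate, obj):
        """Names of all graphs that assert the given triple, sorted."""
        return sorted(
            name for name, triples in self.graphs.items()
            if (subject, predicate, obj) in triples
        )


dataset = Dataset()
# A statement inside the named graph.
dataset.add(GRAPH_BOB, BOB, FOAF_KNOWS, JOHN)
# A statement about the named graph itself (provenance), kept in a second graph.
dataset.add(GRAPH_META, GRAPH_BOB, DC_CREATOR, BOB)

sources = dataset.graphs_containing(BOB, FOAF_KNOWS, JOHN)
```

The second `add` call is the point of the exercise: because the graph has a name, "who asserted this set of statements" can be expressed as ordinary triples.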

Commonsense Reasoning

In artificial intelligence (AI), commonsense reasoning is a human-like ability to make presumptions about the type and essence of ordinary situations humans encounter every day. These assumptions include judgments about the nature of physical objects, taxonomic properties, and people's intentions. A device that exhibits commonsense reasoning might be capable of drawing conclusions that are similar to humans' folk psychology (humans' innate ability to reason about people's behavior and intentions) and naive physics (humans' natural understanding of the physical world). Definitions and characterizations: Some definitions and characterizations of common sense from different authors include:
* "Commonsense knowledge includes the basic facts about events (including actions) and their effects, facts about knowledge and how it is obtained, facts about beliefs and desires. It also includes the basic facts about material objects and their properties."
* "Commonsense knowledge differs from ...

What-if Analysis

Sensitivity analysis is the study of how the uncertainty in the output of a mathematical model or system (numerical or otherwise) can be divided and allocated to different sources of uncertainty in its inputs. A related practice is uncertainty analysis, which has a greater focus on uncertainty quantification and propagation of uncertainty; ideally, uncertainty and sensitivity analysis should be run in tandem. The process of recalculating outcomes under alternative assumptions to determine the impact of a variable under sensitivity analysis can be useful for a range of purposes, including:
* Testing the robustness of the results of a model or system in the presence of uncertainty.
* Increased understanding of the relationships between input and output variables in a system or model.
* Uncertainty reduction, through the identification of model inputs that cause significant uncertainty in the output and should therefore be the focus of attention in order to increase robustness (perhap ...
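
To make "recalculating outcomes under alternative assumptions" concrete, here is a minimal one-at-a-time sketch: each input is nudged by a fixed relative amount while the others stay at their baseline, and the change in output is recorded. The profit model, its input names, and the 10% step are assumptions invented for this example; one-at-a-time perturbation is only one simple technique and does not by itself apportion output uncertainty among inputs.

```python
def model(inputs):
    """Hypothetical model: profit = price * units - fixed_cost."""
    return inputs["price"] * inputs["units"] - inputs["fixed_cost"]


def one_at_a_time(model_fn, baseline, relative_change=0.10):
    """Perturb each input in turn and report the change in the output."""
    base_output = model_fn(baseline)
    effects = {}
    for name, value in baseline.items():
        perturbed = dict(baseline)  # copy so other inputs stay at baseline
        perturbed[name] = value * (1 + relative_change)
        effects[name] = model_fn(perturbed) - base_output
    return base_output, effects


baseline = {"price": 10.0, "units": 100.0, "fixed_cost": 200.0}
base_output, effects = one_at_a_time(model, baseline)

# Inputs ranked by the size of their effect on the output.
ranking = sorted(effects, key=lambda name: abs(effects[name]), reverse=True)
```

In this toy model a 10% change in `fixed_cost` moves the output far less than the same relative change in `price` or `units`, which is the kind of observation that directs attention to the inputs that matter most.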

Predicate (mathematical Logic)

In logic, a predicate is a symbol that represents a property or a relation. For instance, in the first-order formula P(a), the symbol P is a predicate that applies to the individual constant a. Similarly, in the formula R(a,b), the symbol R is a predicate that applies to the individual constants a and b. In the semantics of logic, predicates are interpreted as relations. For instance, in a standard semantics for first-order logic, the formula R(a,b) would be true on an interpretation if the entities denoted by a and b stand in the relation denoted by R. Since predicates are non-logical symbols, they can denote different relations depending on the interpretation given to them. While first-order logic only includes predicates that apply to individual constants, other logics may allow predicates that apply to other predicates. Predicates in different systems: A predicate is a statement or mathematical assertion that contains variables, sometimes referred to as predicate variabl ...
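
The idea that R(a,b) is true on an interpretation when the entities denoted by a and b stand in the relation denoted by R can be sketched with sets of tuples. The dictionary layout, the entity names, and the `holds` helper below are assumptions for this illustration only.

```python
def holds(predicate, constants, interpretation):
    """Evaluate an atomic formula such as R(a, b) under an interpretation.

    The interpretation maps each constant to an entity and each predicate
    symbol to a relation, represented as a set of entity tuples.
    """
    entities = tuple(interpretation["constants"][c] for c in constants)
    return entities in interpretation["predicates"][predicate]


# First interpretation: R denotes "is a parent of", P denotes "is a parent".
parent_reading = {
    "constants": {"a": "Alice", "b": "Bob"},
    "predicates": {
        "P": {("Alice",)},
        "R": {("Alice", "Bob")},
    },
}

# Second interpretation: same symbols, but R now denotes "is a child of".
child_reading = {
    "constants": {"a": "Alice", "b": "Bob"},
    "predicates": {
        "P": {("Alice",)},
        "R": {("Bob", "Alice")},
    },
}

r_ab_as_parent = holds("R", ("a", "b"), parent_reading)
r_ab_as_child = holds("R", ("a", "b"), child_reading)
```

The same formula R(a,b) comes out true under the first reading and false under the second, which is what it means for a predicate to be a non-logical symbol.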

Database Scalability

Database scalability is the ability of a database to handle changing demands by adding/removing resources. Databases use a host of techniques to cope. History: The initial history of database scalability was to provide service on ever smaller computers. The first database management systems such as IMS ran on mainframe computers. The second generation, including Ingres, Informix, Sybase, RDB and Oracle emerged on minicomputers. The third generation, including dBase and Oracle (again), ran on personal computers. During the same period, attention turned to handling more data and more demanding workloads. One key software innovation in the late 1980s was to reduce update locking granularity from tables and disk blocks to individual rows. This eliminated a critical scalability bottleneck, as coarser locks could delay access to rows even though they were not directly involved in a transaction. Earlier systems were completely insensitive to increasing resources. Once software limitatio ...

Knowledge Base

A knowledge base (KB) is a technology used to store complex structured and unstructured information used by a computer system. The initial use of the term was in connection with expert systems, which were the first knowledge-based systems. Original usage of the term: The original use of the term knowledge base was to describe one of the two sub-systems of an expert system. A knowledge-based system consists of a knowledge-base representing facts about the world and ways of reasoning about those facts to deduce new facts or highlight inconsistencies. Properties: The term "knowledge-base" was coined to distinguish this form of knowledge store from the more common and widely used term "database". During the 1970s, virtually all large management information systems stored their data in some type of hierarchical or relational database. At this point in the history of information technology, the distinction between a database and a knowledge-base was clear and unambiguou ...
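
The split between stored facts and a way of reasoning over them can be illustrated with a very small forward-chaining loop. This is a sketch under simplifying assumptions: facts are plain strings, rules are (premises, conclusion) pairs, and a contradiction is flagged whenever both a fact and its `"not "`-prefixed form are known. None of these conventions comes from a particular expert system.

```python
def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be deduced."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


def inconsistencies(known):
    """Facts that are asserted together with their negation."""
    return sorted(f for f in known if "not " + f in known)


facts = {"tweety is a bird", "tweety is a penguin"}
rules = [
    (("tweety is a bird",), "tweety can fly"),
    (("tweety is a penguin",), "not tweety can fly"),
]

known = forward_chain(facts, rules)
conflicts = inconsistencies(known)
```

Running the loop deduces two new facts from the two stored ones, and the conflict check then highlights that they contradict each other, mirroring the "deduce new facts or highlight inconsistencies" role described above.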

Software Engineering

Software engineering is a systematic engineering approach to software development. A software engineer is a person who applies the principles of software engineering to design, develop, maintain, test, and evaluate computer software. The term "programmer" is sometimes used as a synonym, but may also lack connotations of engineering education or skills. Engineering techniques are used to inform the software development process, which involves the definition, implementation, assessment, measurement, management, change, and improvement of the software life cycle process itself. It heavily uses software configuration management, which is about systematically controlling changes to the configuration, and maintaining the integrity and traceability of the configuration and code throughout the system life cycle. Modern processes use software versioning. History: Beginning in the 1960s, software engineering was seen as its own type of engineering. Additionally, the development of so ...

Protégé (software)

Protégé is a free, open source ontology editor and a knowledge management system. The Protégé meta-tool was first built by Mark Musen in 1987 and has since been developed by a team at Stanford University. The software is the most popular and widely used ontology editor in the world. Overview: Protégé provides a graphical user interface to define ontologies. It also includes deductive classifiers to validate that models are consistent and to infer new information based on the analysis of an ontology. Like Eclipse, Protégé is a framework for which various other projects suggest plugins. This application is written in Java and makes heavy use of Swing to create the user interface. According to their website, there are over 300,000 registered users. A 2009 book calls it "the leading ontological engineering tool". Protégé is developed at Stanford University and is made available under the BSD 2-clause license. Earlier versions of the tool were developed in collaboration with th ...