|

Scatter Gather (vector Addressing)

Gather/scatter is a type of memory addressing that at once collects (gathers) from, or stores (scatters) data to, multiple, arbitrary indices. Examples of its use include sparse linear algebra operations, sorting algorithms, fast Fourier transforms, and some computational graph theory problems. It is the vector equivalent of register indirect addressing, with gather involving indexed reads, and scatter, indexed writes. Vector processors (and some SIMD units in CPUs) have hardware support for gather and scatter operations. Definitions Gather A sparsely populated vector y holding N non-empty elements can be represented by two densely populated vectors of length N; x containing the non-empty elements of y, and idx giving the index in y where x's element is located. The gather of y into x, denoted x \leftarrow y, _x, assigns x(i)=y(idx(i)) with idx having already been calculated. Assuming no pointer aliasing between x[], y[],idx[], a C (programming language), C implementation is ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

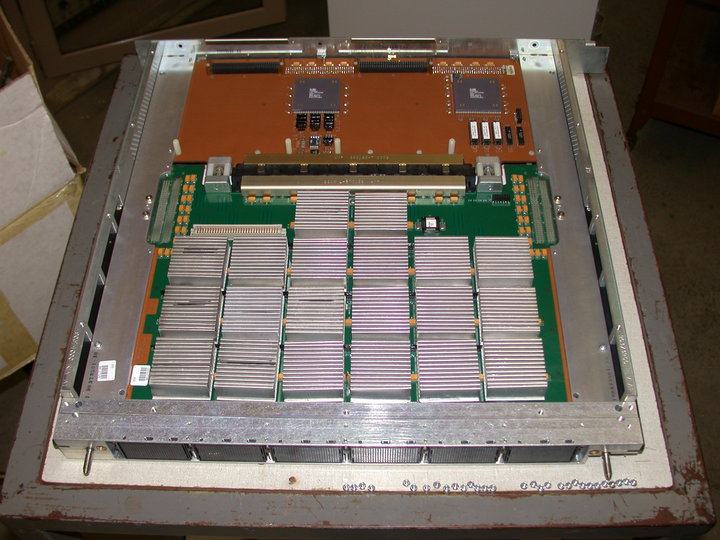

Vector Processing

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SWAR Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to a decline in vector super ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AVX-512

AVX-512 are 512-bit extensions to the 256-bit Advanced Vector Extensions SIMD instructions for x86 instruction set architecture (ISA) proposed by Intel in July 2013, and implemented in Intel's Xeon Phi x200 (Knights Landing) and Skylake-X CPUs; this includes the Core-X series (excluding the Core i5-7640X and Core i7-7740X), as well as the new Xeon Scalable Processor Family and Xeon D-2100 Embedded Series. AVX-512 consists of multiple extensions that may be implemented independently. This policy is a departure from the historical requirement of implementing the entire instruction block. Only the core extension AVX-512F (AVX-512 Foundation) is required by all AVX-512 implementations. Besides widening most 256-bit instructions, the extensions introduce various new operations, such as new data conversions, scatter operations, and permutations. The number of AVX registers is increased from 16 to 32, and eight new "mask registers" are added, which allow for variable selection and blend ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Access Pattern

In computing, a memory access pattern or IO access pattern is the pattern with which a system or program reads and writes memory on secondary storage. These patterns differ in the level of locality of reference and drastically affect cache performance, and also have implications for the approach to parallelism and distribution of workload in shared memory systems. Further, cache coherency issues can affect multiprocessor performance, which means that certain memory access patterns place a ceiling on parallelism (which manycore approaches seek to break). Computer memory is usually described as "random access", but traversals by software will still exhibit patterns that can be exploited for efficiency. Various tools exist to help system designers and programmers understand, analyse and improve the memory access pattern, including VTune and Vectorization Advisor, including tools to address GPU memory access patterns Memory access patterns also have implications for security, which ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compute Kernel

In computing, a compute kernel is a routine compiled for high throughput accelerators (such as graphics processing units (GPUs), digital signal processors (DSPs) or field-programmable gate arrays (FPGAs)), separate from but used by a main program (typically running on a central processing unit). They are sometimes called compute shaders, sharing execution units with vertex shaders and pixel shaders on GPUs, but are not limited to execution on one class of device, or graphics APIs. Description Compute kernels roughly correspond to inner loops when implementing algorithms in traditional languages (except there is no implied sequential operation), or to code passed to internal iterators. They may be specified by a separate programming language such as " OpenCL C" (managed by the OpenCL API), as "compute shaders" written in a shading language (managed by a graphics API such as OpenGL), or embedded directly in application code written in a high level language, as in the cas ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Vectorization (other)

Vectorization may refer to: Computing * Array programming, a style of computer programming where operations are applied to whole arrays instead of individual elements * Automatic vectorization, a compiler optimization that transforms loops to vector operations * Image tracing, the creation of vector from raster graphics * Word embedding, mapping words to vectors, in natural language processing Other uses * Vectorization (mathematics) In mathematics, especially in linear algebra and matrix theory, the vectorization of a matrix is a linear transformation which converts the matrix into a column vector. Specifically, the vectorization of a matrix ''A'', denoted vec(''A''), is t ..., a linear transformation which converts a matrix into a column vector * Drug vectorization, to (intra)cellular targeting See also * Vector (other) * Vector graphics (other) {{disambiguation ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SIMD

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data level parallelism, but not concurrency: there are simultaneous (parallel) computations, but each unit performs the exact same instruction at any given moment (just with different data). SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia use. SIMD has three different subcategories in Flynn's 1972 Taxonomy, one of which is SIMT. SIMT should not be confused with software ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prefetch

Prefetching in computer science is a technique for speeding up fetch operations by beginning a fetch operation whose result is expected to be needed soon. Usually this is before it is ''known'' to be needed, so there is a risk of wasting time by prefetching data that will not be used. The technique can be applied in several circumstances: * Cache prefetching, a speedup technique used by computer processors where instructions or data are fetched before they are needed * Prefetch input queue (PIQ), in computer architecture, pre-loading machine code from memory * Link prefetching, a web mechanism for prefetching links * Prefetcher technology in modern releases of Microsoft Windows * Cache control instruction#Prefetch, prefetch instructions, for example provided by ** PREFETCH, an X86 instruction listings, X86 instruction in computing * Prefetch buffer, a feature of DDR SDRAM memory * Paging#Anticipatory paging, Swap prefetch, in computer operating systems, anticipatory paging See al ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

InfiniBand

InfiniBand (IB) is a computer networking communications standard used in high-performance computing that features very high throughput and very low latency. It is used for data interconnect both among and within computers. InfiniBand is also used as either a direct or switched interconnect between servers and storage systems, as well as an interconnect between storage systems. It is designed to be scalable and uses a switched fabric network topology. By 2014, it was the most commonly used interconnect in the TOP500 list of supercomputers, until about 2016. Mellanox (acquired by Nvidia) manufactures InfiniBand host bus adapters and network switches, which are used by large computer system and database vendors in their product lines. As a computer cluster interconnect, IB competes with Ethernet, Fibre Channel, and Intel Omni-Path. The technology is promoted by the InfiniBand Trade Association. History InfiniBand originated in 1999 from the merger of two competing designs: ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scalable Vector Extension

AArch64 or ARM64 is the 64-bit extension of the ARM architecture family. It was first introduced with the Armv8-A architecture. Arm releases a new extension every year. ARMv8.x and ARMv9.x extensions and features Announced in October 2011, ARMv8-A represents a fundamental change to the ARM architecture. It adds an optional 64-bit architecture, named "AArch64", and the associated new "A64" instruction set. AArch64 provides user-space compatibility with the existing 32-bit architecture ("AArch32" / ARMv7-A), and instruction set ("A32"). The 16-32bit Thumb instruction set is referred to as "T32" and has no 64-bit counterpart. ARMv8-A allows 32-bit applications to be executed in a 64-bit OS, and a 32-bit OS to be under the control of a 64-bit hypervisor. ARM announced their Cortex-A53 and Cortex-A57 cores on 30 October 2012. Apple was the first to release an ARMv8-A compatible core (Cyclone) in a consumer product (iPhone 5S). AppliedMicro, using an FPGA, was the first to demo ARMv8 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

ARM Architecture

ARM (stylised in lowercase as arm, formerly an acronym for Advanced RISC Machines and originally Acorn RISC Machine) is a family of reduced instruction set computer (RISC) instruction set architectures for computer processors, configured for various environments. Arm Ltd. develops the architectures and licenses them to other companies, who design their own products that implement one or more of those architectures, including system on a chip (SoC) and system on module (SOM) designs, that incorporate different components such as memory, interfaces, and radios. It also designs cores that implement these instruction set architectures and licenses these designs to many companies that incorporate those core designs into their own products. There have been several generations of the ARM design. The original ARM1 used a 32-bit internal structure but had a 26-bit address space that limited it to 64 MB of main memory. This limitation was removed in the ARMv3 series, which h ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AVX2

Advanced Vector Extensions (AVX) are extensions to the x86 instruction set architecture for microprocessors from Intel and Advanced Micro Devices (AMD). They were proposed by Intel in March 2008 and first supported by Intel with the Sandy Bridge processor shipping in Q1 2011 and later by AMD with the Bulldozer processor shipping in Q3 2011. AVX provides new features, new instructions and a new coding scheme. AVX2 (also known as Haswell New Instructions) expands most integer commands to 256 bits and introduces new instructions. They were first supported by Intel with the Haswell processor, which shipped in 2013. AVX-512 expands AVX to 512-bit support using a new EVEX prefix encoding proposed by Intel in July 2013 and first supported by Intel with the Knights Landing co-processor, which shipped in 2016. In conventional processors, AVX-512 was introduced with Skylake server and HEDT processors in 2017. Advanced Vector Extensions AVX uses sixteen YMM registers to perform a s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |