
Memory Timings

Memory timings or RAM timings describe the timing information of a memory module. Due to the inherent qualities of VLSI and microelectronics, memory chips require time to fully execute commands. Executing commands too quickly results in data corruption and system instability. With appropriate time between commands, memory modules and chips are given the opportunity to fully switch transistors, charge capacitors, and correctly signal information back to the memory controller. Because system performance depends on how fast memory can be used, this timing directly affects the performance of the system. The timing of modern synchronous dynamic random-access memory (SDRAM) is commonly indicated using four parameters, CL, tRCD, tRP, and tRAS, in units of clock cycles; they are commonly written as four numbers separated by hyphens, e.g. 7-8-8-24. The fourth (tRAS) is often omitted, or a fifth, the command rate, is sometimes added (normally 2T or 1T, also written 2N, 1N). T ...
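As a minimal sketch of the notation described above, the following Python snippet parses a hyphen-separated timing string into its CL, tRCD, tRP, and optional tRAS fields and converts a cycle count into nanoseconds. The names `Timings`, `parse_timings`, and `cycles_to_ns` are hypothetical, and the 800 MHz memory clock used in the usage note is an assumed value for illustration, not a figure from the text.

```python
from typing import NamedTuple, Optional


class Timings(NamedTuple):
    """Primary SDRAM timings, each expressed in clock cycles."""
    cl: int
    trcd: int
    trp: int
    tras: Optional[int]  # often omitted in the written form


def parse_timings(text: str) -> Timings:
    """Parse a string such as '7-8-8-24' (or '7-8-8' with tRAS omitted)."""
    parts = [int(p) for p in text.strip().split("-")]
    if len(parts) not in (3, 4):
        raise ValueError("expected three or four hyphen-separated numbers")
    tras = parts[3] if len(parts) == 4 else None
    return Timings(parts[0], parts[1], parts[2], tras)


def cycles_to_ns(cycles: int, clock_mhz: float) -> float:
    """Convert a cycle count to nanoseconds for a given memory clock (MHz)."""
    return cycles * 1000.0 / clock_mhz
```

For example, `parse_timings("7-8-8-24")` yields CL = 7, and under the assumed 800 MHz clock `cycles_to_ns(7, 800.0)` gives 8.75 ns. This illustrates why a timing in cycles only translates into an absolute delay once the clock frequency is known.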

VLSI

Very large-scale integration (VLSI) is the process of creating an integrated circuit (IC) by combining millions or billions of MOS transistors onto a single chip. VLSI began in the 1970s when metal-oxide-semiconductor (MOS) integrated circuit chips were developed and then widely adopted, enabling complex semiconductor and telecommunication technologies. The microprocessor and memory chips are VLSI devices. Before the introduction of VLSI technology, most ICs had a limited set of functions they could perform. An electronic circuit might consist of a CPU, ROM, RAM and other glue logic. VLSI enables IC designers to add all of these into one chip.

History (background): The history of the transistor dates to the 1920s when several inventors attempted devices that were intended to control current in solid-state diodes and convert them into triodes. Success came after World War II, when the use of silicon and germanium crystals as radar detectors led to improvements in fabricat ...

DDR SDRAM

Double Data Rate Synchronous Dynamic Random-Access Memory (DDR SDRAM) is a double data rate (DDR) synchronous dynamic random-access memory (SDRAM) class of memory integrated circuits used in computers. DDR SDRAM, also retroactively called DDR1 SDRAM, has been superseded by DDR2 SDRAM, DDR3 SDRAM, DDR4 SDRAM and DDR5 SDRAM. None of its successors are forward or backward compatible with DDR1 SDRAM, meaning DDR2, DDR3, DDR4 and DDR5 memory modules will not work in DDR1-equipped motherboards, and vice versa. Compared to single data rate (SDR) SDRAM, the DDR SDRAM interface makes higher transfer rates possible through stricter control of the timing of the electrical data and clock signals. Implementations often have to use schemes such as phase-locked loops and self-calibration to reach the required timing accuracy. The interface uses double pumping (transferring data on both the rising and falling edges of the clock signal) to double data bus bandwidth without a corresponding in ...
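The effect of double pumping can be sketched with a small calculation. The Python functions below are hypothetical helpers; the 64-bit bus width and the 200 MHz clock in the usage note are assumptions chosen for illustration and are not stated in the text.

```python
def transfers_per_second(clock_hz: int, double_pumped: bool = True) -> int:
    """Transfers per second: two per clock cycle when data moves on both edges."""
    return clock_hz * (2 if double_pumped else 1)


def peak_bandwidth_bytes(clock_hz: int, bus_width_bits: int = 64,
                         double_pumped: bool = True) -> int:
    """Theoretical peak bytes per second for the given clock and bus width.

    bus_width_bits defaults to 64 as an assumed module data width.
    """
    return transfers_per_second(clock_hz, double_pumped) * bus_width_bits // 8
```

With an assumed 200 MHz clock, `peak_bandwidth_bytes(200_000_000)` is 3,200,000,000 bytes per second, twice the 1,600,000,000 obtained with `double_pumped=False` at the same clock frequency.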

Overshoot (signal)

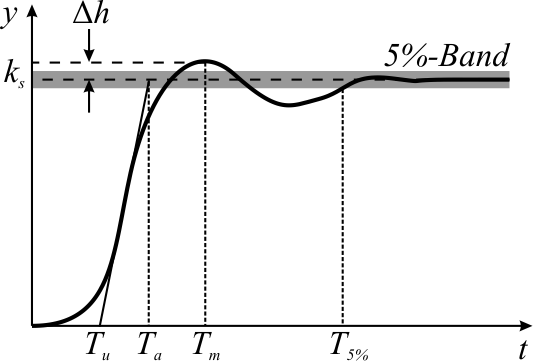

In signal processing, control theory, electronics, and mathematics, overshoot is the occurrence of a signal or function exceeding its target. Undershoot is the same phenomenon in the opposite direction. It arises especially in the step response of bandlimited systems such as low-pass filters. It is often followed by ringing, and at times conflated with the latter.

Definition: Maximum overshoot is defined in Katsuhiko Ogata's Discrete-time control systems as "the maximum peak value of the response curve measured from the desired response of the system."

Control theory: In control theory, overshoot refers to an output exceeding its final, steady-state value. For a step input, the percentage overshoot (PO) is the maximum value minus the step value, divided by the step value. In the case of the unit step, the overshoot is just the maximum value of the step response minus one. Also see the definition of overshoot in an electronics context. For second-order sys ...
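The percentage overshoot definition given above can be written as a short calculation. This is a minimal sketch that assumes the step response is available as a list of samples and that the step value is nonzero; the function name `percentage_overshoot` is hypothetical.

```python
from typing import Sequence


def percentage_overshoot(response: Sequence[float], step_value: float) -> float:
    """Return (max(response) - step_value) / step_value, as a percentage."""
    if step_value == 0:
        raise ValueError("step_value must be nonzero")
    peak = max(response)
    return (peak - step_value) / step_value * 100.0
```

For a unit step whose sampled response peaks at 1.25, the function returns 25.0, which matches the statement that the overshoot of a unit step is the maximum value minus one.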

Eye Pattern

In telecommunication, an eye pattern, also known as an eye diagram, is an oscilloscope display in which a digital signal from a receiver is repetitively sampled and applied to the vertical input, while the data rate is used to trigger the horizontal sweep. It is so called because, for several types of coding, the pattern looks like a series of eyes between a pair of rails. It is a tool for the evaluation of the combined effects of channel noise, dispersion and intersymbol interference on the performance of a baseband pulse-transmission system. The technique was first used with the WWII SIGSALY secure speech transmission system. From a mathematical perspective, an eye pattern is a visualization of the probability density function (PDF) of the signal, modulo the unit interval (UI). In other words, it shows the probability of the signal being at each possible voltage across the duration of the UI. Typically a color ramp is applied to the PDF in order to make small brightness dif ...
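The "signal modulo the unit interval" view can be sketched as a two-dimensional histogram: each sample's time is folded into one UI and its voltage is binned. The function name `eye_histogram`, the bin counts, and the voltage range are hypothetical parameters chosen for illustration.

```python
from typing import List, Sequence


def eye_histogram(times: Sequence[float], voltages: Sequence[float], ui: float,
                  t_bins: int, v_bins: int,
                  v_min: float, v_max: float) -> List[List[int]]:
    """Count samples per (voltage bin, phase bin) after folding time modulo ui."""
    counts = [[0] * t_bins for _ in range(v_bins)]
    for t, v in zip(times, voltages):
        if not (v_min <= v <= v_max):
            continue  # ignore samples outside the plotted voltage range
        phase = (t % ui) / ui  # position within the unit interval, in [0, 1)
        col = min(int(phase * t_bins), t_bins - 1)
        row = min(int((v - v_min) / (v_max - v_min) * v_bins), v_bins - 1)
        counts[row][col] += 1
    return counts
```

Dividing each count by the total number of samples gives an estimate of the probability that the signal is at a given voltage at a given phase of the UI; a color ramp could then be applied to those values.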

BIOS

In computing, BIOS (Basic Input/Output System, also known as the System BIOS, ROM BIOS, BIOS ROM or PC BIOS) is firmware used to provide runtime services for operating systems and programs and to perform hardware initialization during the booting process (power-on startup). The BIOS firmware comes pre-installed on an IBM PC or IBM PC compatible's system board and exists in some UEFI-based systems to maintain compatibility with operating systems that do not support UEFI native operation. The name originates from the Basic Input/Output System used in the CP/M operating system in 1975. The BIOS, originally proprietary to the IBM PC, has been reverse engineered by some companies (such as Phoenix Technologies) looking to create compatible systems. The interface of that original system serves as a de facto standard. The BIOS in modern PCs initializes and tests the system hardware components (power-on self-test), and loads a boot loader from a mass storage device which then ...

Memory Reference Code

The Memory Reference Code (or MRC) is a fundamental component in the design of some computers, and is "one of the most important aspects of the BIOS" for an Intel-based motherboard. It is the part of an Intel motherboard's firmware that determines how the computer's memory (RAM) will be initialized, and adjusts memory timing algorithms correctly for the effects of any modifications set by the user or computer hardware.

Overview: Intel has defined the Memory Reference Code (MRC) as follows: The MRC is responsible for initializing the memory as part of the POST process at power-on. Intel provides support in the MRC for all fully validated memory configurations. For non-validated configurations, a system designer should work with their BIOS vendor to produce a working MRC solution ... The MRC in the system BIOS needs to know the specification of the attached system memory. Most of this info should be contained in the onboard SPD. With this in mind care needs to be taken wh ...

Preemption (computing)

In computing, preemption is the act of temporarily interrupting an executing task, with the intention of resuming it at a later time. This interruption is performed by an external scheduler with no assistance or cooperation from the task. This preemptive scheduler usually runs in the most privileged protection ring, meaning that interruption and resuming are considered highly secure actions. Such a change in the currently executing task of a processor is known as context switching.

User mode and kernel mode: In any given system design, some operations performed by the system may not be preemptable. This usually applies to kernel functions and service interrupts which, if not permitted to run to completion, would tend to produce race conditions resulting in deadlock. Barring the scheduler from preempting tasks while they are processing kernel functions simplifies the kernel design at the expense of system responsiveness. The distinction between user mode and kernel mode, whi ...

Branch Prediction

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g., an if–then–else structure) will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures such as x86. Two-way branching is usually implemented with a conditional jump instruction. A conditional jump can either be "taken" and jump to a different place in program memory, or it can be "not taken" and continue execution immediately after the conditional jump. It is not known for certain whether a conditional jump will be taken or not taken until the condition has been calculated and the conditional jump has passed the execution stage in the instruction pipeline (see fig. 1). Without branch prediction, the processor would have to wait until the conditional jump instruction ha ...
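To make the idea of guessing "taken" versus "not taken" concrete, the sketch below models one simple and widely described scheme, a table of two-bit saturating counters indexed by the branch address. The text above does not name a particular scheme, so this choice, the class name `TwoBitPredictor`, the table size, and the initial counter state are all assumptions for illustration.

```python
from typing import Iterable, List, Tuple


class TwoBitPredictor:
    """Table of 2-bit saturating counters: 0-1 predict not taken, 2-3 taken."""

    def __init__(self, table_size: int = 16) -> None:
        self.table_size = table_size
        self.counters: List[int] = [1] * table_size  # start weakly not taken

    def predict(self, pc: int) -> bool:
        return self.counters[pc % self.table_size] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = pc % self.table_size
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def accuracy(predictor: TwoBitPredictor,
             trace: Iterable[Tuple[int, bool]]) -> float:
    """Fraction of branches in the trace whose outcome was predicted correctly."""
    correct = total = 0
    for pc, taken in trace:
        correct += predictor.predict(pc) == taken
        predictor.update(pc, taken)
        total += 1
    return correct / total
```

For a loop branch that is taken nine times and then falls through once, this predictor mispredicts only the first iteration and the loop exit, so eight of the ten predictions are correct.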

Memory Dependence Prediction

Memory dependence prediction is a technique, employed by high-performance out-of-order execution microprocessors that execute memory access operations (loads and stores) out of program order, to predict true dependencies between loads and stores at instruction execution time. With the predicted dependence information, the processor can then decide to speculatively execute certain loads and stores out of order, while preventing other loads and stores from executing out of order (keeping them in order). Later in the pipeline, memory disambiguation techniques are used to determine whether the loads and stores were correctly executed and, if not, to recover. By using the memory dependence predictor to keep most dependent loads and stores in order, the processor gains the benefits of aggressive out-of-order load/store execution but avoids many of the memory dependence violations that occur when loads and stores are incorrectly executed. This increases performance because it reduces the num ...
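The predict, speculate, and recover cycle described above can be sketched with a deliberately simplified model. The code below assumes a hypothetical predictor that remembers which load instructions (identified by program counter) have previously conflicted with an earlier, still-pending store, and makes those loads wait in the future. The class name, the string outcomes, and the use of an unbounded set are illustrative assumptions rather than a description of any specific processor.

```python
from typing import Set


class StoreWaitPredictor:
    """Remembers loads that violated a dependence and holds them back next time."""

    def __init__(self) -> None:
        self.wait_set: Set[int] = set()  # PCs of loads predicted to be dependent

    def issue_load(self, load_pc: int, load_addr: int,
                   pending_store_addrs: Set[int]) -> str:
        """Return what happens when a load meets earlier stores still pending."""
        if load_pc in self.wait_set:
            return "waited"  # predicted dependent: kept in order
        if load_addr in pending_store_addrs:
            # Disambiguation later finds the speculation was wrong: recover and learn.
            self.wait_set.add(load_pc)
            return "violation"
        return "speculated"  # executed early with no conflict
```

In this model a load that conflicts once is reported as a violation the first time and as "waited" on every later encounter, which is the behaviour that reduces repeated recoveries.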

Cache Prefetching

Cache prefetching is a technique used by computer processors to boost execution performance by fetching instructions or data from their original storage in slower memory to a faster local memory before they are actually needed (hence the term "prefetch"). Most modern computer processors have fast, local cache memory in which prefetched data is held until it is required. The source for the prefetch operation is usually main memory. Because of their design, accessing cache memories is typically much faster than accessing main memory, so prefetching data and then accessing it from caches is usually much faster than accessing it directly from main memory. Prefetching can be done with non-blocking cache control instructions.

Data vs. instruction cache prefetching: Cache prefetching can either fetch data or instructions into cache.

* Data prefetching fetches data before it is needed. Because data access patterns show less regularity than instruction patterns, acc ...
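A toy model can show why fetching ahead of demand helps. The sketch below counts demand misses for an address stream with and without a simple next-block prefetch on every access. The unbounded cache, the 64-byte block size, and the next-block policy are simplifying assumptions, and `count_misses` is a hypothetical name.

```python
from typing import Iterable, Set


def count_misses(addresses: Iterable[int], block_size: int = 64,
                 prefetch_next: bool = False) -> int:
    """Count demand misses in an unbounded cache, optionally prefetching block+1."""
    cached: Set[int] = set()
    misses = 0
    for addr in addresses:
        block = addr // block_size
        if block not in cached:
            misses += 1  # data had to come from slower memory on demand
            cached.add(block)
        if prefetch_next:
            cached.add(block + 1)  # bring in the following block ahead of use
    return misses
```

For a sequential scan over eight blocks, such as `range(0, 512, 8)`, the function reports eight misses without prefetching and one miss with it. The model ignores latency, bandwidth, and cache capacity, so it only illustrates the principle.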

Out-of-order Execution

In computer engineering, out-of-order execution (or more formally dynamic execution) is a paradigm used in most high-performance central processing units to make use of instruction cycles that would otherwise be wasted. In this paradigm, a processor executes instructions in an order governed by the availability of input data and execution units, rather than by their original order in a program. In doing so, the processor can avoid being idle while waiting for the preceding instruction to complete and can, in the meantime, process the next instructions that are able to run immediately and independently.

History: Out-of-order execution is a restricted form of data flow computation, which was a major research area in computer architecture in the 1970s and early 1980s. The first machine to use out-of-order execution was the CDC 6600 (1964), designed by James E. Thornton, which uses a scoreboard to avoid conflicts. It permits an instruction to execute if its source operand (read) ...
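The difference between program order and data-availability order can be sketched with a small single-issue simulation. It assumes each register is written by at most one instruction and is read only by later instructions, so only true data dependencies matter; the function name `run` and the instruction tuple layout are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# An instruction is (destination register, source registers, latency in cycles).
Instr = Tuple[str, Sequence[str], int]


def run(instrs: Sequence[Instr], out_of_order: bool) -> Tuple[List[int], int]:
    """Issue at most one instruction per cycle; return (issue order, finish cycle)."""
    # Registers produced by the program are unavailable until their producer issues.
    ready_at: Dict[str, float] = {dest: float("inf") for dest, _, _ in instrs}
    pending = list(range(len(instrs)))
    issue_order: List[int] = []
    cycle = 0
    while pending:
        # In-order issue may only consider the oldest pending instruction.
        candidates = list(pending) if out_of_order else pending[:1]
        for i in candidates:
            dest, srcs, latency = instrs[i]
            # Registers never written by the program count as ready at cycle 0.
            if all(ready_at.get(s, 0) <= cycle for s in srcs):
                ready_at[dest] = cycle + latency
                issue_order.append(i)
                pending.remove(i)
                break
        cycle += 1
    return issue_order, int(max(ready_at.values()))
```

With a three-cycle load followed by a dependent instruction and two independent ones, for example `[("r1", [], 3), ("r2", ["r1"], 1), ("r3", [], 1), ("r4", ["r3"], 1)]`, in-order issue finishes at cycle 6 while out-of-order issue runs the independent work during the load and finishes at cycle 4.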
