|

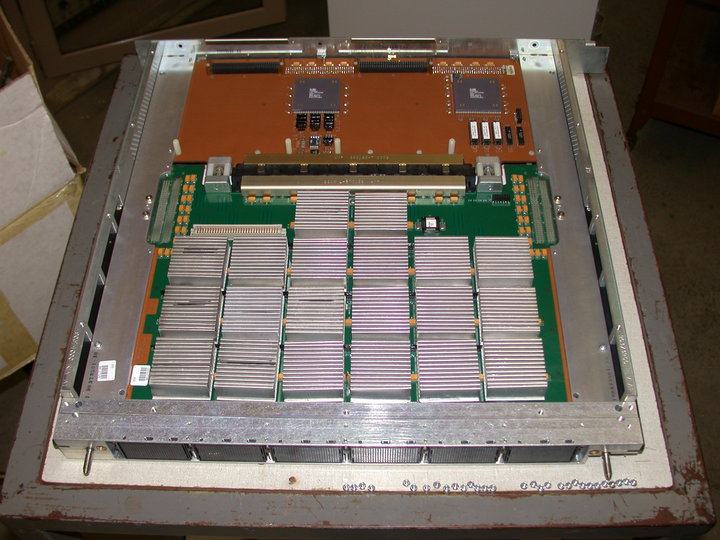

Many-core Processor

Manycore processors are special kinds of multi-core processors designed for a high degree of parallel processing, containing numerous simpler, independent processor cores (from a few tens of cores to thousands or more). Manycore processors are used extensively in embedded computers and high-performance computing. Contrast with multicore architecture Manycore processors are distinct from multi-core processors in being optimized from the outset for a higher degree of explicit parallelism, and for higher throughput (or lower power consumption) at the expense of latency and lower single-thread performance. The broader category of multi-core processors, by contrast, are usually designed to efficiently run ''both'' parallel ''and'' serial code, and therefore place more emphasis on high single-thread performance (e.g. devoting more silicon to out of order execution, deeper pipelines, more superscalar execution units, and larger, more general caches), and shared memory. These techniq ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multi-core Processor

A multi-core processor is a microprocessor on a single integrated circuit with two or more separate processing units, called cores, each of which reads and executes program instructions. The instructions are ordinary CPU instructions (such as add, move data, and branch) but the single processor can run instructions on separate cores at the same time, increasing overall speed for programs that support multithreading or other parallel computing techniques. Manufacturers typically integrate the cores onto a single integrated circuit die (known as a chip multiprocessor or CMP) or onto multiple dies in a single chip package. The microprocessors currently used in almost all personal computers are multi-core. A multi-core processor implements multiprocessing in a single physical package. Designers may couple cores in a multi-core device tightly or loosely. For example, cores may or may not share caches, and they may implement message passing or shared-memory inter-core commu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Direct Memory Access

Direct memory access (DMA) is a feature of computer systems and allows certain hardware subsystems to access main system memory independently of the central processing unit (CPU). Without DMA, when the CPU is using programmed input/output, it is typically fully occupied for the entire duration of the read or write operation, and is thus unavailable to perform other work. With DMA, the CPU first initiates the transfer, then it does other operations while the transfer is in progress, and it finally receives an interrupt from the DMA controller (DMAC) when the operation is done. This feature is useful at any time that the CPU cannot keep up with the rate of data transfer, or when the CPU needs to perform work while waiting for a relatively slow I/O data transfer. Many hardware systems use DMA, including disk drive controllers, graphics cards, network cards and sound cards. DMA is also used for intra-chip data transfer in multi-core processors. Computers that have DMA channels can ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenMP

OpenMP (Open Multi-Processing) is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, FreeBSD, HP-UX, Linux, macOS, and Windows. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior. OpenMP is managed by the nonprofit technology consortium ''OpenMP Architecture Review Board'' (or ''OpenMP ARB''), jointly defined by a broad swath of leading computer hardware and software vendors, including Arm, AMD, IBM, Intel, Cray, HP, Fujitsu, Nvidia, NEC, Red Hat, Texas Instruments, and Oracle Corporation. OpenMP uses a portable, scalable model that gives programmers a simple and flexible interface for developing parallel applications for platforms ranging from the standard desktop computer to the supercomputer. An application bui ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Actor Model

The actor model in computer science is a mathematical model of concurrent computation that treats ''actor'' as the universal primitive of concurrent computation. In response to a message it receives, an actor can: make local decisions, create more actors, send more messages, and determine how to respond to the next message received. Actors may modify their own private state, but can only affect each other indirectly through messaging (removing the need for lock-based synchronization). The actor model originated in 1973. It has been used both as a framework for a theoretical understanding of computation and as the theoretical basis for several practical implementations of concurrent systems. The relationship of the model to other work is discussed in actor model and process calculi. History According to Carl Hewitt, unlike previous models of computation, the actor model was inspired by physics, including general relativity and quantum mechanics. It was also influenced by ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Partitioned Global Address Space

In computer science, partitioned global address space (PGAS) is a parallel programming model paradigm. PGAS is typified by communication operations involving a global memory address space abstraction that is logically partitioned, where a portion is local to each process, thread, or processing element. The novelty of PGAS is that the portions of the shared memory space may have an affinity for a particular process, thereby exploiting locality of reference in order to improve performance. A PGAS memory model is featured in various parallel programming languages and libraries, including: Coarray Fortran, Unified Parallel CSplit-C Fortress, Chapel, X10UPC++ [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compute Kernel

In computing, a compute kernel is a routine compiled for high throughput accelerators (such as graphics processing units (GPUs), digital signal processors (DSPs) or field-programmable gate arrays (FPGAs)), separate from but used by a main program (typically running on a central processing unit). They are sometimes called compute shaders, sharing execution units with vertex shaders and pixel shaders on GPUs, but are not limited to execution on one class of device, or graphics APIs. Description Compute kernels roughly correspond to inner loops when implementing algorithms in traditional languages (except there is no implied sequential operation), or to code passed to internal iterators. They may be specified by a separate programming language such as " OpenCL C" (managed by the OpenCL API), as "compute shaders" written in a shading language (managed by a graphics API such as OpenGL), or embedded directly in application code written in a high level language, as in the cas ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

EE Times

''EE Times'' (''Electronic Engineering Times'') is an electronics industry magazine published in the United States since 1972. EE Times is currently owned by AspenCore, a division of Arrow Electronics since August 2016. Since its acquisition by AspenCore, EE Times has seen major editorial and publishing technology investment and a renewed emphasis on investigative coverage. New features include The Dispatch, which profiles frontline engineers and unpacks the real-life design problems and their solutions in technical yet conversational reporting. Ownership and status ''EE Times'' was launched in 1972 by Gerard G. Leeds of CMP Publications Inc. In 1999, the Leeds family sold CMP to United Business Media for $900 million. After 2000, ''EE Times'' moved more into web publishing. The shift in advertising from print to online began to accelerate in 2007 and the periodical shed staff to adjust to the downturn in revenue. In July 2013, the digital edition migrated to UBM TechWeb ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenCL

OpenCL (Open Computing Language) is a framework for writing programs that execute across heterogeneous platforms consisting of central processing units (CPUs), graphics processing units (GPUs), digital signal processors (DSPs), field-programmable gate arrays (FPGAs) and other processors or hardware accelerators. OpenCL specifies programming languages (based on C99, C++14 and C++17) for programming these devices and application programming interfaces (APIs) to control the platform and execute programs on the compute devices. OpenCL provides a standard interface for parallel computing using task- and data-based parallelism. OpenCL is an open standard maintained by the non-profit technology consortium Khronos Group. Conformant implementations are available from Altera, AMD, ARM, Creative, IBM, Imagination, Intel, Nvidia, Qualcomm, Samsung, Vivante, Xilinx, and ZiiLABS. Overview OpenCL views a computing system as consisting of a number of ''compute devices'', which ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Shader Processing Units

Graphics Core Next (GCN) is the codename for a series of microarchitectures and an instruction set architecture that were developed by AMD for its GPUs as the successor to its TeraScale microarchitecture. The first product featuring GCN was launched on January 9, 2012. GCN is a reduced instruction set SIMD microarchitecture contrasting the very long instruction word SIMD architecture of TeraScale. GCN requires considerably more transistors than TeraScale, but offers advantages for general-purpose GPU (GPGPU) computation due to a simpler compiler. GCN graphics chips were fabricated with CMOS at 28 nm, and with FinFET at 14 nm (by Samsung Electronics and GlobalFoundries) and 7 nm (by TSMC), available on selected models in AMD's Radeon HD 7000, HD 8000, 200, 300, 400, 500 and Vega series of graphics cards, including the separately released Radeon VII. GCN was also used in the graphics portion of Accelerated Processing Units (APUs), such as those in the PlayStation 4 and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Vector Processors

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SWAR Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to a decline in vector supercom ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as a result of convergence of a number of computing trends including t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |