
Mlpack

mlpack is a free, open-source, header-only software library for machine learning and artificial intelligence written in C++, built on top of the Armadillo library and the ensmallen numerical optimization library. mlpack emphasizes scalability, speed, and ease of use. Its aim is to make machine learning possible for novice users by means of a simple, consistent API, while simultaneously exploiting C++ language features to provide maximum performance and maximum flexibility for expert users. mlpack also has a light deployment infrastructure with minimal dependencies, making it well suited to embedded systems and low-resource devices. Its intended target users are scientists and engineers. It is distributed under the BSD license, making it useful for developing both open-source and proprietary software. Releases 1.0.11 and before were released under the LGPL license. The project is supported by the Georgia Institute of Technology and contributions from ...

Armadillo (C++ Library)

Armadillos are New World placental mammals in the order Cingulata. They form part of the superorder Xenarthra, along with the anteaters and sloths. 21 extant species of armadillo have been described, some of which are distinguished by the number of bands on their armor. All species are native to the Americas, where they inhabit a variety of environments. Living armadillos are characterized by a leathery armor shell and long, sharp claws for digging. They have short legs, but can move quite quickly. The average length of an armadillo is about , including its tail. The giant armadillo grows up to and weighs up to , while the pink fairy armadillo has a length of only . When threatened by a predator, *Tolypeutes* species frequently roll up into a ball; they are the only species of armadillo capable of this. Recent genetic research has shown that the megafaunal glyptodonts (up to tall with maximum body masses of around 2 tonnes), which became extinct around 12,000 yea ...

Euclidean Minimum Spanning Tree

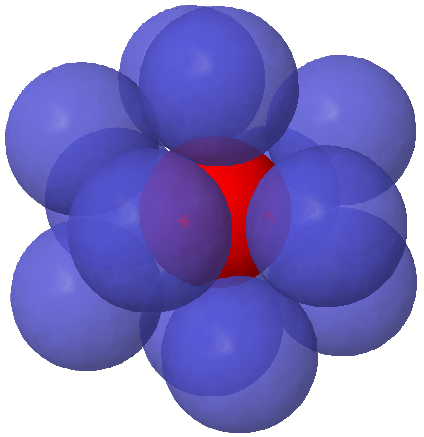

A Euclidean minimum spanning tree of a finite set of points in the Euclidean plane or higher-dimensional Euclidean space connects the points by a system of line segments with the points as endpoints, minimizing the total length of the segments. In it, any two points can reach each other along a path through the line segments. It can be found as the minimum spanning tree of a complete graph with the points as vertices and the Euclidean distances between points as edge weights. The edges of the minimum spanning tree meet at angles of at least 60°, at most six to a vertex. In higher dimensions, the number of edges per vertex is bounded by the kissing number of tangent unit spheres. The total length of the edges, for points in a unit square, is at most proportional to the square root of the number of points. Each edge lies in an empty region of the plane, and these regions can be used to prove that the Euclidean minimum spanning tree is a subgraph of other geometric graphs including ...
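The construction described above (the minimum spanning tree of the complete graph with Euclidean edge weights) can be sketched in a few lines. This is a minimal illustration that assumes Prim's algorithm as the spanning-tree method, which the text does not prescribe; the function name `euclidean_mst` is hypothetical, and the quadratic running time is only practical for small point sets.

```python
import math

def euclidean_mst(points):
    """Return (edges, total_length) of a Euclidean minimum spanning tree.

    Runs Prim's algorithm on the implicit complete graph whose edge
    weights are Euclidean distances between points (O(n^2) time).
    """
    n = len(points)
    if n == 0:
        return [], 0.0
    in_tree = [False] * n
    best_dist = [math.inf] * n  # cheapest known link from each vertex to the tree
    best_from = [-1] * n        # tree vertex that provides that link
    best_dist[0] = 0.0
    edges, total = [], 0.0
    for _ in range(n):
        # Add the vertex outside the tree that is closest to it.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best_dist[i])
        in_tree[u] = True
        if best_from[u] != -1:
            edges.append((best_from[u], u))
            total += best_dist[u]
        # Update the cheapest links of the remaining vertices.
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best_dist[v]:
                    best_dist[v] = d
                    best_from[v] = u
    return edges, total
```

For the four corners of a unit square, `euclidean_mst([(0, 0), (1, 0), (0, 1), (1, 1)])` returns three edges of length 1 each.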

Decision Stump

A decision stump is a machine learning model consisting of a one-level decision tree. That is, it is a decision tree with one internal node (the root) which is immediately connected to the terminal nodes (its leaves). A decision stump makes a prediction based on the value of just a single input feature. Sometimes they are also called 1-rules. Depending on the type of the input feature, several variations are possible. For nominal features, one may build a stump which contains a leaf for each possible feature value, or a stump with two leaves, one of which corresponds to some chosen category and the other to all the other categories. This is what has been implemented in Weka's DecisionStump classifier. For binary features these two schemes are identical. A missing value may be treated as yet another category. For continuous features, usually some threshold feature value is selected, and the stump contains two leaves, for values below and above the threshold. However, r ...
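The continuous-feature case can be illustrated with a short sketch. It assumes binary labels 0 and 1, candidate thresholds at midpoints between consecutive distinct feature values, and training error as the selection criterion; these choices and the names `fit_stump` and `predict_stump` are illustrative assumptions, not taken from any particular library.

```python
def fit_stump(values, labels):
    """Fit a threshold stump on one continuous feature with 0/1 labels.

    Returns (threshold, label_below, label_above), chosen to minimize
    the number of training errors.
    """
    distinct = sorted(set(values))
    if len(distinct) < 2:
        # Degenerate case: a single feature value, so predict the majority label.
        majority = int(sum(labels) * 2 >= len(labels))
        return distinct[0], majority, majority
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    best = None
    for t in candidates:
        for below, above in ((0, 1), (1, 0)):
            errors = sum(
                (below if x <= t else above) != y
                for x, y in zip(values, labels)
            )
            if best is None or errors < best[0]:
                best = (errors, t, below, above)
    return best[1], best[2], best[3]

def predict_stump(stump, x):
    """Predict the label of a single feature value x."""
    threshold, below, above = stump
    return below if x <= threshold else above
```

With `values = [1, 2, 3, 10, 11, 12]` and `labels = [0, 0, 0, 1, 1, 1]`, the only error-free split lies between 3 and 10, so the fitted threshold is 6.5.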

K-means Clustering

*k*-means clustering is a method of vector quantization, originally from signal processing, that aims to partition *n* observations into *k* clusters in which each observation belongs to the cluster with the nearest mean (cluster center or cluster centroid), serving as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells. *k*-means clustering minimizes within-cluster variances (squared Euclidean distances), but not regular Euclidean distances, which would be the more difficult Weber problem: the mean optimizes squared errors, whereas only the geometric median minimizes Euclidean distances. For instance, better Euclidean solutions can be found using *k*-medians and *k*-medoids. The problem is computationally difficult (NP-hard); however, efficient heuristic algorithms converge quickly to a local optimum. These are usually similar to the ex ...
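One widely used heuristic of this kind is Lloyd's algorithm, which alternates between assigning each observation to its nearest mean and recomputing each mean. The sketch below is a minimal version under stated assumptions: the caller supplies the initial centers (no random initialization), an empty cluster keeps its previous center, and the function name `kmeans` is hypothetical.

```python
import math

def kmeans(points, initial_centers, max_iter=100):
    """Lloyd's algorithm: returns (centers, clusters) at a local optimum."""
    centers = [tuple(c) for c in initial_centers]

    def assign(current):
        # Assignment step: put each point in the cluster of its nearest center.
        groups = [[] for _ in current]
        for p in points:
            j = min(range(len(current)), key=lambda i: math.dist(p, current[i]))
            groups[j].append(p)
        return groups

    clusters = assign(centers)
    for _ in range(max_iter):
        # Update step: move each center to the mean of its cluster.
        new_centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centers == centers:
            break  # no center moved, so the assignment is stable
        centers = new_centers
        clusters = assign(centers)
    return centers, clusters
```

The result depends on the initial centers, which is why the text speaks of a local rather than a global optimum.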

Kernel Density Estimation

In statistics, kernel density estimation (KDE) is the application of kernel smoothing for probability density estimation, i.e., a non-parametric method to estimate the probability density function of a random variable based on kernels as weights. KDE answers a fundamental data smoothing problem where inferences about the population are made based on a finite data sample. In some fields such as signal processing and econometrics it is also termed the Parzen–Rosenblatt window method, after Emanuel Parzen and Murray Rosenblatt, who are usually credited with independently creating it in its current form. One of the famous applications of kernel density estimation is in estimating the class-conditional marginal densities of data when using a naive Bayes classifier, which can improve its prediction accuracy. Definition: Let $(x_1, x_2, \dots, x_n)$ be independent and identically distributed samples drawn from some univariate distribution with an unknown density $f$ at any given point $x$. We are in ...
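The standard kernel density estimator has the form $\hat{f}_h(x) = \frac{1}{nh} \sum_{i=1}^{n} K\!\left(\frac{x - x_i}{h}\right)$, where $K$ is the kernel and $h > 0$ is the bandwidth. The sketch below assumes a Gaussian kernel and a bandwidth chosen by the caller; both are illustrative choices, and the function name `gaussian_kde` is hypothetical.

```python
import math

def gaussian_kde(samples, bandwidth):
    """Return a function x -> estimated density, using a Gaussian kernel."""
    n = len(samples)
    norm = n * bandwidth * math.sqrt(2 * math.pi)

    def density(x):
        # Sum of Gaussian bumps centered on each sample, scaled by 1 / (n * h).
        total = sum(math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in samples)
        return total / norm

    return density
```

A larger bandwidth gives a smoother estimate, while a smaller one follows the individual samples more closely.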

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

GitHub

GitHub is a proprietary developer platform that allows developers to create, store, manage, and share their code. It uses Git to provide distributed version control, and GitHub itself provides access control, bug tracking, software feature requests, task management, continuous integration, and wikis for every project. Headquartered in California, GitHub, Inc. has been a subsidiary of Microsoft since 2018. It is commonly used to host open source software development projects. GitHub reported having over 100 million developers and more than 420 million repositories, including at least 28 million public repositories. It is the world's largest source code host. Over five billion developer contributions were made to more than 500 million open source projects in 2024. Founding: The development of the GitHub platform began on October 19, 2007. The site was launched in April 2008 by Tom ...

Mathematical Model

A mathematical model is an abstract description of a concrete system using mathematical concepts and language. The process of developing a mathematical model is termed *mathematical modeling*. Mathematical models are used in applied mathematics and in the natural sciences (such as physics, biology, earth science, chemistry) and engineering disciplines (such as computer science, electrical engineering), as well as in non-physical systems such as the social sciences (such as economics, psychology, sociology, political science). It can also be taught as a subject in its own right. The use of mathematical models to solve problems in business or military operations is a large part of the field of operations research. Mathematical models are also used in music, linguistics, and philosophy (for example, intensively in analytic philosophy). A model may help to explain a system and to study the effects of different components, ...

Collaborative Filtering

Collaborative filtering (CF) is, besides content-based filtering, one of two major techniques used by recommender systems (Francesco Ricci, Lior Rokach, and Bracha Shapira, "Introduction to Recommender Systems Handbook", Recommender Systems Handbook, Springer, 2011, pp. 1–35). Collaborative filtering has two senses, a narrow one and a more general one. In the newer, narrower sense, collaborative filtering is a method of making automatic predictions (filtering) about a user's interests by utilizing preferences or taste information collected from many users (collaborating). This approach assumes that if persons *A* and *B* share similar opinions on one issue, they are more likely to agree on other issues compared to a random pairing of *A* with another person. For instance, a collaborative filtering system for television programming could predict which shows a user might enjoy based on a limited list of the user's tastes (likes or dislikes). These predictions are specific to t ...
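The assumption that users who agreed in the past will tend to agree again can be turned into a simple user-based predictor. The sketch below assumes cosine similarity over co-rated items and a similarity-weighted average as the prediction rule; these are common but illustrative choices, and the names `cosine_similarity` and `predict_rating` as well as the sample ratings are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two users over the items both have rated."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in common))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in common))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def predict_rating(ratings, user, item):
    """Predict user's rating of item as a similarity-weighted average
    of the ratings given to that item by other users.

    ratings maps user -> {item: rating}. Returns None if nobody
    comparable has rated the item.
    """
    weighted_sum = weight_total = 0.0
    for other, their_ratings in ratings.items():
        if other == user or item not in their_ratings:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        weighted_sum += sim * their_ratings[item]
        weight_total += abs(sim)
    return weighted_sum / weight_total if weight_total else None

# Hypothetical ratings: A agrees closely with B and much less with C.
ratings = {
    "A": {"x": 5, "y": 1},
    "B": {"x": 5, "y": 1, "z": 4},
    "C": {"x": 1, "y": 5, "z": 2},
}
prediction = predict_rating(ratings, "A", "z")
```

Because A's known ratings match B's much better than C's, the prediction for item `z` lands closer to B's rating of 4 than to C's rating of 2.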

Mixture Models

In chemistry, a mixture is a material made up of two or more different chemical substances which can be separated by physical methods. It is an impure substance made up of two or more elements or compounds mechanically mixed together in any proportion. A mixture is the physical combination of two or more substances in which the identities are retained and are mixed in the form of solutions, suspensions or colloids. Mixtures are one product of mechanically blending or mixing chemical substances such as elements and compounds, without chemical bonding or other chemical change, so that each ingredient substance retains its own chemical properties and makeup. Despite the fact that there are no chemical changes to its constituents, the physical properties of a mixture, such as its melting point, may differ from those of the components. Some mixtures can be separated into their components by using physical (mechanical or thermal) means. Azeotropes are one kind of mixture that usually po ...

Density Estimation

In statistics, probability density estimation or simply density estimation is the construction of an estimate, based on observed data, of an unobservable underlying probability density function. The unobservable density function is thought of as the density according to which a large population is distributed; the data are usually thought of as a random sample from that population. A variety of approaches to density estimation are used, including Parzen windows and a range of data clustering techniques, including vector quantization. The most basic form of density estimation is a rescaled histogram. Example: We will consider records of the incidence of diabetes. The following is quoted verbatim from the data set description: A population of women who were at least 21 years old, of Pima Indian heritage and living near Phoenix, Arizona, was tested for diabetes mellitus according to World Health Organization criteria. The data were collected by the US National Ins ...
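The rescaled histogram mentioned above divides each bin count by the number of samples times the bin width, so that the bar areas sum to one. The sketch below assumes equal-width bins spanning the sample range and at least two distinct sample values; the function name `histogram_density` is hypothetical.

```python
def histogram_density(samples, num_bins):
    """Return a list of (left_edge, right_edge, density) for equal-width bins.

    Each density is count / (n * bin_width), so the bars integrate to 1.
    Assumes the samples contain at least two distinct values.
    """
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for x in samples:
        # The maximum value falls on the right edge, so clamp it into the last bin.
        idx = min(int((x - lo) / width), num_bins - 1)
        counts[idx] += 1
    n = len(samples)
    return [
        (lo + i * width, lo + (i + 1) * width, c / (n * width))
        for i, c in enumerate(counts)
    ]
```

The number of bins plays the same role here as the bandwidth does in kernel-based estimators: fewer bins give a coarser, smoother estimate.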