
Kernel-independent Component Analysis

In statistics, kernel-independent component analysis (kernel ICA) is an efficient algorithm for independent component analysis which estimates source components by optimizing a *generalized variance* contrast function based on representations in a reproducing kernel Hilbert space. These contrast functions use the notion of mutual information as a measure of statistical independence.

Main idea: Kernel ICA is based on the idea that correlations between two random variables can be represented in a reproducing kernel Hilbert space (RKHS), denoted by \mathcal{F}, associated with a feature map L_x: \mathcal{F} \mapsto \mathbb{R} defined for a fixed x \in \mathbb{R}. The \mathcal{F}-correlation between two random variables X and Y is define ...

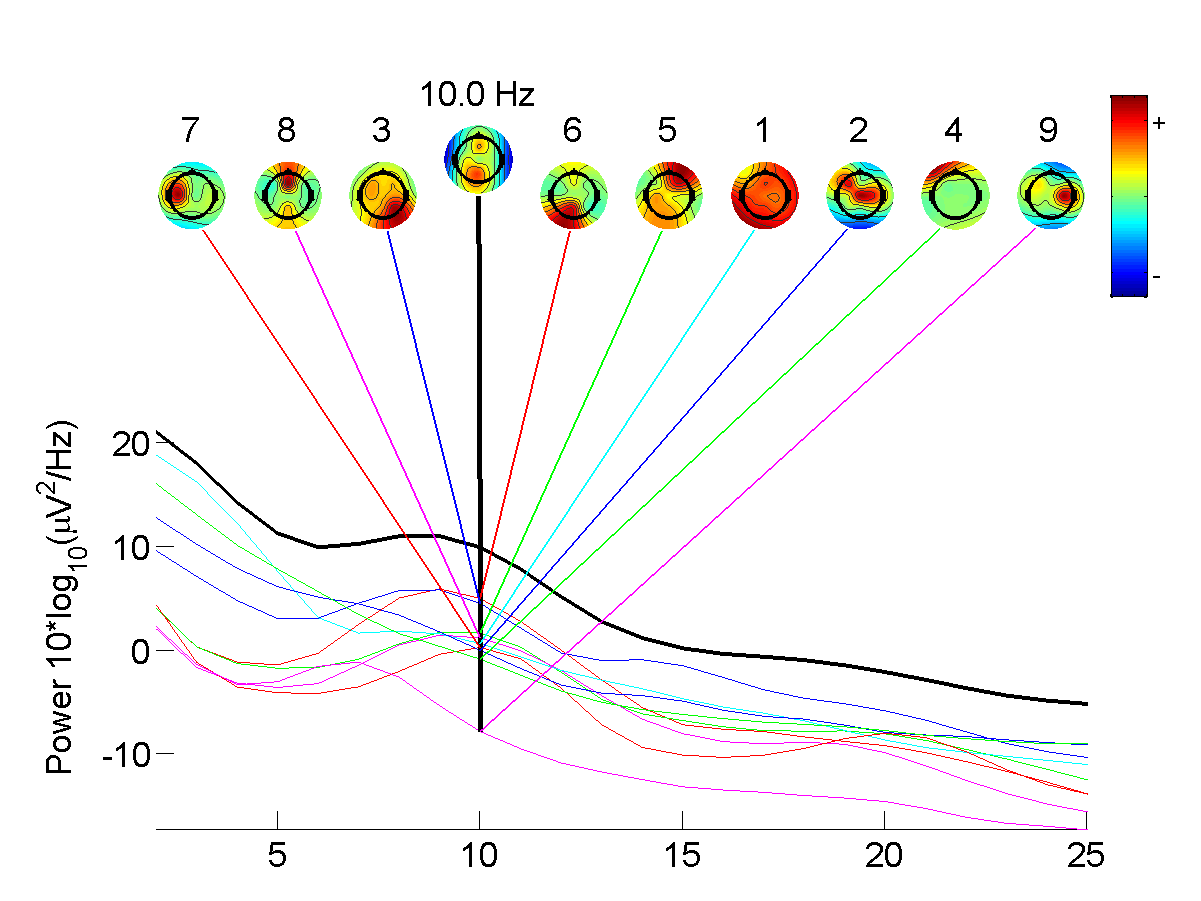

Independent Component Analysis

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA was invented by Jeanny Hérault and Christian Jutten in 1985. ICA is a special case of blind source separation. A common example application of ICA is the "cocktail party problem" of listening in on one person's speech in a noisy room.

Introduction: Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence ...
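The additive mixing described above can be sketched in a few lines. This is only an illustration of the mixing model, not an ICA algorithm: the two source waveforms, the mixing matrix `A`, and the helper names are all hypothetical, and the sources are recovered here by inverting a *known* `A`. ICA addresses the harder case in which `A` is unknown and must be estimated from the observations alone.

```python
import math

def sawtooth(t, freq):
    """Sawtooth wave in [-1, 1) with the given frequency (hypothetical source)."""
    phase = (t * freq) % 1.0
    return 2.0 * phase - 1.0

def apply_matrix(M, signals):
    """Apply a 2x2 matrix to two signals sample by sample: out(t) = M s(t)."""
    s1, s2 = signals
    return [
        [M[0][0] * u + M[0][1] * v for u, v in zip(s1, s2)],
        [M[1][0] * u + M[1][1] * v for u, v in zip(s1, s2)],
    ]

def inverse_2x2(M):
    """Closed-form inverse of a non-singular 2x2 matrix."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det],
            [-M[1][0] / det, M[0][0] / det]]

# Two source signals sampled at 1000 points over one second.
times = [k / 1000.0 for k in range(1000)]
sources = [
    [math.sin(2.0 * math.pi * 5.0 * t) for t in times],  # "speaker" 1
    [sawtooth(t, 3.0) for t in times],                    # "speaker" 2
]

# Assumed mixing matrix: each "microphone" hears a weighted sum of both sources.
A = [[1.0, 0.6], [0.4, 1.0]]
observed = apply_matrix(A, sources)

# With A known, separation is just a linear inversion.
recovered = apply_matrix(inverse_2x2(A), observed)
```

Each entry of `observed` is, at every time t, a plain weighted sum of the two sources, which is the situation the cocktail party problem describes.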

Reproducing Kernel Hilbert Space

In functional analysis, a reproducing kernel Hilbert space (RKHS) is a Hilbert space of functions in which point evaluation is a continuous linear functional. Specifically, a Hilbert space H of functions from a set X (to \mathbb{R} or \mathbb{C}) is an RKHS if the point-evaluation functional L_x:H\to\mathbb{C}, L_x(f)=f(x), is continuous for every x\in X. Equivalently, H is an RKHS if there exists a function K_x \in H such that, for all f \in H, \langle f, K_x \rangle = f(x). The function K_x is then called the *reproducing kernel*, and it reproduces the value of f at x via the inner product. An immediate consequence of this property is that convergence in norm implies uniform convergence on any subset of X on which \|K_x\| is bounded. However, the converse does not necessarily hold. Often the set X carries a topology, and \|K_x\| depends continuously on x\in X, in which case convergence in norm implies uniform convergence on compact subsets of X. It is not entirely straightforwar ...
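The reproducing property \langle f, K_x \rangle = f(x) can be checked numerically for functions that are finite combinations of kernel sections. The sketch below assumes a Gaussian kernel on the real line and uses hypothetical names (`KernelExpansion`, `inner`, `kernel_section`); it works only in the span of finitely many kernel sections, not in the full completed space.

```python
import math

def gaussian_kernel(x, y, sigma=1.0):
    """K(x, y) = exp(-(x - y)^2 / (2 sigma^2)), a positive-definite kernel."""
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))

class KernelExpansion:
    """f = sum_i a_i K(., c_i): a finite combination of kernel sections."""

    def __init__(self, centers, coeffs):
        self.centers = list(centers)
        self.coeffs = list(coeffs)

    def __call__(self, x):
        # Point evaluation f(x).
        return sum(a * gaussian_kernel(x, c)
                   for a, c in zip(self.coeffs, self.centers))

def inner(f, g):
    """RKHS inner product on expansions: <f, g> = sum_ij a_i b_j K(c_i, d_j)."""
    return sum(a * b * gaussian_kernel(c, d)
               for a, c in zip(f.coeffs, f.centers)
               for b, d in zip(g.coeffs, g.centers))

def kernel_section(x):
    """K_x = K(., x), the element that represents evaluation at x."""
    return KernelExpansion([x], [1.0])

f = KernelExpansion([-1.0, 0.5, 2.0], [0.7, -1.2, 0.4])
x = 0.3

reproduced = inner(f, kernel_section(x))        # <f, K_x>
norm_f = math.sqrt(inner(f, f))                 # ||f||
norm_Kx = math.sqrt(inner(kernel_section(x), kernel_section(x)))  # sqrt(K(x, x))
```

Here `reproduced` agrees with `f(x)` up to rounding, and the Cauchy-Schwarz bound |f(x)| <= \|f\| \|K_x\| is the inequality behind the statement that convergence in norm implies uniform convergence wherever \|K_x\| is bounded.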

Independence (Probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. M ...
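The difference between pairwise and mutual independence is easiest to see on a small example. The sketch below uses a standard textbook construction that is not taken from the text above: two fair coin tosses, with events A (first toss is heads), B (second toss is heads), and C (both tosses agree). Probabilities are exact fractions; the helper names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin tosses, each of the 4 outcomes equally likely.
outcomes = list(product("HT", repeat=2))

def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))

def both(*events):
    """Intersection of events."""
    return lambda w: all(e(w) for e in events)

A = lambda w: w[0] == "H"      # first toss is heads
B = lambda w: w[1] == "H"      # second toss is heads
C = lambda w: w[0] == w[1]     # both tosses agree

# Pairwise independence: P(E and F) = P(E) P(F) for every pair.
pairwise = all(prob(both(E, F)) == prob(E) * prob(F)
               for E, F in [(A, B), (A, C), (B, C)])

# Mutual independence additionally needs the product rule for all three.
mutual = pairwise and prob(both(A, B, C)) == prob(A) * prob(B) * prob(C)
```

Every pair satisfies the product rule, so `pairwise` is true, but P(A and B and C) = 1/4 while P(A) P(B) P(C) = 1/8, so `mutual` is false: knowing A and B together determines C.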

Whitening Transformation

A whitening transformation or sphering transformation is a linear transformation that transforms a vector of random variables with a known covariance matrix into a set of new variables whose covariance is the identity matrix, meaning that they are uncorrelated and each have variance 1. The transformation is called "whitening" because it changes the input vector into a white noise vector. Several other transformations are closely related to whitening:

1. the decorrelation transform removes only the correlations but leaves variances intact,
2. the standardization transform sets variances to 1 but leaves correlations intact,
3. a coloring transformation transforms a vector of white random variables into a random vector with a specified covariance matrix.

Definition: Suppose X is a random (column) vector with non-singular covariance matrix \Sigma and mean 0. Then the transformation Y = W X with a whitening matrix W satisfying the condition W^\mathrm{T} W = \Sigma^{-1} yields the whitened ...
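As a rough illustration of the definition, the sketch below whitens two-dimensional data using one particular choice of W, the inverse of the Cholesky factor of the sample covariance. This choice is an assumption made for the example: any W with W^\mathrm{T} W = \Sigma^{-1} qualifies, and the whitening matrix is not unique. The synthetic data and the function names are hypothetical.

```python
import math
import random

def center(values):
    """Subtract the sample mean so the data has mean 0."""
    m = sum(values) / len(values)
    return [v - m for v in values]

def cov2(xs, ys):
    """Entries (a, b, c) of the covariance [[a, b], [b, c]] of zero-mean data."""
    n = len(xs)
    a = sum(x * x for x in xs) / n
    b = sum(x * y for x, y in zip(xs, ys)) / n
    c = sum(y * y for y in ys) / n
    return a, b, c

def cholesky_whitening(a, b, c):
    """W = L^{-1}, where Sigma = L L^T is the 2x2 Cholesky factorization."""
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(c - l21 * l21)
    return [[1.0 / l11, 0.0],
            [-l21 / (l11 * l22), 1.0 / l22]]

# Synthetic correlated data (seeded so the run is repeatable).
rng = random.Random(0)
g1 = [rng.gauss(0, 1) for _ in range(2000)]
g2 = [rng.gauss(0, 1) for _ in range(2000)]
xs = center([2.0 * u for u in g1])
ys = center([1.5 * u + 0.5 * v for u, v in zip(g1, g2)])

a, b, c = cov2(xs, ys)
W = cholesky_whitening(a, b, c)

# Y = W X, applied sample by sample.
y1 = [W[0][0] * x + W[0][1] * y for x, y in zip(xs, ys)]
y2 = [W[1][0] * x + W[1][1] * y for x, y in zip(xs, ys)]

# Covariance of the whitened data: identity up to rounding.
whitened_cov = cov2(y1, y2)
```

Because W is computed from the sample covariance of the same data, `whitened_cov` comes out as (1, 0, 1) up to floating-point error. Other common choices of W, such as the symmetric inverse square root of \Sigma, satisfy the same condition and differ from this one by a rotation.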