
Eqn (software)

Part of the troff suite of Unix document layout tools, eqn is a preprocessor that formats equations for printing. A similar program, neqn, accepted the same input as eqn, but produced output tuned to look better in nroff. The eqn program was created in 1974 by Brian Kernighan and Lorinda Cherry. It was implemented using the yacc compiler-compiler. The input language used by eqn allows the user to write mathematical expressions in much the same way as they would be spoken aloud. The language is defined by a context-free grammar, together with operator precedence and operator associativity rules. The eqn language is similar to the mathematical component of TeX, which appeared several years later, but is simpler and less comple ...

Brian Kernighan

Brian Wilson Kernighan (born January 30, 1942) is a Canadian computer scientist. He worked at Bell Labs and contributed to the development of Unix alongside Unix creators Ken Thompson and Dennis Ritchie. Kernighan's name became widely known through co-authorship of the first book on the C programming language (''The C Programming Language'') with Dennis Ritchie. Kernighan affirmed that he had no part in the design of the C language ("it's entirely Dennis Ritchie's work"). Kernighan authored many Unix programs, including ditroff. He is coauthor of the AWK and AMPL programming languages. The "K" in both K&R C and AWK stands for "Kernighan". In collaboration with Shen Lin he devised well-known heuristics for two NP-complete optimization problems: graph partitioning and the travelling salesman problem. In a display of authorial equity, the former is usually called the Kernighan–Lin algorithm, while the latter is known as the Lin–Kernighan heuristic. Kernighan ...

Nroff

nroff (short for "new roff") is a text-formatting program on Unix and Unix-like operating systems. It produces output suitable for simple fixed-width printers and terminal windows. It is an integral part of the Unix help system, being used to format man pages for display. nroff and the related troff were both developed from the original roff. While nroff was intended to produce output on terminals and line printers, troff was intended to produce output on typesetting systems. Both used the same underlying markup, and a single source file could normally be used by nroff or troff without change. nroff was written by Joe Ossanna for Version 2 Unix, in assembly language, and then ported to C. It was a descendant of the RUNOFF program from CTSS, the first computerized text-formatting program, and is a predecessor of the Unix troff document processing system. There is also a free software version of nroff in the groff package emulating the AT&T version, ...

Communications Of The ACM

''Communications of the ACM'' (''CACM'') is the monthly journal of the Association for Computing Machinery (ACM). It was established in 1958, with Saul Rosen as its first managing editor. It is sent to all ACM members. Articles are intended for readers with backgrounds in all areas of computer science and information systems. The focus is on the practical implications of advances in information technology and associated management issues; ACM also publishes a variety of more theoretical journals. The magazine straddles the boundary between a science magazine, a trade magazine, and a scientific journal. While the content is subject to peer review, the articles published are often summaries of research that may also be published elsewhere. Material published must be accessible and relevant to a broad readership. From 1960 onward, ''CACM'' also published algorithms, expressed in ALGOL. The collection of algorithms later became known as the Collected Algorithms of the ACM. CA ...

Whitespace Character

A whitespace character is a character data element that represents white space when text is rendered for display by a computer. For example, a ''space'' character (ASCII 32) represents blank space such as a word divider in a Western script. A printable character results in output when rendered, but a whitespace character does not. Instead, whitespace characters define the layout of text to a limited degree, interrupting the normal sequence of rendering characters next to each other. The output of subsequent characters is typically shifted to the right (or to the left for right-to-left script) or to the start of the next line. The effect of multiple sequential whitespace characters is cumulative such that the next printable character is rendered at a location based on the accumulated effect of preceding whitespace characters. The origin of the term ''whitespace'' is rooted in the common practice of rendering text on white paper. Normally, a whitespace character is ''not' ...

Lexical Analysis

Lexical tokenization is the conversion of a text into (semantically or syntactically) meaningful ''lexical tokens'' belonging to categories defined by a "lexer" program. In the case of a natural language, those categories include nouns, verbs, adjectives, punctuation, etc. In the case of a programming language, the categories include identifiers, operators, grouping symbols, data types and language keywords. Lexical tokenization is related to the type of tokenization used in large language models (LLMs) but with two differences. First, lexical tokenization is usually based on a lexical grammar, whereas LLM tokenizers are usually probability-based. Second, LLM tokenizers perform a second step that converts the tokens into numerical values. A rule-based program that performs lexical tokenization is called a ''tokenizer'' or ''scanner'', although ''scanner'' is also a term for the first stage of a lexer. A lexer forms the first phase of a compiler frontend in processing. ...
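As a rough illustration of a rule-based tokenizer, the sketch below splits a small arithmetic-style input into categorized tokens using regular expressions. The token categories, the `TOKEN_SPEC` table and the `tokenize` function are hypothetical names chosen for this example; they are not taken from any particular lexer.

```python
import re

# Hypothetical token categories for a tiny arithmetic language.
# Order matters: earlier patterns win when several could match.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/^=]"),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("SKIP", r"\s+"),      # whitespace separates tokens but is not emitted
    ("MISMATCH", r"."),    # any other single character is an error
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(text):
    """Return a list of (category, lexeme) pairs for the given text."""
    tokens = []
    for match in MASTER.finditer(text):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise ValueError(f"unexpected character {match.group()!r} at {match.start()}")
        tokens.append((kind, match.group()))
    return tokens


print(tokenize("x = 3 + 42"))
```

Each token carries its category alongside the matched text, which is the form a parser would typically consume in the next phase.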

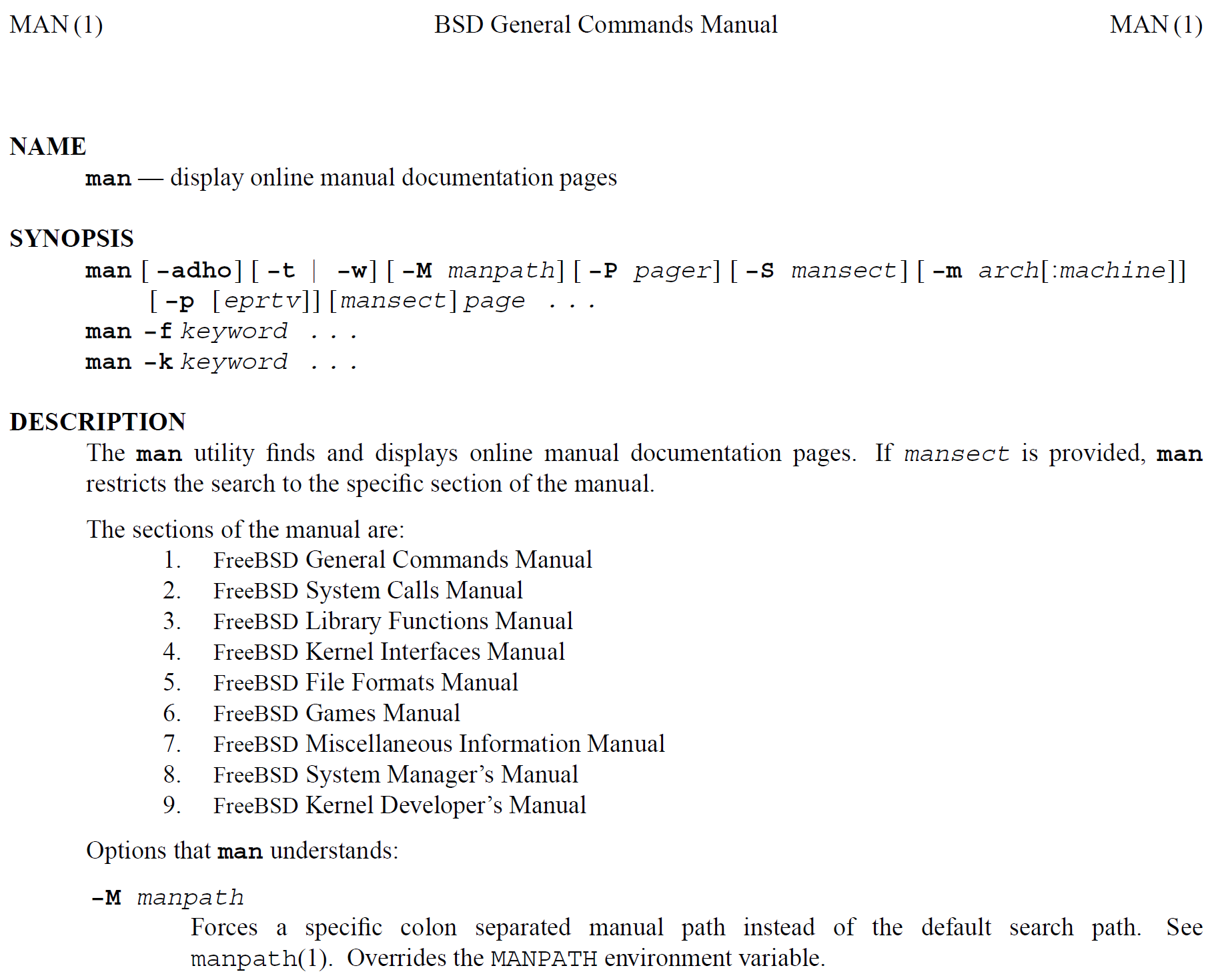

Man Pages

A man page (short for manual page) is a form of software documentation found on Unix and Unix-like operating systems. Topics covered include programs, system libraries, system calls, and sometimes local system details. The local host administrators can create and install manual pages associated with the specific host. An end user may invoke a documentation page by issuing the man command followed by the name of the item for which they want the documentation. These manual pages are typically requested by end users, programmers and administrators doing real-time work, but they can also be formatted for printing. By default, man typically uses a formatting program such as nroff with a macro package or mandoc, and also a terminal pager program such as more or less to display its output on the user's screen. Man pages are often referred to as an ''online'' form of software documentation, even though the man command does not require internet access. The environment variable ...

Mandoc

mandoc (historically called mdocml) is a utility used for formatting man pages in BSD operating systems (e.g. NetBSD), specifically those written in the ''mdoc'' and ''man'' macro languages. Unlike the groff and older troff and nroff tools that are predominantly used for this purpose by tools such as man, mandoc focuses specifically on manuals and is not suitable for general-purpose typesetting. mandoc is mainly used to format the ''mdoc'' manuals used in the BSD operating systems, but it also implements most of the ''man'' macros used in Linux distributions, as well as a subset of roff commands occasionally intermixed with the ''man'' macros. It does not support other macro sets such as ''mm'' and ''ms'', or any typesetting features like hyphenation, fonts and alignment. Simple styling such as bold and italics is supported, but italicized text is replaced by underlined text on the terminal. mandoc has built-in support for the troff soelim (inclusion) preprocessor and partial b ...

Keyword (computer Programming)

Keyword may refer to:

Computing
* Index term, a term used as a keyword to documents in an information system such as a catalog or a search engine
* Keyword (Internet search), a word or phrase typically used by bloggers or online content creators to rank a web page on a particular topic
* A reserved word in a programming language

Other uses
* Keyword (linguistics), a word that occurs in a text more often than by chance alone
* ''Keywords: A Vocabulary of Culture and Society'', 1973 non-fiction book by Raymond Williams
* "Keyword" (song), a 2008 song by Tohoshinki

See also
* Buzzword
* Trigger word
* Related to index terms:
** Key Word in Context
** Keyword advertising, a form of online advertising
** Keyword clustering, a search ...

Groff (software)

groff (also called GNU troff) is a typesetting system that creates formatted output when given plain text mixed with formatting commands. It is the GNU replacement for the troff and nroff text formatters, which were both developed from the original roff. Groff contains a large number of helper programs, preprocessors, and postprocessors including eqn, tbl, pic and soelim. There are also several macro packages included that duplicate, expand on the capabilities of, or outright replace the standard troff macro packages. Development of new groff features is active, and groff is an important part of free, open source, and UNIX-derived operating systems such as Linux and 4.4 BSD derivatives, notably because troff macros are used to create man pages, the standard form of documentation on Unix and Unix-like systems. OpenBSD has replaced groff with mandoc in the base install since its 4.9 release, as has macOS Ventura. groff is an original implementation ...

Operator Associativity

In programming language theory, the associativity of an operator is a property that determines how operators of the same precedence are grouped in the absence of parentheses. If an operand is both preceded and followed by operators (for example, ^ 3 ^), and those operators have equal precedence, then the operand may be used as input to two different operations (i.e. the two operations indicated by the two operators). The choice of which operation to apply the operand to is determined by the associativity of the operators. Operators may be associative (meaning the operations can be grouped arbitrarily), left-associative (meaning the operations are grouped from the left), right-associative (meaning the operations are grouped from the right) or non-associative (meaning operations cannot be chained, often because the output type is incompatible with the input types). The associativity and precedence of an operator are part of the definition of the programming language; different p ...
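The difference between left and right grouping can be made concrete with a short sketch. The helper names `fold_left` and `fold_right` are hypothetical and exist only for this illustration; the built-in behaviour shown for `-` and `**` is standard Python.

```python
from functools import reduce
import operator


def fold_left(op, operands):
    """Group from the left: ((a op b) op c) ..."""
    return reduce(op, operands)


def fold_right(op, operands):
    """Group from the right: a op (b op (c ...))"""
    return reduce(lambda acc, x: op(x, acc), reversed(operands))


# Subtraction is left-associative in Python: 10 - 4 - 3 means (10 - 4) - 3.
assert 10 - 4 - 3 == fold_left(operator.sub, [10, 4, 3]) == 3
# Grouping the same operands from the right gives a different result.
assert fold_right(operator.sub, [10, 4, 3]) == 10 - (4 - 3) == 9

# Exponentiation is right-associative in Python: 2 ** 3 ** 2 means 2 ** (3 ** 2).
assert 2 ** 3 ** 2 == fold_right(operator.pow, [2, 3, 2]) == 512
assert fold_left(operator.pow, [2, 3, 2]) == (2 ** 3) ** 2 == 64
```

For an associative operator such as addition on integers, both folds return the same value, which is why the grouping can be chosen arbitrarily in that case.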

Operator Precedence

In mathematics and computer programming, the order of operations is a collection of rules that reflect conventions about which operations to perform first in order to evaluate a given mathematical expression. These rules are formalized with a ranking of the operations. The rank of an operation is called its precedence, and an operation with a ''higher'' precedence is performed before operations with ''lower'' precedence. Calculators generally perform operations with the same precedence from left to right, but some programming languages and calculators adopt different conventions. For example, multiplication is granted a higher precedence than addition, and it has been this way since the introduction of modern algebraic notation. Thus, in the expression 1 + 2 × 3, the multiplication is performed before addition, and the expression has the value 1 + (2 × 3) = 7, and not (1 + 2) × 3 = 9. When exponents were introduced in the 16th and 17th centuries, they were given precedence over both addition and multiplication and ...
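One common way to apply a precedence ranking mechanically is precedence climbing. The sketch below is a minimal evaluator under stated assumptions: only non-negative integers, the four binary operators in the hypothetical `PRECEDENCE` table, parentheses, left-to-right grouping for equal precedence, and well-formed input (no error handling).

```python
import re

# Hypothetical precedence table: a higher number binds more tightly.
PRECEDENCE = {"+": 1, "-": 1, "*": 2, "/": 2}
APPLY = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def evaluate(text):
    """Evaluate a well-formed arithmetic expression by precedence climbing."""
    tokens = re.findall(r"\d+|[-+*/()]", text)
    pos = 0

    def primary():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token == "(":
            value = expression(1)
            pos += 1  # skip the closing ")"
            return value
        return int(token)

    def expression(min_prec):
        nonlocal pos
        left = primary()
        while (pos < len(tokens) and tokens[pos] in PRECEDENCE
               and PRECEDENCE[tokens[pos]] >= min_prec):
            op = tokens[pos]
            pos += 1
            # The right operand may only absorb operators that bind more
            # tightly, so equal-precedence operators group from the left.
            right = expression(PRECEDENCE[op] + 1)
            left = APPLY[op](left, right)
        return left

    return expression(1)


print(evaluate("1 + 2 * 3"))    # 7
print(evaluate("(1 + 2) * 3"))  # 9
```

Changing the numbers in `PRECEDENCE` changes how an unparenthesized expression is grouped, which is the sense in which precedence is a convention rather than a property of the operations themselves.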

Context-free Grammar

In formal language theory, a context-free grammar (CFG) is a formal grammar whose production rules can be applied to a nonterminal symbol regardless of its context. In particular, in a context-free grammar, each production rule is of the form $A \to \alpha$, with $A$ a ''single'' nonterminal symbol and $\alpha$ a string of terminals and/or nonterminals ($\alpha$ can be empty). Regardless of which symbols surround it, the single nonterminal $A$ on the left-hand side can always be replaced by $\alpha$ on the right-hand side. This distinguishes it from a context-sensitive grammar, which can have production rules of the form $\alpha A \beta \to \alpha \gamma \beta$, with $A$ a nonterminal symbol and $\alpha$, $\beta$, and $\gamma$ strings of terminal and/or nonterminal symbols. A formal grammar is essentially a set of production rules that describe all possible strings in a given formal language. Production rules are simple replacements. For example, the first rule in the picture, : \lan ...
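To make "production rules are simple replacements" concrete, the sketch below stores a grammar as a mapping from each nonterminal to its alternative right-hand sides and enumerates short strings by repeatedly replacing the leftmost nonterminal. The balanced-parentheses grammar and the `derive` function are hypothetical examples, and the search assumes that every non-empty production adds at least one terminal (otherwise it might not terminate).

```python
from collections import deque

# Hypothetical grammar for balanced parentheses: S -> ( S ) S | (empty)
GRAMMAR = {"S": [["(", "S", ")", "S"], []]}


def derive(grammar, start, max_len):
    """Return all terminal strings of length <= max_len derivable from start."""
    results = set()
    seen = set()
    queue = deque([(start,)])
    while queue:
        form = queue.popleft()
        # Locate the leftmost nonterminal in the current sentential form.
        index = next((i for i, sym in enumerate(form) if sym in grammar), None)
        if index is None:
            results.add("".join(form))  # only terminals remain
            continue
        # Context-free: the replacement ignores the surrounding symbols.
        for production in grammar[form[index]]:
            new_form = form[:index] + tuple(production) + form[index + 1:]
            terminals = sum(1 for sym in new_form if sym not in grammar)
            if terminals <= max_len and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return sorted(results, key=lambda s: (len(s), s))


print(derive(GRAMMAR, "S", 4))  # ['', '()', '(())', '()()']
```

The replacement step never inspects `form[:index]` or `form[index + 1:]`, which is exactly the property that separates a context-free rule from a context-sensitive one.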