|

Early Stopping

In machine learning, early stopping is a form of Regularization (mathematics), regularization used to avoid overfitting when training a model with an iterative method, such as gradient descent. Such methods update the model to make it better fit the Training, validation, and test data sets, training data with each iteration. Up to a point, this improves the model's performance on data outside of the training set (e.g., the validation set). Past that point, however, improving the model's fit to the training data comes at the expense of increased generalization error. Early stopping rules provide guidance as to how many iterations can be run before the learner begins to over-fit. Early stopping rules have been employed in many different machine learning methods, with varying amounts of theoretical foundation. Background This section presents some of the basic machine-learning concepts required for a description of early stopping methods. Overfitting Machine learning algorithms tra ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task (computing), tasks without explicit Machine code, instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed Neural network (machine learning), neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Boosting (machine Learning)

In machine learning (ML), boosting is an Ensemble learning, ensemble metaheuristic for primarily reducing Bias–variance tradeoff, bias (as opposed to variance). It can also improve the Stability (learning theory), stability and accuracy of ML Statistical classification, classification and Regression analysis, regression algorithms. Hence, it is prevalent in supervised learning for converting weak learners to strong learners. The concept of boosting is based on the question posed by Michael Kearns (computer scientist), Kearns and Leslie Valiant, Valiant (1988, 1989):Michael Kearns(1988)''Thoughts on Hypothesis Boosting'' Unpublished manuscript (Machine Learning class project, December 1988) "Can a set of weak learners create a single strong learner?" A weak learner is defined as a Statistical classification, classifier that is only slightly correlated with the true classification. A strong learner is a classifier that is arbitrarily well-correlated with the true classification. R ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cross-validation (statistics)

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistics, statistical analysis will Generalization error, generalize to an independent data set. Cross-validation includes Resampling (statistics), resampling and sample splitting methods that use different portions of the data to test and train a model on different iterations. It is often used in settings where the goal is prediction, and one wants to estimate how accuracy, accurately a predictive modelling, predictive model will perform in practice. It can also be used to assess the quality of a fitted model and the stability of its parameters. In a prediction problem, a model is usually given a dataset of ''known data'' on which training is run (''training dataset''), and a dataset of ''unknown data'' (or ''first seen'' data) against which the model is tested (called the validation set, validation dataset o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistical Learning Theory

Statistical learning theory is a framework for machine learning drawing from the fields of statistics and functional analysis. Statistical learning theory deals with the statistical inference problem of finding a predictive function based on data. Statistical learning theory has led to successful applications in fields such as computer vision, speech recognition, and bioinformatics. Introduction The goals of learning are understanding and prediction. Learning falls into many categories, including supervised learning, unsupervised learning, online learning, and reinforcement learning. From the perspective of statistical learning theory, supervised learning is best understood. Supervised learning involves learning from a training set of data. Every point in the training is an input–output pair, where the input maps to an output. The learning problem consists of inferring the function that maps between the input and the output, such that the learned function can be used to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Generalization Error

For supervised learning applications in machine learning and statistical learning theory, generalization errorMohri, M., Rostamizadeh A., Talwakar A., (2018) ''Foundations of Machine learning'', 2nd ed., Boston: MIT Press (also known as the out-of-sample errorY S. Abu-Mostafa, M.Magdon-Ismail, and H.-T. Lin (2012) Learning from Data, AMLBook Press. or the risk) is a measure of how accurately an algorithm is able to predict outcomes for previously unseen data. As learning algorithms are evaluated on finite samples, the evaluation of a learning algorithm may be sensitive to sampling error. As a result, measurements of prediction error on the current data may not provide much information about the algorithm's predictive ability on new, unseen data. The generalization error can be minimized by avoiding overfitting in the learning algorithm. The performance of machine learning algorithms is commonly visualized by Learning curve (machine learning), learning curve plots that show estimates ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

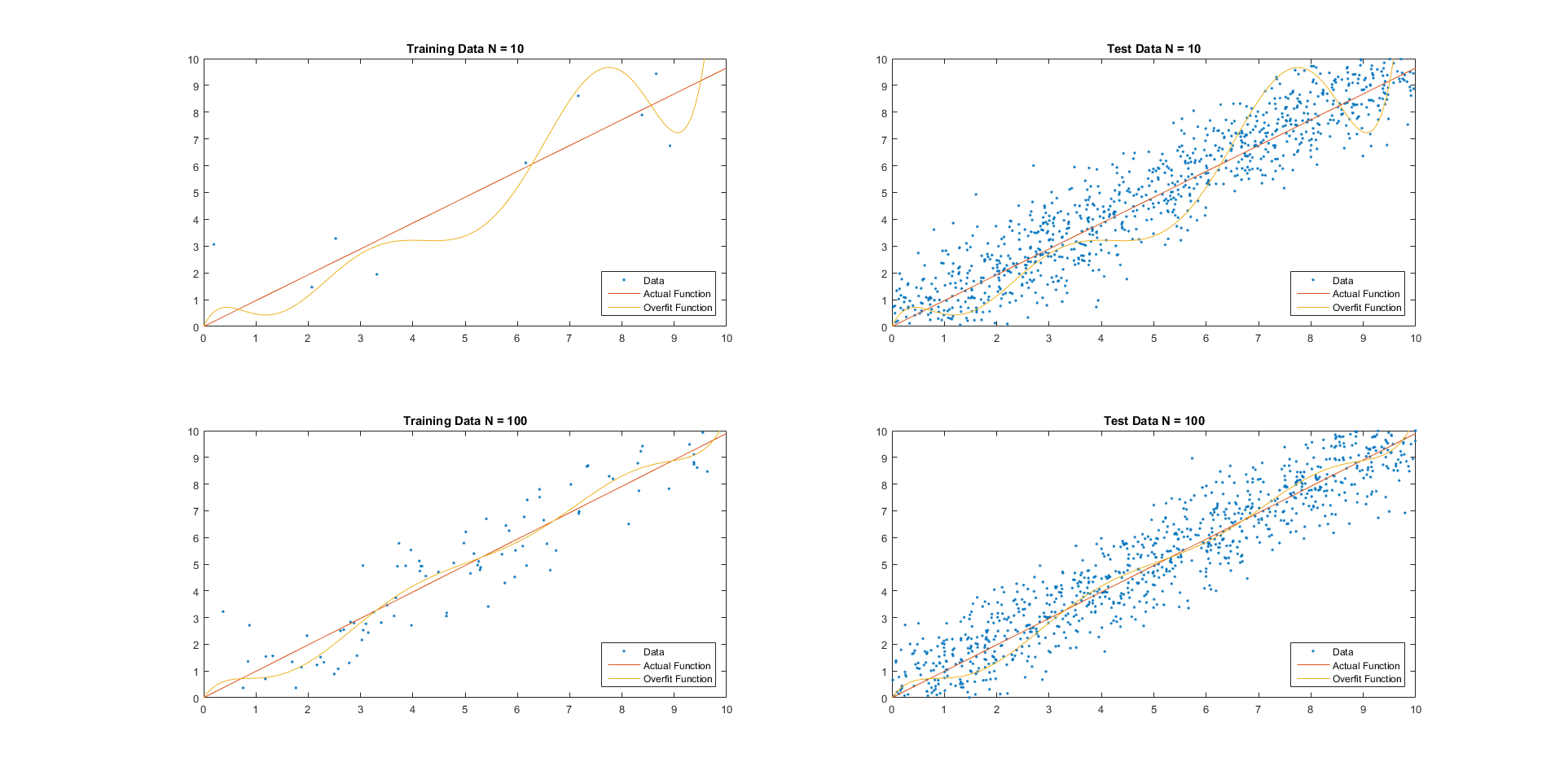

Overfitting

In mathematical modeling, overfitting is "the production of an analysis that corresponds too closely or exactly to a particular set of data, and may therefore fail to fit to additional data or predict future observations reliably". An overfitted model is a mathematical model that contains more parameters than can be justified by the data. In the special case where the model consists of a polynomial function, these parameters represent the degree of a polynomial. The essence of overfitting is to have unknowingly extracted some of the residual variation (i.e., the Statistical noise, noise) as if that variation represented underlying model structure. Underfitting occurs when a mathematical model cannot adequately capture the underlying structure of the data. An under-fitted model is a model where some parameters or terms that would appear in a correctly specified model are missing. Underfitting would occur, for example, when fitting a linear model to nonlinear data. Such a model ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cross-validation (statistics)

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistics, statistical analysis will Generalization error, generalize to an independent data set. Cross-validation includes Resampling (statistics), resampling and sample splitting methods that use different portions of the data to test and train a model on different iterations. It is often used in settings where the goal is prediction, and one wants to estimate how accuracy, accurately a predictive modelling, predictive model will perform in practice. It can also be used to assess the quality of a fitted model and the stability of its parameters. In a prediction problem, a model is usually given a dataset of ''known data'' on which training is run (''training dataset''), and a dataset of ''unknown data'' (or ''first seen'' data) against which the model is tested (called the validation set, validation dataset o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Holdout Method

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. Cross-validation includes resampling and sample splitting methods that use different portions of the data to test and train a model on different iterations. It is often used in settings where the goal is prediction, and one wants to estimate how accurately a predictive model will perform in practice. It can also be used to assess the quality of a fitted model and the stability of its parameters. In a prediction problem, a model is usually given a dataset of ''known data'' on which training is run (''training dataset''), and a dataset of ''unknown data'' (or ''first seen'' data) against which the model is tested (called the validation dataset or ''testing set''). The goal of cross-validation is to test the model's ability to predict new data th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called '' artificial neurons'', which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. These are connected by ''edges'', which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the '' activation function''. The strength of the signal at each connection is determined by a ''weight'', which adjusts during the learning process. Typically, ne ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Validation Set

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data. These input data used to build the model are usually divided into multiple data sets. In particular, three data sets are commonly used in different stages of the creation of the model: training, validation, and test sets. The model is initially fit on a training data set, which is a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model. The model (e.g. a naive Bayes classifier) is trained on the training data set using a supervised learning method, for example using optimization methods such as gradient descent or stochastic gradient descent. In practice, the training data set often consists of pairs of an input vector (or scalar) and the correspondin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Early Stopping In Non-parametric Regression

Early may refer to: Places in the United States * Early, Iowa, a city * Early, Texas, a city * Early Branch, a stream in Missouri * Early County, Georgia * Fort Early, Georgia, an early 19th century fort Music * Early B, stage name of Jamaican dancehall and reggae deejay Earlando Arrington Neil (1957–1994) * Early James, stage name of American singer-songwriter Fredrick Mullis Jr. (born 1993) * ''Early'' (Scritti Politti album), 2005 * ''Early'' (A Certain Ratio album), 2002 * Early Records, a record label Other uses * Early (name), a list of people and fictional characters with the given name or surname * Early effect, an effect in transistor physics * Early, a synonym for ''hotter'' in stellar classification See also * * The Earlies, a 21st century band * Earley (other) Earley is a town in England. Earley may also refer to: *Earley (surname), a list of people with the surname Earley * Earley (given name), a variant of the given name Earlene *Earley Lake, a lak ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Consistency (statistics)

In statistics, consistency of procedures, such as computing confidence intervals or conducting hypothesis tests, is a desired property of their behaviour as the number of items in the data set to which they are applied increases indefinitely. In particular, consistency requires that as the dataset size increases, the outcome of the procedure approaches the correct outcome. (entries for consistency, consistent estimator, consistent test) Use of the term in statistics derives from Sir Ronald Fisher in 1922. Use of the terms ''consistency'' and ''consistent'' in statistics is restricted to cases where essentially the same procedure can be applied to any number of data items. In complicated applications of statistics, there may be several ways in which the number of data items may grow. For example, records for rainfall within an area might increase in three ways: records for additional time periods; records for additional sites with a fixed area; records for extra sites obtained by ex ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |