
Disambiguation

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious and automatic, but it can come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other language-processing tasks, such as discourse analysis, improving the relevance of search engines, anaphora resolution, coherence, and inference. Because natural language reflects neurological reality, as shaped by the abilities provided by the brain's neural networks, developing computers that can perform natural language processing and machine learning has been a long-term challenge for computer science. Many techniques have been researched, including dictionary-based methods that use the knowledge encoded in lexical resources, supervised machine l ...
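
As an illustration of the dictionary-based idea mentioned above, the following is a minimal sketch of a simplified Lesk-style overlap heuristic, not a production method. The sense inventory `SENSES`, the sense labels, the stopword list, and the function names are all hypothetical and chosen only for this example; a real system would take its glosses from a lexical resource.

```python
import re

# Hypothetical toy sense inventory (assumption): a real system would read
# glosses from a machine-readable dictionary or similar lexical resource.
SENSES = {
    "bank": {
        "bank/finance": "an institution that accepts deposits and lends money",
        "bank/river": "the sloping land beside a river or stream",
    }
}

# Small illustrative stopword list (assumption), so that function words
# do not count as evidence for a sense.
STOPWORDS = {"a", "an", "and", "at", "by", "in", "of", "on", "or", "that", "the", "to"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    return {t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS}


def simplified_lesk(word, sentence, senses=SENSES):
    """Return the sense whose gloss shares the most words with the sentence.

    Ties are broken in favour of the sense listed first.
    """
    context = tokenize(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense_id, gloss in senses[word].items():
        overlap = len(context & tokenize(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense_id, overlap
    return best_sense


print(simplified_lesk("bank", "She sat on the bank of the river and watched the stream"))
print(simplified_lesk("bank", "He went to the bank to deposit money"))
```

The heuristic relies on exact word overlap only, so it fails whenever the context and the gloss use different wording for the same idea; that limitation is one reason the other families of techniques exist.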

Word Sense

In linguistics, a word sense is one of the meanings of a word. For example, a dictionary may have over 50 different senses of the word "play", each of these having a different meaning based on the context of the word's usage in a sentence, as follows: In each sentence different collocates of "play" signal its different meanings. People and computers, as they read words, must use a process called word-sense disambiguation (R. Navigli, "Word Sense Disambiguation: A Survey", ACM Computing Surveys, 41(2), 2009, pp. 1-69) to reconstruct the likely intended meaning of a word. This process uses context to narrow the possible senses down to the probable ones. The context includes such things as the ideas conveyed by adjacent words and nearby phrases, the known or probable purpose and register of the conversation or document, and the orientation (time and place) implied or expressed. The disambiguation is thus context-sensitive. Advanced semantic analysis has resulted in a s ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History: Natural language processing has its roots in the 1950s. In 1950, Alan Turing published an article titled "Computing Machinery and Intelligence", which proposed what is now called the Turing test as a criterion of intelligence, ...

Context (Language Use)

In semiotics, linguistics, sociology and anthropology, context refers to those objects or entities which surround a "focal event", in these disciplines typically a communicative event, of some kind. Context is "a frame that surrounds the event and provides resources for its appropriate interpretation". It is thus a relative concept, only definable with respect to some focal event within a frame, not independently of that frame. In linguistics: In the 19th century, it was debated whether the most fundamental principle in language was contextuality or compositionality, and compositionality was usually preferred (Janssen, T. M. (2012), "Compositionality: Its historic context", in M. Werning, W. Hinzen, & E. Machery (Eds.), The Oxford handbook of compositionality, pp. 19-46, Oxford University Press). Verbal context refers to the text or speech surrounding an expression (word, sentence, or speech act). Verbal context influences the way an expression is understood; hence the norm of n ...

Consciousness

Consciousness, at its simplest, is sentience and awareness of internal and external existence. However, the lack of definitions has led to millennia of analyses, explanations and debates by philosophers, theologians, linguists, and scientists. Opinions differ about what exactly needs to be studied or even considered consciousness. In some explanations, it is synonymous with the mind, and at other times, an aspect of mind. In the past, it was one's "inner life", the world of introspection, of private thought, imagination and volition. Today, it often includes any kind of cognition, experience, feeling or perception. It may be awareness, awareness of awareness, or self-awareness, either continuously changing or not. The disparate range of research, notions and speculations raises the question of whether the right questions are being asked. Examples of the range of descriptions, definitions or explanations are: simple wakefulness, one's sense of selfhood or soul ...

Ambiguity

Ambiguity is the type of meaning in which a phrase, statement or resolution is not explicitly defined, making several interpretations plausible. A common aspect of ambiguity is uncertainty. It is thus an attribute of any idea or statement whose intended meaning cannot be definitively resolved according to a rule or process with a finite number of steps. (The "ambi-" part of the term reflects an idea of "two", as in "two meanings".) The concept of ambiguity is generally contrasted with vagueness. In ambiguity, specific and distinct interpretations are permitted (although some may not be immediately obvious), whereas with information that is vague, it is difficult to form any interpretation at the desired level of specificity. Linguistic forms: Lexical ambiguity is contrasted with semantic ambiguity. The former represents a choice between a finite number of known and meaningful context-dependent interpretations. The latter represents a choice between any number of p ...

Anaphora Resolution

In linguistics, anaphora is the use of an expression whose interpretation depends upon another expression in context (its antecedent or postcedent). In a narrower sense, anaphora is the use of an expression that depends specifically upon an antecedent expression and thus is contrasted with cataphora, which is the use of an expression that depends upon a postcedent expression. The anaphoric (referring) term is called an anaphor. For example, in the sentence "Sally arrived, but nobody saw her", the pronoun "her" is an anaphor, referring back to the antecedent "Sally". In the sentence "Before her arrival, nobody saw Sally", the pronoun "her" refers forward to the postcedent "Sally", so "her" is now a cataphor (and an anaphor in the broader, but not the narrower, sense). Usually, an anaphoric expression is a pro-form or some other kind of deictic (contextually dependent) expression. Both anaphora and cataphora are species of endophora, referring to something menti ...

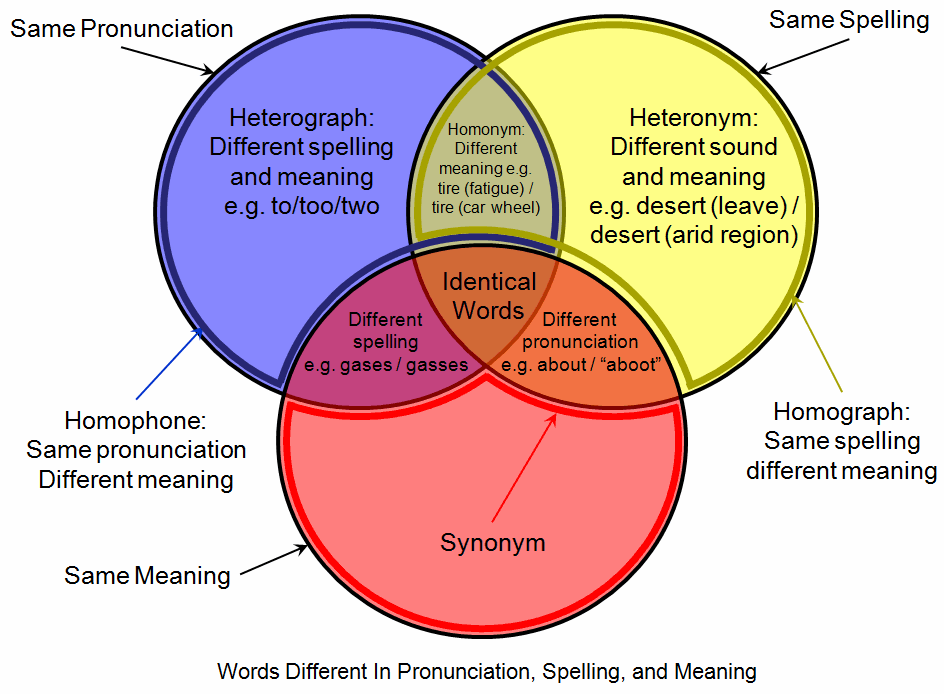

Homograph

A homograph (from the Greek ὁμός, homós, "same" and γράφω, gráphō, "write") is a word that shares the same written form as another word but has a different meaning. However, some dictionaries insist that the words must also be pronounced differently, while the Oxford English Dictionary says that the words should also be of "different origin". In this vein, The Oxford Guide to Practical Lexicography lists various types of homographs, including those in which the words are distinguished by belonging to a different word class, such as "hit", the verb "to strike", and "hit", the noun "a blow". If, when spoken, the meanings may be distinguished by different pronunciations, the words are also heteronyms. Words with the same writing and pronunciation (i.e. words that are both homographs and homophones) are considered homonyms. However, in a looser sense the term "homonym" may be applied to words with the same writing or pronunciation. Homograph disambigua ...
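
The distinctions drawn above can be written down as a small decision rule. The sketch below is only illustrative: the function name `classify_pair` and the informal pronunciation strings are assumptions made for this example, and it uses the broad definition of "homograph" (same written form) together with the stricter sense of "homonym" (same writing and pronunciation).

```python
def classify_pair(spelling_a, pron_a, spelling_b, pron_b):
    """Label two words with different meanings by spelling and pronunciation.

    Pronunciations are informal strings (assumption); any consistent
    notation works because they are only compared for equality.
    """
    same_spelling = spelling_a == spelling_b
    same_pron = pron_a == pron_b
    labels = set()
    if same_spelling:
        labels.add("homograph")   # same written form
    if same_pron:
        labels.add("homophone")   # same spoken form
    if same_spelling and same_pron:
        labels.add("homonym")     # strict sense: both written and spoken forms match
    if same_spelling and not same_pron:
        labels.add("heteronym")   # same writing, distinguished when spoken
    return labels


# "hit" (verb) and "hit" (noun): written and pronounced alike.
print(sorted(classify_pair("hit", "hit", "hit", "hit")))
# "lead" (the metal) and "lead" (to guide): written alike, pronounced differently.
print(sorted(classify_pair("lead", "led", "lead", "leed")))
```

Under the looser sense of "homonym" mentioned in the text, the third condition would use `or` instead of `and`.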

Discourse

Discourse is a generalization of the notion of a conversation to any form of communication. Discourse is a major topic in social theory, with work spanning fields such as sociology, anthropology, continental philosophy, and discourse analysis. Following pioneering work by Michel Foucault, these fields view discourse as a system of thought, knowledge, or communication that constructs our experience of the world. Since control of discourse amounts to control of how the world is perceived, social theory often studies discourse as a window into power. Within theoretical linguistics, discourse is understood more narrowly as linguistic information exchange and was one of the major motivations for the framework of dynamic semantics, in which expressions' denotations are equated with their ability to update a discourse context. Social theory: In the humanities and social sciences, discourse describes a formal way of thinking that can be expressed through language. Discourse is a so ...

Language

Language is a structured system of communication. The structure of a language is its grammar and the free components are its vocabulary. Languages are the primary means by which humans communicate, and may be conveyed through a variety of methods, including spoken, sign, and written language. Many languages, including the most widely spoken ones, have writing systems that enable sounds or signs to be recorded for later reactivation. Human language is highly variable between cultures and across time. Human languages have the properties of productivity and displacement, and rely on social convention and learning. Estimates of the number of human languages in the world vary. Precise estimates depend on an arbitrary distinction (dichotomy) established between languages and dialects. Natural languages are spoken, signed, or both; however, any language can be encoded into secondary media using auditory, visual, or tactile stimuli – for example, writing ...

Yorick Wilks

Yorick Wilks FBCS (born 27 October 1939), a British computer scientist, is emeritus professor of artificial intelligence at the University of Sheffield, visiting professor of artificial intelligence at Gresham College (a post created especially for him), former senior research fellow at the Oxford Internet Institute, senior scientist at the Florida Institute for Human and Machine Cognition, and a member of the Epiphany Philosophers. Biography: Wilks was educated at Torquay Boys' Grammar School, followed by Pembroke College, Cambridge, where he read Philosophy, joined the Epiphany Philosophers and obtained his Doctor of Philosophy degree (1968) under Professor R. B. Braithwaite for the thesis "Argument and Proof"; he was an early pioneer in meaning-based approaches to the understanding of natural language content by computers. His main early contribution in the 1970s was called "Preference Semantics" (Wilks, 1973; Wilks and Fass, 1992), an algorithmic method for assi ...

Training Set

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data. The input data used to build the model are usually divided into multiple data sets. In particular, three data sets are commonly used in different stages of the creation of the model: training, validation and test sets. The model is initially fit on a training data set, which is a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model. The model (e.g. a naive Bayes classifier) is trained on the training data set using a supervised learning method, for example using optimization methods such as gradient descent or stochastic gradient descent. In practice, the training data set often consists of pairs of an input vector (or scalar) and the correspo ...
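
The three-way division described above can be sketched as follows. This is one simple way to do it under stated assumptions, not a prescribed procedure: the function name `split_dataset`, the 80/10/10 default fractions, and the fixed seed are all choices made for this example.

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle the examples and split them into training, validation and test sets.

    The fractions and the seed are illustrative defaults (assumption);
    whatever remains after the training and validation portions becomes
    the test set.
    """
    if not (0 < train_frac < 1 and 0 <= val_frac < 1 and train_frac + val_frac < 1):
        raise ValueError("fractions must leave a non-empty share for the test set")
    shuffled = list(examples)              # copy, so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Toy data: pairs of an input value and a label, as in supervised learning.
data = [(x, x % 2) for x in range(100)]
train, val, test = split_dataset(data)
print(len(train), len(val), len(test))
```

Shuffling before slicing avoids a split that follows the original ordering of the data; the three resulting sets are disjoint and together cover every example.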

Corpus Linguistics

Corpus linguistics is the study of a language as that language is expressed in its text corpus (plural "corpora"), its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field, the natural context ("realia") of that language, with minimal experimental interference. The text-corpus method uses the body of texts written in any natural language to derive the set of abstract rules which govern that language. Those results can be used to explore the relationships between that subject language and other languages which have undergone a similar analysis. The first such corpora were manually derived from source texts, but now that work is automated. Corpora have been used not only for linguistics research but also to compile dictionaries (starting with The American Heritage Dictionary of the English Language in 1969) and grammar guides, such as A Comprehensive Grammar ...