|

Concurrent Hash Table

A concurrent hash table (concurrent hash map) is an implementation of hash tables allowing ''concurrent access'' by ''multiple'' threads using a hash function. Concurrent hash tables represent a key concurrent data structure for use in concurrent computing which allow multiple threads to more efficiently cooperate for a computation among shared data. Due to the natural problems associated with concurrent access - namely contention - the way and scope in which the table can be concurrently accessed differs depending on the implementation. Furthermore, the resulting speed up might not be linear with the amount of threads used as contention needs to be resolved, producing processing overhead. There exist multiple solutions to mitigate the effects of contention, that each preserve the correctness of operations on the table. As with their sequential counterpart, concurrent hash tables can be generalized and extended to fit broader applications, such as allowing more complex d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deadlock

In concurrent computing, deadlock is any situation in which no member of some group of entities can proceed because each waits for another member, including itself, to take action, such as sending a message or, more commonly, releasing a lock. Deadlocks are a common problem in multiprocessing systems, parallel computing, and distributed systems, because in these contexts systems often use software or hardware locks to arbitrate shared resources and implement process synchronization. In an operating system, a deadlock occurs when a process or thread enters a waiting state because a requested system resource is held by another waiting process, which in turn is waiting for another resource held by another waiting process. If a process remains indefinitely unable to change its state because resources requested by it are being used by another process that itself is waiting, then the system is said to be in a deadlock. In a communications system, deadlocks occur mainly due to l ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linux Kernel

The Linux kernel is a free and open-source, monolithic, modular, multitasking, Unix-like operating system kernel. It was originally authored in 1991 by Linus Torvalds for his i386-based PC, and it was soon adopted as the kernel for the GNU operating system, which was written to be a free (libre) replacement for Unix. Linux is provided under the GNU General Public License version 2 only, but it contains files under other compatible licenses. Since the late 1990s, it has been included as part of a large number of operating system distributions, many of which are commonly also called Linux. Linux is deployed on a wide variety of computing systems, such as embedded devices, mobile devices (including its use in the Android operating system), personal computers, servers, mainframes, and supercomputers. It can be tailored for specific architectures and for several usage scenarios using a family of simple commands (that is, without the need of manually editing its source c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Synchronization (computer Science)

In computer science, synchronization refers to one of two distinct but related concepts: synchronization of processes, and synchronization of data. ''Process synchronization'' refers to the idea that multiple processes are to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action. '' Data synchronization'' refers to the idea of keeping multiple copies of a dataset in coherence with one another, or to maintain data integrity. Process synchronization primitives are commonly used to implement data synchronization. The need for synchronization The need for synchronization does not arise merely in multi-processor systems but for any kind of concurrent processes; even in single processor systems. Mentioned below are some of the main needs for synchronization: '' Forks and Joins:'' When a job arrives at a fork point, it is split into N sub-jobs which are then serviced by n tasks. After being serviced, each sub-job waits until ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Phase Concurrent Hashtable

Phase or phases may refer to: Science *State of matter, or phase, one of the distinct forms in which matter can exist *Phase (matter), a region of space throughout which all physical properties are essentially uniform * Phase space, a mathematical space in which each possible state of a physical system is represented by a point — this equilibrium point is also referred to as a "microscopic state" **Phase space formulation, a formulation of quantum mechanics in phase space *Phase (waves), the position of a point in time (an instant) on a waveform cycle **Instantaneous phase, generalization for both cyclic and non-cyclic phenomena * AC phase, the phase offset between alternating current electric power in multiple conducting wires ** Single-phase electric power, distribution of AC electric power in a system where the voltages of the supply vary in unison **Three-phase electric power, a common method of AC electric power generation, transmission, and distribution *Phase problem, the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Starvation (computer Science)

In computer science, resource starvation is a problem encountered in concurrent computing where a process is perpetually denied necessary resources to process its work. Starvation may be caused by errors in a scheduling or mutual exclusion algorithm, but can also be caused by resource leaks, and can be intentionally caused via a denial-of-service attack such as a fork bomb. When starvation is impossible in a concurrent algorithm, the algorithm is called starvation-free, lockout-freed or said to have finite bypass. This property is an instance of liveness, and is one of the two requirements for any mutual exclusion algorithm; the other being correctness. The name "finite bypass" means that any process (concurrent part) of the algorithm is bypassed at most a finite number times before being allowed access to the shared resource. Scheduling Starvation is usually caused by an overly simplistic scheduling algorithm. For example, if a (poorly designed) multi-tasking system alw ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

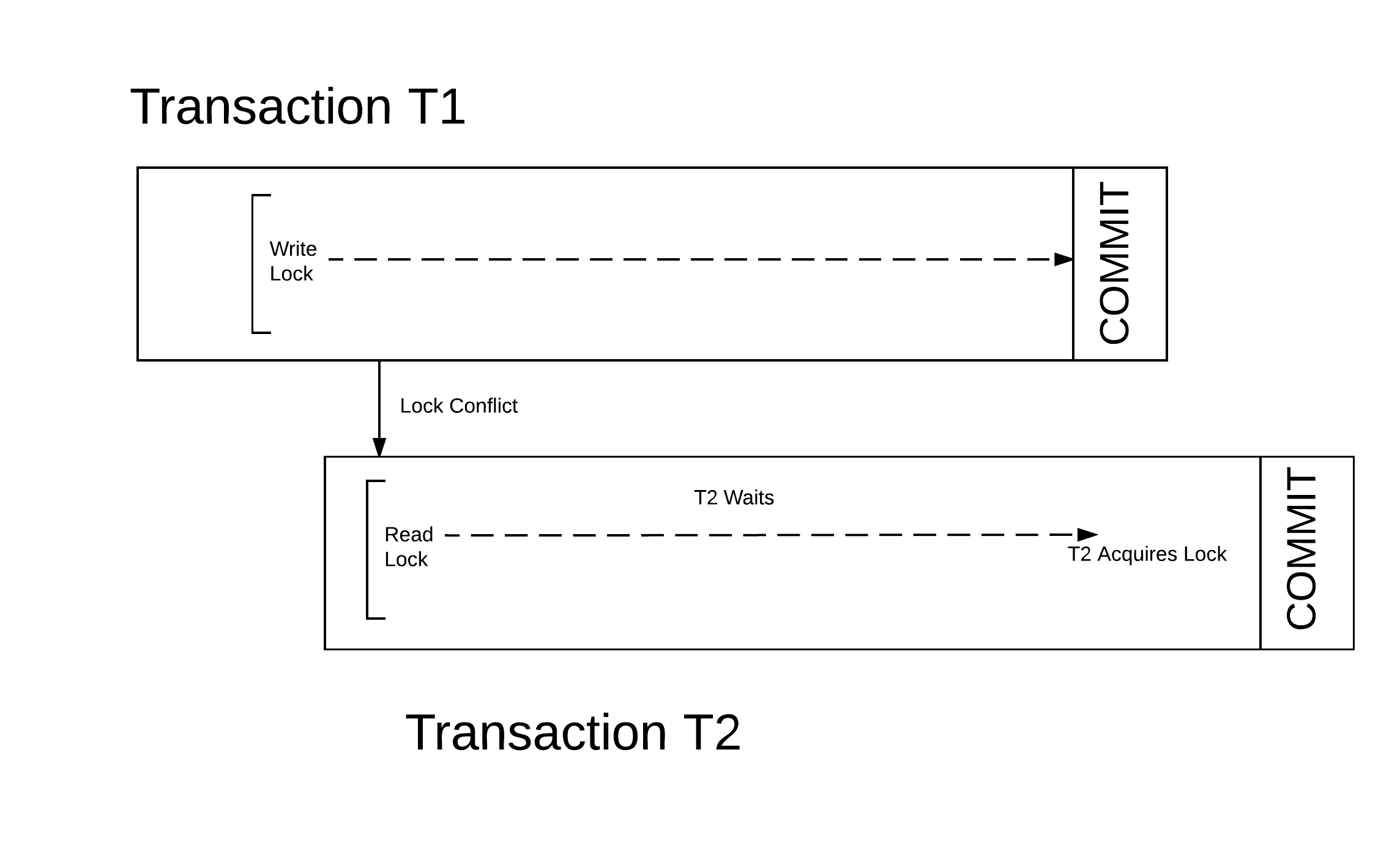

Lock (computer Science)

In computer science, a lock or mutex (from mutual exclusion) is a synchronization primitive: a mechanism that enforces limits on access to a resource when there are many threads of execution. A lock is designed to enforce a mutual exclusion concurrency control policy, and with a variety of possible methods there exists multiple unique implementations for different applications. Types Generally, locks are ''advisory locks'', where each thread cooperates by acquiring the lock before accessing the corresponding data. Some systems also implement ''mandatory locks'', where attempting unauthorized access to a locked resource will force an exception in the entity attempting to make the access. The simplest type of lock is a binary semaphore. It provides exclusive access to the locked data. Other schemes also provide shared access for reading data. Other widely implemented access modes are exclusive, intend-to-exclude and intend-to-upgrade. Another way to classify locks is by what happ ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transactional Synchronization Extensions

Transactional Synchronization Extensions (TSX), also called Transactional Synchronization Extensions New Instructions (TSX-NI), is an extension to the x86 instruction set architecture (ISA) that adds hardware transactional memory support, speeding up execution of multi-threaded software through lock elision. According to different benchmarks, TSX/TSX-NI can provide around 40% faster applications execution in specific workloads, and 4–5 times more database transactions per second (TPS). TSX/TSX-NI was documented by Intel in February 2012, and debuted in June 2013 on selected Intel microprocessors based on the Haswell microarchitecture. Haswell processors below 45xx as well as R-series and K-series (with unlocked multiplier) SKUs do not support TSX/TSX-NI. In August 2014, Intel announced a bug in the TSX/TSX-NI implementation on current steppings of Haswell, Haswell-E, Haswell-EP and early Broadwell CPUs, which resulted in disabling the TSX/TSX-NI feature on affected CPU ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Database Transaction

A database transaction symbolizes a unit of work, performed within a database management system (or similar system) against a database, that is treated in a coherent and reliable way independent of other transactions. A transaction generally represents any change in a database. Transactions in a database environment have two main purposes: # To provide reliable units of work that allow correct recovery from failures and keep a database consistent even in cases of system failure. For example: when execution prematurely and unexpectedly stops (completely or partially) in which case many operations upon a database remain uncompleted, with unclear status. # To provide isolation between programs accessing a database concurrently. If this isolation is not provided, the programs' outcomes are possibly erroneous. In a database management system, a transaction is a single unit of logic or work, sometimes made up of multiple operations. Any logical calculation done in a consistent mode in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transactional Memory

In computer science and engineering, transactional memory attempts to simplify concurrent programming by allowing a group of load and store instructions to execute in an atomic way. It is a concurrency control mechanism analogous to database transactions for controlling access to shared memory in concurrent computing. Transactional memory systems provide high-level abstraction as an alternative to low-level thread synchronization. This abstraction allows for coordination between concurrent reads and writes of shared data in parallel systems. Motivation In concurrent programming, synchronization is required when parallel threads attempt to access a shared resource. Low-level thread synchronization constructs such as locks are pessimistic and prohibit threads that are outside a critical section from making any changes. The process of applying and releasing locks often functions as additional overhead in workloads with little conflict among threads. Transactional memory provides ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Fetch-and-add

In computer science, the fetch-and-add CPU instruction (FAA) atomically increments the contents of a memory location by a specified value. That is, fetch-and-add performs the operation :increment the value at address by , where is a memory location and is some value, and return the original value at in such a way that if this operation is executed by one process in a concurrent system, no other process will ever see an intermediate result. Fetch-and-add can be used to implement concurrency control structures such as mutex locks and semaphores. Overview The motivation for having an atomic fetch-and-add is that operations that appear in programming languages as : are not safe in a concurrent system, where multiple processes or threads are running concurrently (either in a multi-processor system, or preemptively scheduled onto some single-core systems). The reason is that such an operation is actually implemented as multiple machine instructions: # load into a register; ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compare-and-swap

In computer science, compare-and-swap (CAS) is an atomic instruction used in multithreading to achieve synchronization. It compares the contents of a memory location with a given value and, only if they are the same, modifies the contents of that memory location to a new given value. This is done as a single atomic operation. The atomicity guarantees that the new value is calculated based on up-to-date information; if the value had been updated by another thread in the meantime, the write would fail. The result of the operation must indicate whether it performed the substitution; this can be done either with a simple boolean response (this variant is often called compare-and-set), or by returning the value read from the memory location (''not'' the value written to it). Overview A compare-and-swap operation is an atomic version of the following pseudocode, where denotes access through a pointer: function cas(p: pointer to int, old: int, new: int) is if *p ≠ old ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |