Bit

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either 1 or 0, but other representations such as ''true''/''false'', ''yes''/''no'', ''on''/''off'', or ''+''/''−'' are also commonly used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. A bit may be physically implemented with a two-state device. The symbol for the binary digit is either "bit", as recommended by the IEC 80000-13:2008 standard, or the lowercase character "b", as recommended by the IEEE 1541-2002 standard. A contiguous group of binary digits is commonly called a ''bit string'', a bit vector, or a single-dimensional (or multi-dimensional) ''bit array''. A group of eight ...

Byte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol (RFC 791) refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness. The first bit is number 0, making the eighth bit number 7. The size of the byte has historically been hardware-dependent, and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory wo ...
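The bit numbering described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the convention in which bit 0 is the least significant bit; the function names `bit_at` and `bits_lsb_first` are hypothetical and not taken from any standard.

```python
def bit_at(octet: int, position: int) -> int:
    """Return bit `position` of an 8-bit value (assumption: bit 0 is the least significant bit)."""
    if not 0 <= octet <= 0xFF:
        raise ValueError("octet must be in the range 0..255")
    if not 0 <= position <= 7:
        raise ValueError("position must be in the range 0..7")
    # Shift the wanted bit down to position 0, then mask everything else off.
    return (octet >> position) & 1


def bits_lsb_first(octet: int) -> list:
    """List the eight bits of an octet, starting with bit 0 and ending with bit 7."""
    return [bit_at(octet, i) for i in range(8)]
```

Under the opposite numbering (7 down to 0), the same list would simply be read in reverse.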

Bit String

A bit array (also known as bitmask, bit map, bit set, bit string, or bit vector) is an array data structure that compactly stores bits. It can be used to implement a simple set data structure. A bit array is effective at exploiting bit-level parallelism in hardware to perform operations quickly. A typical bit array stores ''kw'' bits, where ''w'' is the number of bits in the unit of storage, such as a byte or word, and ''k'' is some nonnegative integer. If ''w'' does not divide the number of bits to be stored, some space is wasted due to internal fragmentation. Definition: A bit array is a mapping from some domain (almost always a range of integers) to values in the set {0, 1}. The values can be interpreted as dark/light, absent/present, locked/unlocked, valid/invalid, et cetera. The point is that there are only two possible values, so they can be stored in one bit. As with other arrays, access to a single bit can be managed by applying an index to the array. Assuming its size ...

Bit Array

A bit array (also known as bitmask, bit map, bit set, bit string, or bit vector) is an array data structure that compactly stores bits. It can be used to implement a simple set data structure. A bit array is effective at exploiting bit-level parallelism in hardware to perform operations quickly. A typical bit array stores ''kw'' bits, where ''w'' is the number of bits in the unit of storage, such as a byte or word, and ''k'' is some nonnegative integer. If ''w'' does not divide the number of bits to be stored, some space is wasted due to internal fragmentation. Definition: A bit array is a mapping from some domain (almost always a range of integers) to values in the set {0, 1}. The values can be interpreted as dark/light, absent/present, locked/unlocked, valid/invalid, et cetera. The point is that there are only two possible values, so they can be stored in one bit. As with other arrays, access to a single bit can be managed by applying an index to the array. Assuming its size ...
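As a rough sketch of the indexing idea, the following Python class packs bits into bytes, so the storage unit width ''w'' is 8. The class name `BitArray` and its methods are hypothetical, chosen only for this illustration; real libraries differ in layout and API.

```python
class BitArray:
    """Fixed-size array of bits packed into bytes (assumption: w = 8 bits per storage unit)."""

    UNIT_BITS = 8

    def __init__(self, size: int) -> None:
        if size < 0:
            raise ValueError("size must be nonnegative")
        self.size = size
        # Round up to whole units; unused bits in the last unit are the wasted space.
        self._units = bytearray((size + self.UNIT_BITS - 1) // self.UNIT_BITS)

    def _locate(self, index: int):
        """Map a bit index to (unit number, offset inside that unit)."""
        if not 0 <= index < self.size:
            raise IndexError("bit index out of range")
        return divmod(index, self.UNIT_BITS)

    def get(self, index: int) -> int:
        unit, offset = self._locate(index)
        return (self._units[unit] >> offset) & 1

    def set(self, index: int, value: int) -> None:
        unit, offset = self._locate(index)
        if value:
            self._units[unit] |= 1 << offset
        else:
            self._units[unit] &= ~(1 << offset) & 0xFF
```

A 10-bit array built this way occupies two bytes, leaving six bits unused, which is the internal fragmentation mentioned above.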

Units Of Information

In computing and telecommunications, a unit of information is the capacity of some standard data storage system or communication channel, used to measure the capacities of other systems and channels. In information theory, units of information are also used to measure information contained in messages and the entropy of random variables. The most commonly used units of data storage capacity are the bit, the capacity of a system that has only two states, and the byte (or octet), which is equivalent to eight bits. Multiples of these units can be formed with the SI prefixes (power-of-ten prefixes) or the newer IEC binary prefixes (power-of-two prefixes). Primary units: In 1928, Ralph Hartley observed a fundamental storage principle, which was further formalized by Claude Shannon in 1945: the information that can be stored in a system is proportional to the logarithm of ''N'' possible states of that system, denoted log_''b'' ''N''. Changing the base of the logarithm from ''b'' to a ...

Unit Of Information

In computing and telecommunications, a unit of information is the capacity of some standard data storage system or communication channel, used to measure the capacities of other systems and channels. In information theory, units of information are also used to measure information contained in messages and the entropy of random variables. The most commonly used units of data storage capacity are the bit, the capacity of a system that has only two states, and the byte (or octet), which is equivalent to eight bits. Multiples of these units can be formed with the SI prefixes (power-of-ten prefixes) or the newer IEC binary prefixes (power-of-two prefixes). Primary units: In 1928, Ralph Hartley observed a fundamental storage principle, which was further formalized by Claude Shannon in 1945: the information that can be stored in a system is proportional to the logarithm of ''N'' possible states of that system, denoted log_''b'' ''N''. Changing the base of the logarithm from ''b'' to a d ...
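The logarithmic relation can be tried out numerically. The sketch below assumes a system with `n_states` equally distinguishable states and reports its capacity for a chosen logarithm base; the function name `information_capacity` is hypothetical. Base 2 yields a value in bits, and other bases only rescale the result by a constant factor.

```python
import math


def information_capacity(n_states: int, base: float = 2.0) -> float:
    """Capacity of a system with `n_states` states, as the base-`base` logarithm of the state count."""
    if n_states < 1:
        raise ValueError("a system needs at least one state")
    if base <= 1:
        raise ValueError("base must be greater than 1")
    # Change of base: log_base(N) = ln(N) / ln(base).
    return math.log(n_states) / math.log(base)
```

For instance, a two-state system has a capacity of one bit, and a system with 256 states has a capacity of about eight bits, matching the byte described above.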

Teleprinter

A teleprinter (teletypewriter, teletype or TTY) is an electromechanical device that can be used to send and receive typed messages through various communications channels, in both point-to-point and point-to-multipoint configurations. Initially they were used in telegraphy, which developed in the late 1830s and 1840s as the first use of electrical engineering, though teleprinters were not used for telegraphy until 1887 at the earliest. The machines were adapted to provide a user interface to early mainframe computers and minicomputers, sending typed data to the computer and printing the response. Some models could also be used to create punched tape for data storage (either from typed input or from data received from a remote source) and to read back such tape for local printing or transmission. Teleprinters could use a variety of different communication media. These included a simple pair of wires; dedicated non-switched telephone circuits (leased lines); switched ne ...

Binary Number

A binary number is a number expressed in the base-2 numeral system or binary numeral system, a method of mathematical expression which uses only two symbols: typically "0" (zero) and "1" (one). The base-2 numeral system is a positional notation with a radix of 2. Each digit is referred to as a bit, or binary digit. Because it can be implemented straightforwardly in digital electronic circuitry using logic gates, the binary system is used by almost all modern computers and computer-based devices in preference to other numeral systems, owing to its simplicity and its noise immunity in physical implementation. History: The modern binary number system was studied in Europe in the 16th and 17th centuries by Thomas Harriot, Juan Caramuel y Lobkowitz, and Gottfried Leibniz. However, systems related to binary numbers appeared earlier in multiple cultures, including ancient Egypt, China, and India. Leibniz was spec ...
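Positional notation with a radix of 2 can be illustrated with a pair of conversion routines. This is a minimal sketch for nonnegative integers only; the names `to_binary` and `from_binary` are hypothetical, and Python's built-in `bin` and `int(text, 2)` provide the same conversions.

```python
def to_binary(n: int) -> str:
    """Write a nonnegative integer in base 2 by repeated division by the radix."""
    if n < 0:
        raise ValueError("only nonnegative integers are handled in this sketch")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        n, remainder = divmod(n, 2)
        digits.append(str(remainder))  # remainders come out least significant first
    return "".join(reversed(digits))


def from_binary(text: str) -> int:
    """Evaluate a string of binary digits, weighting each position by a power of 2."""
    if not text or any(ch not in "01" for ch in text):
        raise ValueError("expected a non-empty string of 0s and 1s")
    value = 0
    for ch in text:
        value = value * 2 + int(ch)  # shift the running value one position left, add the new bit
    return value
```

For example, the decimal number 10 is written as 1010 in binary, since 10 = 1·8 + 0·4 + 1·2 + 0·1.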

Information Entropy

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p: \mathcal{X} \to [0, 1]$, the entropy is

$$\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)],$$

where $\Sigma$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory d ...
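The entropy sum can be computed directly for a finite distribution. The sketch below assumes the distribution is given as a list of probabilities that sum to 1; the function name `shannon_entropy` is hypothetical. Terms with zero probability are skipped, following the usual convention that they contribute nothing to the sum.

```python
import math


def shannon_entropy(probabilities, base: float = 2.0) -> float:
    """Entropy of a discrete distribution; base 2 gives bits, base e nats, base 10 hartleys."""
    probabilities = list(probabilities)
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be nonnegative")
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Skip p == 0 terms: they are treated as contributing zero to the sum.
    total = sum(p * math.log(p) for p in probabilities if p > 0)
    return -total / math.log(base)
```

A uniform distribution over four outcomes has an entropy of two bits, while a distribution that puts all its mass on one outcome has an entropy of zero.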

Nibble

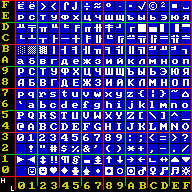

In computing, a nibble (occasionally nybble, nyble, or nybl to match the spelling of byte) is a four-bit aggregation, or half an octet. It is also known as half-byte or tetrade. In a networking or telecommunication context, the nibble is often called a semi-octet, quadbit, or quartet. A nibble has sixteen (2^4) possible values. A nibble can be represented by a single hexadecimal digit (0–F) and called a hex digit. A full byte (octet) is represented by two hexadecimal digits (00–FF); therefore, it is common to display a byte of information as two nibbles. Sometimes the set of all 256 byte values is represented as a table, which gives easily readable hexadecimal codes for each value. Four-bit computer architectures use groups of four bits as their fundamental unit. Such architectures were used in early microprocessors, pocket calculators and pocket computers. They continue to be used in some microcontrollers. In this context, 4-bit groups were sometimes also called ''charact ...
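The correspondence between nibbles and hexadecimal digits can be sketched as follows. The helper names `split_nibbles`, `join_nibbles`, and `to_hex_digits` are hypothetical and exist only for this illustration.

```python
HEX_DIGITS = "0123456789ABCDEF"


def split_nibbles(byte: int):
    """Split an 8-bit value into its (high, low) four-bit halves."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in the range 0..255")
    return byte >> 4, byte & 0x0F


def join_nibbles(high: int, low: int) -> int:
    """Recombine two four-bit values into one 8-bit value."""
    if not (0 <= high <= 0xF and 0 <= low <= 0xF):
        raise ValueError("each nibble must be in the range 0..15")
    return (high << 4) | low


def to_hex_digits(byte: int) -> str:
    """Render a byte as two hexadecimal digits, one per nibble."""
    high, low = split_nibbles(byte)
    return HEX_DIGITS[high] + HEX_DIGITS[low]
```

For example, the byte 0xA7 splits into the nibbles 0xA and 0x7, which is why it is displayed as the two hex digits "A7".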

Punched Card

A punched card (also punch card or punched-card) is a piece of stiff paper that holds digital data represented by the presence or absence of holes in predefined positions. Punched cards were once common in data processing applications or to directly control automated machinery. Punched cards were widely used through much of the 20th century in the data processing industry, where specialized and increasingly complex unit record machines, organized into semiautomatic data processing systems, used punched cards for data input, output, and storage. The IBM 12-row/80-column punched card format came to dominate the industry. Many early digital computers used punched cards as the primary medium for input of both computer programs and data. While punched cards are now obsolete as a storage medium, as of 2012, some voting machines still used punched cards to record votes. They also had a significant cultural impact. History: The idea of control and data storage via punched hole ...

Information Theory

Information theory is the scientific study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley, in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes). Some other important measures in information theory are mutual information, channel capacity, error exponents, and relative entropy. Important sub-fields of information theory include sourc ...
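The coin and die comparison can be worked out explicitly. Assuming base-2 logarithms, so that the results are in bits, and writing $H$ for entropy (a notational choice made here), a uniform distribution over $n$ outcomes has entropy $\log_2 n$:

```latex
\begin{align*}
H(\text{coin}) &= -\sum_{i=1}^{2} \tfrac{1}{2}\log_2 \tfrac{1}{2} = \log_2 2 = 1 \text{ bit}, \\
H(\text{die})  &= -\sum_{i=1}^{6} \tfrac{1}{6}\log_2 \tfrac{1}{6} = \log_2 6 \approx 2.585 \text{ bits}.
\end{align*}
```

The die roll therefore carries roughly 2.6 times as much information as the coin flip.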

Computing Device

A computer is a machine that can be programmed to carry out sequences of arithmetic or logical operations (computation) automatically. Modern digital electronic computers can perform generic sets of operations known as programs. These programs enable computers to perform a wide range of tasks. A computer system is a nominally complete computer that includes the hardware, operating system (main software), and peripheral equipment needed and used for full operation. This term may also refer to a group of computers that are linked and function together, such as a computer network or computer cluster. A broad range of industrial and consumer products use computers as control systems. Simple special-purpose devices like microwave ovens and remote controls are included, as are factory devices like industrial robots and computer-aided design, as well as general-purpose devices like personal computers and mobile devices like smartphones. Computers power the Internet, which links bi ...
