Zarr (data format)

Zarr is an open standard for storing large multidimensional array data. It specifies a protocol and data format, and is designed to be "cloud ready", including support for random access, by dividing data into subsets referred to as chunks. Zarr can be used from many programming languages, including Python, Java, JavaScript, C++, Rust and Julia. It has been used by organizations such as Google and Microsoft to publish large datasets. Early versions of Zarr were first released in 2015 by Alistair Miles. Zarr is designed to support high-throughput distributed I/O on different storage systems, which is a common requirement in cloud computing. Multiple read operations on a Zarr array can proceed efficiently in parallel, as can multiple write operations.

Format description

The main data format in Zarr is multidimensional arrays. For parallelisable access, these arrays are stored and accessed as a grid of so-called "chunks". The actual data format on disk depends on the compressor ...
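The chunk-grid idea described above can be illustrated with a small sketch. This is plain Python arithmetic, not the Zarr library's API; the function names, the array shape, and the chunk shape are hypothetical and chosen only for illustration. It shows how an element index maps to the chunk that holds it, which is what allows independent readers or writers to work on different chunks in parallel.

```python
from math import ceil


def chunk_grid_shape(shape, chunks):
    """Number of chunks along each dimension (edge chunks may be partial)."""
    return tuple(ceil(s / c) for s, c in zip(shape, chunks))


def locate(index, chunks):
    """Map an element index to (chunk coordinates, offset inside that chunk)."""
    chunk_coords = tuple(i // c for i, c in zip(index, chunks))
    offset = tuple(i % c for i, c in zip(index, chunks))
    return chunk_coords, offset


# Hypothetical example values, not taken from any real dataset.
shape = (10_000, 10_000)   # full array shape
chunks = (1_000, 1_000)    # shape of one chunk

grid = chunk_grid_shape(shape, chunks)
coords, offset = locate((2_500, 7_042), chunks)
print(grid)            # (10, 10)
print(coords, offset)  # (2, 7) (500, 42)
```

Only the chunk at grid position (2, 7) needs to be fetched and decompressed to read that element; the other 99 chunks are untouched.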

Open Standard

An open standard is a standard that is openly accessible and usable by anyone. It is also a common prerequisite that open standards use an open license that provides for extensibility. Typically, anybody can participate in their development due to their inherently open nature. There is no single definition, and interpretations vary with usage. Examples of open standards include the GSM, 4G, and 5G standards that allow most modern mobile phones to work world-wide.

Definitions

The terms "open" and "standard" have a wide range of meanings associated with their usage. There are a number of definitions of open standards which emphasize different aspects of openness, including the openness of the resulting specification, the openness of the drafting process, and the ownership of rights in the standard. The term "standard" is sometimes restricted to technologies approved by formalized committees that are open to participation by all interested parties and operate on a consensus basis ...

Cloud Computing

Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to the International Organization for Standardization (ISO).

Essential characteristics

In 2011, the National Institute of Standards and Technology (NIST) identified five "essential characteristics" for cloud systems. Below are the exact definitions according to NIST:

* On-demand self-service: "A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider."
* Broad network access: "Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations)."
* Resource pooling: "The provider' ...

Dask (software)

Dask is an open-source Python library for parallel computing. Dask scales Python code from multi-core local machines to large distributed clusters in the cloud. Dask provides a familiar user interface by mirroring the APIs of other libraries in the PyData ecosystem, including Pandas, scikit-learn and NumPy. It also exposes low-level APIs that help programmers run custom algorithms in parallel. Dask was created by Matthew Rocklin in December 2014 and has over 9.8k stars and 500 contributors on GitHub. Dask is used by retail, financial, and governmental organizations, as well as life science and geophysical institutes. Walmart, Wayfair, JDA, GrubHub, General Motors, Nvidia, Harvard Medical School, Capital One and NASA are among the organizations that use Dask.

Overview

Dask has two parts:

* Big data collections (high level and low level)
* Dynamic task scheduling

Dask's high-level parallel collections – DataFrames, Bags, and Arrays – operate in parallel on datasets that may ...

NetCDF

NetCDF (Network Common Data Form) is a set of software libraries and self-describing, machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data. The project homepage is hosted by the Unidata program at the University Corporation for Atmospheric Research (UCAR). They are also the chief source of netCDF software, standards development, updates, etc. The format is an open standard. NetCDF Classic and 64-bit Offset Format are an international standard of the Open Geospatial Consortium.

History

The project started in 1988 and is still actively supported by UCAR. The original netCDF binary format (released in 1990, now known as "netCDF classic format") is still widely used across the world and continues to be fully supported in all netCDF releases. Version 4.0 (released in 2008) allowed the use of the HDF5 data file format. Version 4.1 (2010) added support for C and Fortran client access to specified subsets of remote data via ...

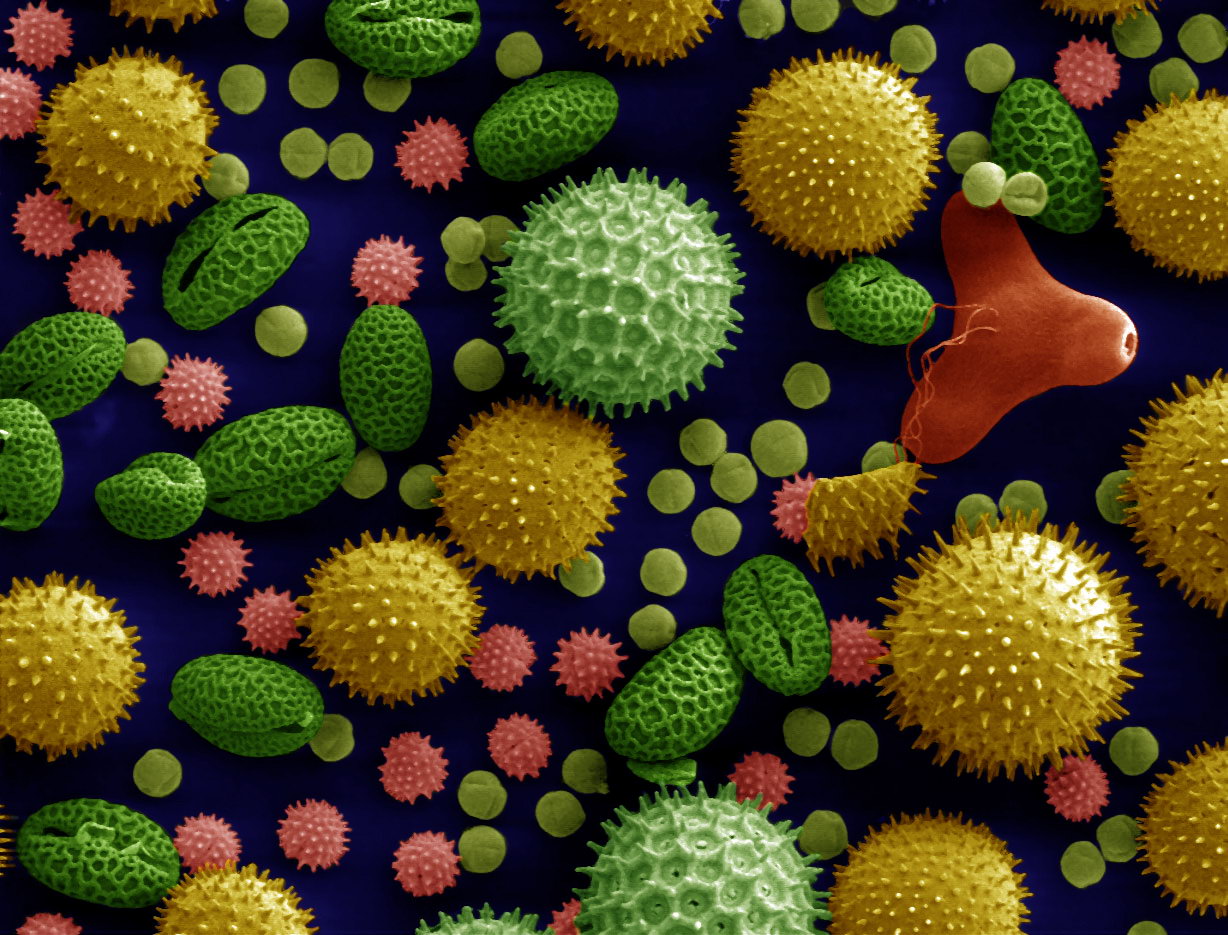

Microscopy

Microscopy is the technical field of using microscopes to view subjects too small to be seen with the naked eye (objects that are not within the resolution range of the normal eye). There are three well-known branches of microscopy: optical, electron, and scanning probe microscopy, along with the emerging field of X-ray microscopy. Optical microscopy and electron microscopy involve the diffraction, reflection, or refraction of electromagnetic radiation or electron beams interacting with the specimen, and the collection of the scattered radiation or another signal in order to create an image. This process may be carried out by wide-field irradiation of the sample (for example standard light microscopy and transmission electron microscopy) or by scanning a fine beam over the sample (for example confocal laser scanning microscopy and scanning electron microscopy). Scan ...

Metadata

Metadata (or metainformation) is "data that provides information about other data", but not the content of the data itself, such as the text of a message or the image itself. There are many distinct types of metadata, including:

* Descriptive metadata – the descriptive information about a resource. It is used for discovery and identification. It includes elements such as title, abstract, author, and keywords.
* Structural metadata – metadata about containers of data, indicating how compound objects are put together, for example, how pages are ordered to form chapters. It describes the types, versions, relationships, and other characteristics of digital materials.
* Administrative metadata – the information that helps manage a resource, such as resource type, permissions, and when and how it was created.
* Reference metadata – the information about the contents and quality of statistical data.
* Statistical metadata – also called process data, may ...

HDF5

Hierarchical Data Format (HDF) is a set of file formats (HDF4, HDF5) designed to store and organize large amounts of data. Originally developed at the U.S. National Center for Supercomputing Applications, it is supported by The HDF Group, a non-profit corporation whose mission is to ensure continued development of HDF5 technologies and the continued accessibility of data stored in HDF. In keeping with this goal, the HDF libraries and associated tools are available under a liberal, BSD-like license for general use. HDF is supported by many commercial and non-commercial software platforms and programming languages. The freely available HDF distribution consists of the library, command-line utilities, test suite source, Java interface, and the Java-based HDF Viewer (HDFView). The current version, HDF5, differs significantly in design and API from the major legacy version HDF4.

Early history

The quest for a portable scientific data format, originally dubbed AEHOO (All Encompassin ...

Microsoft

Microsoft Corporation is an American multinational corporation and technology conglomerate headquartered in Redmond, Washington. Founded in 1975, the company became influential in the rise of personal computers through software like Windows, and the company has since expanded to Internet services, cloud computing, video gaming and other fields. Microsoft is the largest software maker, one of the most valuable public U.S. companies, and one of the most valuable brands globally. Microsoft was founded by Bill Gates and Paul Allen to develop and sell BASIC interpreters for the Altair 8800. It rose to dominate the personal computer operating system market with MS-DOS in the mid-1980s, followed by Windows. During the 41 years from 1980 to 2021 Microsoft released 9 versions of MS-DOS with a median frequen ...

Array (data structure)

In computer science, an array is a data structure consisting of a collection of elements (values or variables) of the same memory size, each identified by at least one array index or key, a collection of which may be a tuple, known as an index tuple. An array is stored such that the position (memory address) of each element can be computed from its index tuple by a mathematical formula. The simplest type of data structure is a linear array, also called a one-dimensional array. For example, an array of ten 32-bit (4-byte) integer variables, with indices 0 through 9, may be stored as ten words at memory addresses 2000, 2004, 2008, ..., 2036 (in hexadecimal: 0x7D0, 0x7D4, 0x7D8, ..., 0x7F4) so that the element with index i has the address 2000 + (i × 4). The memory address of the first element of an array is called the first address, foundation address, or base address. Because the mathematical conc ...
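The address formula in the example above can be checked with a short sketch. The function name `element_address` is hypothetical; the base address 2000 and the 4-byte element size are the values used in the text.

```python
def element_address(base, index, element_size):
    """Address of the element at `index` in a one-dimensional array."""
    return base + index * element_size


BASE = 2000        # base address from the example in the text
ELEMENT_SIZE = 4   # one 32-bit integer occupies 4 bytes

# Addresses of the ten elements with indices 0 through 9.
addresses = [element_address(BASE, i, ELEMENT_SIZE) for i in range(10)]
print(addresses[0], addresses[1], addresses[-1])   # 2000 2004 2036
print(hex(addresses[0]), hex(addresses[-1]))       # 0x7d0 0x7f4
```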

Google

Google LLC is an American multinational corporation and technology company focusing on online advertising, search engine technology, cloud computing, computer software, quantum computing, e-commerce, consumer electronics, and artificial intelligence (AI). It has been referred to as "the most powerful company in the world" by the BBC and is one of the world's most valuable brands. Google's parent company, Alphabet Inc., is one of the five Big Tech companies alongside Amazon, Apple, Meta, and Microsoft. Google was founded on September 4, 1998, by American computer scientists Larry Page and Sergey Brin. Together, they own about 14% of its publicly listed shares and control 56% of its stockholder voting power through super-voting stock. The company went public via an initial public offering (IPO) in 2004. In 2015, Google was reorganized as a wholly owned subsidiary of Alphabet Inc. Go ...