|

Yule–Simon Distribution

In probability and statistics, the Yule–Simon distribution is a discrete probability distribution named after Udny Yule and Herbert A. Simon. Simon originally called it the ''Yule distribution''. The probability mass function (pmf) of the Yule–Simon (''ρ'') distribution is :f(k;\rho) = \rho\operatorname(k, \rho+1), for integer k \geq 1 and real \rho > 0, where \operatorname is the beta function. Equivalently the pmf can be written in terms of the rising factorial as : f(k;\rho) = \frac, where \Gamma is the gamma function. Thus, if \rho is an integer, : f(k;\rho) = \frac. The parameter \rho can be estimated using a fixed point algorithm. The probability mass function ''f'' has the property that for sufficiently large ''k'' we have : f(k;\rho) \approx \frac \propto \frac 1 . This means that the tail of the Yule–Simon distribution is a realization of Zipf's law: f(k;\rho) can be used to model, for example, the relative frequency of the kth most freque ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Birth Process

In probability theory, a birth process or a pure birth process is a special case of a continuous-time Markov process and a generalisation of a Poisson process. It defines a continuous process which takes values in the natural numbers and can only increase by one (a "birth") or remain unchanged. This is a type of birth–death process with no deaths. The rate at which births occur is given by an exponential random variable whose parameter depends only on the current value of the process Definition Birth rates definition A birth process with birth rates (\lambda_n, n\in \mathbb) and initial value k\in \mathbb is a minimal right-continuous process (X_t, t\ge 0) such that X_0=k and the interarrival times T_i = \inf\ - \inf\ are independent exponential random variables with parameter \lambda_i. Infinitesimal definition A birth process with rates (\lambda_n, n\in \mathbb) and initial value k\in \mathbb is a process (X_t, t\ge 0) such that: * X_0=k * \forall s,t\ge 0: s [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Standard Error

The standard error (SE) of a statistic (usually an estimate of a parameter) is the standard deviation of its sampling distribution or an estimate of that standard deviation. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The sampling distribution of a mean is generated by repeated sampling from the same population and recording of the sample means obtained. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling mean distribution obtained is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely around the population mean. Therefore, the relationship between the standard error of the mean and the standard deviation is such that, for a given sample size, the standard error of the mean equals the standard deviation divided by the square root of the sample size. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Expectation Maximisation

Expectation or Expectations may refer to: Science * Expectation (epistemic) * Expected value, in mathematical probability theory * Expectation value (quantum mechanics) * Expectation–maximization algorithm, in statistics Music * ''Expectation'' (album), a 2013 album by Girl's Day * ''Expectation'', a 2006 album by Matt Harding * ''Expectations'' (Keith Jarrett album), 1971 * ''Expectations'' (Dance Exponents album), 1985 * ''Expectations'' (Hayley Kiyoko album), 2018 **"Expectations/Overture", a song from the album * ''Expectations'' (Bebe Rexha album), 2018 * ''Expectations'' (Katie Pruitt album), 2020 **"Expectations", a song from the album * "Expectation" (waltz), a 1980 waltz composed by Ilya Herold Lavrentievich Kittler * "Expectation" (song), a 2010 song by Tame Impala * "Expectations" (song), a 2018 song by Lauren Jauregui * "Expectations", a song by Three Days Grace from ''Transit of Venus frameless, upright=0.5 A transit of Venus across the Sun takes pla ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bayesian Probability

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation representing a state of knowledge or as quantification of a personal belief. The Bayesian interpretation of probability can be seen as an extension of propositional logic that enables reasoning with hypotheses; that is, with propositions whose truth or falsity is unknown. In the Bayesian view, a probability is assigned to a hypothesis, whereas under frequentist inference, a hypothesis is typically tested without being assigned a probability. Bayesian probability belongs to the category of evidential probabilities; to evaluate the probability of a hypothesis, the Bayesian probabilist specifies a prior probability. This, in turn, is then updated to a posterior probability in the light of new, relevant data (evidence). The Bayesian interpretation provides a standard set of procedures and form ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gamma Distribution

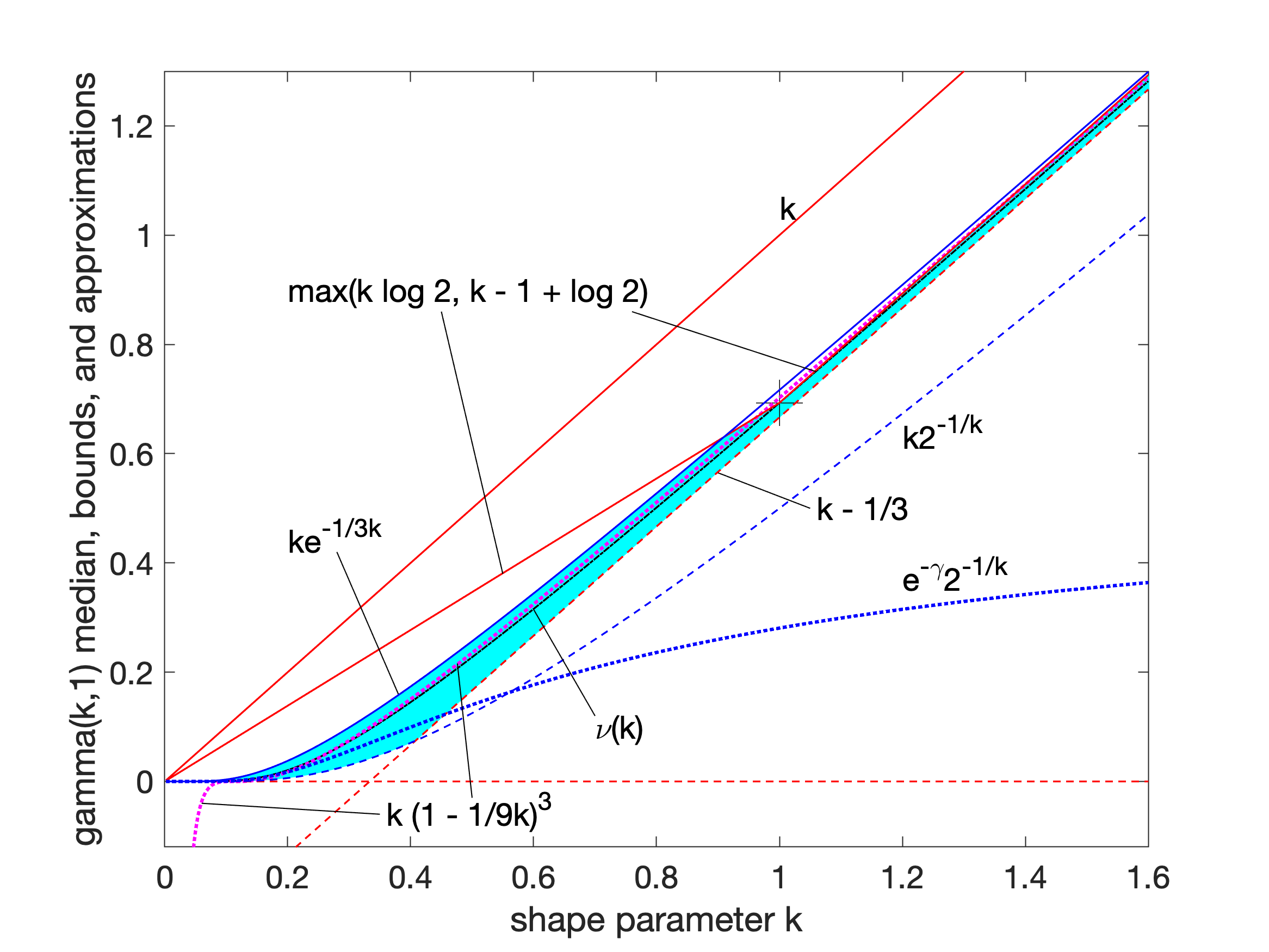

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-square distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use: #With a shape parameter k and a scale parameter \theta. #With a shape parameter \alpha = k and an inverse scale parameter \beta = 1/ \theta , called a rate parameter. In each of these forms, both parameters are positive real numbers. The gamma distribution is the maximum entropy probability distribution (both with respect to a uniform base measure and a 1/x base measure) for a random variable X for which E 'X''= ''kθ'' = ''α''/''β'' is fixed and greater than zero, and E n(''X'')= ''ψ''(''k'') + ln(''θ'') = ''ψ''(''α'') − ln(''β'') is fixed (''ψ'' is the digamma function). Definitions The parameterization with ''k'' and ''θ'' appears to be more common i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Maximum Likelihood Estimator

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when all observed outcomes are assumed to have Normal distributions with the same variance. From the perspective of Bayesian inference, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scale Parameter

In probability theory and statistics, a scale parameter is a special kind of numerical parameter of a parametric family of probability distributions. The larger the scale parameter, the more spread out the distribution. Definition If a family of probability distributions is such that there is a parameter ''s'' (and other parameters ''θ'') for which the cumulative distribution function satisfies :F(x;s,\theta) = F(x/s;1,\theta), \! then ''s'' is called a scale parameter, since its value determines the " scale" or statistical dispersion of the probability distribution. If ''s'' is large, then the distribution will be more spread out; if ''s'' is small then it will be more concentrated. If the probability density exists for all values of the complete parameter set, then the density (as a function of the scale parameter only) satisfies :f_s(x) = f(x/s)/s, \! where ''f'' is the density of a standardized version of the density, i.e. f(x) \equiv f_(x). An estimator of a scale ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Exponential Distribution

In probability theory and statistics, the exponential distribution is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes it is found in various other contexts. The exponential distribution is not the same as the class of exponential families of distributions. This is a large class of probability distributions that includes the exponential distribution as one of its members, but also includes many other distributions, like the normal, binomial, gamma, and Poisson distributions. Definitions Probability density function The probability density function (pdf) of an exponential distribution is : f(x;\lambda) = \begin \lam ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Geometric Distribution

In probability theory and statistics, the geometric distribution is either one of two discrete probability distributions: * The probability distribution of the number ''X'' of Bernoulli trials needed to get one success, supported on the set \; * The probability distribution of the number ''Y'' = ''X'' − 1 of failures before the first success, supported on the set \. Which of these is called the geometric distribution is a matter of convention and convenience. These two different geometric distributions should not be confused with each other. Often, the name ''shifted'' geometric distribution is adopted for the former one (distribution of the number ''X''); however, to avoid ambiguity, it is considered wise to indicate which is intended, by mentioning the support explicitly. The geometric distribution gives the probability that the first occurrence of success requires ''k'' independent trials, each with success probability ''p''. If the probability of succe ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compound Distribution

In probability and statistics, a compound probability distribution (also known as a mixture distribution or contagious distribution) is the probability distribution that results from assuming that a random variable is distributed according to some parametrized distribution, with (some of) the parameters of that distribution themselves being random variables. If the parameter is a scale parameter, the resulting mixture is also called a scale mixture. The compound distribution ("unconditional distribution") is the result of marginalizing (integrating) over the ''latent'' random variable(s) representing the parameter(s) of the parametrized distribution ("conditional distribution"). Definition A compound probability distribution is the probability distribution that results from assuming that a random variable X is distributed according to some parametrized distribution F with an unknown parameter \theta that is again distributed according to some other distribution G. The resultin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Urn Problem

In probability and statistics, an urn problem is an idealized mental exercise in which some objects of real interest (such as atoms, people, cars, etc.) are represented as colored balls in an urn or other container. One pretends to remove one or more balls from the urn; the goal is to determine the probability of drawing one color or another, or some other properties. A number of important variations are described below. An urn model is either a set of probabilities that describe events within an urn problem, or it is a probability distribution, or a family of such distributions, of random variables associated with urn problems.Dodge, Yadolah (2003) ''Oxford Dictionary of Statistical Terms'', OUP. History In ''Ars Conjectandi'' (1713), Jacob Bernoulli considered the problem of determining, given a number of pebbles drawn from an urn, the proportions of different colored pebbles within the urn. This problem was known as the '' inverse probability'' problem, and was a topic of r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |