
Wallis' Integrals

In mathematics, and more precisely in analysis, the Wallis integrals constitute a family of integrals introduced by John Wallis.

Definition, basic properties

The Wallis integrals are the terms of the sequence (W_n)_{n \in \mathbb{N}} defined by

W_n = \int_0^{\pi/2} \sin^n x \,dx,

or equivalently,

W_n = \int_0^{\pi/2} \cos^n x \,dx.

The first few terms of this sequence are W_0 = \pi/2, W_1 = 1, W_2 = \pi/4, W_3 = 2/3 and W_4 = 3\pi/16.

The sequence (W_n) is decreasing and has positive terms. In fact, for all n \geq 0:
* W_n > 0, because it is an integral of a non-negative continuous function which is not identically zero;
* W_n - W_{n+1} = \int_0^{\pi/2} \sin^n x\,dx - \int_0^{\pi/2} \sin^{n+1} x\,dx = \int_0^{\pi/2} (\sin^n x)(1 - \sin x)\,dx > 0, again because the last integral is of a non-negative continuous function which is not identically zero.

Since the sequence (W_n) is decreasing and bounded below by 0, it converges to a non-negative limit. Indeed, the limit is zero (see below).

Recurrence relation

By means of integration by parts, a reduction formula can be obtained. Using the identity \sin^2 x = 1 - \cos^2 x ...
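The excerpt stops before stating the reduction formula. The standard result it leads to is W_{n+2} = \frac{n+1}{n+2} W_n, seeded by W_0 = \pi/2 and W_1 = 1. The Python sketch below is an illustration, not part of the source. It computes W_n from that recurrence and cross-checks it against a plain midpoint-rule estimate of the defining integral. The function names `wallis_recurrence` and `wallis_numeric` are hypothetical.

```python
import math


def wallis_recurrence(n: int) -> float:
    """Return W_n using W_k = (k - 1) / k * W_(k-2), with W_0 = pi/2 and W_1 = 1."""
    w = [math.pi / 2, 1.0]
    for k in range(2, n + 1):
        w.append((k - 1) / k * w[k - 2])
    return w[n]


def wallis_numeric(n: int, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of the integral of sin^n x over [0, pi/2]."""
    h = (math.pi / 2) / steps
    return h * sum(math.sin((i + 0.5) * h) ** n for i in range(steps))


for n in range(6):
    print(n, wallis_recurrence(n), wallis_numeric(n))
```

The two columns should agree to many digits. The printed values also decrease with n, consistent with the monotonicity argument above.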

[Image: John Wallis, by Sir Godfrey Kneller, Bt]

John is a common English name and surname:
* John (given name)
* John (surname)

John may also refer to:

New Testament works
* Gospel of John, a title often shortened to John
* First Epistle of John, often shortened to 1 John
* Second Epistle of John, often shortened to 2 John
* Third Epistle of John, often shortened to 3 John

People
* John the Baptist, regarded as a prophet and the forerunner of Jesus Christ
* John the Apostle, one of the twelve apostles of Jesus Christ
* John the Evangelist, assigned author of the Fourth Gospel, once identified with the Apostle
* John of Patmos, also known as John the Divine or John the Revelator, the author of the Book of Revelation, once identified with the Apostle
* John the Presbyter, a figure either identified with or distinguished from the Apostle, the Evangelist and John of Patmos

Other people with the given name

Religious figures
* John, father of Andrew the Apostle and Saint Peter
* Pope John (disambigu ...

Euler Integral

In mathematics, there are two types of Euler integral:
1. The Euler integral of the first kind is the beta function \mathrm{B}(z_1,z_2) = \int_0^1 t^{z_1-1}(1-t)^{z_2-1}\,dt = \frac{\Gamma(z_1)\,\Gamma(z_2)}{\Gamma(z_1+z_2)}
2. The Euler integral of the second kind is the gamma function \Gamma(z) = \int_0^\infty t^{z-1}\,\mathrm{e}^{-t}\,dt

For positive integers m and n, the two integrals can be expressed in terms of factorials and binomial coefficients:

\mathrm{B}(n,m) = \frac{(n-1)!\,(m-1)!}{(n+m-1)!} = \frac{n+m}{nm\binom{n+m}{n}} = \left(\frac{1}{n} + \frac{1}{m}\right)\frac{1}{\binom{n+m}{n}}

\Gamma(n) = (n-1)!

See also
* Leonhard Euler
* List of topics named after Leonhard Euler

External links and references
* NIST Digital Library of Mathematical Functions: dlmf.nist.gov/5.2.1 (relation 5.2.1) and dlmf.nist.gov/5.12 (relation 5.12.1), Gamma and related functions ...
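The three closed forms for integer arguments can be checked against each other numerically. In this minimal Python sketch, the helper names `beta_factorial`, `beta_binomial` and `beta_gamma` are hypothetical. It evaluates the factorial form, the binomial-coefficient form and the gamma-function form of the beta function using only the standard `math` module.

```python
import math


def beta_factorial(n: int, m: int) -> float:
    """B(n, m) = (n-1)! (m-1)! / (n+m-1)! for positive integers n, m."""
    return math.factorial(n - 1) * math.factorial(m - 1) / math.factorial(n + m - 1)


def beta_binomial(n: int, m: int) -> float:
    """B(n, m) = (1/n + 1/m) / C(n+m, n) for positive integers n, m."""
    return (1 / n + 1 / m) / math.comb(n + m, n)


def beta_gamma(z1: float, z2: float) -> float:
    """B(z1, z2) = Gamma(z1) Gamma(z2) / Gamma(z1 + z2)."""
    return math.gamma(z1) * math.gamma(z2) / math.gamma(z1 + z2)


# Each value is approximately 1/12 (up to floating-point rounding).
print(beta_factorial(2, 3), beta_binomial(2, 3), beta_gamma(2, 3))
```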

Wallis Product

The Wallis product is the infinite product representation of π:

\frac{\pi}{2} = \prod_{n=1}^{\infty} \frac{4n^2}{4n^2-1} = \prod_{n=1}^{\infty} \left(\frac{2n}{2n-1} \cdot \frac{2n}{2n+1}\right) = \Big(\frac{2}{1} \cdot \frac{2}{3}\Big) \cdot \Big(\frac{4}{3} \cdot \frac{4}{5}\Big) \cdot \Big(\frac{6}{5} \cdot \frac{6}{7}\Big) \cdot \Big(\frac{8}{7} \cdot \frac{8}{9}\Big) \cdot \; \cdots

It was published in 1656 by John Wallis.

Proof using integration

Wallis derived this infinite product using interpolation, though his method is not regarded as rigorous. A modern derivation can be found by examining \int_0^\pi \sin^n x\,dx for even and odd values of n, and noting that for large n, increasing n by 1 results in a change that becomes ever smaller as n increases. Let

I(n) = \int_0^\pi \sin^n x\,dx.

(This is a form of Wallis' integrals.) Integrate by parts:

u = \sin^{n-1} x \;\Rightarrow\; du = (n-1) \sin^{n-2} x \cos x\,dx, \qquad dv = \sin x\,dx \;\Rightarrow\; v = -\cos x

\Rightarrow I(n) = \int_0^\pi \sin^n x\,dx ...
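The infinite product can be explored by truncating it. This Python sketch is an illustration only, and `wallis_partial_product` is a hypothetical name. It multiplies the first few factors 4n^2/(4n^2 - 1) and compares twice the result with π.

```python
import math


def wallis_partial_product(terms: int) -> float:
    """Multiply the factors 4n^2 / (4n^2 - 1) for n = 1, ..., terms."""
    product = 1.0
    for n in range(1, terms + 1):
        product *= 4 * n * n / (4 * n * n - 1)
    return product


for terms in (10, 100, 1_000, 10_000):
    estimate = 2 * wallis_partial_product(terms)  # twice the product approximates pi
    print(terms, estimate, math.pi - estimate)
```

Every factor exceeds 1, so the partial products increase toward π/2 from below. The error shrinks only roughly in proportion to 1/terms, so the product is a slow way to compute π.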

Gaussian Integral

The Gaussian integral, also known as the Euler–Poisson integral, is the integral of the Gaussian function f(x) = e^{-x^2} over the entire real line. Named after the German mathematician Carl Friedrich Gauss, the integral is

\int_{-\infty}^{\infty} e^{-x^2}\,dx = \sqrt{\pi}.

Abraham de Moivre originally discovered this type of integral in 1733, while Gauss published the precise integral in 1809, attributing its discovery to Laplace. The integral has a wide range of applications. For example, with a slight change of variables it is used to compute the normalizing constant of the normal distribution. The same integral with finite limits is closely related to both the error function and the cumulative distribution function of the normal distribution. In physics this type of integral appears frequently, for example in quantum mechanics, to find the probability density of the ground state of the harmonic oscillator. This integral is also used in the path integral formulation, to find the propagator of the h ...
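The value \sqrt{\pi} can be confirmed numerically. This sketch uses a midpoint rule and the hypothetical helper `gaussian_integral`. It assumes that truncating the real line to [-10, 10] is harmless, because the discarded tails are smaller than e^{-100}.

```python
import math


def gaussian_integral(limit: float = 10.0, steps: int = 20_000) -> float:
    """Midpoint-rule estimate of the integral of exp(-x^2) over [-limit, limit].

    The tails beyond |x| = 10 contribute less than exp(-100), so truncating
    the real line there does not affect double-precision results.
    """
    h = 2 * limit / steps
    return h * sum(math.exp(-((-limit + (i + 0.5) * h) ** 2)) for i in range(steps))


print(gaussian_integral(), math.sqrt(math.pi))
```

The substitution x = t/\sqrt{2} turns this into \int e^{-t^2/2}\,dt = \sqrt{2\pi}, which is the normalizing constant of the standard normal density mentioned above.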

Stirling's Formula

In mathematics, Stirling's approximation (or Stirling's formula) is an asymptotic approximation for factorials. It is a good approximation, leading to accurate results even for small values of n. It is named after James Stirling, though a related but less precise result was first stated by Abraham de Moivre. One way of stating the approximation involves the logarithm of the factorial:

\ln(n!) = n\ln n - n + O(\ln n),

where the big O notation means that, for all sufficiently large values of n, the difference between \ln(n!) and n\ln n - n will be at most proportional to the logarithm of n. In computer science applications such as the worst-case lower bound for comparison sorting, it is convenient to instead use the binary logarithm, giving the equivalent form

\log_2 (n!) = n\log_2 n - n\log_2 e + O(\log_2 n).

The error term in either base can be expressed more precisely as ...
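The O(\ln n) error term and the sorting application can both be checked in a few lines. In this Python sketch, `stirling_error` and `comparison_sort_lower_bound` are hypothetical names. The first uses `math.lgamma(n + 1)`, which equals \ln(n!), to measure the gap to n\ln n - n without forming huge integers. The second computes \lceil \log_2(n!) \rceil exactly with integer arithmetic.

```python
import math


def stirling_error(n: int) -> float:
    """ln(n!) - (n ln n - n), using lgamma(n + 1) = ln(n!) to avoid huge integers."""
    return math.lgamma(n + 1) - (n * math.log(n) - n)


def comparison_sort_lower_bound(n: int) -> int:
    """Exact ceil(log2(n!)): a lower bound on the worst-case comparisons of any comparison sort."""
    return (math.factorial(n) - 1).bit_length()


for n in (10, 100, 1_000, 1_000_000):
    # The error grows like ln n; the ratio drifts slowly down toward 1/2.
    print(n, stirling_error(n), stirling_error(n) / math.log(n))

print([comparison_sort_lower_bound(n) for n in range(1, 8)])
```

The ratio tends to 1/2 because of the well-known refinement \ln(n!) = n\ln n - n + \tfrac{1}{2}\ln(2\pi n) + O(1/n).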

Sandwich Theorem

In calculus, the squeeze theorem (also known as the sandwich theorem, among other names) is a theorem regarding the limit of a function that is bounded between two other functions. The squeeze theorem is used in calculus and mathematical analysis, typically to confirm the limit of a function via comparison with two other functions whose limits are known. It was first used geometrically by the mathematicians Archimedes and Eudoxus in an effort to compute π, and was formulated in modern terms by Carl Friedrich Gauss.

Statement

The squeeze theorem is formally stated as follows. Let I be an interval containing the point a. Let g, f and h be functions defined on I, except possibly at a itself. Suppose that for every x in I not equal to a, we have g(x) \le f(x) \le h(x), and also suppose that \lim_{x \to a} g(x) = \lim_{x \to a} h(x) = L. Then \lim_{x \to a} f(x) = L.
* The functions g and h are said to be lower and upper bounds (respectively) of f.
* Here, a is not required to lie in the interior of I. Indeed, if a is an endpoint of I, then the above limits are left- or right-hand limits.
* A similar statement holds for infinite intervals: for example, if I = (0, \infty), then the conclusion holds, taking the limits as x \to \infty.

This theorem is also valid for sequences ...
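A standard textbook illustration, not taken from the excerpt, shows the theorem in use. In the usual notation g \le f \le h, take g(x) = -x^2, f(x) = x^2 \sin(1/x), h(x) = x^2, a = 0 and I = \mathbb{R}. Since |\sin(1/x)| \le 1, f is squeezed between the two bounds. This works even though f is undefined at 0 and \sin(1/x) itself has no limit there.

```latex
-x^2 \le x^2 \sin\!\left(\tfrac{1}{x}\right) \le x^2 \quad (x \neq 0),
\qquad \lim_{x \to 0} (-x^2) = \lim_{x \to 0} x^2 = 0
\;\Longrightarrow\; \lim_{x \to 0} x^2 \sin\!\left(\tfrac{1}{x}\right) = 0.
```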

Gamma Function

In mathematics, the gamma function (represented by Γ, the capital Greek letter gamma) is the most common extension of the factorial function to complex numbers. Derived by Daniel Bernoulli, the gamma function \Gamma(z) is defined for all complex numbers z except non-positive integers, and for every positive integer z = n, \Gamma(n) = (n-1)!. The gamma function can be defined via a convergent improper integral for complex numbers with positive real part:

\Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\,\mathrm{d}t, \qquad \Re(z) > 0.

The gamma function is then defined in the complex plane as the analytic continuation of this integral function: it is a meromorphic function which is holomorphic except at zero and the negative integers, where it has simple poles. The gamma function has no zeros, so the reciprocal gamma function 1/\Gamma(z) is an entire function. In fact, the gamma function corresponds to the Mellin transform of the negative exponential functi ...
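The improper integral can be approximated directly to see \Gamma(n) = (n-1)! emerge. This Python sketch uses a midpoint rule, with the hypothetical helper `gamma_integral` and the assumed cutoff `upper = 60`. It is meant only for real z \ge 1, where the integrand stays bounded near t = 0.

```python
import math


def gamma_integral(z: float, upper: float = 60.0, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of the integral of t^(z-1) e^(-t) over [0, upper].

    Intended for real z >= 1, where the integrand is bounded near t = 0; the
    tail beyond `upper` is negligible for the modest z used here.
    """
    h = upper / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h
        total += t ** (z - 1) * math.exp(-t)
    return total * h


for n in range(1, 7):
    print(n, gamma_integral(n), math.factorial(n - 1))
```

For non-integer arguments the same helper can be compared with `math.gamma`, for example `gamma_integral(2.5)` against `math.gamma(2.5)`.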

Beta Function

In mathematics, the beta function, also called the Euler integral of the first kind, is a special function that is closely related to the gamma function and to binomial coefficients. It is defined by the integral

\Beta(z_1,z_2) = \int_0^1 t^{z_1-1}(1-t)^{z_2-1}\,dt

for complex number inputs z_1, z_2 such that \operatorname{Re}(z_1), \operatorname{Re}(z_2) > 0. The beta function was studied by Leonhard Euler and Adrien-Marie Legendre and was given its name by Jacques Binet; its symbol Β is a Greek capital beta.

Properties

The beta function is symmetric, meaning that \Beta(z_1,z_2) = \Beta(z_2,z_1) for all inputs z_1 and z_2 (specifically, see 6.2 Beta Function). A key property of the beta function is its close relationship to the gamma function:

\Beta(z_1,z_2) = \frac{\Gamma(z_1)\,\Gamma(z_2)}{\Gamma(z_1+z_2)}

A proof is given below. The beta function is also closely related to binomial coefficients. When m (or n, by symmetry) is a positive integer, it follows from the definition of the gamma function \Gamma that

\Beta(m,n) = ...
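Both the symmetry and the gamma-function relation can be checked at non-integer arguments. This Python sketch assumes real inputs z_1, z_2 \ge 1, so that the midpoint rule does not meet an unbounded integrand. The names `beta_integral` and `beta_via_gamma` are hypothetical. The gamma side uses `math.lgamma` so that large arguments do not overflow.

```python
import math


def beta_integral(z1: float, z2: float, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of the integral of t^(z1-1) (1-t)^(z2-1) over [0, 1].

    Intended for real z1, z2 >= 1, where the integrand stays bounded at both ends.
    """
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h
        total += t ** (z1 - 1) * (1 - t) ** (z2 - 1)
    return total * h


def beta_via_gamma(z1: float, z2: float) -> float:
    """Gamma(z1) Gamma(z2) / Gamma(z1 + z2), computed with log-gamma to avoid overflow."""
    return math.exp(math.lgamma(z1) + math.lgamma(z2) - math.lgamma(z1 + z2))


print(beta_integral(2.5, 3.5), beta_integral(3.5, 2.5), beta_via_gamma(2.5, 3.5))
```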

Derivative

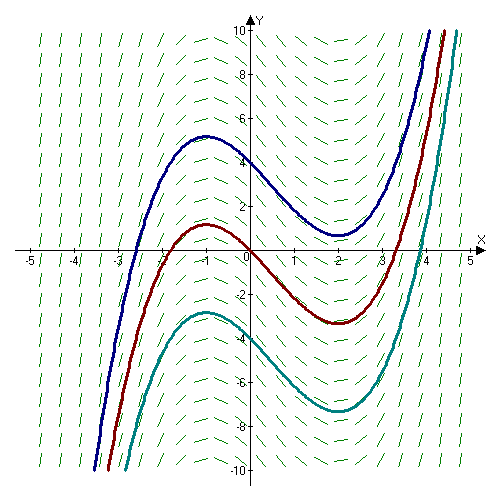

In mathematics, the derivative is a fundamental tool that quantifies the sensitivity to change of a function's output with respect to its input. The derivative of a function of a single variable at a chosen input value, when it exists, is the slope of the tangent line to the graph of the function at that point. The tangent line is the best linear approximation of the function near that input value. For this reason, the derivative is often described as the instantaneous rate of change, the ratio of the instantaneous change in the dependent variable to that of the independent variable. The process of finding a derivative is called differentiation. There are several notations for differentiation. Leibniz notation, named after Gottfried Wilhelm Leibniz, is represented as the ratio of two differentials, such as dy/dx, whereas prime notation is written by adding a prime mark, as in f'(x). Higher order notations represent repeated differentiation, and they are usually denoted in Leib ...
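The slope of the tangent line can be estimated from the slopes of nearby secant lines. This Python sketch uses a central difference, which is a standard numerical technique rather than anything the excerpt prescribes. The names `central_difference` and `tangent_line` and the default step `h = 1e-5` are assumptions for illustration.

```python
import math
from typing import Callable


def central_difference(f: Callable[[float], float], x: float, h: float = 1e-5) -> float:
    """Approximate f'(x) by the slope of the secant through x - h and x + h."""
    return (f(x + h) - f(x - h)) / (2 * h)


def tangent_line(f: Callable[[float], float], x0: float) -> Callable[[float], float]:
    """Return the linear approximation x -> f(x0) + f'(x0) (x - x0), with f' estimated numerically."""
    slope = central_difference(f, x0)
    return lambda x: f(x0) + slope * (x - x0)


print(central_difference(math.sin, 1.0), math.cos(1.0))
```

Making `h` much smaller does not keep improving the estimate. Subtracting two nearly equal function values loses floating-point precision, so a moderate step is a deliberate compromise.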

Mathematics

Mathematics is a field of study that discovers and organizes methods, theories and theorems that are developed and proved for the needs of empirical sciences and mathematics itself. There are many areas of mathematics, which include number theory (the study of numbers), algebra (the study of formulas and related structures), geometry (the study of shapes and spaces that contain them), analysis (the study of continuous changes), and set theory (presently used as a foundation for all mathematics). Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or, in modern mathematics, purely abstract entities that are stipulated to have certain properties, called axioms. Mathematics uses pure reason to prove properties of objects, a proof consisting of a succession of applications of in ...

Anti-derivative

In calculus, an antiderivative, inverse derivative, primitive function, primitive integral or indefinite integral of a continuous function f is a differentiable function F whose derivative is equal to the original function f. This can be stated symbolically as F' = f. The process of solving for antiderivatives is called antidifferentiation (or indefinite integration), and its opposite operation is called differentiation, which is the process of finding a derivative. Antiderivatives are often denoted by capital Roman letters such as F and G. Antiderivatives are related to definite integrals through the second fundamental theorem of calculus: the definite integral of a function over a closed interval where the function is Riemann integrable is equal to the difference between the values of an antiderivative evaluated at the endpoints of the interval. In physics, antiderivatives arise in the context of rectilinear motion (e.g., in explaining the relationship between position, velocity a ...
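The second fundamental theorem of calculus can be seen numerically. A definite integral computed by brute force should match F(b) - F(a) for any antiderivative F. In this Python sketch, `midpoint_integral` is a hypothetical helper, and the example pair f(x) = 3x^2, F(x) = x^3 is chosen only for illustration.

```python
import math
from typing import Callable


def midpoint_integral(f: Callable[[float], float], a: float, b: float, steps: int = 100_000) -> float:
    """Midpoint-rule estimate of the definite integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))


def f(x: float) -> float:
    return 3 * x ** 2


def F(x: float) -> float:
    """An antiderivative of f: F'(x) = 3x^2. Adding any constant C would also work."""
    return x ** 3


print(midpoint_integral(f, 1.0, 2.0), F(2.0) - F(1.0))
```

Any constant added to F cancels in F(b) - F(a), which is why the choice of antiderivative does not matter here.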

Integration By Reduction Formulae

In integral calculus, integration by reduction formulae is a method relying on recurrence relations. It is used when an expression containing an integer parameter, usually in the form of powers of elementary functions, or products of transcendental functions and polynomials of arbitrary degree, cannot be integrated directly. However, using other methods of integration, a reduction formula can be set up to obtain the integral of the same or a similar expression with a lower integer parameter, progressively simplifying the integral until it can be evaluated. This method of integration is one of the earliest used.

How to find the reduction formula

The reduction formula can be derived using any of the common methods of integration, like integration by substitution, integration by parts, integration by trigonometric substitution, integration by partial fractions, etc. The main idea is to express an integral involving an integer parameter (e.g. power) of a function, represented by I_n, i ...
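A concrete example, chosen here for illustration, is I_n = \int_0^1 x^n e^x\,dx. Integrating by parts with u = x^n and dv = e^x\,dx gives the reduction formula I_n = e - n I_{n-1}, starting from I_0 = e - 1. In this Python sketch, `reduction_coefficients` is a hypothetical name. It applies the recurrence to exact integer coefficients (a, b) with I_n = a e + b, and then cross-checks the result with a midpoint-rule estimate.

```python
import math
from typing import Tuple


def reduction_coefficients(n: int) -> Tuple[int, int]:
    """Return integers (a, b) such that I_n = a*e + b, where I_n = integral of x^n e^x over [0, 1].

    Starts from I_0 = e - 1 and applies I_k = e - k * I_(k-1), the reduction
    formula obtained by integrating by parts with u = x^k, dv = e^x dx.
    """
    a, b = 1, -1  # I_0 = e - 1
    for k in range(1, n + 1):
        a, b = 1 - k * a, -k * b
    return a, b


def midpoint_integral(f, lo: float, hi: float, steps: int = 100_000) -> float:
    """Plain midpoint rule, used only to cross-check the reduction formula."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))


for n in range(6):
    a, b = reduction_coefficients(n)
    print(n, (a, b), a * math.e + b, midpoint_integral(lambda x: x ** n * math.exp(x), 0.0, 1.0))
```

The coefficients stay exact, but evaluating a e + b in floating point subtracts two nearly equal large numbers. For larger n that result loses accuracy, and exact or higher-precision arithmetic would be needed.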