Tukey's Honestly Significant Difference

Tukey's range test, also known as Tukey's test, the Tukey method, Tukey's honest significance test, or Tukey's HSD (honestly significant difference) test, is a single-step multiple comparison procedure and statistical test. It can be used to correctly interpret the statistical significance of the difference between means that have been selected for comparison because of their extreme values. The method was developed and introduced by John Tukey for use in analysis of variance (ANOVA), and has usually been taught only in connection with ANOVA. However, the studentized range distribution used to determine the level of significance of the differences considered in Tukey's test has much broader application: it is useful for researchers who have searched their collected data for remarkable differences between groups, but then cannot validly determine how significant their discovered stand-out difference is using standar ...

Journal of the Neurological Sciences

''Journal of the Neurological Sciences'' is a peer-reviewed medical journal covering the field of neurology. It is also the official journal of the World Federation of Neurology. According to the ''Journal Citation Reports'', it received an impact factor of 4.453. The journal is published monthly by Elsevier and was established in 1964.

Statistical Independence

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence ...
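The distinction between pairwise and mutual independence can be illustrated with a small enumeration. The following is a minimal sketch, assuming a sample space of two fair coin tosses and three illustrative events (the names `A`, `B`, `C` and the helper functions are not from the source). It checks the product rule for every pair and then for all three events together.

```python
from fractions import Fraction
from itertools import combinations, product

# Assumed sample space: two fair coin tosses, all four outcomes equally likely.
outcomes = list(product("HT", repeat=2))

def prob(event):
    """Probability of an event given as a predicate on outcomes."""
    favourable = sum(1 for o in outcomes if event(o))
    return Fraction(favourable, len(outcomes))

# Illustrative events (hypothetical names).
events = {
    "A": lambda o: o[0] == "H",   # first toss is heads
    "B": lambda o: o[1] == "H",   # second toss is heads
    "C": lambda o: o[0] != o[1],  # exactly one head
}

def joint(names):
    """Probability that all named events occur together."""
    return prob(lambda o: all(events[n](o) for n in names))

def product_of_marginals(names):
    """Product of the individual probabilities of the named events."""
    result = Fraction(1)
    for n in names:
        result *= prob(events[n])
    return result

# Pairwise independence: the product rule holds for every pair.
pairwise = all(joint(p) == product_of_marginals(p)
               for p in combinations(events, 2))

# Mutual independence of three events additionally needs the triple product rule.
all_three = tuple(events)
mutual = pairwise and joint(all_three) == product_of_marginals(all_three)

print("pairwise independent:", pairwise)
print("mutually independent:", mutual)
```

In this example every pair satisfies the product rule, but the three events can never occur together, so the collection is pairwise independent without being mutually independent.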

Student's t-distribution

In probability theory and statistics, Student's t distribution (or simply the t distribution) t_\nu is a continuous probability distribution that generalizes the standard normal distribution. Like the latter, it is symmetric around zero and bell-shaped. However, t_\nu has heavier tails, and the amount of probability mass in the tails is controlled by the parameter \nu. For \nu = 1 the Student's t distribution t_\nu becomes the standard Cauchy distribution, which has very "fat" tails; whereas for \nu \to \infty it becomes the standard normal distribution \mathcal{N}(0, 1), which has very "thin" tails. The name "Student" is a pseudonym used by William Sealy Gosset in his scientific paper publications during his work at the Guinness Brewery in Dublin, Ireland. The Student's t distribution plays a role in a number of widely used statistical analyses, including Student's t- ...
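The two limiting cases can be checked numerically. The sketch below assumes the standard closed-form density of t_\nu, which the excerpt itself does not state, and the function names are illustrative. It compares the density with the Cauchy density at \nu = 1 and with the standard normal density at a very large \nu.

```python
from math import exp, lgamma, log, log1p, pi, sqrt

def t_pdf(x, nu):
    """Density of Student's t with nu degrees of freedom (standard formula)."""
    # Work in log space so that large nu does not overflow the gamma function.
    log_norm = lgamma((nu + 1) / 2) - lgamma(nu / 2) - 0.5 * log(nu * pi)
    return exp(log_norm - (nu + 1) / 2 * log1p(x * x / nu))

def cauchy_pdf(x):
    """Density of the standard Cauchy distribution."""
    return 1.0 / (pi * (1.0 + x * x))

def normal_pdf(x):
    """Density of the standard normal distribution."""
    return exp(-0.5 * x * x) / sqrt(2.0 * pi)

x = 0.7
print("nu = 1  :", t_pdf(x, 1), "Cauchy:", cauchy_pdf(x))
print("nu = 1e6:", t_pdf(x, 1e6), "normal:", normal_pdf(x))

# Far from zero, the nu = 1 density is much larger than the normal density,
# which is what "heavier tails" refers to.
print("tail at x = 4:", t_pdf(4.0, 1), "vs", normal_pdf(4.0))
```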

Type I Error

A type I error, or false positive, is the erroneous rejection of a true null hypothesis in statistical hypothesis testing. A type II error, or false negative, is the erroneous failure to reject a false null hypothesis. Type I errors can be thought of as errors of commission, in which the status quo is erroneously rejected in favour of new, misleading information. Type II errors can be thought of as errors of omission, in which a misleading status quo is allowed to remain because it is not identified as such. For example, if the assumption that people are ''innocent until proven guilty'' were taken as a null hypothesis, then finding an innocent person guilty would constitute a type I error, while failing to find a guilty person guilty would constitute a type II error. If the null hypothesis were inverted, such that people were by default presumed to be ''guilty until proven innocent'', then proving a guilty person's innocence would ...

Pooled Variance

In statistics, pooled variance (also known as combined variance, composite variance, or overall variance, and written \sigma^2) is a method for estimating variance of several different populations when the mean of each population may be different, but one may assume that the variance of each population is the same. The numerical estimate resulting from the use of this method is also called the pooled variance. Under the assumption of equal population variances, the pooled sample variance provides a higher precision estimate of variance than the individual sample variances. This higher precision can lead to increased statistical power when used in statistical tests that compare the populations, such as the ''t''-test. The square root of a pooled variance estimator is known as a pooled standard deviation (also known as combined standard deviation, composite standard deviation, or overall standard deviation). Motivation: In statistics, many times, data are collected for a depe ...
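As an illustration, the pooled variance can be computed as a weighted average of the per-group sample variances, with each group weighted by its degrees of freedom (group size minus one). This is a minimal sketch of that commonly used estimator; the excerpt does not spell out the formula, and the function name and data are illustrative.

```python
from statistics import variance

def pooled_variance(groups):
    """Pooled sample variance: sum((n_i - 1) * s_i^2) / sum(n_i - 1)."""
    numerator = sum((len(g) - 1) * variance(g) for g in groups)
    denominator = sum(len(g) - 1 for g in groups)
    return numerator / denominator

# Two made-up groups with different means but comparable spread.
groups = [
    [1.0, 2.0, 3.0],                    # sample variance 1.0
    [11.0, 12.0, 13.0, 14.0, 15.0],     # sample variance 2.5
]

s2_pooled = pooled_variance(groups)
print("pooled variance:", s2_pooled)               # (2*1.0 + 4*2.5) / 6 = 2.0
print("pooled standard deviation:", s2_pooled ** 0.5)
```

Because each group is centred on its own mean before squaring, the large gap between the group means does not inflate the estimate.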

Standard Error (statistics)

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the values the statistic takes across repeated samples drawn from the same population. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean per sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling mean distribution obtained is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely aro ...
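Since the variance of the sample mean equals the population variance divided by the sample size, the standard error of the mean is usually estimated as the sample standard deviation divided by the square root of the sample size. A minimal sketch with made-up data and an illustrative function name:

```python
from math import sqrt
from statistics import stdev

def standard_error_of_mean(sample):
    """Estimated SEM: sample standard deviation divided by sqrt(n)."""
    return stdev(sample) / sqrt(len(sample))

sample = [1.0, 2.0, 3.0, 4.0, 5.0]   # sample variance is 2.5
sem = standard_error_of_mean(sample)
print("standard error of the mean:", sem)   # sqrt(2.5 / 5) = sqrt(0.5)
```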

Standard Deviation

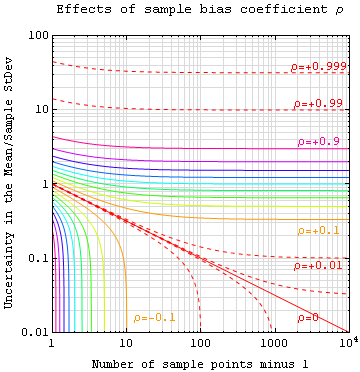

In statistics, the standard deviation is a measure of the amount of variation of the values of a variable about its mean. A low standard deviation indicates that the values tend to be close to the mean (also called the expected value) of the set, while a high standard deviation indicates that the values are spread out over a wider range. The standard deviation is commonly used in the determination of what constitutes an outlier and what does not. Standard deviation may be abbreviated SD or std dev, and is most commonly represented in mathematical texts and equations by the lowercase Greek letter σ (sigma), for the population standard deviation, or the Latin letter ''s'', for the sample standard deviation. The standard deviation of a random variable, sample, statistical population, data set, or probability distribution is the square root of its variance. (For a finite population, v ...
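The difference between the population standard deviation σ and the sample standard deviation ''s'' can be seen on a small data set. This sketch uses Python's standard `statistics` module with made-up values. It assumes the usual convention that the population form divides the summed squared deviations by n and the sample form by n − 1.

```python
from math import sqrt
from statistics import mean, pstdev, pvariance, stdev

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]   # mean is 5.0

sigma = pstdev(data)   # treats the data as a whole population (divides by n)
s = stdev(data)        # treats the data as a sample (divides by n - 1)

print("mean:", mean(data))
print("population SD (sigma):", sigma)   # sqrt(32 / 8) = 2.0
print("sample SD (s):", s)               # sqrt(32 / 7)

# In both cases the standard deviation is the square root of the variance.
print("sqrt of population variance:", sqrt(pvariance(data)))
```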

Central Limit Theorem

In probability theory, the central limit theorem (CLT) states that, under appropriate conditions, the distribution of a normalized version of the sample mean converges to a standard normal distribution. This holds even if the original variables themselves are not normally distributed. There are several versions of the CLT, each applying in the context of different conditions. The theorem is a key concept in probability theory because it implies that probabilistic and statistical methods that work for normal distributions can be applicable to many problems involving other types of distributions. This theorem has seen many changes during the formal development of probability theory. Previous versions of the theorem date back to 1811, but in its modern form it was only precisely stated as late as 1920. In statistics, the CLT can be stated as: let X_1, X_2, \dots, X_n denote a Sampling ...
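A small simulation can make the statement concrete. The sketch below assumes independent Uniform(0, 1) draws, which are clearly not normal, and normalizes each sample mean by the known mean 1/2 and standard deviation sqrt(1/12). The sample size, number of replications, and seed are arbitrary choices. If the normalized means are close to standard normal, about 95% of them should fall within ±1.96.

```python
import random
from math import sqrt

def standardized_mean(rng, n):
    """Draw n Uniform(0, 1) values and return the normalized sample mean."""
    mu, sigma = 0.5, sqrt(1.0 / 12.0)   # mean and SD of Uniform(0, 1)
    sample_mean = sum(rng.random() for _ in range(n)) / n
    return (sample_mean - mu) / (sigma / sqrt(n))

rng = random.Random(0)   # fixed seed so the run is repeatable
z_values = [standardized_mean(rng, 30) for _ in range(5000)]

# Share of normalized means inside the central 95% band of N(0, 1).
coverage = sum(1 for z in z_values if abs(z) < 1.96) / len(z_values)
print(f"share of |z| < 1.96: {coverage:.3f}")
```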

Null Hypothesis

The null hypothesis (often denoted H_0) is the claim in scientific research that the effect being studied does not exist. The null hypothesis can also be described as the hypothesis in which no relationship exists between two sets of data or variables being analyzed. If the null hypothesis is true, any experimentally observed effect is due to chance alone, hence the term "null". In contrast with the null hypothesis, an alternative hypothesis (often denoted H_A or H_1) is developed, which claims that a relationship does exist between two variables. Basic definitions: The null hypothesis and the ''alternative hypothesis'' are types of conjectures used in statistical tests to make statistical inferences, which are formal methods of reaching conclusions and separating scientific claims from statistical noise. The statement being tested in a test of statistical significance is called the null hypothesis. The test of significance is designed to assess the strength of the e ...

Absolute Value

In mathematics, the absolute value or modulus of a real number x, denoted |x|, is the non-negative value of x without regard to its sign. Namely, |x| = x if x is a positive number, |x| = -x if x is negative (in which case negating x makes -x positive), and |0| = 0. For example, the absolute value of 3 is 3, and the absolute value of −3 is also 3. The absolute value of a number may be thought of as its distance from zero. Generalisations of the absolute value for real numbers occur in a wide variety of mathematical settings. For example, an absolute value is also defined for the complex numbers, the quaternions, ordered rings, fields and vector spaces. The absolute value is closely related to the notions of magnitude, distance, and norm in various mathematical and physical contexts. Terminology and notation: In 1806, Jean-Robert Argand introduced the term ''module'', meaning ''unit of measure'' in French, specifically for the ''complex'' absolute value, Oxford English Dictionary, Draft Revision, Ju ...

Family-wise Error Rate

In statistics, family-wise error rate (FWER) is the probability of making one or more false discoveries, or type I errors, when performing multiple hypothesis tests. Familywise and experimentwise error rates: In 1953, John Tukey developed the concept of a familywise error rate as the probability of making a type I error among a specified group, or "family", of tests. Based on Tukey (1953), Ryan (1959) proposed the related concept of an ''experimentwise error rate'', which is the probability of making a type I error in a given experiment. Hence, an experimentwise error rate is a familywise error rate where the family includes all the tests that are conducted within an experiment. As Ryan (1959, Footnote 3) explained, an experiment may contain two or more families of multiple comparisons, each of which relates to a particular statistical inference and each of which has its own separate familywise error rate. Hence, familywise error rates are usually based on theoretically informative ...
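To see why the family-wise rate matters, consider the special case of m independent tests of true null hypotheses, each run at the same per-test level α. Under that independence assumption, which the excerpt does not require in general, the probability of at least one type I error is 1 − (1 − α)^m. A minimal sketch with an illustrative function name:

```python
def fwer_independent(alpha, m):
    """FWER for m independent tests of true nulls, each at level alpha."""
    return 1.0 - (1.0 - alpha) ** m

alpha = 0.05
for m in (1, 5, 10, 20):
    print(f"m = {m:2d} tests -> FWER = {fwer_independent(alpha, m):.3f}")
```

With α = 0.05 the rate grows from 5% for a single test to roughly 40% for ten tests, which is the inflation that multiple comparison procedures are designed to control.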