|

Subnormal Number

In computer science, subnormal numbers are the subset of denormalized numbers (sometimes called denormals) that fill the underflow gap around zero in floating-point arithmetic. Any non-zero number with magnitude smaller than the smallest normal number is ''subnormal''. :: ''Usage note: in some older documents (especially standards documents such as the initial releases of IEEE 754 and the C language), "denormal" is used to refer exclusively to subnormal numbers. This usage persists in various standards documents, especially when discussing hardware that is incapable of representing any other denormalized numbers, but the discussion here uses the term subnormal in line with the 2008 revision of IEEE 754.'' In a normal floating-point value, there are no leading zeros in the significand ( mantissa); rather, leading zeros are removed by adjusting the exponent (for example, the number 0.0123 would be written as ). Conversely, a denormalized floating point value has a significand wi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Division By Zero

In mathematics, division by zero is division where the divisor (denominator) is zero. Such a division can be formally expressed as \tfrac, where is the dividend (numerator). In ordinary arithmetic, the expression has no meaning, as there is no number that, when multiplied by , gives (assuming a \neq 0); thus, division by zero is undefined. Since any number multiplied by zero is zero, the expression \tfrac is also undefined; when it is the form of a limit, it is an indeterminate form. Historically, one of the earliest recorded references to the mathematical impossibility of assigning a value to \tfrac is contained in Anglo-Irish philosopher George Berkeley's criticism of infinitesimal calculus in 1734 in '' The Analyst'' ("ghosts of departed quantities"). There are mathematical structures in which \tfrac is defined for some such as in the Riemann sphere (a model of the extended complex plane) and the Projectively extended real line; however, such structures do not satisf ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Logarithmic Number System

A logarithmic number system (LNS) is an arithmetic system used for representing real numbers in computer and digital hardware, especially for digital signal processing. Overview In an LNS, a number, X, is represented by the logarithm, x, of its absolute value as follows: :X\rightarrow\, where s is a bit denoting the sign of X (s=0 if X>0 and s=1 if X A VHDL library for LNS hardware generation Computer arithmetic Digital signal processing [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Application Binary Interface

In computer software, an application binary interface (ABI) is an interface between two binary program modules. Often, one of these modules is a library or operating system facility, and the other is a program that is being run by a user. An ''ABI'' defines how data structures or computational routines are accessed in machine code, which is a low-level, hardware-dependent format. In contrast, an ''API'' defines this access in source code, which is a relatively high-level, hardware-independent, often human-readable format. A common aspect of an ABI is the calling convention, which determines how data is provided as input to, or read as output from, computational routines. Examples of this are the x86 calling conventions. Adhering to an ABI (which may or may not be officially standardized) is usually the job of a compiler, operating system, or library author. However, an application programmer may have to deal with an ABI directly when writing a program in a mix of programmi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Mac OS X

macOS (; previously OS X and originally Mac OS X) is a Unix operating system developed and marketed by Apple Inc. since 2001. It is the primary operating system for Apple's Mac computers. Within the market of desktop and laptop computers it is the second most widely used desktop OS, after Microsoft Windows and ahead of ChromeOS. macOS succeeded the classic Mac OS, a Mac operating system with nine releases from 1984 to 1999. During this time, Apple cofounder Steve Jobs had left Apple and started another company, NeXT, developing the NeXTSTEP platform that would later be acquired by Apple to form the basis of macOS. The first desktop version, Mac OS X 10.0, was released in March 2001, with its first update, 10.1, arriving later that year. All releases from Mac OS X 10.5 Leopard and after are UNIX 03 certified, with an exception for OS X 10.7 Lion. Apple's other operating systems ( iOS, iPadOS, watchOS, tvOS, audioOS) are derivatives of macOS. A promine ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GNU Compiler Collection

The GNU Compiler Collection (GCC) is an optimizing compiler produced by the GNU Project supporting various programming languages, hardware architectures and operating systems. The Free Software Foundation (FSF) distributes GCC as free software under the GNU General Public License (GNU GPL). GCC is a key component of the GNU toolchain and the standard compiler for most projects related to GNU and the Linux kernel. With roughly 15 million lines of code in 2019, GCC is one of the biggest free programs in existence. It has played an important role in the growth of free software, as both a tool and an example. When it was first released in 1987 by Richard Stallman, GCC 1.0 was named the GNU C Compiler since it only handled the C programming language. It was extended to compile C++ in December of that year. Front ends were later developed for Objective-C, Objective-C++, Fortran, Ada, D and Go, among others. The OpenMP and OpenACC specifications are also supported in the C ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

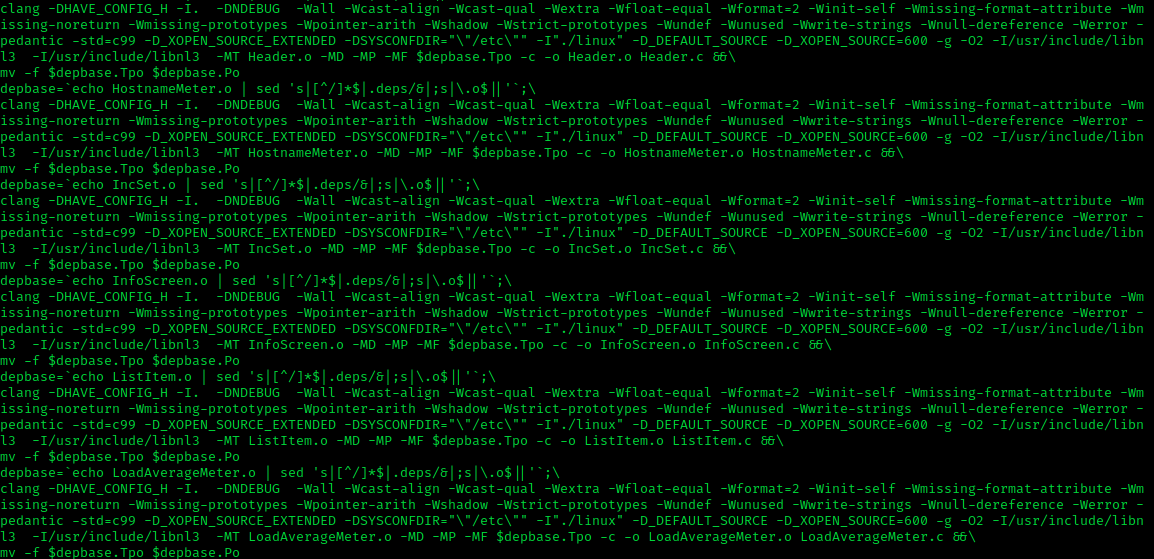

Clang

Clang is a compiler front end for the C, C++, Objective-C, and Objective-C++ programming languages, as well as the OpenMP, OpenCL, RenderScript, CUDA, and HIP frameworks. It acts as a drop-in replacement for the GNU Compiler Collection (GCC), supporting most of its compilation flags and unofficial language extensions. It includes a static analyzer, and several code analysis tools. Clang operates in tandem with the LLVM compiler back end and has been a subproject of LLVM 2.6 and later. As with LLVM, it is free and open-source software under the Apache License 2.0 software license. Its contributors include Apple, Microsoft, Google, ARM, Sony, Intel, and AMD. Clang 14, the latest major version of Clang as of March 2022, has full support for all published C++ standards up to C++17, implements most features of C++20, and has initial support for the upcoming C++23 standard. Since v6.0.0, Clang compiles C++ using the GNU++14 dialect by default, which includes feat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Streaming SIMD Extensions

In computing, Streaming SIMD Extensions (SSE) is a single instruction, multiple data ( SIMD) instruction set extension to the x86 architecture, designed by Intel and introduced in 1999 in their Pentium III series of Central processing units (CPUs) shortly after the appearance of Advanced Micro Devices (AMD's) 3DNow!. SSE contains 70 new instructions (65 unique mnemonics using 70 encodings), most of which work on single precision floating-point data. SIMD instructions can greatly increase performance when exactly the same operations are to be performed on multiple data objects. Typical applications are digital signal processing and graphics processing. Intel's first IA-32 SIMD effort was the MMX instruction set. MMX had two main problems: it re-used existing x87 floating-point registers making the CPUs unable to work on both floating-point and SIMD data at the same time, and it only worked on integers. SSE floating-point instructions operate on a new independent register set ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Intel

Intel Corporation is an American multinational corporation and technology company headquartered in Santa Clara, California. It is the world's largest semiconductor chip manufacturer by revenue, and is one of the developers of the x86 series of instruction sets, the instruction sets found in most personal computers (PCs). Incorporated in Delaware, Intel ranked No. 45 in the 2020 ''Fortune'' 500 list of the largest United States corporations by total revenue for nearly a decade, from 2007 to 2016 fiscal years. Intel supplies microprocessors for computer system manufacturers such as Acer, Lenovo, HP, and Dell. Intel also manufactures motherboard chipsets, network interface controllers and integrated circuits, flash memory, graphics chips, embedded processors and other devices related to communications and computing. Intel (''int''egrated and ''el''ectronics) was founded on July 18, 1968, by semiconductor pioneers Gordon Moore (of Moore's law) and Robert Noyce ( ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Streaming SIMD Extensions

In computing, Streaming SIMD Extensions (SSE) is a single instruction, multiple data ( SIMD) instruction set extension to the x86 architecture, designed by Intel and introduced in 1999 in their Pentium III series of Central processing units (CPUs) shortly after the appearance of Advanced Micro Devices (AMD's) 3DNow!. SSE contains 70 new instructions (65 unique mnemonics using 70 encodings), most of which work on single precision floating-point data. SIMD instructions can greatly increase performance when exactly the same operations are to be performed on multiple data objects. Typical applications are digital signal processing and graphics processing. Intel's first IA-32 SIMD effort was the MMX instruction set. MMX had two main problems: it re-used existing x87 floating-point registers making the CPUs unable to work on both floating-point and SIMD data at the same time, and it only worked on integers. SSE floating-point instructions operate on a new independent register set ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Timing Attack

In cryptography, a timing attack is a side-channel attack in which the attacker attempts to compromise a cryptosystem by analyzing the time taken to execute cryptographic algorithms. Every logical operation in a computer takes time to execute, and the time can differ based on the input; with precise measurements of the time for each operation, an attacker can work backwards to the input. Finding secrets through timing information may be significantly easier than using cryptanalysis of known plaintext, ciphertext pairs. Sometimes timing information is combined with cryptanalysis to increase the rate of information leakage. Information can leak from a system through measurement of the time it takes to respond to certain queries. How much this information can help an attacker depends on many variables: cryptographic system design, the CPU running the system, the algorithms used, assorted implementation details, timing attack countermeasures, the accuracy of the timing measurements, et ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Instruction (computer Science)

In computer science, an instruction set architecture (ISA), also called computer architecture, is an abstract model of a computer. A device that executes instructions described by that ISA, such as a central processing unit (CPU), is called an ''implementation''. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of a family of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but that are capable of running the same machine code, so th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |