
Structured Data Mining

Structure mining or structured data mining is the process of finding and extracting useful information from semi-structured data sets. Graph mining, sequential pattern mining and molecule mining are special cases of structured data mining.

Description: The growth in the use of semi-structured data has created new opportunities for data mining, which has traditionally been concerned with tabular data sets, reflecting the strong association between data mining and relational databases. Much of the world's interesting and mineable data does not easily fold into relational databases, though a generation of software engineers has been trained to believe this was the only way to handle data, and data mining algorithms have generally been developed only to cope with tabular data. XML, being the most frequent way of representing semi-structured data, is able to represent both tabular data and arbitrary trees. Any particular representation of data to be exchanged between two applications i ...

Molecule Mining

This page describes mining for molecules. Since molecules may be represented by molecular graphs, this is strongly related to graph mining and structured data mining. The main problem is how to represent molecules while discriminating between the data instances. One way to do this is to use chemical similarity metrics, which have a long tradition in the field of cheminformatics. Typical approaches to calculating chemical similarities use chemical fingerprints, but this loses the underlying information about the molecule topology. Mining the molecular graphs directly avoids this problem. So does the inverse QSAR problem, which is preferable for vectorial mappings.

$\mathrm{Coding}(\mathrm{Molecule}_i, \mathrm{Molecule}_{j \neq i})$

Kernel methods:
* Marginalized graph kernel: H. Kashima, K. Tsuda, A. Inokuchi, Marginalized Kernels Between Labeled Graphs, The 20th International Conference on Machine Learning (ICML2003), 2003.
* Optimal assignment kernel: H. Fröhlich, J. K. Wegner, A. Zell, Optimal Assignment Kernels For Attribu ...

Semi-structured Data

Semi-structured data is a form of structured data that does not obey the tabular structure of data models associated with relational databases or other forms of data tables, but nonetheless contains tags or other markers to separate semantic elements and enforce hierarchies of records and fields within the data. Therefore, it is also known as self-describing structure. In semi-structured data, the entities belonging to the same class may have different attributes even though they are grouped together, and the order of the attributes is not important. Semi-structured data has become increasingly common since the advent of the Internet, where full-text documents and databases are no longer the only forms of data and different applications need a medium for exchanging information. In object-oriented databases, one often finds semi-structured data.

Types: XML, other markup languages, email, and EDI are all forms of semi-structured data. OEM (Object Exchange Model) was created prior ...
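
The point that entities of the same class may carry different attributes in any order can be illustrated with a short Python sketch. The XML sample, the `records` helper, and all field names are hypothetical and chosen only for illustration; the tags themselves describe the data, so no fixed table schema is needed.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: two <person> records with different attributes,
# listed in a different order.
DOC = """
<people>
  <person><name>Ada</name><email>ada@example.org</email></person>
  <person><phone>555-0100</phone><name>Grace</name></person>
</people>
"""

def records(xml_text):
    """Turn each child of the root element into a dict of tag -> text."""
    root = ET.fromstring(xml_text)
    return [{field.tag: field.text for field in rec} for rec in root]

people = records(DOC)
# The union of all tags seen; no single record has to contain every field.
fields = sorted({tag for rec in people for tag in rec})
print(people)
print(fields)
```

Forcing these records into one relational table would require a column for every field that appears anywhere, with empty cells for the records that lack it.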

Sequential Pattern Mining

Sequential pattern mining is a topic of data mining concerned with finding statistically relevant patterns between data examples where the values are delivered in a sequence. It is usually presumed that the values are discrete, and thus time series mining is closely related, but usually considered a different activity. Sequential pattern mining is a special case of structured data mining. There are several key traditional computational problems addressed within this field. These include building efficient databases and indexes for sequence information, extracting the frequently occurring patterns, comparing sequences for similarity, and recovering missing sequence members. In general, sequence mining problems can be classified as string mining, which is typically based on string processing algorithms, and itemset mining, which is typically based on association rule learning. Local process models extend sequential pattern mining to more complex patterns that can incl ...
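
As a rough illustration of "extracting the frequently occurring patterns", the following Python sketch counts contiguous patterns of a fixed length and keeps those that occur in enough sequences. It is a deliberate simplification closer to string mining: patterns with gaps are not handled, and the function name, the sample sequences, and the `min_support` parameter are assumptions made for this example.

```python
from collections import Counter

def frequent_ngrams(sequences, n, min_support):
    """Return contiguous length-n patterns found in at least min_support sequences."""
    support = Counter()
    for seq in sequences:
        # Collect each pattern once per sequence, so that the count is the
        # number of sequences containing it (its support).
        seen = {tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)}
        support.update(seen)
    return {p: c for p, c in support.items() if c >= min_support}

# Hypothetical sequences of discrete symbols.
SEQS = ["abcab", "bcab", "cabd"]
result = frequent_ngrams(SEQS, 2, 3)
print(result)
```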

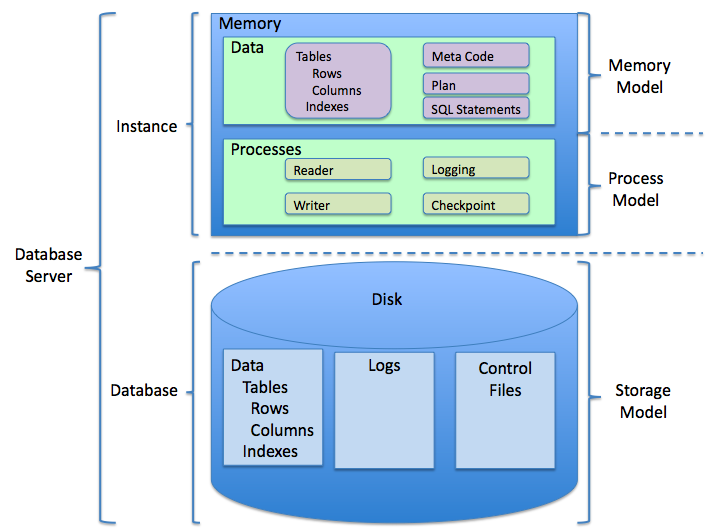

Relational Databases

A relational database is a (most commonly digital) database based on the relational model of data, as proposed by E. F. Codd in 1970. A system used to maintain relational databases is a relational database management system (RDBMS). Many relational database systems offer the option of using SQL (Structured Query Language) for querying and maintaining the database.

History: The term "relational database" was first defined by E. F. Codd at IBM in 1970. Codd introduced the term in his research paper "A Relational Model of Data for Large Shared Data Banks". In this paper and later papers, he defined what he meant by "relational". One well-known definition of what constitutes a relational database system is composed of Codd's 12 rules. However, no commercial implementations of the relational model conform to all of Codd's rules, so the term has gradually come to describe a broader class of database systems, which at a minimum:
1. Present the data to the user as rel ...
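
To make querying and maintaining a database with SQL concrete, here is a minimal sketch using Python's standard `sqlite3` module with an in-memory database. The table and column names are hypothetical; the only data inserted is the author and paper title mentioned above.

```python
import sqlite3

# In-memory database; nothing is written to disk.
conn = sqlite3.connect(":memory:")

# Two hypothetical tables linked by a key column.
conn.execute("CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE paper ("
    "id INTEGER PRIMARY KEY, title TEXT, "
    "author_id INTEGER REFERENCES author(id))"
)
conn.execute("INSERT INTO author VALUES (1, 'E. F. Codd')")
conn.execute(
    "INSERT INTO paper VALUES "
    "(1, 'A Relational Model of Data for Large Shared Data Banks', 1)"
)

# A join combines rows from both tables through the shared key.
rows = conn.execute(
    "SELECT author.name, paper.title "
    "FROM paper JOIN author ON author.id = paper.author_id"
).fetchall()
conn.close()
print(rows)
```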

Artificial Neural Network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals, processes them, and can signal the neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called edges. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically ...
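
The computation performed by a single artificial neuron can be sketched in a few lines of Python. This is a minimal illustration, not a full network: the sigmoid is just one possible choice of non-linear function, and the function names, the `bias` parameter, and the sample weights are assumptions made for this example.

```python
import math

def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs passed through a sigmoid non-linearity."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def threshold_neuron(inputs, weights, threshold):
    """Emit 1 only if the aggregate weighted signal reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

print(neuron_output([0.0, 0.0], [1.0, 1.0]))
print(threshold_neuron([1, 1], [0.6, 0.6], 1.0))
print(threshold_neuron([1, 0], [0.6, 0.6], 1.0))
```

Learning then consists of adjusting `weights` so that the outputs move toward the desired values.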

Ross Quinlan

John Ross Quinlan is a computer science researcher in data mining and decision theory. He has contributed extensively to the development of decision tree algorithms, including inventing the canonical C4.5 and ID3 algorithms. He also contributed to early ILP literature with First Order Inductive Learner (FOIL). He currently runs the company RuleQuest Research, which he founded in 1997.

Education: He received his BSc degree in Physics and Computing from the University of Sydney in 1965 and his computer science doctorate at the University of Washington in 1968. He has held positions at the University of New South Wales, University of Sydney, University of Technology Sydney, and RAND Corporation.

Artificial intelligence: Quinlan is a specialist in artificial intelligence, particularly in machine learning and its application to data mining.

ID3: Ross Quinlan invented the Iterative Dichotomiser 3 (ID3) algorithm, which is used to generate decision trees. ID3 ...

ID3 Algorithm

In decision tree learning, ID3 (Iterative Dichotomiser 3) is an algorithm invented by Ross Quinlan (Quinlan, J. R. 1986. Induction of Decision Trees. Mach. Learn. 1, 1 (Mar. 1986), 81–106) used to generate a decision tree from a dataset. ID3 is the precursor to the C4.5 algorithm, and is typically used in the machine learning and natural language processing domains.

Algorithm: The ID3 algorithm begins with the original set $S$ as the root node. On each iteration of the algorithm, it iterates through every unused attribute of the set $S$ and calculates the entropy $\mathrm{H}(S)$ or the information gain $IG(S)$ of that attribute. It then selects the attribute which has the smallest entropy (or largest information gain) value. The set $S$ is then split or partitioned by the selected attribute to produce subsets of the data. (For example, a node can be split into child nodes based upon the subsets of the population whose ages are less than 50, between 50 and 100, and greater than 100.) The algorit ...
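
The attribute selection step described above can be sketched in Python as follows. This covers only one iteration (choosing the attribute to split on), not the full recursive tree construction; the helper names and the toy data set are hypothetical and were chosen so that the numbers are easy to verify by hand.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(rows, attr, target):
    """Entropy of the target minus the weighted entropy after splitting on attr."""
    base = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in {r[attr] for r in rows}:
        subset = [r[target] for r in rows if r[attr] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder

def best_attribute(rows, attrs, target):
    """Pick the unused attribute with the largest information gain."""
    return max(attrs, key=lambda a: information_gain(rows, a, target))

# Hypothetical toy data: "outlook" fully determines "play", "windy" does not.
ROWS = [
    {"outlook": "sunny", "windy": "no", "play": "no"},
    {"outlook": "sunny", "windy": "yes", "play": "no"},
    {"outlook": "rainy", "windy": "no", "play": "yes"},
    {"outlook": "rainy", "windy": "yes", "play": "yes"},
]
print(best_attribute(ROWS, ["outlook", "windy"], "play"))
```

Here splitting on `outlook` leaves both subsets pure, so its gain equals the full entropy of the target (1 bit), while splitting on `windy` gains nothing.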

Text Mining

Text mining, also referred to as text data mining and similar to text analytics, is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005) we can distinguish between three different perspectives of text mining: information extraction, data mining, and a KDD (Knowledge Discovery in Databases) process. Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and in ...

XPath

XPath (XML Path Language) is an expression language designed to support the query or transformation of XML documents. It was defined by the World Wide Web Consortium (W3C) and can be used to compute values (e.g., strings, numbers, or Boolean values) from the content of an XML document. Support for XPath exists in applications that support XML, such as web browsers, and many programming languages.

Overview: The XPath language is based on a tree representation of the XML document, and provides the ability to navigate around the tree, selecting nodes by a variety of criteria. In popular use (though not in the official specification), an XPath expression is often referred to simply as "an XPath". Originally motivated by a desire to provide a common syntax and behavior model between XPointer and XSLT, subsets of the XPath query language are used in other W3C specifications such as XML Schema, XForms and the Internationalization Tag Set (ITS). XPath has been adopted by a number ...
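
Navigating the document tree and selecting nodes by criteria can be tried out with Python's standard `xml.etree.ElementTree` module. Note the assumptions: this module implements only a limited subset of XPath and returns element nodes rather than computed string or number values, and the sample document and its tag names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample document.
DOC = """
<library>
  <book year="2003"><title>Graphs</title></book>
  <book year="1999"><title>Kernels</title></book>
  <journal><title>Mining</title></journal>
</library>
"""
root = ET.fromstring(DOC)

# ".//title" selects every <title> descendant, at any depth.
all_titles = [t.text for t in root.findall(".//title")]

# "./book/title" follows an explicit path from the root element.
book_titles = [t.text for t in root.findall("./book/title")]

# A predicate in square brackets filters nodes by attribute value.
titles_2003 = [t.text for t in root.findall("./book[@year='2003']/title")]

print(all_titles, book_titles, titles_2003)
```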

Graph Kernel

In structure mining, a graph kernel is a kernel function that computes an inner product on graphs. Graph kernels can be intuitively understood as functions measuring the similarity of pairs of graphs. They allow kernelized learning algorithms such as support vector machines to work directly on graphs, without having to do feature extraction to transform them to fixed-length, real-valued feature vectors. They find applications in bioinformatics, in chemoinformatics (as a type of molecule kernels), and in social network analysis. Concepts of graph kernels have been around since 1999, when D. Haussler introduced convolutional kernels on discrete structures. The term graph kernels was more officially coined in 2002 by R. I. Kondor and J. Lafferty as kernels on graphs, i.e. similarity functions between the nodes of a single graph, with the World Wide Web hyperlink graph as a suggested application. In 2003, Gaertner et al. and Kashima et al. defined kernels betw ...
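
A very simple kernel between graphs can be sketched in Python to show what "an inner product on graphs" means in practice. This is an illustrative edge-label histogram kernel, not one of the kernels from the literature cited above: each graph is mapped to counts of the label pairs at the ends of its edges, and the kernel is the dot product of those counts. The graph encoding and the toy molecules (heavy atoms only) are assumptions made for this example.

```python
from collections import Counter

def edge_features(labels, edges):
    """Count edges by the unordered pair of labels at their endpoints."""
    return Counter(tuple(sorted((labels[u], labels[v]))) for u, v in edges)

def edge_label_kernel(g, h):
    """Inner product of the edge-label count vectors of two graphs."""
    fg, fh = edge_features(*g), edge_features(*h)
    return sum(fg[k] * fh[k] for k in fg.keys() & fh.keys())

# Hypothetical molecular graphs as (vertex labels, edge list).
ETHANOL = ({0: "C", 1: "C", 2: "O"}, [(0, 1), (1, 2)])
METHANOL = ({0: "C", 1: "O"}, [(0, 1)])
PROPANE = ({0: "C", 1: "C", 2: "C"}, [(0, 1), (1, 2)])

print(edge_label_kernel(ETHANOL, METHANOL))
print(edge_label_kernel(ETHANOL, PROPANE))
print(edge_label_kernel(METHANOL, PROPANE))
```

Because the value is a dot product of explicit feature vectors, the function is symmetric and positive semidefinite, which is what a kernelized learner such as a support vector machine requires. The feature vectors are never passed to the learner; only the pairwise kernel values are.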