
Speech Recognition

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers with the main benefit of searchability. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment") where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker dependent". Speech recognition ...

Speaker Recognition

Speaker recognition is the identification of a person from characteristics of voices. It is used to answer the question "Who is speaking?" The term voice recognition can refer to speaker recognition or speech recognition. Speaker verification (also called speaker authentication) contrasts with identification, and speaker recognition differs from speaker diarisation (recognizing when the same speaker is speaking). Recognizing the speaker can simplify the task of translating speech in systems that have been trained on specific voices or it can be used to authenticate or verify the identity of a speaker as part of a security process. Speaker recognition has a history dating back some four decades as of 2019 and uses the acoustic features of speech that have been found to differ between individuals. These acoustic patterns reflect both anatomy and learned behavioral patterns. Verification versus identification There are two major applications of speaker recognition techn ...

Speech Translation

Speech translation is the process by which conversational spoken phrases are instantly translated and spoken aloud in a second language. This differs from phrase translation, which is where the system only translates a fixed and finite set of phrases that have been manually entered into the system. Speech translation technology enables speakers of different languages to communicate. It thus is of tremendous value for humankind in terms of science, cross-cultural exchange and global business. How it works A speech translation system would typically integrate the following three software technologies: automatic speech recognition (ASR), machine translation (MT) and voice synthesis (TTS). The speaker of language A speaks into a microphone and the speech recognition module recognizes the utterance. It compares the input with a phonological model, consisting of a large corpus of speech data from multiple speakers. The input is then converted into a string of words, using dictionary ...
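The three-stage chain described above (ASR, then MT, then TTS) can be sketched as a simple composition of functions. This is only an illustration under stated assumptions: `SpeechTranslator`, `toy_recognize`, `toy_translate`, `toy_synthesize` and `TOY_LEXICON` are hypothetical names, and the toy stages treat UTF-8 bytes as if they were audio so that the example stays runnable without any speech library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SpeechTranslator:
    """Hypothetical wrapper chaining the three stages named in the text."""
    recognize: Callable[[bytes], str]    # ASR: audio in language A -> text in A
    translate: Callable[[str], str]      # MT: text in A -> text in B
    synthesize: Callable[[str], bytes]   # TTS: text in B -> audio in B

    def __call__(self, audio: bytes) -> bytes:
        text_a = self.recognize(audio)
        text_b = self.translate(text_a)
        return self.synthesize(text_b)


# Toy stand-ins (assumptions for illustration, not real engines).
TOY_LEXICON = {"hello": "hola", "world": "mundo"}


def toy_recognize(audio: bytes) -> str:
    # Pretend the "audio" is already a UTF-8 transcript.
    return audio.decode("utf-8")


def toy_translate(text: str) -> str:
    # Word-by-word lookup; unknown words pass through unchanged.
    return " ".join(TOY_LEXICON.get(word, word) for word in text.lower().split())


def toy_synthesize(text: str) -> bytes:
    # Pretend encoding the text produces the spoken output.
    return text.encode("utf-8")


translator = SpeechTranslator(toy_recognize, toy_translate, toy_synthesize)
spoken_output = translator(b"Hello world")
```

Keeping each stage behind a plain callable means any one of them could be swapped for a real engine without touching the other two, which mirrors the modular integration the passage describes.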

Bell Labs

Nokia Bell Labs, originally named Bell Telephone Laboratories (1925–1984), then AT&T Bell Laboratories (1984–1996) and Bell Labs Innovations (1996–2007), is an American industrial research and scientific development company owned by multinational company Nokia. With headquarters located in Murray Hill, New Jersey, the company operates several laboratories in the United States and around the world. Researchers working at Bell Laboratories are credited with the development of radio astronomy, the transistor, the laser, the photovoltaic cell, the charge-coupled device (CCD), information theory, the Unix operating system, and the programming languages B, C, C++, S, SNOBOL, AWK, AMPL, and others. Nine Nobel Prizes have been awarded for work completed at Bell Laboratories. Bell Labs had its origin in the complex corporate organization of the Bell System telephone conglomerate. In the late 19th century, the laboratory began as the Western Electric Engineering De ...

Nippon Telegraph And Telephone

Nippon Telegraph and Telephone, commonly known as NTT, is a Japanese telecommunications company headquartered in Tokyo, Japan. Ranked 55th in the Fortune Global 500, NTT is the fourth largest telecommunications company in the world in terms of revenue, as well as the third largest publicly traded company in Japan after Toyota and Sony, as of June 2022. The company is incorporated pursuant to the NTT Law. The purpose of the company defined by the law is to own all the shares issued by Nippon Telegraph and Telephone East Corporation (NTT East) and Nippon Telegraph and Telephone West Corporation (NTT West) and to ensure proper and stable provision of telecommunications services all over Japan, including remote rural areas, by these companies, as well as to conduct research relating to the telecommunications technologies that will form the foundation for telecommunications. On 1 July 2019, NTT Corporation launched NTT Ltd., an $11 billion de facto holding company business consisting of 28 brands from across NTT ...

Nagoya University

Nagoya University, abbreviated to NU, is a Japanese national research university located in Chikusa-ku, Nagoya. It was the seventh Imperial University in Japan, is one of the first five Designated National Universities, and was selected as a Top Type university of the Top Global University Project by the Japanese government. It is the 3rd highest ranked higher education institution in Japan (84th worldwide). The university is the birthplace of the Sakata School of physics and the Hirata School of chemistry. As of 2021, seven Nobel Prize winners have been associated with Nagoya University, the third most in Japan and Asia behind Kyoto University and the University of Tokyo. History Nagoya University traces its roots back to 1871 when it was the Temporary Medical School/Public Hospital. In 1939 it became Nagoya Imperial University, the last Imperial University of the Japanese Empire. In 1947 it was renamed Nagoya University, and became a Japanese national university. In 2014, according to the ...

Fumitada Itakura

Fumitada Itakura is a Japanese scientist. He did pioneering work in statistical signal processing, and its application to speech analysis, synthesis and coding, including the development of the linear predictive coding (LPC) and line spectral pairs (LSP) methods. Biography Itakura was born in Toyokawa, Aichi Prefecture, Japan. He received undergraduate and graduate degrees from Nagoya University in 1963 and 1965, respectively. In 1966, while studying for his PhD at Nagoya, he developed the earliest concepts for what would later become known as linear predictive coding (LPC), along with Shuzo Saito from Nippon Telegraph and Telephone (NTT). They described an approach to automatic phoneme discrimination that involved the first maximum likelihood approach to speech coding. In 1968, he joined the NTT Musashino Electrical Communication Laboratory in Tokyo. The same year, Itakura and Saito presented the Itakura–Saito distance algorithm. The following year, Itakura and Saito introduced partial correlati ...

Speech Coding

Speech coding is an application of data compression of digital audio signals containing speech. Speech coding uses speech-specific parameter estimation using audio signal processing techniques to model the speech signal, combined with generic data compression algorithms to represent the resulting modeled parameters in a compact bitstream. Some applications of speech coding are mobile telephony and voice over IP (VoIP). The most widely used speech coding technique in mobile telephony is linear predictive coding (LPC), while the most widely used in VoIP applications are the LPC and modified discrete cosine transform (MDCT) techniques. The techniques employed in speech coding are similar to those used in audio data compression and audio coding where knowledge in psychoacoustics is used to transmit only data that is relevant to the human auditory system. For example, in voiceband speech coding, only information in the frequency band 400 to 3500 Hz is transmitted but the reco ...

Linear Predictive Coding

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model. LPC is the most widely used method in speech coding and speech synthesis. It is a powerful speech analysis technique, and a useful method for encoding good quality speech at a low bit rate. Overview LPC starts with the assumption that a speech signal is produced by a buzzer at the end of a tube (for voiced sounds), with occasional added hissing and popping sounds (for voiceless sounds such as sibilants and plosives). Although apparently crude, this source–filter model is actually a close approximation of the reality of speech production. The glottis (the space between the vocal folds) produces the buzz, which is characterized by its intensity (loudness) and frequency (pitch). The vocal tract (the throat and mouth) forms the tub ...
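As a rough illustration of the linear predictive model mentioned above, the sketch below estimates the "tube" filter coefficients from one frame of samples. It assumes the autocorrelation method solved with the Levinson-Durbin recursion, which is one common way to fit an LPC model; the excerpt itself does not name an estimation procedure. The function names and the test filter `[1.3, -0.8]` are illustrative choices, not taken from the source.

```python
def autocorrelation(signal, max_lag):
    """Return r[0..max_lag], the autocorrelation of one frame."""
    n = len(signal)
    return [
        sum(signal[i] * signal[i + lag] for i in range(n - lag))
        for lag in range(max_lag + 1)
    ]


def lpc_coefficients(signal, order):
    """Estimate predictor coefficients a[0..order-1] so that
    x[n] is approximated by sum(a[k] * x[n - 1 - k]).

    Autocorrelation method with the Levinson-Durbin recursion.
    Returns (coefficients, residual_energy).
    """
    r = autocorrelation(signal, order)
    if r[0] == 0.0:
        raise ValueError("frame is silent; LPC is undefined")
    a = [0.0] * order
    error = r[0]
    for i in range(order):
        # Reflection coefficient for model order i + 1.
        acc = r[i + 1] - sum(a[j] * r[i - j] for j in range(i))
        k = acc / error
        updated = a[:]
        updated[i] = k
        for j in range(i):
            updated[j] = a[j] - k * a[i - 1 - j]
        a = updated
        error *= 1.0 - k * k
    return a, error


def all_pole_response(coeffs, length):
    """Impulse response of the all-pole filter defined by coeffs:
    a single excitation pulse shaped by the 'tube'."""
    out = []
    for n in range(length):
        sample = 1.0 if n == 0 else 0.0
        for k, c in enumerate(coeffs):
            if n - 1 - k >= 0:
                sample += c * out[n - 1 - k]
        out.append(sample)
    return out


# Excite a known two-coefficient filter once, then recover it from the output.
frame = all_pole_response([1.3, -0.8], 400)
estimated, residual = lpc_coefficients(frame, order=2)
```

Here `estimated` should come out very close to the filter that generated the frame, which is the sense in which a handful of coefficients can stand in for the spectral envelope of the signal. Real speech frames would typically be windowed first and use a higher order.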

1962 World's Fair

The Century 21 Exposition (also known as the Seattle World's Fair) was a world's fair held April 21, 1962, to October 21, 1962, in Seattle, Washington, United States. Nearly 10 million people attended the fair.

Gunnar Fant

Carl Gunnar Michael Fant (October 8, 1919 – June 6, 2009) was a leading researcher in speech science in general and speech synthesis in particular who spent most of his career as a professor at the Swedish Royal Institute of Technology (KTH) in Stockholm. He was a first cousin of the actors and directors George Fant and Kenne Fant. Gunnar Fant received a Master of Science in Electrical Engineering from KTH in 1945. He specialized in the acoustics of the human voice, measuring formant values, and continued to work in this area at Ericsson and at the Massachusetts Institute of Technology. He also took the initiative of creating a speech communication department at KTH, unusual at the time. Fant's work led to the birth of a new era of speech synthesis with the introduction of powerful and configurable formant synthesizers. In 1960 he published the source-filter model of speech production, which became widely used. In the 1960s, Gunnar Fant's Orator Verbis Electris (OVE) competed w ...

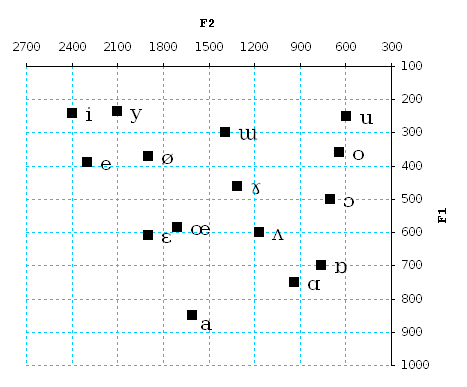

Formants

In speech science and phonetics, a formant is the broad spectral maximum that results from an acoustic resonance of the human vocal tract. In acoustics, a formant is usually defined as a broad peak, or local maximum, in the spectrum. For harmonic sounds, with this definition, the formant frequency is sometimes taken as that of the harmonic that is most augmented by a resonance. The difference between these two definitions resides in whether "formants" characterise the production mechanisms of a sound or the produced sound itself. In practice, the frequency of a spectral peak differs slightly from the associated resonance frequency, except when, by luck, harmonics are aligned with the resonance frequency. A room can be said to have formants characteristic of that particular room, due to its resonances, i.e., to the way sound reflects from its walls and objects. Room formants of this nature reinforce themselves by emphasizing specific frequencies and absorbing others, as exploited ...
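The gap between the resonance frequency and the spectral peak of a harmonic sound can be shown with a small numerical sketch. It assumes a single resonance with a simple bell-shaped gain curve and a source whose harmonics all have equal strength; both are simplifications chosen for illustration, and the function names and numbers are hypothetical.

```python
import math


def resonance_gain(freq_hz, centre_hz, bandwidth_hz):
    """Gain of an idealised single resonance (assumed bell-shaped curve)."""
    detune = (freq_hz - centre_hz) / (bandwidth_hz / 2.0)
    return 1.0 / math.sqrt(1.0 + detune * detune)


def strongest_harmonic(f0_hz, centre_hz, bandwidth_hz, max_hz=4000.0):
    """Frequency of the harmonic of f0 that the resonance boosts the most.

    Assumes every harmonic leaves the source with the same amplitude.
    """
    count = int(max_hz // f0_hz)
    harmonics = [k * f0_hz for k in range(1, count + 1)]
    return max(harmonics, key=lambda f: resonance_gain(f, centre_hz, bandwidth_hz))


# Resonance at 500 Hz; two different fundamental frequencies.
peak_misaligned = strongest_harmonic(120.0, 500.0, 80.0)
peak_aligned = strongest_harmonic(125.0, 500.0, 80.0)
```

With a 120 Hz fundamental no harmonic falls exactly on 500 Hz, so the peak lands on the nearest harmonic instead; with 125 Hz the fourth harmonic coincides with the resonance, which is the aligned case the passage describes as luck.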