
Soar (cognitive Architecture)

Soar is a cognitive architecture, originally created by John Laird, Allen Newell, and Paul Rosenbloom at Carnegie Mellon University. (Rosenbloom continued to serve as co-principal investigator after moving to Stanford University, then to the University of Southern California's Information Sciences Institute.) It is now maintained and developed by John Laird's research group at the University of Michigan. The goal of the Soar project is to develop the fixed computational building blocks necessary for general intelligent agents – agents that can perform a wide range of tasks and encode, use, and learn all types of knowledge to realize the full range of cognitive capabilities found in humans, such as decision making, problem solving, planning, and natural-language understanding. It is both a theory of what cognition is and a computational implementation of that theory. Since its beginnings in 1983 as John Laird’s thesis, it has been widely used by AI researchers to create intelli ...

Cognitive Architecture

A cognitive architecture refers both to a theory about the structure of the human mind and to a computational instantiation of such a theory used in the fields of artificial intelligence (AI) and computational cognitive science. The formalized models can be used to further refine a comprehensive theory of cognition and can serve as useful artificial intelligence programs. Successful cognitive architectures include ACT-R (Adaptive Control of Thought - Rational) and SOAR. The research on cognitive architectures as software instantiations of cognitive theories was initiated by Allen Newell in 1990. The Institute for Creative Technologies defines cognitive architecture as: "hypothesis about the fixed structures that provide a mind, whether in natural or artificial systems, and how they work together – in conjunction with knowledge and skills embodied within the architecture – to yield intelligent behavior in a diversity of complex environments." History: Herbert A. Simon, one of the ...

Unified Theories Of Cognition

''Unified Theories of Cognition'' is a 1990 book by Allen Newell. Newell argues for the need of a set of general assumptions for cognitive models that account for all of cognition: a unified theory of cognition, or cognitive architecture. The research started by Newell on unified theories of cognition represents a crucial point of divergence from the vision of his long-term collaborator, and AI pioneer, Herbert Simon concerning the future of artificial intelligence research. Antonio Lieto recently drew attention to this discrepancy, pointing out that Herbert Simon decided to focus on the construction of single simulative programs (or microtheories/"middle-range" theories) that were considered a sufficient means to enable the generalisation of “unifying” theories of cognition (i.e. according to Simon the "unification" was assumed to be derivable from a body of qualitative generalizations coming from the study of individual simulative programs). New ...

Production (computer Science)

A production or production rule in computer science is a ''rewrite rule'' specifying a symbol substitution that can be recursively performed to generate new symbol sequences. A finite set of productions $P$ is the main component in the specification of a formal grammar (specifically a generative grammar). The other components are a finite set $N$ of nonterminal symbols, a finite set (known as an alphabet) $\Sigma$ of terminal symbols that is disjoint from $N$, and a distinguished symbol $S \in N$ that is the ''start symbol''. In an unrestricted grammar, a production is of the form $u \to v$, where $u$ and $v$ are arbitrary strings of terminals and nonterminals, and $u$ may not be the empty string. If $v$ is the empty string, this is denoted by the symbol $\epsilon$, or $\lambda$ (rather than leaving the right-hand side blank). So productions are members of the cartesian product $V^*NV^* \times V^* = (V^*\setminus\Sigma^*) \times V^*$, where $V := N \cup \Sigma$ is the ''vocabulary'', $^*$ is the Kleene star ...
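As a rough illustration of how productions generate symbol sequences, the sketch below applies rewrite rules breadth-first to a start symbol and collects the strings that contain only terminals. The grammar (S → aSb | ε), the function name `generate`, the one-character-per-symbol encoding, and the length cutoff are all assumptions made for this example; they do not come from the excerpt above.

```python
from collections import deque

# Hypothetical example grammar: N = {"S"}, Sigma = {"a", "b"}, start symbol "S".
# Each production is a pair (u, v) meaning u -> v; "" stands for epsilon.
# Assumption: every symbol is a single character.
PRODUCTIONS = [("S", "aSb"), ("S", "")]
NONTERMINALS = {"S"}


def generate(productions, start, nonterminals, max_len):
    """Enumerate terminal strings of at most max_len symbols derivable from start."""
    seen = {start}
    queue = deque([start])
    results = set()
    while queue:
        form = queue.popleft()
        # A sentential form with no nonterminals left is a generated string.
        if not any(sym in nonterminals for sym in form):
            results.add(form)
            continue
        for u, v in productions:
            # Rewrite every occurrence of the left-hand side u, one at a time.
            idx = form.find(u)
            while idx != -1:
                new_form = form[:idx] + v + form[idx + len(u):]
                terminals = sum(1 for s in new_form if s not in nonterminals)
                # Arbitrary cutoff so that the search terminates in this sketch.
                within_bounds = terminals <= max_len and len(new_form) <= max_len + 2
                if within_bounds and new_form not in seen:
                    seen.add(new_form)
                    queue.append(new_form)
                idx = form.find(u, idx + 1)
    return sorted(results, key=lambda s: (len(s), s))


print(generate(PRODUCTIONS, "S", NONTERMINALS, 4))
```

With this grammar, each application of S → aSb wraps the nonterminal in one more matched pair, and S → ε ends the derivation, so the generated strings have the form a^n b^n.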

Symbolic System

In logic, mathematics, computer science, and linguistics, a formal language consists of words whose letters are taken from an alphabet and are well-formed according to a specific set of rules. The alphabet of a formal language consists of symbols, letters, or tokens that concatenate into strings of the language. Each string concatenated from symbols of this alphabet is called a word, and the words that belong to a particular formal language are sometimes called ''well-formed words'' or ''well-formed formulas''. A formal language is often defined by means of a formal grammar such as a regular grammar or context-free grammar, which consists of its formation rules. In computer science, formal languages are used, among other things, as the basis for defining the grammar of programming languages and formalized versions of subsets of natural languages in which the words of the language represent concepts that are associated with particular meanings or semantics. In computational comple ...
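To make the distinction between "any string over the alphabet" and "a well-formed word of the language" concrete, here is a minimal sketch of a membership test. The chosen language (balanced parentheses), the alphabet, and the function name `is_well_formed` are assumptions picked for illustration, not something the excerpt specifies.

```python
# Hypothetical alphabet for the example language of balanced parentheses.
ALPHABET = {"(", ")"}


def is_well_formed(word):
    """Return True if word uses only ALPHABET symbols and its parentheses balance."""
    depth = 0
    for symbol in word:
        if symbol not in ALPHABET:
            return False  # not even a string over the alphabet
        depth += 1 if symbol == "(" else -1
        if depth < 0:
            return False  # a ")" appeared before its matching "("
    return depth == 0


for candidate in ["(())", "())(", "(a)", ""]:
    print(repr(candidate), is_well_formed(candidate))
```

Every candidate except "(a)" is a string over the alphabet, but only "(())" and the empty word satisfy the formation rule.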

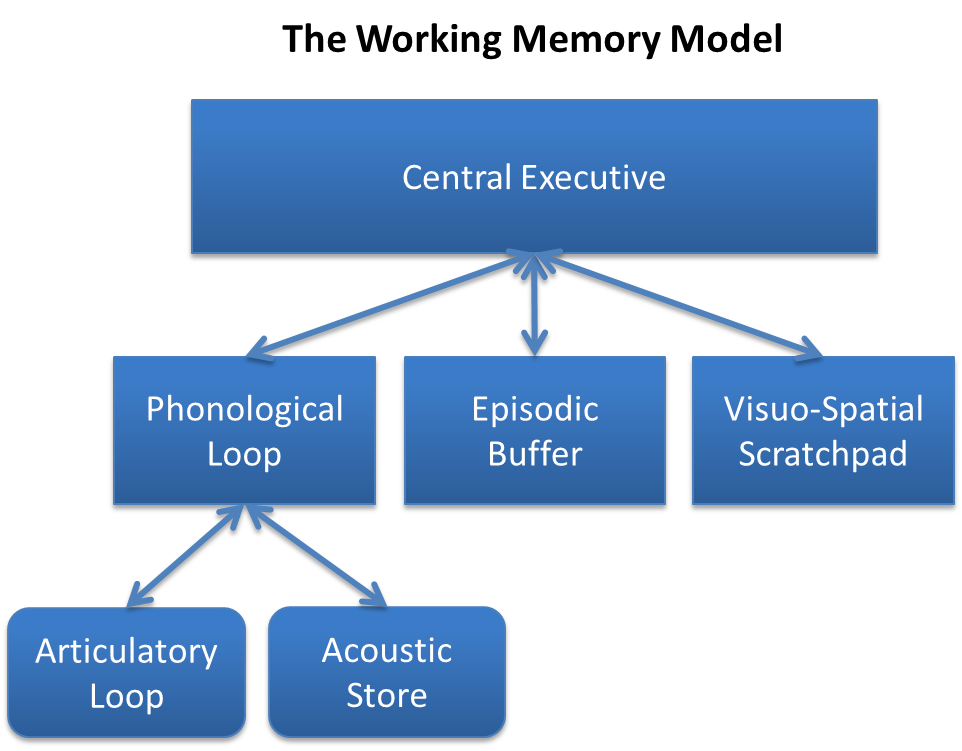

Working Memory

Working memory is a cognitive system with a limited capacity that can hold information temporarily. It is important for reasoning and the guidance of decision-making and behavior. Working memory is often used synonymously with short-term memory, but some theorists consider the two forms of memory distinct, assuming that working memory allows for the manipulation of stored information, whereas short-term memory only refers to the short-term storage of information. Working memory is a theoretical concept central to cognitive psychology, neuropsychology, and neuroscience. History: The term "working memory" was coined by Miller, Galanter, and Pribram, and was used in the 1960s in the context of theories that likened the mind to a computer. In 1968, Atkinson and Shiffrin used the term to describe their "short-term store". What we now call working memory was formerly referred to variously as a "short-term store" or short-term memory, primary memory, immediate memory, operant mem ...

Procedural Memory

Procedural memory is a type of implicit memory (unconscious, long-term memory) which aids the performance of particular types of tasks without conscious awareness of these previous experiences. Procedural memory guides the processes we perform, and most frequently resides below the level of conscious awareness. When needed, procedural memories are automatically retrieved and utilized for execution of the integrated procedures involved in both cognitive and motor skills, from tying shoes, to reading, to flying an airplane. Procedural memories are accessed and used without the need for conscious control or attention. Procedural memory is created through ''procedural learning'', or repeating a complex activity over and over again until all of the relevant neural systems work together to automatically produce the activity. Implicit procedural learning is essential for the development of any motor skill or cognitive activity. History: The difference between procedural and declarati ...

Physical Symbol System

A physical symbol system (also called a formal system) takes physical patterns (symbols), combining them into structures (expressions) and manipulating them (using processes) to produce new expressions. The physical symbol system hypothesis (PSSH) is a position in the philosophy of artificial intelligence formulated by Allen Newell and Herbert A. Simon. They wrote: "A physical symbol system has the necessary and sufficient means for general intelligent action." This claim implies both that human thinking is a kind of symbol manipulation (because a symbol system is necessary for intelligence) and that machines can be intelligent (because a symbol system is sufficient for intelligence). The idea has philosophical roots in Hobbes (who claimed reasoning was "nothing more than reckoning"), Leibniz (who attempted to create a logical calculus of all human ideas), Hume (who thought perception could be reduced to "atomic impressions") and even Kant (who analyzed all experience as controlled by formal rules). The latest version is called the computational theory of mind, associated ...

Intelligence (trait)

Intelligence has been defined in many ways: the capacity for abstraction, logic, understanding, self-awareness, learning, emotional knowledge, reasoning, planning, creativity, critical thinking, and problem-solving. More generally, it can be described as the ability to perceive or infer information, and to retain it as knowledge to be applied towards adaptive behaviors within an environment or context. Intelligence is most often studied in humans but has also been observed in both non-human animals and in plants despite controversy as to whether some of these forms of life exhibit intelligence. Intelligence in computers or other machines is called artificial intelligence. Etymology: The word ''intelligence'' derives from the Latin nouns ''intelligentia'' or ''intellēctus'', which in turn stem from the verb ''intelligere'', to comprehend or perceive. In the Middle Ages, the word ''intellectus'' became the scholarly technical term for understanding, and a translation for t ...

Symbolic Artificial Intelligence

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems (in particular, expert systems), symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems. Symbolic AI was the dominant paradigm of AI research from the mid-1950s until the middle 1990s. Researchers in the 1960s and the 1970s were convinced that symbolic approaches would eventually succeed in creating ...

Daniel Kahneman

Daniel Kahneman (Hebrew: דניאל כהנמן; born March 5, 1934) is an Israeli-American psychologist and economist notable for his work on the psychology of judgment and decision-making, as well as behavioral economics, for which he was awarded the 2002 Nobel Memorial Prize in Economic Sciences (shared with Vernon L. Smith). His empirical findings challenge the assumption of human rationality prevailing in modern economic theory. With Amos Tversky and others, Kahneman established a cognitive basis for common human errors that arise from heuristics and biases, and developed prospect theory. In 2011 he was named by ''Foreign Policy'' magazine in its list of top global thinkers. In the same year his book ''Thinking, Fast and Slow'', which summarizes much of his research, was published and became a best seller. In 2015, ''The Economist'' listed him as the seventh most influential economist in the world. He is professor emeritus of psychology and public affairs at Princeton ...

Hierarchical Task Network

In artificial intelligence, hierarchical task network (HTN) planning is an approach to automated planning in which the dependency among actions can be given in the form of hierarchically structured networks. Planning problems are specified in the hierarchical task network approach by providing a set of tasks, which can be: (1) primitive (initial state) tasks, which roughly correspond to the actions of STRIPS; (2) compound tasks (intermediate state), which can be seen as composed of a set of simpler tasks; (3) goal tasks (goal state), which roughly correspond to the goals of STRIPS, but are more general. A solution to an HTN problem is then an executable sequence of primitive tasks that can be obtained from the initial task network by decomposing compound tasks into their set of simpler tasks, and by inserting ordering constraints. A primitive task is an action that can be executed directly, provided that the state in which it is executed supports its precondition. A compound task is a comple ...
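The decomposition idea described above can be sketched as a small depth-first planner over totally ordered task lists. This is a simplified illustration under stated assumptions, not a full HTN planner: the task names, the `PRIMITIVES` and `METHODS` tables, the set-of-facts state representation, and the function `plan` are all hypothetical, and goal tasks and partial ordering constraints are left out.

```python
# Hypothetical primitive tasks: name -> (preconditions, add effects, delete effects).
PRIMITIVES = {
    "pick_up": ({"hand_empty", "cup_on_table"}, {"holding_cup"},
                {"hand_empty", "cup_on_table"}),
    "put_on_shelf": ({"holding_cup"}, {"cup_on_shelf", "hand_empty"},
                     {"holding_cup"}),
}

# Hypothetical compound tasks: name -> alternative decompositions (ordered subtasks).
METHODS = {
    "store_cup": [["put_on_shelf"], ["pick_up", "put_on_shelf"]],
}


def plan(state, tasks):
    """Return a list of primitive task names that accomplishes tasks, or None."""
    if not tasks:
        return []
    first, rest = tasks[0], tasks[1:]
    if first in PRIMITIVES:
        pre, add, delete = PRIMITIVES[first]
        if not pre <= state:
            return None  # the state does not support the precondition
        tail = plan((state - delete) | add, rest)
        return None if tail is None else [first] + tail
    # Compound task: try each decomposition in turn, backtracking on failure.
    for subtasks in METHODS.get(first, []):
        result = plan(state, subtasks + rest)
        if result is not None:
            return result
    return None


print(plan({"hand_empty", "cup_on_table"}, ["store_cup"]))
```

In the call shown, the first decomposition of `store_cup` fails because nothing is being held, so the planner backtracks to the second one and returns the two-step sequence of primitive tasks.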

Planning

Planning is the process of thinking regarding the activities required to achieve a desired goal. Planning is based on foresight, the fundamental capacity for mental time travel. The evolution of forethought, the capacity to think ahead, is considered to have been a prime mover in human evolution. Planning is a fundamental property of intelligent behavior. It involves the use of logic and imagination to visualise not only a desired end result, but the steps necessary to achieve that result. An important aspect of planning is its relationship to forecasting. Forecasting aims to predict what the future will look like, while planning imagines what the future could look like. Planning according to established principles is a core part of many professional occupations, particularly in fields such as management and business. Once a plan has been developed it is possible to measure and assess progress, efficiency and effectiveness. As circumstances change, plans may need to be modi ...