
Sequential Probability Ratio Test

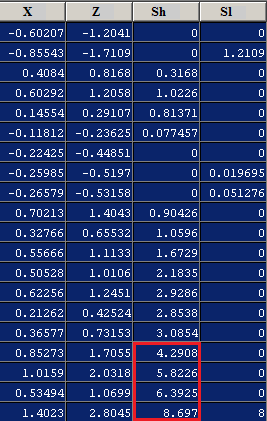

The sequential probability ratio test (SPRT) is a specific sequential hypothesis test, developed by Abraham Wald and later proven to be optimal by Wald and Jacob Wolfowitz. Neyman and Pearson's 1933 result inspired Wald to reformulate it as a sequential analysis problem. The Neyman-Pearson lemma, by contrast, offers a rule of thumb for when all the data is collected (and its likelihood ratio known). While originally developed for use in quality control studies in the realm of manufacturing, SPRT has been formulated for use in the computerized testing of human examinees as a termination criterion.

Theory

As in classical hypothesis testing, SPRT starts with a pair of hypotheses, say $H_0$ and $H_1$ for the null hypothesis and alternative hypothesis respectively. They must be specified as follows:

$$H_0: p = p_0$$

$$H_1: p = p_1$$

The next step is to calculate the cumulative sum of the log-likelihood ratio, $\log \Lambda_i$, as new data arrive: with $S_0 = 0$, then, for $i = 1, 2, \ldots$, $S_i = S_{i-1} + \log$ ...
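The cumulative sum described above can be sketched in Python for Bernoulli observations with success probability $p$. This is a minimal illustration, not a reference implementation: the function name `sprt_bernoulli`, its parameters, and the returned labels are hypothetical, and the stopping thresholds use Wald's standard approximations $\log(\beta/(1-\alpha))$ and $\log((1-\beta)/\alpha)$, which the excerpt above does not reach.

```python
import math


def sprt_bernoulli(observations, p0, p1, alpha=0.05, beta=0.05):
    """Run an SPRT of H0: p = p0 against H1: p = p1 on 0/1 observations.

    alpha and beta are the target type I and type II error rates.
    Returns (decision, samples_used, final_cumulative_sum).
    """
    # Wald's approximate thresholds (an assumption; not stated in the excerpt).
    lower = math.log(beta / (1 - alpha))
    upper = math.log((1 - beta) / alpha)

    s = 0.0  # S_0 = 0
    n = 0
    for n, x in enumerate(observations, start=1):
        # Log-likelihood ratio of one Bernoulli observation.
        if x:
            s += math.log(p1 / p0)
        else:
            s += math.log((1 - p1) / (1 - p0))
        # Stop as soon as the cumulative sum leaves the (lower, upper) band.
        if s >= upper:
            return "accept H1", n, s
        if s <= lower:
            return "accept H0", n, s
    # Data ran out before either threshold was crossed.
    return "continue", n, s
```

With `p0 = 0.5`, `p1 = 0.8`, and both error rates at 0.05, an unbroken run of successes crosses the upper threshold at the seventh observation, while an unbroken run of failures crosses the lower threshold at the fourth.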

Sequential Analysis

In statistics, sequential analysis or sequential hypothesis testing is statistical analysis where the sample size is not fixed in advance. Instead, data are evaluated as they are collected, and further sampling is stopped in accordance with a pre-defined stopping rule as soon as significant results are observed. Thus a conclusion may sometimes be reached at a much earlier stage than would be possible with more classical hypothesis testing or estimation, at consequently lower financial and/or human cost.

History

The method of sequential analysis is first attributed to Abraham Wald with Jacob Wolfowitz, W. Allen Wallis, and Milton Friedman while at Columbia University's Statistical Research Group as a tool for more efficient industrial quality control during World War II. Its value to the war effort was immediately recognised, and led to its receiving a "restricted" classification. At the same time, George Barnard led a group working on optimal stopping in Great Britain. An ...

Slope

In mathematics, the slope or gradient of a line is a number that describes both the *direction* and the *steepness* of the line. Slope is often denoted by the letter *m*; there is no clear answer to the question of why the letter *m* is used for slope, but its earliest use in English appears in O'Brien (1844) who wrote the equation of a straight line as and it can also be found in Todhunter (1888) who wrote it as "*y* = *mx* + *c*". Slope is calculated by finding the ratio of the "vertical change" to the "horizontal change" between (any) two distinct points on a line. Sometimes the ratio is expressed as a quotient ("rise over run"), giving the same number for every two distinct points on the same line. A line that is decreasing has a negative "rise". The line may be practical – as set by a road surveyor, or in a diagram that models a road or a roof either as a description or as a plan. The *steepness*, incline, or grade of a line is measured by the absolu ...
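The "rise over run" ratio described above can be written as a short Python function. This is a minimal sketch: the name `slope` and the choice to raise `ValueError` when the run is zero are illustrative assumptions, not part of the source text.

```python
def slope(p, q):
    """Return the slope of the line through two distinct points p and q.

    Each point is an (x, y) pair. The slope is the vertical change
    divided by the horizontal change.
    """
    (x1, y1), (x2, y2) = p, q
    if x1 == x2:
        # Zero horizontal change: the ratio is undefined
        # (vertical line, or the two points coincide).
        raise ValueError("slope is undefined when the horizontal change is zero")
    return (y2 - y1) / (x2 - x1)
```

Any two distinct points on the same line give the same value: for the line *y* = 2*x* + 1, both `slope((0, 1), (2, 5))` and `slope((1, 3), (3, 7))` evaluate to `2.0`, and a decreasing line such as the one through (0, 0) and (4, -2) gives `-0.5`.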

R (programming Language)

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment within the GNU package, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating syste ...

Addison-Wesley

Addison-Wesley is an American publisher of textbooks and computer literature. It is an imprint of Pearson PLC, a global publishing and education company. In addition to publishing books, Addison-Wesley also distributes its technical titles through the O'Reilly Online Learning e-reference service. The majority of Addison-Wesley's sales derive from the United States (55%) and Europe (22%). The Addison-Wesley Professional imprint produces content including books, eBooks, and video for professional IT workers, including developers, programmers, managers, and system administrators. Classic titles include *The Art of Computer Programming*, *The C++ Programming Language*, *The Mythical Man-Month*, and *Design Patterns*.

History

Lew Addison Cummings and Melbourne Wesley Cummings founded Addison-Wesley in 1942, with the first book published by Addison-Wesley being Massachusetts Institute of Technology professor Francis Weston Sears' *Mechanics*. Its first computer book was Prog ...

Likelihood-ratio Test

In statistics, the likelihood-ratio test assesses the goodness of fit of two competing statistical models based on the ratio of their likelihoods, specifically one found by maximization over the entire parameter space and another found after imposing some constraint. If the constraint (i.e., the null hypothesis) is supported by the observed data, the two likelihoods should not differ by more than sampling error. Thus the likelihood-ratio test tests whether this ratio is significantly different from one, or equivalently whether its natural logarithm is significantly different from zero. The likelihood-ratio test, also known as Wilks test, is the oldest of the three classical approaches to hypothesis testing, together with the Lagrange multiplier test and the Wald test. In fact, the latter two can be conceptualized as approximations to the likelihood-ratio test, and are asymptotically equivalent. In the case of comparing two models each of which has no unknown parameters, use ...

Wald Test

In statistics, the Wald test (named after Abraham Wald) assesses constraints on statistical parameters based on the weighted distance between the unrestricted estimate and its hypothesized value under the null hypothesis, where the weight is the precision of the estimate. Intuitively, the larger this weighted distance, the less likely it is that the constraint is true. While the finite sample distributions of Wald tests are generally unknown, the test statistic has an asymptotic χ²-distribution under the null hypothesis, a fact that can be used to determine statistical significance. Together with the Lagrange multiplier test and the likelihood-ratio test, the Wald test is one of three classical approaches to hypothesis testing. An advantage of the Wald test over the other two is that it only requires the estimation of the unrestricted model, which lowers the computational burden as compared to the likelihood-ratio test. However, a major disadvantage is that (in finite samples) it is not ...

Computerized Classification Test

A computerized classification test (CCT) is, as its name suggests, a test that is administered by computer for the purpose of classifying examinees. The most common CCT is a mastery test where the test classifies examinees as "Pass" or "Fail," but the term also includes tests that classify examinees into more than two categories. While the term may generally be considered to refer to all computer-administered tests for classification, it is usually used to refer to tests that are interactively administered or of variable length, similar to computerized adaptive testing (CAT). Like CAT, variable-length CCTs can accomplish the goal of the test (accurate classification) with a fraction of the number of items used in a conventional fixed-form test. A CCT requires several components:

1. An item bank calibrated with a psychometric model selected by the test designer
2. A starting point
3. An item selection algorithm
4. A termination criterion and scoring procedure

The startin ...

CUSUM

In statistical quality control, the CUSUM (or cumulative sum control chart) is a sequential analysis technique developed by E. S. Page of the University of Cambridge. It is typically used for monitoring change detection. CUSUM was announced in Biometrika, in 1954, a few years after the publication of Wald's sequential probability ratio test (SPRT). E. S. Page referred to a "quality number" $\theta$, by which he meant a parameter of the probability distribution; for example, the mean. He devised CUSUM as a method to determine changes in it, and proposed a criterion for deciding when to take corrective action. When the CUSUM method is applied to changes in mean, it can be used for step detection of a time series. A few years later, George Alfred Barnard developed a visualization method, the V-mask chart, to detect both increases and decreases in $\theta$.

Method

As its name implies, CUSUM involves the calculation of a cumulative sum (which is what makes it "sequential"). Samples fr ...
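The excerpt above breaks off before the method is stated, so the following Python sketch shows only one common form of the idea: a one-sided CUSUM that accumulates deviations above a target mean and signals when the sum exceeds a threshold. The function name `cusum_upper` and the parameters `target`, `slack`, and `threshold` are hypothetical, and this is not necessarily Page's original formulation.

```python
def cusum_upper(samples, target, slack, threshold):
    """Return the index of the first sample at which an upward shift is signalled.

    The running sum adds each sample's deviation above target + slack and is
    reset to zero whenever it would go negative. Returns None if the sum never
    exceeds the threshold.
    """
    s = 0.0
    for i, x in enumerate(samples):
        # Accumulate evidence of an upward shift; never drop below zero.
        s = max(0.0, s + (x - target - slack))
        if s > threshold:
            return i
    return None
```

For example, `cusum_upper([0, 0, 0, 2, 2, 2], target=0, slack=0.5, threshold=2.5)` returns `4`: the sum stays at zero for the first three samples, reaches 1.5 at index 3, and reaches 3.0 at index 4. Larger `slack` and `threshold` values make the detector slower to signal but less prone to false alarms.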

Harold Shipman

Harold Frederick Shipman (14 January 1946 – 13 January 2004), known by the public as Doctor Death and to acquaintances as Fred Shipman, was an English general practitioner and serial killer. He is considered to be one of the most prolific serial killers in modern history, with an estimated 250 victims. On 31 January 2000, Shipman was found guilty of murdering 15 patients under his care. He was sentenced to life imprisonment with a whole life order. Shipman died by suicide, hanging himself in his cell at HM Prison Wakefield, West Yorkshire, on 13 January 2004, the day before his 58th birthday. *The Shipman Inquiry*, a two-year-long investigation of all deaths certified by Shipman, chaired by Dame Janet Smith, examined Shipman's crimes. It revealed Shipman targeted vulnerable elderly people who trusted him as he was their doctor. He killed his victims either by a fatal dose of drugs or prescribing them an abnormal amount. Shipman is the only British doctor to date to h ...

David Spiegelhalter

Sir David John Spiegelhalter (born 16 August 1953) is a British statistician and a Fellow of Churchill College, Cambridge. From 2007 to 2018 he was Winton Professor of the Public Understanding of Risk in the Statistical Laboratory at the University of Cambridge. Spiegelhalter is an ISI highly cited researcher. He is currently Chair of the Winton Centre for Risk and Evidence Communication in the Centre for Mathematical Sciences at Cambridge. On 27 May 2020 he joined the board of the UK Statistics Authority as a non-executive director for a period of three years.

Early life and education

Spiegelhalter was born on 16 August 1953: his name means "Mirror holder" in German. He was educated at Barnstaple Grammar School, a state grammar school in Barnstaple, Devon, from 1963 to 1970. He then studied mathematics at Keble College, Oxford, graduating with a Bachelor of Arts (BA) degree in 1974. He moved to University College London, where he gained his Master of Science (MSc) degr ...

Item Response Theory

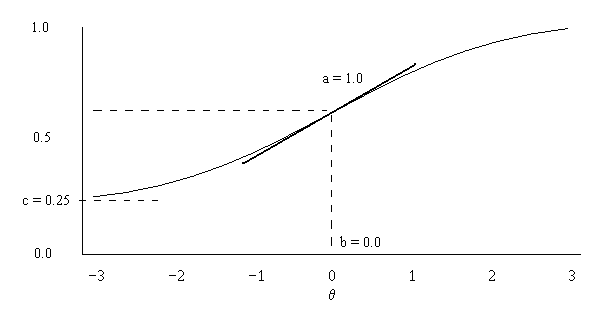

In psychometrics, item response theory (IRT) (also known as latent trait theory, strong true score theory, or modern mental test theory) is a paradigm for the design, analysis, and scoring of tests, questionnaires, and similar instruments measuring abilities, attitudes, or other variables. It is a theory of testing based on the relationship between individuals' performances on a test item and the test takers' levels of performance on an overall measure of the ability that item was designed to measure. Several different statistical models are used to represent both item and test taker characteristics. Unlike simpler alternatives for creating scales and evaluating questionnaire responses, it does not assume that each item is equally difficult. This distinguishes IRT from, for instance, Likert scaling, in which "All items are assumed to be replications of each other or in other words items are considered to be parallel instruments" (A. van Alphen, R. Halfens, A. Hasman and T. Imbos. ...)

Classical Test Theory

Classical test theory (CTT) is a body of related psychometric theory that predicts outcomes of psychological testing such as the difficulty of items or the ability of test-takers. It is a theory of testing based on the idea that a person's observed or obtained score on a test is the sum of a true score (error-free score) and an error score. Generally speaking, the aim of classical test theory is to understand and improve the reliability of psychological tests. *Classical test theory* may be regarded as roughly synonymous with *true score theory*. The term "classical" refers not only to the chronology of these models but also contrasts with the more recent psychometric theories, generally referred to collectively as item response theory, which sometimes bear the appellation "modern" as in "modern latent trait theory". Classical test theory as we know it today was codified by Novick (1966) and described in classic texts such as Lord & Novick (1968) and Allen & Yen (1979/2002). ...