
Probabilistic Relevance Model

The probabilistic relevance model was devised by Stephen E. Robertson and Karen Spärck Jones as a framework for probabilistic models to come. It is a formalism of information retrieval used to derive the ranking functions that search engines and web search engines use to rank matching documents according to their relevance to a given search query. It is a theoretical model that estimates the probability that a document $d_j$ is relevant to a query $q$. The model assumes that this probability of relevance depends on the query and document representations. Furthermore, it assumes that there is a portion of all documents that is preferred by the user as the answer set for query $q$. Such an ideal answer set is called $R$ and should maximize the overall probability of relevance to that user. The prediction is that documents in this set $R$ are relevant to the query, while documents not present in the set are non-relevant. $sim(d_j, q) = \frac{P(R \mid \vec{d}_j)}{P(\bar{R} \mid \vec{d}_j)}$ Related models There are ...
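As a rough illustration of the ranking idea in this entry, the sketch below assumes the similarity is taken as the odds of relevance, $P(R \mid d) / P(\bar{R} \mid d)$, with $P(\bar{R} \mid d) = 1 - P(R \mid d)$. The function name `relevance_odds` and the probability estimates are hypothetical; in practice the probabilities would have to be estimated from data.

```python
def relevance_odds(p_relevant: float) -> float:
    """Odds of relevance: P(R|d) / P(not R|d), where P(not R|d) = 1 - P(R|d)."""
    if not 0.0 <= p_relevant < 1.0:
        raise ValueError("p_relevant must lie in [0, 1)")
    return p_relevant / (1.0 - p_relevant)


# Hypothetical estimates of P(R|d) for three documents and one query.
estimates = {"d1": 0.2, "d2": 0.75, "d3": 0.5}

# Rank documents by decreasing odds of relevance.
ranking = sorted(estimates, key=lambda d: relevance_odds(estimates[d]), reverse=True)
print(ranking)
```

Because the odds are a monotonically increasing function of the probability, ranking by odds gives the same order as ranking by $P(R \mid d)$ itself.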

Stephen Robertson (Computer Scientist)

Stephen Robertson is a British computer scientist. He is known for his work on probabilistic information retrieval together with Karen Spärck Jones and for the Okapi BM25 weighting model. Okapi BM25 has been very successful in experimental search evaluations and has found its way into many information retrieval systems and products, including open source search systems like Lucene, Lemur, Xapian, and Terrier. BM25 is used as one of the most important signals in large web search engines, certainly in Microsoft Bing, and probably in other web search engines too. BM25 is also used in various other Microsoft products such as Microsoft SharePoint and SQL Server. After completing his undergraduate degree in mathematics at Cambridge University, he took an MS at City University, and then worked for ASLIB. He earned his PhD at University College London in 1976 under the renowned statistician and scholar B. C. Brookes. He then returned to City University, working there from 1978 until 1998 in the Dep ...

Karen Spärck Jones

Karen Spärck Jones is a computer science researcher and innovator who pioneered the search engine algorithm known as inverse document frequency (IDF). While many early information scientists and computer engineers were focused on developing programming languages and coding computer systems, Spärck Jones thought it more beneficial to develop information retrieval systems that could understand human language. Background Karen Spärck Jones was born in Huddersfield, Yorkshire, England in 1935 and attended school through university at Girton College in Cambridge. While she did not study computer science in school, she began her research career in a niche organization known as the Cambridge Language Research Unit (CLRU). Through her work at the CLRU, Spärck Jones began pursuing her Ph.D. At the time of submission, her Ph.D. thesis was cast aside as uninspired and lacking original thought but was later published in its entirety as a book. Professional Career ...

Statistical Model

A statistical model is a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population). A statistical model represents, often in considerably idealized form, the data-generating process. When referring specifically to probabilities, the corresponding term is probabilistic model. A statistical model is usually specified as a mathematical relationship between one or more random variables and other non-random variables. As such, a statistical model is "a formal representation of a theory" (Herman Adèr quoting Kenneth Bollen). All statistical hypothesis tests and all statistical estimators are derived via statistical models. More generally, statistical models are part of the foundation of statistical inference. Introduction Informally, a statistical model can be thought of as a statistical assumption (or set of statistical assumptions) with a certain property: that the assumption allows ...

Information Retrieval

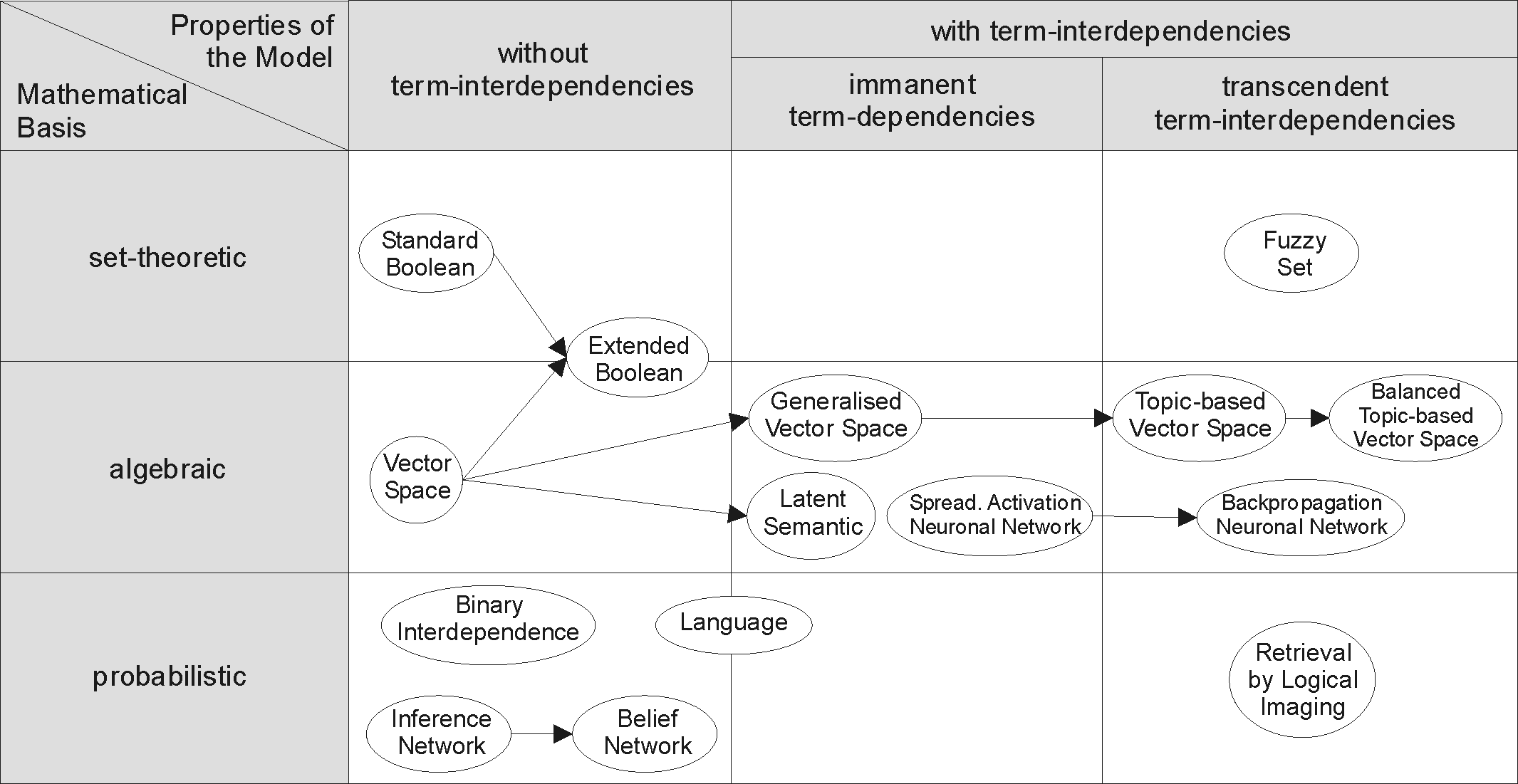

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents, and that stores and manages those documents. Web search engines are the most visible IR applications. Overview An information retrieval process begins when a user or searcher enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In ...

Ranking Function

Ranking query results is one of the fundamental problems in information retrieval (IR), the scientific/engineering discipline behind search engines. Given a query and a collection of documents that match the query, the problem is to rank, that is, sort, the documents according to some criterion so that the "best" results appear early in the result list displayed to the user. Ranking in terms of information retrieval is an important concept in computer science and is used in many different applications such as search engine queries and recommender systems. A majority of search engines use ranking algorithms to provide users with accurate and relevant results. History The notion of page rank dates back to the 1940s and the idea originated in the field of economics. In 1941, Wassily Leontief developed an iterative method of valuing a country's sector based on the importance of other sectors that supplied resources to it. In 1965, Charles H Hubbell at the University of California, San ...
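The ranking problem stated at the start of this entry (sort matching documents by some criterion so the best come first) can be sketched in a few lines. The scoring function `term_overlap` is a deliberately naive, hypothetical criterion chosen only to make the example runnable; real systems use far richer scoring functions.

```python
from typing import Callable, List


def rank(documents: List[str], query: str,
         score: Callable[[str, str], float]) -> List[str]:
    """Return documents sorted so that the highest-scoring ones come first."""
    return sorted(documents, key=lambda doc: score(query, doc), reverse=True)


def term_overlap(query: str, doc: str) -> float:
    """Naive criterion (an assumption): number of distinct query terms in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


docs = ["cats chase mice", "dogs chase cats and mice", "birds sing"]
ranked = rank(docs, "cats mice dogs", term_overlap)
print(ranked)
```

Passing the scoring function as a parameter keeps the sorting step independent of the criterion, so a different ranking function can be swapped in without touching `rank`.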

Search Engine

A search engine is a software system designed to carry out web searches. It searches the World Wide Web in a systematic way for particular information specified in a textual web search query. The search results are generally presented in a line of results, often referred to as search engine results pages (SERPs). When a user enters a query into a search engine, the engine scans its index of web pages to find those that are relevant to the user's query. The results are then ranked by relevancy and displayed to the user. The information may be a mix of links to web pages, images, videos, infographics, articles, research papers, and other types of files. Some search engines also mine data available in databases or open directories. Unlike web directories and social bookmarking sites, which are maintained by human editors, search engines also maintain real-time information by running an algorithm on a web crawler. Any internet-based content that can't be indexed and searc ...


Relevance (Information Retrieval)

In information science and information retrieval, relevance denotes how well a retrieved document or set of documents meets the information need of the user. Relevance may include concerns such as timeliness, authority or novelty of the result. History The concern with the problem of finding relevant information dates back at least to the first publication of scientific journals in the 17th century. The formal study of relevance began in the 20th century with the study of what would later be called bibliometrics. In the 1930s and 1940s, S. C. Bradford used the term "relevant" to characterize articles relevant to a subject (cf. Bradford's law). In the 1950s, the first information retrieval systems emerged, and researchers noted the retrieval of irrelevant articles as a significant concern. In 1958, B. C. Vickery made the concept of relevance explicit in an address at the International Conference on Scientific Information. Since 1958, information scientists have explored and d ...

Binary Independence Model

The Binary Independence Model (BIM) in computing and information science is a probabilistic information retrieval technique. The model makes some simple assumptions to make the estimation of document/query similarity feasible. Definitions The Binary Independence Assumption is that documents are binary vectors. That is, only the presence or absence of terms in documents is recorded. Terms are independently distributed in the set of relevant documents and they are also independently distributed in the set of irrelevant documents. The representation is an ordered set of Boolean variables. That is, the representation of a document or query is a vector with one Boolean element for each term under consideration. More specifically, a document is represented by a vector $d = (x_1, \ldots, x_m)$ where $x_t = 1$ if term $t$ is present in the document $d$ and $x_t = 0$ if it is not. Many documents can have the same vector representation with this simplification. Queries are represented in a similar way. "Independ ...
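The binary representation described in this entry can be sketched as follows. The helper names, the whitespace tokenizer, and the per-term probabilities `p` (term present given relevant) and `u` (term present given non-relevant) are all assumptions made for illustration. The scoring function uses the log-odds term weight commonly derived from the independence assumption; it is a sketch under those assumptions, not a definitive implementation.

```python
import math
from typing import List


def to_binary_vector(text: str, vocabulary: List[str]) -> List[int]:
    """Record only presence (1) or absence (0) of each vocabulary term."""
    tokens = set(text.lower().split())
    return [1 if term in tokens else 0 for term in vocabulary]


def bim_score(doc_vec: List[int], query_vec: List[int],
              p: List[float], u: List[float]) -> float:
    """Sum log-odds weights over terms present in both query and document."""
    score = 0.0
    for x, q, p_t, u_t in zip(doc_vec, query_vec, p, u):
        if x == 1 and q == 1:
            score += math.log((p_t * (1 - u_t)) / (u_t * (1 - p_t)))
    return score


vocabulary = ["probabilistic", "retrieval", "model"]
doc_a = to_binary_vector("A probabilistic model of retrieval", vocabulary)
doc_b = to_binary_vector("Probabilistic probabilistic retrieval model", vocabulary)
doc_c = to_binary_vector("statistical model", vocabulary)
query = to_binary_vector("probabilistic retrieval", vocabulary)

# Hypothetical estimates of P(term present | relevant) and P(term present | non-relevant).
p = [0.8, 0.6, 0.5]
u = [0.2, 0.3, 0.5]

print(doc_a == doc_b)  # different texts, same binary vector
print(bim_score(doc_a, query, p, u), bim_score(doc_c, query, p, u))
```

Here `doc_a` and `doc_b` differ in wording and term frequency but map to the same vector, which is the simplification the entry points out.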


Information Retrieval Techniques

Information is an abstract concept that refers to that which has the power to inform. At the most fundamental level information pertains to the interpretation of that which may be sensed. Any natural process that is not completely random, and any observable pattern in any medium can be said to convey some amount of information. Whereas digital signals and other data use discrete signs to convey information, other phenomena and artifacts such as analog signals, poems, pictures, music or other sounds, and currents convey information in a more continuous form. Information is not knowledge itself, but the meaning that may be derived from a representation through interpretation. Information is often processed iteratively: Data available at one step are processed into information to be interpreted and processed at the next step. For example, in written text each symbol or letter conveys information relevant to the word it is part of, each word conveys information relevant ...