
Principle Of Marginality

In statistics, the principle of marginality, sometimes called the hierarchical principle, is the fact that the average (or main) effects of variables in an analysis are marginal to their interaction effect: the main effect of one explanatory variable captures the effect of that variable averaged over all values of a second explanatory variable whose value influences the first variable's effect. The principle of marginality implies that, in general, it is wrong to test, estimate, or interpret main effects of explanatory variables where the variables interact or, similarly, to model interaction effects but delete main effects that are marginal to them (Fox, J., Regression Notes). While such models are interpretable, they lack applicability, as they ignore the dependence of a variable's effect upon another variable's value. Nelder and Venables (Venables, W. N. (1998), "Exegeses on Linear Models", paper presented to the S-PLUS User's Conference, Washington, DC, 8–9 October 1998) have ...
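One way to see why deleting a marginal main effect is problematic is to check how the fit reacts to a change of origin. The sketch below is only an illustration under assumed conditions: the simulated data, the coefficients, and the helper names `rss`, `full_rss`, and `reduced_rss` are all hypothetical. It fits a model with both main effects and their interaction, and a reduced model that keeps the interaction but drops one main effect, then shifts one explanatory variable by a constant.

```python
import numpy as np

def rss(columns, y):
    """Residual sum of squares of a least-squares fit on the given columns."""
    X = np.column_stack(columns)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)

# Simulated data (assumed for illustration only).
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 2.0 * x1 - 1.5 * x2 + 0.8 * x1 * x2 + rng.normal(scale=0.5, size=n)
ones = np.ones(n)

def full_rss(shift):
    """Intercept, both main effects, and the interaction."""
    z = x1 + shift
    return rss([ones, z, x2, z * x2], y)

def reduced_rss(shift):
    """Interaction kept, but the main effect of x2 deleted."""
    z = x1 + shift
    return rss([ones, z, z * x2], y)

print(full_rss(0.0), full_rss(5.0))        # same fit before and after the shift
print(reduced_rss(0.0), reduced_rss(5.0))  # fit changes with the origin of x1
```

The full model spans the same column space after the shift, so its fit is unchanged. The reduced model's fit depends on an arbitrary choice of origin for `x1`, which is the kind of behaviour the principle warns against.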

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Main Effect

In the design of experiments and analysis of variance, a main effect is the effect of an independent variable on a dependent variable averaged across the levels of any other independent variables. The term is frequently used in the context of factorial designs and regression models to distinguish main effects from interaction effects. In a factorial design analyzed by analysis of variance, a main effect test evaluates hypotheses such as H0, the null hypothesis. Running a hypothesis test for a main effect tests whether there is evidence of an effect of different treatments. However, a main effect test is nonspecific and does not localize specific pairwise comparisons of means (simple effects). A mai ...
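As a small illustration of "averaged across the levels of any other independent variables", the sketch below computes main effects and simple effects from a table of cell means. The numbers are hypothetical, and a balanced 2x2 design (equal cell sizes) is assumed so that plain averages of cell means are appropriate.

```python
import numpy as np

# Hypothetical cell means for a balanced 2x2 factorial design:
# rows are the levels of factor A, columns are the levels of factor B.
cell_means = np.array([[10.0, 20.0],
                       [30.0, 60.0]])

mean_by_a = cell_means.mean(axis=1)   # average over the levels of B
mean_by_b = cell_means.mean(axis=0)   # average over the levels of A

main_effect_a = mean_by_a[1] - mean_by_a[0]
main_effect_b = mean_by_b[1] - mean_by_b[0]

# Simple effects of A: the effect of A at each individual level of B.
simple_effects_of_a = cell_means[1] - cell_means[0]

print(main_effect_a)         # 30.0
print(main_effect_b)         # 20.0
print(simple_effects_of_a)   # [20. 40.]
```

Here the main effect of A (30) is the average of its two simple effects (20 and 40), which shows how a main effect can hide differences between the levels of the other factor.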

Marginal Distribution

In probability theory and statistics, the marginal distribution of a subset of a collection of random variables is the probability distribution of the variables contained in the subset. It gives the probabilities of various values of the variables in the subset without reference to the values of the other variables. This contrasts with a conditional distribution, which gives the probabilities contingent upon the values of the other variables. Marginal variables are those variables in the subset of variables being retained. These concepts are "marginal" because they can be found by summing values in a table along rows or columns, and writing the sum in the margins of the table. The distribution of the marginal variables (the marginal distribution) is obtained by marginalizing (that is, focusing on the sums in the margin) over the distribution of the variables being discarded, and the discarded variables are said to have been marginalized out. The context here is that the theoreti ...
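The row-and-column summing described above can be sketched for a discrete joint distribution. The joint probability table is a made-up example, and the variable names are assumptions for illustration.

```python
import numpy as np

# Hypothetical joint distribution of two discrete variables X and Y:
# rows index the values of X, columns index the values of Y.
joint = np.array([[0.10, 0.20],
                  [0.30, 0.40]])

# Marginalizing out Y: sum each row and write the total in the margin.
marginal_x = joint.sum(axis=1)
# Marginalizing out X: sum each column.
marginal_y = joint.sum(axis=0)

# For contrast, the conditional distribution of Y given the first value of X.
conditional_y_given_x0 = joint[0] / marginal_x[0]

print(marginal_x)
print(marginal_y)
print(conditional_y_given_x0)
```

The marginals ignore the other variable entirely, while the conditional distribution renormalizes a single row of the table.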

Interaction Effect

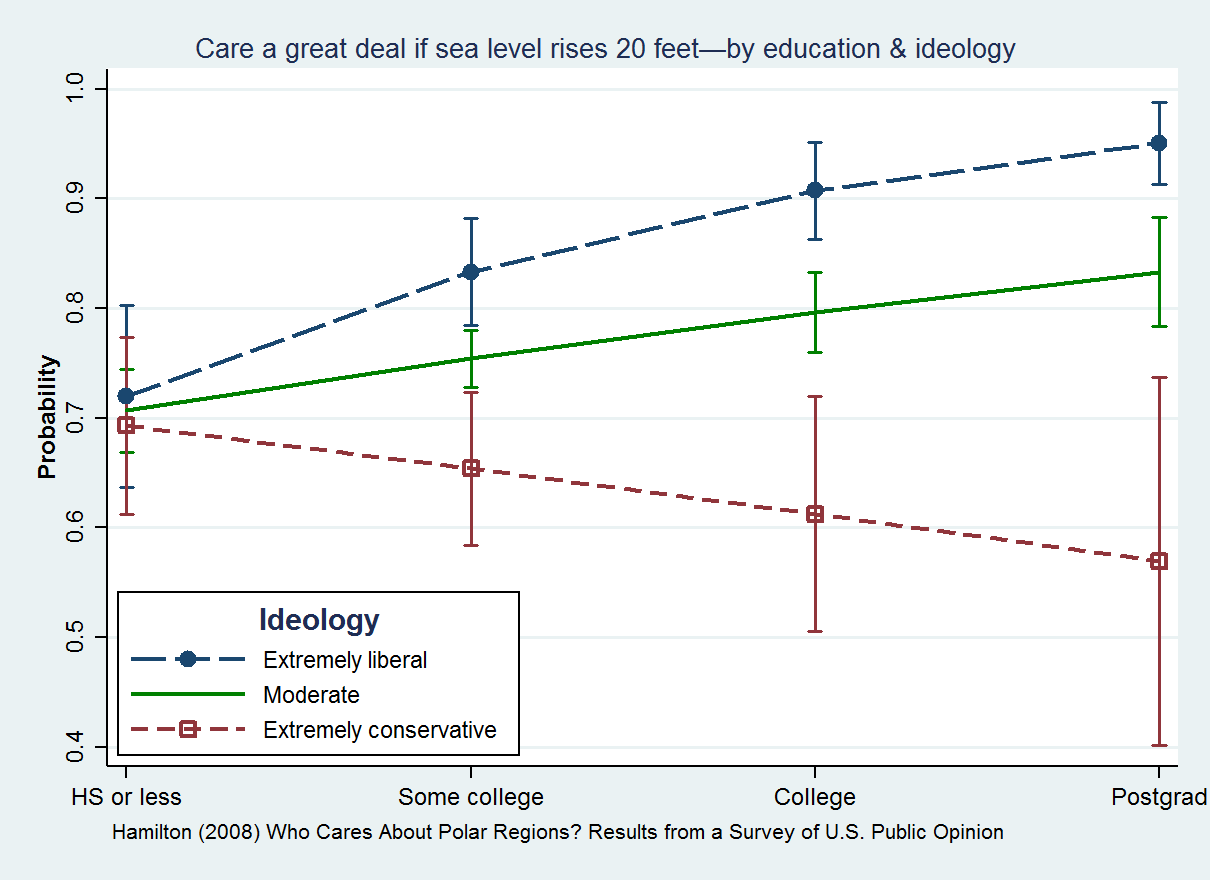

In statistics, an interaction may arise when considering the relationship among three or more variables, and describes a situation in which the effect of one causal variable on an outcome depends on the state of a second causal variable (that is, when the effects of the two causes are not additive). Although commonly thought of in terms of causal relationships, the concept of an interaction can also describe non-causal associations (then also called moderation or effect modification). Interactions are often considered in the context of regression analyses or factorial experiments. The presence of interactions can have important implications for the interpretation of statistical models. If two variables of interest interact, the relationship between each of the interacting variables and a third "dependent variable" depends on the value of the other interacting variable. In practice, this makes it more difficult to predict the consequences of changing the value of a variable ...
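A minimal sketch of non-additivity, assuming a linear model with a product term: the coefficients and the function `predict` are hypothetical and chosen only to make the arithmetic easy to follow.

```python
# Hypothetical coefficients for y = b0 + b1*x1 + b2*x2 + b3*x1*x2.
B0, B1, B2, B3 = 1.0, 2.0, -1.0, 3.0

def predict(x1, x2):
    """Outcome under a model with an x1-by-x2 interaction term."""
    return B0 + B1 * x1 + B2 * x2 + B3 * x1 * x2

# Effect of raising x1 from 0 to 1, evaluated at two states of x2.
effect_when_x2_is_0 = predict(1, 0) - predict(0, 0)
effect_when_x2_is_1 = predict(1, 1) - predict(0, 1)

print(effect_when_x2_is_0)  # 2.0
print(effect_when_x2_is_1)  # 5.0
```

The effect of `x1` equals `B1 + B3 * x2`, so it cannot be stated without also saying where `x2` is held. Setting `B3` to zero would make the two effects equal, which is the additive case.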

Explanatory Variable

A variable is considered dependent if it depends on (or is hypothesized to depend on) an independent variable. Dependent variables are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, on the other hand, are not seen as depending on any other variable in the scope of the experiment in question. Rather, they are controlled by the experimenter. In pure mathematics, a function is a rule for taking an input (in the simplest case, a number or set of numbers) and providing an output (which may also be a number) (Carlson, Robert. A Concrete Introduction to Real Analysis. CRC Press, 2006, p. 183). A symbol that stands for an arbitrary input is called an independent variable, while a symbol that stands for an arbitrary output is called a dependent variable. The most common symbol for the input is x, and the most common symbol for the output is y; the function ...

John Nelder

John Ashworth Nelder (8 October 1924 – 7 August 2010) was a British statistician known for his contributions to experimental design, analysis of variance, computational statistics, and statistical theory. Nelder's work was influential in statistics. While leading research at Rothamsted Experimental Station, Nelder developed and supervised the updating of the statistical software packages GLIM and GenStat. Both packages are flexible high-level programming languages that allow statisticians to formulate linear models concisely. GLIM influenced later environments for statistical computing such as S-PLUS and R. Both GLIM and GenStat have powerful facilities for the analysis of variance for block experiments, an area where Nelder made many contributions. In statistical theory, Nelder proposed the generalized linear model together with Robert Wedderburn. Nelder and Wedderburn formulated generalized linear models as a way of unifying various other statistical mod ...

Dependent Variable

A variable is considered dependent if it depends on (or is hypothesized to depend on) an independent variable. Dependent variables are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, on the other hand, are not seen as depending on any other variable in the scope of the experiment in question. Rather, they are controlled by the experimenter. In pure mathematics, a function is a rule for taking an input (in the simplest case, a number or set of numbers) and providing an output (which may also be a number) (Carlson, Robert. A Concrete Introduction to Real Analysis. CRC Press, 2006, p. 183). A symbol that stands for an arbitrary input is called an independent variable, while a symbol that stands for an arbitrary output is called a dependent variable. The most common symbol for the input is x, and the most common symbol for the output is y; the functio ...

Effect Size

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of one parameter for a hypothetical population, or the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event (such as a heart attack) happening. Effect sizes are a complementary tool for statistical hypothesis testing, and play an important role in power analyses to assess the sample size required for new experiments. Effect sizes are fundamental in meta-analyses, which aim to provide the combined effect size based on data from multiple studies. The cluster of data-analysis methods concerning effect sizes is referred to as estima ...
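Two of the effect sizes listed above, the mean difference and the correlation between two variables, can be computed directly from sample data. The following is a rough sketch on invented numbers; the arrays and variable names are assumptions for illustration only.

```python
import numpy as np

# Hypothetical outcome measurements for two groups.
treated = np.array([5.1, 6.0, 5.8, 6.4])
control = np.array([4.9, 5.2, 5.0, 5.3])

# Raw (unstandardized) mean difference between the groups.
mean_difference = treated.mean() - control.mean()

# Hypothetical paired measurements of two variables.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

# Pearson correlation: the off-diagonal entry of the 2x2 correlation matrix.
correlation = np.corrcoef(x, y)[0, 1]

print(mean_difference)
print(correlation)
```

Both values are sample-based estimates of the corresponding population quantities, in the sense described above.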

Errors And Residuals

In statistics and optimization, errors and residuals are two closely related and easily confused measures of the deviation of an observed value of an element of a statistical sample from its "true value" (not necessarily observable). The error of an observation is the deviation of the observed value from the true value of a quantity of interest (for example, a population mean). The residual is the difference between the observed value and the estimated value of the quantity of interest (for example, a sample mean). The distinction is most important in regression analysis, where the concepts are sometimes called the regression errors and regression residuals and where they lead to the concept of studentized residuals. In econometrics, "errors" are also called disturbances. Suppose there is a series of observations from a univariate distribution and we want to estimate the mean of that distribution (the so-called location model). In this case, the errors ar ...
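The distinction can be made concrete with a simulation, where the true mean is known by construction. This is a sketch under assumed values: the true mean, spread, sample size, and seed are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)

true_mean = 10.0  # known here only because the data are simulated
sample = rng.normal(loc=true_mean, scale=2.0, size=50)

# Errors: deviations from the true (normally unobservable) population mean.
errors = sample - true_mean

# Residuals: deviations from the estimated mean, i.e. the sample mean.
residuals = sample - sample.mean()

print(errors.sum())     # generally not zero
print(residuals.sum())  # zero up to floating-point rounding
```

The residuals necessarily sum to zero because the sample mean is computed from the same observations. The errors carry no such constraint, and each one differs from its residual by the same amount: the sample mean minus the true mean.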

Partial Derivative

In mathematics, a partial derivative of a function of several variables is its derivative with respect to one of those variables, with the others held constant (as opposed to the total derivative, in which all variables are allowed to vary). Partial derivatives are used in vector calculus and differential geometry. The partial derivative of a function $f(x, y, \dots)$ with respect to the variable $x$ is variously denoted by $f_x$, $f'_x$, $\partial_x f$, or $\frac{\partial f}{\partial x}$. It can be thought of as the rate of change of the function in the $x$-direction. Sometimes, for $z = f(x, y, \ldots)$, the partial derivative of $z$ with respect to $x$ is denoted as $\tfrac{\partial z}{\partial x}$. Since a partial derivative generally has the same arguments as the original function, its functional dependence is sometimes explicitly signified by the notation, such as in: $f'_x(x, y, \ldots)$, $\frac{\partial f}{\partial x}(x, y, \ldots)$. The symbol used to denote partial derivatives is ∂. One of the first known uses of this symbol in mathematics is by Marquis de Condorcet from 1770, who used it for partial differ ...
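The idea of varying one variable while holding the others constant can be approximated numerically. The sketch below uses a central finite difference; the helper `partial_derivative`, the step size `h`, and the example function `f` are all assumptions chosen for illustration, not a standard API.

```python
def partial_derivative(f, point, index, h=1e-6):
    """Central-difference estimate of the partial derivative of f at `point`
    with respect to the argument at position `index`; other arguments are fixed."""
    forward = list(point)
    backward = list(point)
    forward[index] += h
    backward[index] -= h
    return (f(*forward) - f(*backward)) / (2 * h)

def f(x, y):
    """Example function: f(x, y) = x^2 * y + y^3."""
    return x**2 * y + y**3

# Analytically, df/dx = 2*x*y and df/dy = x^2 + 3*y^2.
df_dx = partial_derivative(f, (1.0, 2.0), index=0)  # close to 4
df_dy = partial_derivative(f, (1.0, 2.0), index=1)  # close to 13

print(df_dx, df_dy)
```

The estimates agree with the analytic partial derivatives up to a small numerical error that depends on the choice of `h`.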