Pitman Closeness Criterion

In statistical theory, the Pitman closeness criterion, named after E. J. G. Pitman, is a way of comparing two candidate estimators for the same parameter. Under this criterion, estimator A is preferred to estimator B if the probability that estimator A is closer to the true value than estimator B is greater than one half. Here the meaning of ''closer'' is determined by the absolute difference in the case of a scalar parameter, or by the Mahalanobis distance for a vector parameter.
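
To make the criterion concrete, here is a minimal Monte Carlo sketch for the scalar case. It estimates the probability that estimator A lands closer to the true value (in absolute difference) than estimator B by repeatedly simulating samples. The names `pitman_closeness` and `normal_sample`, the comparison of the sample mean with the sample median on normal data, and the sample size and trial count are illustrative assumptions, not part of Pitman's definition. Ties are simply not counted as wins, and the vector case (Mahalanobis distance) is not covered.

```python
import random
import statistics


def pitman_closeness(estimator_a, estimator_b, sampler, theta, n_trials=10_000, seed=0):
    """Monte Carlo estimate of P(|A - theta| < |B - theta|) for a scalar parameter.

    estimator_a and estimator_b map a sample (list of floats) to an estimate;
    sampler(rng) draws one sample from a model whose true parameter is theta.
    Ties are not counted as wins for A (an assumption of this sketch).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(n_trials):
        sample = sampler(rng)
        err_a = abs(estimator_a(sample) - theta)
        err_b = abs(estimator_b(sample) - theta)
        if err_a < err_b:
            wins += 1
    return wins / n_trials


def normal_sample(rng, mu=0.0, sigma=1.0, n=15):
    """Hypothetical model: n independent draws from Normal(mu, sigma^2)."""
    return [rng.gauss(mu, sigma) for _ in range(n)]


if __name__ == "__main__":
    p = pitman_closeness(statistics.mean, statistics.median, normal_sample, theta=0.0)
    print(f"P(mean closer than median) is roughly {p:.3f}")
```

For normally distributed data the estimate should come out above one half, so under this criterion the sample mean would be preferred to the sample median. Because the result is a simulation, it carries Monte Carlo error that shrinks as `n_trials` grows.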

Statistical Theory

The theory of statistics provides a basis for the whole range of techniques, in both study design and data analysis, that are used within applications of statistics. The theory covers approaches to statistical-decision problems and to statistical inference, and the actions and deductions that satisfy the basic principles stated for these different approaches. Within a given approach, statistical theory gives ways of comparing statistical procedures; it can find the best possible procedure within a given context for given statistical problems, or can provide guidance on the choice between alternative procedures. Apart from philosophical considerations about how to make statistical inferences and decisions, much of statistical theory consists of mathematical statistics, and is closely linked to probability theory, to utility theory, and to optimization.

Statistical theory provides an underlying rationale and a consistent basis for the choice of methodology used i ...

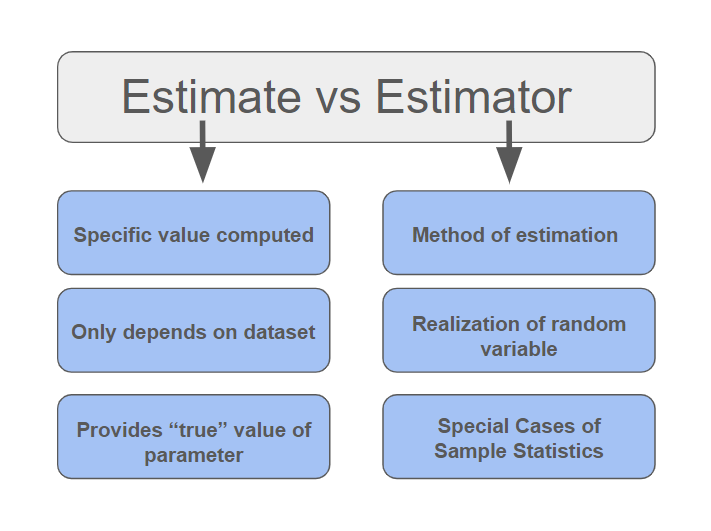

Estimator

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results, in contrast to interval estimators, whose result is a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector-valued or function-valued estimators. ''Estimation theory'' is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. However ...
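
The distinction between point and interval estimators can be illustrated with a short sketch, assuming the data are a simple random sample: the point estimator returns the sample mean, and the interval estimator returns an approximate 95% interval based on the normal approximation. The function names, the example data and the `z=1.96` default are illustrative assumptions; for small samples a Student t quantile would usually replace 1.96.

```python
import math
import statistics


def point_estimate(data):
    """Point estimator: the sample mean, a single value estimating the population mean."""
    return statistics.mean(data)


def interval_estimate(data, z=1.96):
    """Interval estimator: an approximate 95% normal-theory interval for the mean.

    Returns (mean - z * s / sqrt(n), mean + z * s / sqrt(n)), where s is the
    sample standard deviation. Illustrative only; small samples usually call
    for a t quantile instead of z.
    """
    n = len(data)
    centre = statistics.mean(data)
    half_width = z * statistics.stdev(data) / math.sqrt(n)
    return (centre - half_width, centre + half_width)


data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]  # hypothetical measurements
print(point_estimate(data))     # one number
print(interval_estimate(data))  # a range of plausible values
```

Both functions use the same data; they differ only in the form of the result, which is the distinction drawn above.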

Mahalanobis Distance

The Mahalanobis distance is a measure of the distance between a point P and a probability distribution D, introduced by P. C. Mahalanobis in 1936. The mathematical details of Mahalanobis distance first appeared in the ''Journal of The Asiatic Society of Bengal'' in 1936. Mahalanobis's definition was prompted by the problem of identifying the similarities of skulls based on measurements (the earliest work related to similarities of skulls dates from 1922, and a later work from 1927). R. C. Bose later obtained the sampling distribution of Mahalanobis distance under the assumption of equal dispersion. It is a multivariate generalization of the square of the standard score $z = (x - \mu)/\sigma$: how many standard deviations away P is from the mean of D. This distance is zero for P at the mean of D and grows as P moves away from the mean along each principal component axis. If each of these axes ...
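
A minimal sketch of the computation, assuming the distribution D is summarised by a mean vector $\mu$ and a non-singular covariance matrix $S$, evaluates $\sqrt{(x - \mu)^\top S^{-1} (x - \mu)}$. To stay dependency-free it solves the linear system with a small hand-written Gaussian elimination; the helper names `solve` and `mahalanobis` are hypothetical, not a library API.

```python
import math


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def mahalanobis(point, mean, cov):
    """Distance of `point` from a distribution with mean vector `mean` and covariance `cov`."""
    diff = [p - mu for p, mu in zip(point, mean)]
    y = solve(cov, diff)  # y = S^{-1} (x - mu), computed without forming the inverse
    return math.sqrt(sum(d * yi for d, yi in zip(diff, y)))


cov = [[1.0, 0.9], [0.9, 1.0]]  # hypothetical, strongly correlated coordinates
print(mahalanobis([1.0, 1.0], [0.0, 0.0], cov))   # along the correlation: about 1.03
print(mahalanobis([1.0, -1.0], [0.0, 0.0], cov))  # against it: about 4.47
```

In one dimension the squared distance reduces to $z^2$: `mahalanobis([3.0], [1.0], [[4.0]])` gives 1.0, matching $|z|$ with $\mu = 1$ and $\sigma = 2$. The two correlated examples lie at the same Euclidean distance from the mean but differ sharply in Mahalanobis distance, because the covariance matrix rescales each principal direction.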

Journal Of The American Statistical Association

The ''Journal of the American Statistical Association'' is a quarterly peer-reviewed scientific journal published by Taylor & Francis on behalf of the American Statistical Association. It covers work primarily focused on the application of statistics, statistical theory and methods in economic, social, physical, engineering, and health sciences. The journal also includes reviews of books relevant to the field. The journal was established in 1888 as the ''Publications of the American Statistical Association''. It was renamed ''Quarterly Publications of the American Statistical Association'' in 1912, obtaining its current title in 1922.

According to the ''Journal Citation Reports'', the journal has a 2023 impact ...

Biometrika

''Biometrika'' is a peer-reviewed scientific journal published by Oxford University Press for the Biometrika Trust. The editor-in-chief is Paul Fearnhead (Lancaster University). The principal focus of this journal is theoretical statistics. It was established in 1901 and originally appeared quarterly. It changed to three issues per year in 1977 but returned to quarterly publication in 1992.

''Biometrika'' was established in 1901 by Francis Galton, Karl Pearson, and Raphael Weldon to promote the study of biometrics. The history of ''Biometrika'' is covered by Cox (2001). The name of the journal was chosen by Pearson, but Francis Edgeworth insisted that it be spelt with a "k" and not a "c". Since the 1930s, it has been a journal for statistical theory and methodology. Galton's role in the journal was essentially that of a patron; the journal was run by Pearson and Weldon and, after Weldon's death in 1906, by Pearson alone until he died in 1936. In the early days, the Ameri ...

Sankhya (journal)

''Sankhyā: The Indian Journal of Statistics'' is a quarterly peer-reviewed scientific journal on statistics published by the Indian Statistical Institute (ISI). It was established in 1933 by Prasanta Chandra Mahalanobis, founding director of ISI, along the lines of Karl Pearson's ''Biometrika''. Mahalanobis was the founding editor-in-chief. Each volume of ''Sankhya'' consists of four issues: two form Series A, containing articles on theoretical statistics, probability theory, and stochastic processes, and the other two form Series B, containing articles on applied statistics, i.e. applied probability, applied stochastic processes, econometrics, and statistical computing. ''Sankhya'' is considered a "core journal" of statistics by the ''Current Index to Statistics''.

''Sankhya'' was first published in June 1933. In 1961, the journal split into two series: Series A, which focused on mathematical statistics, and Series B, which focused on stat ...

Statistical Distance

In statistics, probability theory, and information theory, a statistical distance quantifies the distance between two statistical objects: two random variables, two probability distributions or samples, or an individual sample point and a population or a wider sample of points. A distance between populations can be interpreted as measuring the distance between two probability distributions, so such distances are essentially measures of distance between probability measures. Where statistical distance measures relate to the differences between random variables, these may have statistical dependence, and hence these distances are not directly related to measures of distance between probability measures. Again, a measure of distance between random variables may relate to the extent of dependence between them, rather than to their individual values. Many statistical distance measures are not metrics, and some are not symmetric. Some types ...
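
The remark that some statistical distances are not symmetric can be checked numerically. The sketch below uses two standard examples chosen here for illustration (they are not named above): the Kullback-Leibler divergence, which is not symmetric and therefore not a metric, and the total variation distance, which is a metric. Both are written for discrete distributions given as probability vectors over the same outcomes; the function names and the example vectors are illustrative assumptions.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D_KL(P || Q) in nats for discrete distributions.

    Terms with p_i = 0 contribute nothing; if some p_i > 0 has q_i = 0 the
    divergence is infinite.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0.0:
            if qi == 0.0:
                return math.inf
            total += pi * math.log(pi / qi)
    return total


def total_variation(p, q):
    """Total variation distance: half the L1 distance between probability vectors."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))


p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, q), kl_divergence(q, p))      # the two directions differ
print(total_variation(p, q), total_variation(q, p))  # the two directions agree
```

Swapping the arguments changes the Kullback-Leibler value but leaves the total variation distance unchanged, which is one concrete way a statistical distance can fail to be a metric.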