|

Neighbourhood Components Analysis

Neighbourhood components analysis is a supervised learning method for Statistical classification, classifying multivariate statistics, multivariate data into distinct classes according to a given metric (mathematics), distance metric over the data. Functionally, it serves the same purposes as the K-nearest neighbors algorithm, and makes direct use of a related concept termed ''stochastic nearest neighbours''. Definition Neighbourhood components analysis aims at "learning" a distance metric by finding a linear transformation of input data such that the average leave-one-out (LOO) classification performance is maximized in the transformed space. The key insight to the algorithm is that a matrix A corresponding to the transformation can be found by defining a differentiable objective function for A, followed by use of an iterative solver such as Conjugate gradient method, conjugate gradient descent. One of the benefits of this algorithm is that the number of classes k can be determin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Supervised Learning

Supervised learning (SL) is a machine learning paradigm for problems where the available data consists of labelled examples, meaning that each data point contains features (covariates) and an associated label. The goal of supervised learning algorithms is learning a function that maps feature vectors (inputs) to labels (output), based on example input-output pairs. It infers a function from ' consisting of a set of ''training examples''. In supervised learning, each example is a ''pair'' consisting of an input object (typically a vector) and a desired output value (also called the ''supervisory signal''). A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples. An optimal scenario will allow for the algorithm to correctly determine the class labels for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Euclidean Distance

In mathematics, the Euclidean distance between two points in Euclidean space is the length of a line segment between the two points. It can be calculated from the Cartesian coordinates of the points using the Pythagorean theorem, therefore occasionally being called the Pythagorean distance. These names come from the ancient Greek mathematicians Euclid and Pythagoras, although Euclid did not represent distances as numbers, and the connection from the Pythagorean theorem to distance calculation was not made until the 18th century. The distance between two objects that are not points is usually defined to be the smallest distance among pairs of points from the two objects. Formulas are known for computing distances between different types of objects, such as the distance from a point to a line. In advanced mathematics, the concept of distance has been generalized to abstract metric spaces, and other distances than Euclidean have been studied. In some applications in statistic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scikit-learn

scikit-learn (formerly scikits.learn and also known as sklearn) is a free software machine learning library for the Python programming language. It features various classification, regression and clustering algorithms including support-vector machines, random forests, gradient boosting, ''k''-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy. Scikit-learn is a NumFOCUS fiscally sponsored project. Overview The scikit-learn project started as scikits.learn, a Google Summer of Code project by French data scientist David Cournapeau. The name of the project stems from the notion that it is a "SciKit" (SciPy Toolkit), a separately developed and distributed third-party extension to SciPy. The original codebase was later rewritten by other developers. In 2010, contributors Fabian Pedregosa, Gaël Varoquaux, Alexandre Gramfort and Vincent Michel, from the French Institute for Research in Computer Science and Auto ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Mlpack

mlpack is a machine learning software library for C++, built on top of the Armadillo library and thensmallennumerical optimization library. mlpack has an emphasis on scalability, speed, and ease-of-use. Its aim is to make machine learning possible for novice users by means of a simple, consistent API, while simultaneously exploiting C++ language features to provide maximum performance and maximum flexibility for expert users. Its intended target users are scientists and engineers. It is open-source software distributed under the BSD license, making it useful for developing both open source and proprietary software. Releases 1.0.11 and before were released under the LGPL license. The project is supported by the Georgia Institute of Technology and contributions from around the world. Miscellaneous features Class templates for GRU, LSTM structures are available, thus the library also supports Recurrent Neural Networks. There are bindings to R, Go, Julia, and Python. Its b ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Spectral Clustering

In multivariate statistics, spectral clustering techniques make use of the spectrum (eigenvalues) of the similarity matrix of the data to perform dimensionality reduction before clustering in fewer dimensions. The similarity matrix is provided as an input and consists of a quantitative assessment of the relative similarity of each pair of points in the dataset. In application to image segmentation, spectral clustering is known as segmentation-based object categorization. Definitions Given an enumerated set of data points, the similarity matrix may be defined as a symmetric matrix A, where A_\geq 0 represents a measure of the similarity between data points with indices i and j. The general approach to spectral clustering is to use a standard clustering method (there are many such methods, ''k''-means is discussed below) on relevant eigenvectors of a Laplacian matrix of A. There are many different ways to define a Laplacian which have different mathematical interpretations, and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Gradient

In vector calculus, the gradient of a scalar-valued differentiable function of several variables is the vector field (or vector-valued function) \nabla f whose value at a point p is the "direction and rate of fastest increase". If the gradient of a function is non-zero at a point , the direction of the gradient is the direction in which the function increases most quickly from , and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to maximize a function by gradient ascent. In coordinate-free terms, the gradient of a function f(\bf) may be defined by: :df=\nabla f \cdot d\bf where ''df'' is the total infinitesimal change in ''f'' for an infinitesimal displacement d\bf, and is seen to be maximal when d\bf is in the direction of the gr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Affine Combination

In mathematics, an affine combination of is a linear combination : \sum_^ = \alpha_ x_ + \alpha_ x_ + \cdots +\alpha_ x_, such that :\sum_^ =1. Here, can be elements ( vectors) of a vector space over a field , and the coefficients \alpha_ are elements of . The elements can also be points of a Euclidean space, and, more generally, of an affine space over a field . In this case the \alpha_ are elements of (or \mathbb R for a Euclidean space), and the affine combination is also a point. See for the definition in this case. This concept is fundamental in Euclidean geometry and affine geometry, because the set of all affine combinations of a set of points forms the smallest subspace containing the points, exactly as the linear combinations of a set of vectors form their linear span. The affine combinations commute with any affine transformation in the sense that : T\sum_^ = \sum_^. In particular, any affine combination of the fixed points of a given affine transformatio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Softmax Activation Function

The softmax function, also known as softargmax or normalized exponential function, converts a vector of real numbers into a probability distribution of possible outcomes. It is a generalization of the logistic function to multiple dimensions, and used in multinomial logistic regression. The softmax function is often used as the last activation function of a neural network to normalize the output of a network to a probability distribution over predicted output classes, based on Luce's choice axiom. Definition The softmax function takes as input a vector of real numbers, and normalizes it into a probability distribution consisting of probabilities proportional to the exponentials of the input numbers. That is, prior to applying softmax, some vector components could be negative, or greater than one; and might not sum to 1; but after applying softmax, each component will be in the interval (0, 1), and the components will add up to 1, so that they can be interpreted as probabi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistical Classification

In statistics, classification is the problem of identifying which of a set of categories (sub-populations) an observation (or observations) belongs to. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient (sex, blood pressure, presence or absence of certain symptoms, etc.). Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or ''features''. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stochastic Gradient Descent

Stochastic gradient descent (often abbreviated SGD) is an iterative method for optimizing an objective function with suitable smoothness properties (e.g. differentiable or subdifferentiable). It can be regarded as a stochastic approximation of gradient descent optimization, since it replaces the actual gradient (calculated from the entire data set) by an estimate thereof (calculated from a randomly selected subset of the data). Especially in high-dimensional optimization problems this reduces the very high computational burden, achieving faster iterations in trade for a lower convergence rate. While the basic idea behind stochastic approximation can be traced back to the Robbins–Monro algorithm of the 1950s, stochastic gradient descent has become an important optimization method in machine learning. Background Both statistical estimation and machine learning consider the problem of minimizing an objective function that has the form of a sum: : Q(w) = \frac\sum_^n ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

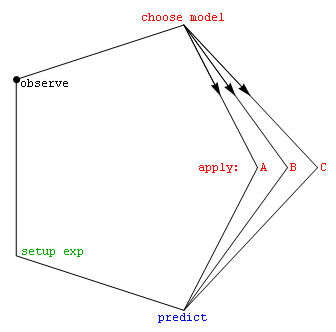

Model Selection

Model selection is the task of selecting a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). state, "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, has said, "How hetranslation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting a few representative models from a large set of computational models for the purpose of decision making or optimization under uncertainty. Introduction In its most basic forms, model selection is one ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |