
Multisync Monitor

A multiple-sync (multisync) monitor, also known as a multiscan or multimode monitor, is a raster-scan analog video monitor that can properly synchronise with multiple horizontal and vertical scan rates. In contrast, fixed frequency monitors can only synchronise with a specific set of scan rates. Multisync monitors are generally used for computer displays, but sometimes for television; the terminology is mostly applied to CRT displays, although the concept applies to other technologies. Multiscan computer monitors appeared during the mid-1980s, offering flexibility as computer video hardware shifted from producing a single fixed scan rate to multiple possible scan rates. "MultiSync" specifically was a trademark of one of NEC's first multiple-sync monitors.

Computers, history: Early home computers output video to ordinary televisions or composite monitors, utilizing television display standards such as NTSC, PAL or SECAM. These display standards had fixed scan rates, and only used the v ...
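The difference between a multisync and a fixed frequency monitor can be sketched as a range check on the incoming scan rates. This is only an illustration: the `SyncRange` class and every numeric range below are hypothetical and do not describe any real monitor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncRange:
    """Scan rates a monitor can lock onto (hypothetical model)."""
    h_min_khz: float
    h_max_khz: float
    v_min_hz: float
    v_max_hz: float

    def accepts(self, h_khz: float, v_hz: float) -> bool:
        # A signal is displayable only if both scan rates fall in range.
        return (self.h_min_khz <= h_khz <= self.h_max_khz
                and self.v_min_hz <= v_hz <= self.v_max_hz)


# Assumed, illustrative ranges: a wide window for the multisync monitor,
# a very narrow one standing in for a fixed frequency monitor.
multisync = SyncRange(15.0, 35.0, 50.0, 80.0)
fixed = SyncRange(31.4, 31.6, 59.0, 61.0)

for h_khz, v_hz in [(15.7, 60.0), (31.5, 60.0)]:
    print(h_khz, v_hz, multisync.accepts(h_khz, v_hz), fixed.accepts(h_khz, v_hz))
```

Modelling the fixed frequency monitor as a narrow window rather than a single exact value is a design choice of this sketch, reflecting that real signals deviate slightly from their nominal rates.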

Raster Scan

A raster scan, or raster scanning, is the rectangular pattern of image capture and reconstruction in television. By analogy, the term is used for raster graphics, the pattern of image storage and transmission used in most computer bitmap image systems. The word "raster" comes from the Latin word "rastrum" (a rake), which is derived from "radere" (to scrape); see also rastrum, an instrument for drawing musical staff lines. The pattern left by the lines of a rake, when drawn straight, resembles the parallel lines of a raster: this line-by-line scanning is what creates a raster. It is a systematic process of covering the area progressively, one line at a time. Although often a great deal faster, it is similar in the most general sense to how one's gaze travels when one reads lines of text. The data to be drawn is stored in an area of memory called the framebuffer. This memory area holds the values for each pixel on the screen. These values are retrieved from the refresh ...
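The line-by-line order described above can be sketched in a few lines of Python. The tiny 3×4 `framebuffer` and the `raster_scan` helper are assumptions made for illustration; a real framebuffer is a region of video memory, not a nested list.

```python
def raster_scan(framebuffer):
    """Yield (row, column, value) in raster order:
    left to right within a line, lines from top to bottom."""
    for y, line in enumerate(framebuffer):
        for x, value in enumerate(line):
            yield y, x, value


# Hypothetical 3-line, 4-pixel-wide framebuffer holding one value per pixel.
framebuffer = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
]

scanned = [value for _, _, value in raster_scan(framebuffer)]
print(scanned)
```

Because the pixel values were numbered in reading order, the scan visits them in ascending sequence, one complete line at a time.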

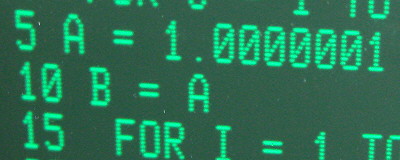

IBM Monochrome Display Adapter

The Monochrome Display Adapter (MDA, also MDA card, Monochrome Display and Printer Adapter, MDPA) is IBM's standard video display card and computer display standard for the IBM PC introduced in 1981. The MDA does not have any pixel-addressable graphics modes, only a single monochrome text mode which can display 80 columns by 25 lines of high resolution text characters or symbols useful for drawing forms.

Hardware design: The original IBM MDA was an 8-bit ISA card with a Motorola 6845 display controller, 4 KB of RAM, a DE-9 output port intended for use with an IBM monochrome monitor, and a parallel port for attachment of a printer, avoiding the need to purchase a separate card.

Capabilities: The MDA was based on the IBM System/23 Datamaster's display system, and was intended to support business and word processing use with its sharp, high-resolution characters. Each character is rendered in a box of 9×14 pixels, of which 7×11 depicts the character itself and t ...
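The figures quoted above fit together arithmetically, as the following sketch shows. The two-bytes-per-cell layout (a character code plus an attribute byte) is an assumption not stated in the summary above; the other constants come from the text.

```python
COLUMNS, ROWS = 80, 25             # text mode dimensions from the summary
CELL_WIDTH, CELL_HEIGHT = 9, 14    # character box in pixels
BYTES_PER_CELL = 2                 # assumption: character code + attribute byte

pixel_width = COLUMNS * CELL_WIDTH                    # 80 * 9
pixel_height = ROWS * CELL_HEIGHT                     # 25 * 14
text_buffer_bytes = COLUMNS * ROWS * BYTES_PER_CELL   # one full screen of text
fits_in_4kb = text_buffer_bytes <= 4 * 1024

print(pixel_width, pixel_height, text_buffer_bytes, fits_in_4kb)
```

Under that assumption, one screen of text occupies 4,000 bytes, which fits within the card's 4 KB of RAM, and the character grid corresponds to a 720×350 pixel raster.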

Video Scaler

A video scaler is a system which converts video signals from one display resolution to another; typically, scalers are used to convert a signal from a lower resolution (such as 480p standard definition) to a higher resolution (such as 1080i high definition), a process known as "upconversion" or "upscaling" (by contrast, converting from high to low resolution is known as "downconversion" or "downscaling"). Video scalers are typically found inside consumer electronics devices such as televisions, video game consoles, and DVD or Blu-ray players, but can also be found in other AV equipment (such as video editing and television broadcasting equipment). Video scalers can also be completely separate devices, often providing simple video switching capabilities. These units are commonly found as part of home theatre or projected presentation systems. They are often combined with other video processing devices or algorithms to create a video processor that improves the apparent defi ...
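As a rough illustration of resolution conversion, the sketch below uses nearest-neighbour sampling, which is the simplest possible technique and not necessarily what any particular scaler implements. The `scale_nearest` function and the tiny test image are hypothetical.

```python
def scale_nearest(image, new_width, new_height):
    """Resize a 2D list of pixel values with nearest-neighbour sampling.

    Each output pixel copies the source pixel whose position maps to it;
    no filtering or interpolation is performed.
    """
    old_height = len(image)
    old_width = len(image[0])
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


small = [[1, 2],
         [3, 4]]
large = scale_nearest(small, 4, 4)    # upscaling: 2x2 -> 4x4
restored = scale_nearest(large, 2, 2)  # downscaling: 4x4 -> 2x2
print(large)
print(restored)
```

The same function handles both upscaling and downscaling because the source index is computed from the ratio of the two resolutions.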

Fixed Pixel Display

Fixed pixel displays are display technologies such as LCD and plasma that use a fixed matrix of pixels with a set number of pixels in each row and column. With such displays, adjusting (scaling) to different aspect ratios because of different input signals requires complex processing. In contrast, a CRT's electronics "paint" the screen with the required number of pixels horizontally and vertically. CRTs can be designed to more easily accommodate a wide range of inputs (VGA, XVGA, NTSC, HDTV, etc.).

Microprocessor

A microprocessor is a computer processor where the data processing logic and control is included on a single integrated circuit, or a small number of integrated circuits. The microprocessor contains the arithmetic, logic, and control circuitry required to perform the functions of a computer's central processing unit. The integrated circuit is capable of interpreting and executing program instructions and performing arithmetic operations. The microprocessor is a multipurpose, clock-driven, register-based, digital integrated circuit that accepts binary data as input, processes it according to instructions stored in its memory, and provides results (also in binary form) as output. Microprocessors contain both combinational logic and sequential digital logic, and operate on numbers and symbols represented in the binary number system. The integration of a whole CPU onto a single or a few integrated circuits using Very-Large-Scale Integration (VLSI) greatly reduced the c ...

Coordinated Video Timings

Coordinated Video Timings (CVT; VESA-2013-3 v1.2) is a standard by VESA which defines the timings of the component video signal. Initially intended for use by computer monitors and video cards, the standard made its way into consumer televisions. The parameters defined by the standard include horizontal blanking and vertical blanking intervals, horizontal frequency and vertical frequency (collectively, pixel clock rate or video signal bandwidth), and horizontal/vertical sync polarity. The standard was adopted in 2002 and superseded the Generalized Timing Formula.

Reduced blanking: CVT timings include the necessary pauses in picture data (known as "blanking intervals") to allow CRT displays to reposition their electron beam at the end of each horizontal scan line, as well as the vertical repositioning necessary at the end of each frame. CVT also specifies a mode ("CVT-R") which significantly reduces these blanking intervals (to a period insufficient for CRT displays t ...

Generalized Timing Formula

Generalized Timing Formula is a standard by VESA which defines exact parameters of the component video signal for analogue VGA display interface. The video parameters defined by the standard include horizontal blanking (retrace) and vertical blanking intervals, horizontal frequency and vertical frequency (collectively, pixel clock rate or video signal bandwidth), and horizontal/vertical sync polarity. Unlike predefined discrete modes (VESA DMT), any mode in a range can be produced using a formula by GTF. A GTF-compliant display is expected to calculate the blanking intervals from the signal frequencies, producing a properly centered image. At the same time, a compliant graphics card is expected to use the calculation to produce a signal that will work on the display, using either a GTF default formula for then-ordinary CRT displays or a custom formula provided via EDID signaling. These parameters are used by the XFree86 Modeline, for example. This video timing standard ...
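The relationship between pixel clock, horizontal frequency and vertical frequency can be sketched as follows. This is not the GTF or CVT formula itself, only the basic arithmetic those standards build on. The example numbers are the commonly cited 640×480 VGA timings (25.175 MHz pixel clock, 800 total pixels per line, 525 total lines per frame), used here as an illustrative assumption; the function name is hypothetical.

```python
def scan_rates(pixel_clock_hz, h_total, v_total):
    """Derive scan rates from a pixel clock and total frame dimensions.

    h_total and v_total include the blanking intervals, not just the
    visible pixels and lines.
    """
    h_freq_hz = pixel_clock_hz / h_total   # scan lines drawn per second
    v_freq_hz = h_freq_hz / v_total        # frames drawn per second
    return h_freq_hz, v_freq_hz


# Assumed example: 640x480 visible, 800x525 total including blanking.
h_freq, v_freq = scan_rates(25_175_000, 800, 525)
print(f"horizontal: {h_freq / 1000:.3f} kHz, vertical: {v_freq:.2f} Hz")
```

Reducing the blanking intervals, as CVT-R does, lowers the totals, so the same refresh rate can be reached with a lower pixel clock.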

VESA BIOS Extensions

VESA BIOS Extensions (VBE) is a VESA standard, currently at version 3, that defines the interface that can be used by software to access compliant video boards at high resolutions and bit depths. This is opposed to the "traditional" int 10h BIOS calls, which are limited to resolutions of 640×480 pixels with 16 color (4-bit) depth or less. VBE is made available through the video card's BIOS, which installs some interrupt vectors pointing to itself during boot-up. Most newer cards implement the more capable VBE 3.0 standard. Older versions of VBE provide only a real mode interface, which cannot be used without a significant performance penalty from within protected mode operating systems. Consequently, the VBE standard has almost never been used for writing a video card's drivers; each vendor has thus had to invent a proprietary protocol for communicating with its own video card. Despite this, it is common for a driver to thunk out to the real mode interrupt in order to initia ...

Super VGA

Super VGA (SVGA) is a broad term that covers a wide range of computer display standards that extended IBM's VGA specification. When used as shorthand for a resolution, as VGA and XGA often are, SVGA refers to a resolution of 800×600.

History: In the late 1980s, after the release of IBM's VGA, third-party manufacturers began making graphics cards based on its specifications with extended capabilities. As these cards grew in popularity they began to be referred to as "Super VGA." This term was not an official standard, but a shorthand for enhanced VGA cards which had become common by 1988. One card that explicitly used the term was Genoa's SuperVGA HiRes. Super VGA cards broke compatibility with the IBM VGA standard, requiring software developers to provide specific display drivers and implementations for each card their software could operate on. Initially, the heavy restrictions this placed on software developers slowed the uptake of Super VGA cards, which motivated VESA t ...

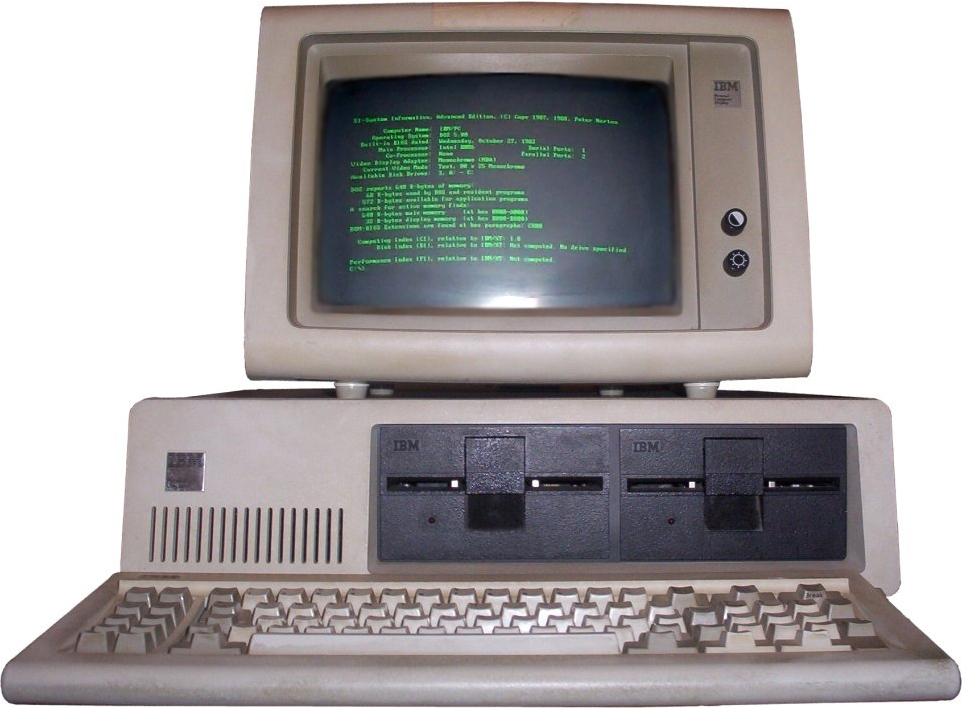

Video Graphics Array

Video Graphics Array (VGA) is a video display controller and accompanying de facto graphics standard, first introduced with the IBM PS/2 line of computers in 1987, which became ubiquitous in the PC industry within three years. The term can now refer to the computer display standard, the 15-pin D-subminiature VGA connector, or the 640×480 resolution characteristic of the VGA hardware. VGA was the last IBM graphics standard to which the majority of PC clone manufacturers conformed, making it the lowest common denominator that virtually all post-1990 PC graphics hardware can be expected to implement. IBM intended to supersede VGA with the Extended Graphics Array (XGA) standard, but failed. Instead, VGA was adapted into many extended forms by third parties, collectively known as Super VGA, then gave way to custom graphics processing units which, in addition to their proprietary interfaces and capabilities, continue to implement common VGA graphics modes and interfaces to ...

Enhanced Graphics Adapter

The Enhanced Graphics Adapter (EGA) is an IBM PC graphics adapter and de facto computer display standard from 1984 that superseded the CGA standard introduced with the original IBM PC, and was itself superseded by the VGA standard in 1987. In addition to the original EGA card manufactured by IBM, many compatible third-party cards were manufactured, and EGA graphics modes continued to be supported by VGA and later standards.

History: EGA was introduced in October 1984 by IBM, shortly after its new PC/AT ("High-Resolution Standard Is Latest Step in DOS Graphics Evolution", InfoWorld, June 26, 1989, p. 48; "News Briefs, Big Blue Turns Colors", InfoWorld, October 8, 1984). The EGA could be installed in previously released IBM PCs, but required a ROM upgrade on the mainboard. Chips and Technologies' first product, announced in September 1985, was a four-chip EGA chipset that handled the functions of 19 of IBM's proprietary chips on the original Enhanced Graphics Adapter. By tha ...

Color Graphics Adapter

The Color Graphics Adapter (CGA), originally also called the Color/Graphics Adapter or IBM Color/Graphics Monitor Adapter, introduced in 1981, was IBM's first color graphics card for the IBM PC and established a de facto computer display standard.

Hardware design: The original IBM CGA graphics card was built around the Motorola 6845 display controller, came with 16 kilobytes of video memory built in, and featured several graphics and text modes. The highest display resolution of any mode was 640×200, and the highest color depth supported was 4-bit (16 colors). The CGA card could be connected either to a direct-drive CRT monitor using a 4-bit digital (TTL) RGBI interface, such as the IBM 5153 color display, or to an NTSC-compatible television or composite video monitor via an RCA connector. The RCA connector provided only baseband video, so to connect the ...