
Logic Error

In computer programming, a logic error is a bug in a program that causes it to operate incorrectly, but not to terminate abnormally (or crash). A logic error produces unintended or undesired output or other behaviour, although it may not immediately be recognized as such. Logic errors occur in both compiled and interpreted languages. Unlike a program with a syntax error, a program with a logic error is a valid program in the language, though it does not behave as intended. Often the only clue to the existence of logic errors is the production of wrong solutions, though static analysis may sometimes spot them. Debugging logic errors One of ...
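As a minimal sketch of the idea, the Python snippet below contrasts a function containing a logic error with a corrected version. The function names `average_buggy` and `average` are hypothetical and chosen only for illustration. Both functions are valid programs and neither raises an error; only the wrong result reveals the bug.

```python
def average_buggy(a, b):
    # Logic error: division binds tighter than addition,
    # so this evaluates a + (b / 2) instead of the mean.
    return a + b / 2


def average(a, b):
    # Parentheses force the addition to happen before the division.
    return (a + b) / 2


print(average_buggy(4, 8))  # 8.0: runs without complaint, but is not the mean
print(average(4, 8))        # 6.0
```

Because the buggy version is syntactically valid, neither the interpreter nor a compiler in a comparable language would reject it; comparing the output against a known answer is what exposes the mistake.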

Computer Programming

Computer programming is the process of performing a particular computation (or more generally, accomplishing a specific computing result), usually by designing and building an executable computer program. Programming involves tasks such as analysis, generating algorithms, profiling algorithms' accuracy and resource consumption, and the implementation of algorithms (usually in a chosen programming language, commonly referred to as coding). The source code of a program is written in one or more languages that are intelligible to programmers, rather than machine code, which is directly executed by the central processing unit. The purpose of programming is to find a sequence of instructions that will automate the performance of a task (which can be as complex as an operating system) on a computer, often for solving a given problem. Proficient programming thus usually requires expertise in several different subjects, including knowledge of the application domain, specialized algorit ...

C (programming Language)

C (pronounced like the letter c) is a general-purpose computer programming language. It was created in the 1970s by Dennis Ritchie, and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems, device drivers, and protocol stacks, though decreasingly for application software. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers avail ...

Programming Language Theory

Programming language theory (PLT) is a branch of computer science that deals with the design, implementation, analysis, characterization, and classification of formal languages known as programming languages. Programming language theory is closely related to other fields including mathematics, software engineering, and linguistics. There are a number of academic conferences and journals in the area. History In some ways, the history of programming language theory predates even the development of programming languages themselves. The lambda calculus, developed by Alonzo Church and Stephen Cole Kleene in the 1930s, is considered by some to be the world's first programming language, even though it was intended to model computation rather than being a means for programmers to describe algorithms to a computer system. Many modern functional programming languages have been described as providing a "thin veneer" over the lambda calculus, and many are easily described in ...

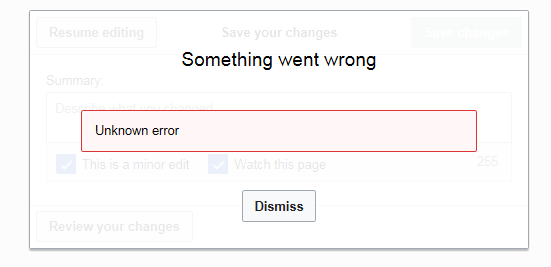

Computer Errors

An error message is information displayed when an unforeseen problem occurs, usually on a computer or other device. On modern operating systems with graphical user interfaces, error messages are often displayed using dialog boxes. Error messages are used when user intervention is required, to indicate that a desired operation has failed, or to relay important warnings (such as warning a computer user that they are almost out of hard disk space). Error messages are seen widely throughout computing, and are part of every operating system or computer hardware device. Proper design of error messages is an important topic in usability and other fields of human–computer interaction. Common error messages The following error messages are commonly seen by modern computer users. Access denied: This error occurs if the user doesn't have privileges to a file, or if it has been locked by some program or user. Device not ready: This error most often occurs when there is no floppy disk (or a b ...

Off-by-one Error

An off-by-one error or off-by-one bug (known by acronyms OBOE, OBO, OB1 and OBOB) is a logic error involving the discrete equivalent of a boundary condition. It often occurs in computer programming when an iterative loop iterates one time too many or too few. This problem could arise when a programmer makes mistakes such as using "is less than or equal to" where "is less than" should have been used in a comparison, or fails to take into account that a sequence starts at zero rather than one (as with array indices in many languages). This can also occur in a mathematical context. Cases Looping over arrays Consider an array of items, where items m through n (inclusive) are to be processed. How many items are there? An intuitive answer may be n − m, but that is off by one, exhibiting a fencepost error; the correct answer is (n − m) + 1. For this reason, ranges in computing are often represented by half-open intervals; the r ...
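The fencepost count described above can be checked with a short Python sketch. The helper `count_inclusive` and the sample values of `m` and `n` are hypothetical, chosen only for illustration. Python slices are half-open, so the upper bound must be `n + 1` to include item `n`.

```python
def count_inclusive(m, n):
    # Number of items from index m through index n, both included.
    return (n - m) + 1


items = list(range(10))
m, n = 3, 7

# Off by one: the slice stops before index n, so item n is skipped.
too_few = items[m:n]

# Half-open interval [m, n + 1) covers items m through n inclusive.
correct = items[m:n + 1]

print(len(too_few))           # 4, which equals n - m
print(len(correct))           # 5, which equals (n - m) + 1
print(count_inclusive(m, n))  # 5
```

With a half-open interval the length is simply the upper bound minus the lower bound, which is one reason the convention helps avoid this class of mistake.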

Operator Precedence In C

This is a list of operators in the C and C++ programming languages. All the operators listed exist in C++; the column "Included in C" states whether an operator is also present in C. Note that C does not support operator overloading. When not overloaded, for the operators &&, ||, and , (the comma operator), there is a sequence point after the evaluation of the first operand. C++ also contains the type conversion operators const_cast, static_cast, dynamic_cast, and reinterpret_cast. The formatting of these operators means that their precedence level is unimportant. Most of the operators available in C and C++ are also available in other C-family languages such as C#, D, Java, Perl, and PHP with the same precedence, associativity, and semantics. Table For the purposes of these tables, a, b, and c represent valid values (literals, values from variables, or return value), object names, or lvalues, as appropriate. R, S and T stand for any type(s), and K for a class type or e ...

Arithmetic Mean

In mathematics and statistics, the arithmetic mean or arithmetic average, or just the mean or the average (when the context is clear), is the sum of a collection of numbers divided by the count of numbers in the collection. The collection is often a set of results of an experiment or an observational study, or frequently a set of results from a survey. The term "arithmetic mean" is preferred in some contexts in mathematics and statistics, because it helps distinguish it from other means, such as the geometric mean and the harmonic mean. In addition to mathematics and statistics, the arithmetic mean is used frequently in many diverse fields such as economics, anthropology and history, and it is used in almost every academic field to some extent. For example, per capita income is the arithmetic average income of a nation's population. While the arithmetic mean is often used to report central tendencies, it is not a robust statistic, meaning that it is great ...
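The definition above (sum divided by count) can be sketched in a few lines of Python. The function name `arithmetic_mean` and the sample income figures are hypothetical, made up purely for illustration. The second half of the sketch adds one extreme value to show how strongly a single outlier can move the mean, with the median from the standard `statistics` module shown for comparison.

```python
from statistics import median


def arithmetic_mean(values):
    # Sum of the collection divided by the number of items in it.
    return sum(values) / len(values)


incomes = [30, 32, 35, 38, 40]        # made-up sample data
with_outlier = incomes + [1000]       # same data plus one extreme value

print(arithmetic_mean(incomes))       # 35.0
print(arithmetic_mean(with_outlier))  # pulled far above the typical values
print(median(with_outlier))           # 36.5, close to the typical values
```

This is only an illustration of sensitivity to outliers under the stated sample data, not a general recommendation of one statistic over another.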

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert the code execution through various routes (referred to as automated decision-making). Using human characteristics as descriptors of machines in metaphorical ways was already practiced by Alan Turing with terms such as "memory", "search" and "stimulus". In contrast, a heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm can be expressed within a finite amount of space ...

Software Bug

A software bug is an error, flaw or fault in the design, development, or operation of computer software that causes it to produce an incorrect or unexpected result, or to behave in unintended ways. The process of finding and correcting bugs is termed "debugging" and often uses formal techniques or tools to pinpoint bugs. Since the 1950s, some computer systems have been designed to deter, detect or auto-correct various computer bugs during operations. Bugs in software can arise from mistakes and errors made in interpreting and extracting users' requirements, planning a program's design, writing its source code, and from interaction with humans, hardware and programs, such as operating systems or libraries. A program with many, or serious, bugs is often described as "buggy". Bugs can trigger errors that may have ripple effects. The effects of bugs may be subtle, such as unintended text formatting, through to more obvious effects such as causing a program to crash, freezing th ...

Subroutine

In computer programming, a function or subroutine is a sequence of program instructions that performs a specific task, packaged as a unit. This unit can then be used in programs wherever that particular task should be performed. Functions may be defined within programs, or separately in libraries that can be used by many programs. In different programming languages, a function may be called a routine, subprogram, subroutine, method, or procedure. Technically, these terms all have different definitions, and the nomenclature varies from language to language. The generic umbrella term "callable unit" is sometimes used. A function is often coded so that it can be started several times and from several places during one execution of the program, including from other functions, and then branch back (return) to the next instruction after the call, once the function's task is done. The idea of a subroutine was initially conceived by John Mauchly during his work on EN ...

Variable (programming)

In computer programming, a variable is an abstract storage location paired with an associated symbolic name, which contains some known or unknown quantity of information referred to as a value; or in simpler terms, a variable is a named container for a particular set of bits or type of data (like integer, float, string, etc.). A variable can eventually be associated with or identified by a memory address. The variable name is the usual way to reference the stored value, in addition to referring to the variable itself, depending on the context. This separation of name and content allows the name to be used independently of the exact information it represents. The identifier in computer source code can be bound to a value during run time, and the value of the variable may thus change during the course of program execution. Variables in programming may not directly correspond to the concept of variables in mathematics. The latter is abstract, having no reference to a p ...