|

Intel Parallel Building Blocks

Intel Parallel Building Blocks (PBB) was a collection of three programming solutions designed for multithreaded parallel computing. PBB consisted of Cilk Plus, Threading Building Blocks (TBB) and Intel Array Building Blocks (ArBB). Intel. Retrieved on 2010-09-14. See also * * (CnC) * |

Imperative Programming

In computer science, imperative programming is a programming paradigm of software that uses Statement (computer science), statements that change a program's state (computer science), state. In much the same way that the imperative mood in natural languages expresses commands, an imperative program consists of command (computing), commands for the computer to perform. Imperative programming focuses on describing ''how'' a program operates step by step (with general order of the steps being determined in source code by the placement of statements one below the other), rather than on high-level descriptions of its expected results. The term is often used in contrast to declarative programming, which focuses on ''what'' the program should accomplish without specifying all the details of ''how'' the program should achieve the result. Procedural programming Procedural programming is a type of imperative programming in which the program is built from one or more procedures (also termed s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Intel Array Building Blocks

Intel Array Building Blocks (also known as ArBB) was a C++ library developed by Intel Corporation for exploiting data parallel portions of programs to take advantage of multi-core processors, graphics processing units and Intel Many Integrated Core Architecture processors. ArBB provides a generalized vector parallel programming solution designed to avoid direct dependencies on particular low-level parallelism mechanisms or hardware architectures. ArBB is oriented to applications that require data-intensive mathematical computations. By default, ArBB programs cannot create data races or deadlocks. History Intel Ct was a parallel programming model developed by Intel in 2007 for its future multi-core processors as part of the Tera-Scale research program. In April 2009, Intel announced that "Ct sto appear in programmer tools by end of the year". On August 19, 2009, Intel acquired RapidMind, a privately held company founded and headquartered in Waterloo, Ontario, Canada. In ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrent Programming Languages

Concurrent computing is a form of computing in which several computations are executed '' concurrently''—during overlapping time periods—instead of ''sequentially—''with one completing before the next starts. This is a property of a system—whether a program, computer, or a network—where there is a separate execution point or "thread of control" for each process. A ''concurrent system'' is one where a computation can advance without waiting for all other computations to complete. Concurrent computing is a form of modular programming. In its paradigm an overall computation is factored into subcomputations that may be executed concurrently. Pioneers in the field of concurrent computing include Edsger Dijkstra, Per Brinch Hansen, and C.A.R. Hoare. Introduction The concept of concurrent computing is frequently confused with the related but distinct concept of parallel computing, Pike, Rob (2012-01-11). "Concurrency is not Parallelism". ''Waza conference'', 11 Janua ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Intel Developer Zone

The Intel Developer Zone is an international online program designed by Intel to encourage and support independent software vendors in developing applications for Intel hardware and software products. This support is provided for the key stages of the business life cycle from planning to development and in various forms: web sites, newsletters, developer conferences, trade media, and blogs. Products supported through Intel Developer Zone include support for multiprocessor offerings like Intel Threading Building Blocks (Intel TBB) and Intel Parallel Studio, as well as programming tools like Intel's compiler products (Intel C++ Compiler and Intel Fortran Compiler) and Intel VTune Amplifier, and libraries A library is a collection of Book, books, and possibly other Document, materials and Media (communication), media, that is accessible for use by its members and members of allied institutions. Libraries provide physical (hard copies) or electron ... like Intel Integrated Perform ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Intel Concurrent Collections

Concurrent Collections (known as CnC) is a programming model for software frameworks to expose parallelism in applications. The Concurrent Collections conception originated from tagged stream processing development with HP TStreams. TStreams Around 2003, Hewlett-Packard Cambridge Research Lab developed ''TStreams'', a stream processing forerunner of the basic concepts of CnC. Concurrent Collections for C++ ''Concurrent Collections for C++'' is an open source C++ template library developed by Intel for implementing parallel CnC applications in C++ with shared and/or distributed memory. Habanero CnC Rice University has developed various CnC language implementations based on their ''Habanero'' project infrastructure. See also * Stream processing * Flow-based programming (FBP) * Tuple space * Functional reactive programming (FRP) * Linda (coordination language) * Threading Building Blocks (TBB) * Cilk/ Cilk Plus * Intel Parallel Studio Intel Parallel Studio XE was a software ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Intel Parallel Studio

Intel Parallel Studio XE was a software development product developed by Intel that facilitated native code development on Windows, macOS and Linux in C++ and Fortran for parallel computing. Parallel programming enables software programs to take advantage of multi-core processors from Intel and other processor vendors. Intel Parallel Studio XE was rebranded and repackaged by Intel wheoneAPI toolkitswere released in December 2020. Intel oneAPI Base Toolkit + Intel oneAPI HPC toolkit contain all the tools in Parallel Studio XE and more. One significant addition is a Data Parallel C++ (DPC++) compiler designed to allow developers to reuse code across hardware targets (CPUs and accelerators such as GPUs and FPGAs). Components Parallel Studio is composed of several component parts, each of which is a collection of capabilities. * Intel C++ Compiler with OpenMP * Intel Fortran Compiler with OpenMP * IDE plug-in integration with Visual Studio, Eclipse and Xcode * Debugging via ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Thread (computer Science)

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. In many cases, a thread is a component of a process. The multiple threads of a given process may be executed concurrently (via multithreading capabilities), sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non- thread-local global variables at any given time. The implementation of threads and processes differs between operating systems. History Threads made an early appearance under the name of "tasks" in IBM's batch processing operating system, OS/360, in 1967. It provided users with three available configurations of the OS/360 control system, of which Multiprogramming with a Variable Number of Tasks (MVT) ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Threading Building Blocks

oneAPI Threading Building Blocks (oneTBB; formerly Threading Building Blocks or TBB) is a C++ template library developed by Intel for parallel programming on multi-core processors. Using TBB, a computation is broken down into tasks that can run in parallel. The library manages and schedules threads to execute these tasks. Overview A oneTBB program creates, synchronizes, and destroys graphs of dependent tasks according to ''algorithms'', i.e. high-level parallel programming paradigms (a.k.a. Algorithmic Skeletons). Tasks are then executed respecting graph dependencies. This approach groups TBB in a family of techniques for parallel programming aiming to decouple the programming from the particulars of the underlying machine. oneTBB implements work stealing to balance a parallel workload across available processing cores in order to increase core utilization and therefore scaling. Initially, the workload is evenly divided among the available processor cores. If one core com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Procedural Programming

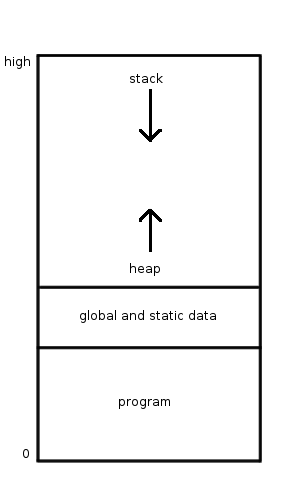

Procedural programming is a programming paradigm, classified as imperative programming, that involves implementing the behavior of a computer program as Function (computer programming), procedures (a.k.a. functions, subroutines) that call each other. The resulting program is a series of steps that forms a hierarchy of calls to its constituent procedures. The first major procedural programming languages appeared –1964, including Fortran, ALGOL, COBOL, PL/I and BASIC. Pascal (programming language), Pascal and C (programming language), C were published –1972. Computer processors provide hardware support for procedural programming through a stack register and instructions for Subroutine#Jump to subroutine, calling procedures and returning from them. Hardware support for other types of programming is possible, like Lisp machines or Java processors, but no attempt was commercially successful. Development practices Certain software development practices are often employed with ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cilk Plus

Cilk, Cilk++, Cilk Plus and OpenCilk are general-purpose programming languages designed for multithreaded parallel computing. They are based on the C and C++ programming languages, which they extend with constructs to express parallel loops and the fork–join idiom. Originally developed in the 1990s at the Massachusetts Institute of Technology (MIT) in the group of Charles E. Leiserson, Cilk was later commercialized as Cilk++ by a spinoff company, Cilk Arts. That company was subsequently acquired by Intel, which increased compatibility with existing C and C++ code, calling the result Cilk Plus. After Intel stopped supporting Cilk Plus in 2017, MIT is again developing Cilk in the form of OpenCilk. History MIT Cilk The Cilk programming language grew out of three separate projects at the MIT Laboratory for Computer Science: * Theoretical work on scheduling multi-threaded applications. * StarTech – a parallel chess program built to run on the Thinking Machines Corporat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Manifest Typing

In computer science, manifest typing is explicit identification by the software programmer of the ''type'' of each variable being declared. For example: if variable ''X'' is going to store integers then its ''type'' must be declared as integer. The term "manifest typing" is often used with the term latent typing to describe the difference between the static, compile-time type membership of the object and its run-time type identity. In contrast, some programming languages use ''implicit typing'' (a.k.a. type inference) where the type is deduced from context at compile-time or allow for dynamic typing in which the variable is just declared and may be assigned a value of any type at runtime. It's important to know the difference between manifest/implicit typing and static/dynamic typing. The first one describes how the variables (and it's types) are defined, while the second describes whether the language checks the types at compile or execution time. Examples Consider the f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |