
IBM zEC12 (microprocessor)

The zEC12 microprocessor (*zEnterprise EC12* or just *z12*) is a chip made by IBM for their zEnterprise EC12 and zEnterprise BC12 mainframe computers, announced on August 28, 2012. It is manufactured at the East Fishkill, New York fabrication plant (previously owned by IBM; the new owner, GlobalFoundries, will continue production for ten years). The processor began shipping in the fall of 2012. IBM stated that it was the world's fastest microprocessor and about 25% faster than its predecessor, the z196. Description: The chip measures 597.24 mm² and consists of 2.75 billion transistors fabricated in IBM's 32 nm CMOS silicon on insulator fabrication process, supporting speeds of 5.5 GHz, the highest clock speed CPU ever produced for commercial sale. The processor implements the CISC z/Architecture with a superscalar, out-of-order pipeline and some new instructions mainly related to transactional execution. The cores have numerous other enhancements such as ...

IBM z196

The z196 microprocessor is a chip made by IBM for their zEnterprise 196 and zEnterprise 114 mainframe computers, announced on July 22, 2010. The processor was developed over a three-year time span by IBM engineers from Poughkeepsie, New York; Austin, Texas; and Böblingen, Germany at a cost of US$1.5 billion. Manufactured at IBM's Fishkill, New York fabrication plant, the processor began shipping on September 10, 2010. IBM stated that it was the world's fastest microprocessor at the time. Description: The chip measures 512.3 mm² and consists of 1.4 billion transistors fabricated in IBM's 45 nm CMOS silicon on insulator fabrication process, supporting speeds of 5.2 GHz: at the time, the highest clock speed CPU ever produced for commercial sale. The processor implements the CISC z/Architecture with a new superscalar, out-of-order pipeline and 100 new instructions. The instruction pipeline has 15 to 17 stages; the instruction queue can hold 40 instructions; ...

Instruction (computer Science)

In computer science, an instruction set architecture (ISA), also called computer architecture, is an abstract model of a computer. A device that executes instructions described by that ISA, such as a central processing unit (CPU), is called an *implementation*. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of a family of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but that are capable of running the same machine code, so th ...

DDR SDRAM

Double Data Rate Synchronous Dynamic Random-Access Memory (DDR SDRAM) is a double data rate (DDR) synchronous dynamic random-access memory (SDRAM) class of memory integrated circuits used in computers. DDR SDRAM, also retroactively called DDR1 SDRAM, has been superseded by DDR2 SDRAM, DDR3 SDRAM, DDR4 SDRAM and DDR5 SDRAM. None of its successors are forward or backward compatible with DDR1 SDRAM, meaning DDR2, DDR3, DDR4 and DDR5 memory modules will not work in DDR1-equipped motherboards, and vice versa. Compared to single data rate (SDR) SDRAM, the DDR SDRAM interface makes higher transfer rates possible by more strict control of the timing of the electrical data and clock signals. Implementations often have to use schemes such as phase-locked loops and self-calibration to reach the required timing accuracy. The interface uses double pumping (transferring data on both the rising and falling edges of the clock signal) to double data bus bandwidth without a corresponding in ...
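The effect of double pumping on bandwidth can be illustrated with a short calculation. This is a minimal sketch: the function name, the 100 MHz clock, and the 64-bit bus width are illustrative assumptions, not figures taken from the text above.

```python
def peak_transfer_rate_mb_s(clock_mhz, bus_width_bits, transfers_per_cycle):
    """Peak rate in decimal megabytes per second (10**6 bytes/s).

    clock_mhz * 10**6 cycles/s, times transfers per cycle, times bytes per
    transfer, divided by 10**6 bytes per MB; the 10**6 factors cancel.
    """
    bytes_per_transfer = bus_width_bits // 8
    return clock_mhz * transfers_per_cycle * bytes_per_transfer


# Hypothetical example values: a 100 MHz clock on a 64-bit data bus.
CLOCK_MHZ = 100
BUS_WIDTH_BITS = 64

# SDR transfers once per clock cycle; DDR transfers on both clock edges.
sdr_rate = peak_transfer_rate_mb_s(CLOCK_MHZ, BUS_WIDTH_BITS, transfers_per_cycle=1)
ddr_rate = peak_transfer_rate_mb_s(CLOCK_MHZ, BUS_WIDTH_BITS, transfers_per_cycle=2)

print(f"SDR: {sdr_rate} MB/s, DDR: {ddr_rate} MB/s")
```

At the same clock frequency and bus width, the double-pumped interface yields twice the peak rate.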

Decimal Floating Point

Decimal floating-point (DFP) arithmetic refers to both a representation and operations on decimal floating-point numbers. Working directly with decimal (base-10) fractions can avoid the rounding errors that otherwise typically occur when converting between decimal fractions (common in human-entered data, such as measurements or financial information) and binary (base-2) fractions. The advantage of decimal floating-point representation over decimal fixed-point and integer representation is that it supports a much wider range of values. For example, while a fixed-point representation that allocates 8 decimal digits and 2 decimal places can represent the numbers 123456.78, 8765.43, 123.00, and so on, a floating-point representation with 8 decimal digits could also represent 1.2345678, 1234567.8, 0.000012345678, 12345678000000000, and so on. This wider range can dramatically slow the accumulation of rounding errors during successive calculations; for example, the Kahan summation alg ...
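The conversion error between decimal and binary fractions can be seen with Python's standard `decimal` module, used here as a software stand-in for decimal floating-point arithmetic. This is a minimal sketch; the values 0.1, 0.2 and 0.3 are chosen only for illustration.

```python
from decimal import Decimal

# Binary floating point: 0.1 and 0.2 have no exact base-2 representation,
# so their sum is not exactly the binary value nearest to 0.3.
binary_sum = 0.1 + 0.2
binary_is_exact = binary_sum == 0.3

# Decimal floating point: the same fractions are stored exactly in base 10.
decimal_sum = Decimal("0.1") + Decimal("0.2")
decimal_is_exact = decimal_sum == Decimal("0.3")

print("binary :", binary_sum, "equals 0.3?", binary_is_exact)
print("decimal:", decimal_sum, "equals 0.3?", decimal_is_exact)
```

The operands are passed to `Decimal` as strings so that they are never rounded through a binary float first.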

Floating-point Unit

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. For example, 12.345 can be represented as a base-ten floating-point number: $12.345 = \underbrace{12345}_{\text{significand}} \times {\underbrace{10}_{\text{base}}}^{\overbrace{-3}^{\text{exponent}}}$. In practice, most floating-point systems use base two, though base ten (decimal floating point) is also common. The term *floating point* refers to the fact that the number's radix point can "float" anywhere to the left, right, or between the significant digits of the number. This position is indicated by the exponent, so floating point can be considered a form of scientific notation. A floating-point system can be used to represent, with a fixed number of digits, numbers of very different orders of magnitude, such as the number of meters between galaxies or between protons in an atom. For this reason, floating-po ...
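The significand-and-exponent decomposition of 12.345 can be reproduced in base ten with Python's standard `decimal` module. This is a minimal sketch; the helper name `decompose` is hypothetical, and it assumes a finite input (special values such as NaN are not handled).

```python
from decimal import Decimal


def decompose(text):
    """Split a finite decimal literal into (integer significand, base-10 exponent)."""
    sign, digits, exponent = Decimal(text).as_tuple()
    significand = int("".join(str(d) for d in digits))
    if sign:  # sign is 1 for negative numbers
        significand = -significand
    return significand, exponent


significand, exponent = decompose("12.345")
print(significand, exponent)

# Rebuild the value as significand * base ** exponent with base 10.
rebuilt = Decimal(significand) * Decimal(10) ** exponent
print(rebuilt)
```

Moving the radix point only changes the exponent: `decompose("1234.5")` keeps the same significand with an exponent of -1.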

Arithmetic Logic Unit

In computing, an arithmetic logic unit (ALU) is a combinational digital circuit that performs arithmetic and bitwise operations on integer binary numbers. This is in contrast to a floating-point unit (FPU), which operates on floating point numbers. It is a fundamental building block of many types of computing circuits, including the central processing unit (CPU) of computers, FPUs, and graphics processing units (GPUs). The inputs to an ALU are the data to be operated on, called operands, and a code indicating the operation to be performed; the ALU's output is the result of the performed operation. In many designs, the ALU also has status inputs or outputs, or both, which convey information about a previous operation or the current operation, respectively, between the ALU and external status registers. Signals: An ALU has a variety of input and output nets, which are the electrical conductors used to convey digital signals between the ALU and external circuitry. When an ALU i ...
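The operand, opcode, result and status-output roles described above can be modeled in software. This is a minimal sketch, not a description of any particular hardware: the opcode names, the 8-bit default width, and the flag set (carry, zero, negative) are assumptions made for illustration.

```python
def alu(opcode, a, b, width=8):
    """Model a simple integer ALU: returns (result, status flags)."""
    mask = (1 << width) - 1
    a &= mask
    b &= mask

    if opcode == "ADD":
        raw = a + b
    elif opcode == "SUB":
        # Two's-complement subtraction: a + (~b) + 1.
        raw = a + ((~b) & mask) + 1
    elif opcode == "AND":
        raw = a & b
    elif opcode == "OR":
        raw = a | b
    elif opcode == "XOR":
        raw = a ^ b
    else:
        raise ValueError(f"unknown opcode: {opcode}")

    result = raw & mask
    flags = {
        "carry": raw > mask,                         # carry out of the top bit
        "zero": result == 0,                         # all result bits are 0
        "negative": bool(result >> (width - 1)),     # top bit of the result
    }
    return result, flags


print(alu("ADD", 200, 100))
print(alu("SUB", 5, 5))
print(alu("AND", 0b1100, 0b1010))
```

In this sketch the carry flag after `SUB` follows the convention that a set carry means no borrow occurred; real designs differ on this point.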

Reduced Instruction Set Computing

In computer engineering, a reduced instruction set computer (RISC) is a computer designed to simplify the individual instructions given to the computer to accomplish tasks. Compared to the instructions given to a complex instruction set computer (CISC), a RISC computer might require more instructions (more code) in order to accomplish a task because the individual instructions are written in simpler code. The goal is to offset the need to process more instructions by increasing the speed of each instruction, in particular by implementing an instruction pipeline, which may be simpler given simpler instructions. The key operational concept of the RISC computer is that each instruction performs only one function (e.g. copy a value from memory to a register). The RISC computer usually has many (16 or 32) high-speed, general-purpose registers with a load/store architecture in which the code for the register-register instructions (for performing arithmetic and tests) are separate f ...

eDRAM

Embedded DRAM (eDRAM) is dynamic random-access memory (DRAM) integrated on the same die or multi-chip module (MCM) of an application-specific integrated circuit (ASIC) or microprocessor. eDRAM's cost-per-bit is higher when compared to equivalent standalone DRAM chips used as external memory, but the performance advantages of placing eDRAM onto the same chip as the processor outweigh the cost disadvantages in many applications. In performance and size, eDRAM is positioned between level 3 cache and conventional DRAM on the memory bus, and effectively functions as a level 4 cache, though architectural descriptions may not explicitly refer to it in those terms. Embedding memory on the ASIC or processor allows for much wider buses and higher operation speeds, and due to much higher density of DRAM in comparison to SRAM, larger amounts of memory can be installed on smaller chips if eDRAM is used instead of eSRAM. eDRAM requires additional fab process steps compared with embedded S ...

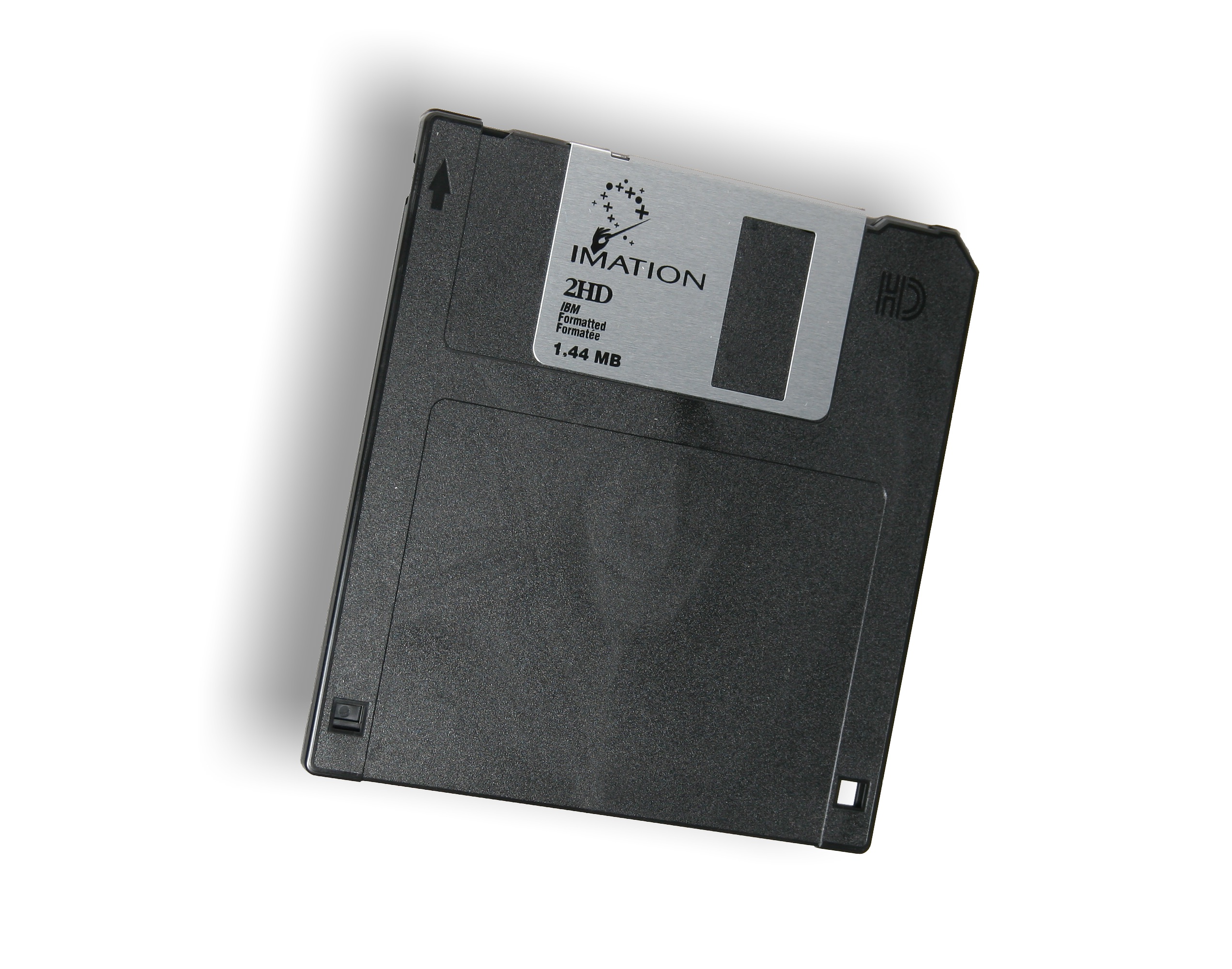

Megabyte

The megabyte is a multiple of the unit byte for digital information. Its recommended unit symbol is MB. The unit prefix *mega* is a multiplier of 10⁶ in the International System of Units (SI). Therefore, one megabyte is one million bytes of information. This definition has been incorporated into the International System of Quantities. In the computer and information technology fields, other definitions have been used that arose for historical reasons of convenience. A common usage has been to designate one megabyte as 2²⁰ B, a quantity that conveniently expresses the binary architecture of digital computer memory. The standards bodies have deprecated this usage of the megabyte in favor of a new set of binary prefixes, in which this quantity is designated by the unit mebibyte (MiB). Definitions: The unit megabyte is commonly used for 1000² (one million) bytes or 1024² bytes. The interpretation of using base 1024 originated as technical jargon for the byte multiples t ...
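The two definitions can be compared by converting one byte count under each convention. This is a minimal sketch; the constant names, the helper `describe`, and the sample size of 512 × 2²⁰ bytes are illustrative assumptions.

```python
MEGABYTE = 1000 ** 2   # SI definition: 10**6 bytes (MB)
MEBIBYTE = 1024 ** 2   # binary definition: 2**20 bytes (MiB)


def describe(num_bytes):
    """Express a byte count in both SI megabytes and binary mebibytes."""
    return num_bytes / MEGABYTE, num_bytes / MEBIBYTE


# Hypothetical example: a memory size that is an exact power-of-two multiple.
size_in_bytes = 512 * MEBIBYTE
in_mb, in_mib = describe(size_in_bytes)
print(f"{size_in_bytes} bytes = {in_mb} MB = {in_mib} MiB")
```

The same quantity reads as a round number in MiB but not in MB, which is why the binary interpretation was convenient for memory sizes.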

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), which in modern CPUs takes up by far the largest part of the chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level; sometimes some of the later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (MMU) ...
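How a cache keeps copies of recently used memory locations can be sketched with a toy simulation. The direct-mapped organization, the class name, and the sizes used here are assumptions chosen for simplicity; the text above does not specify any particular cache organization.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that tracks only tags, hits and misses."""

    def __init__(self, num_lines, line_size):
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines  # one stored tag per cache line
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Return True on a hit; on a miss, load the block and return False."""
        block = address // self.line_size   # which memory block is addressed
        index = block % self.num_lines      # the single line it may occupy
        tag = block // self.num_lines       # identifies the block in that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag              # evict whatever was there before
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=4, line_size=16)
for address in (0, 4, 8, 64, 0):
    cache.access(address)
print("hits:", cache.hits, "misses:", cache.misses)
```

Addresses 0, 4 and 8 fall in the same 16-byte line, so only the first access misses; address 64 maps to the same line index and evicts it, so the final access to 0 misses again.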

Kilobyte

The kilobyte is a multiple of the unit byte for digital information. The International System of Units (SI) defines the prefix *kilo* as 1000 (10³); per this definition, one kilobyte is 1000 bytes (International Standard IEC 80000-13, Quantities and Units – Part 13: Information science and technology, International Electrotechnical Commission, 2008). The internationally recommended unit symbol for the kilobyte is kB. In some areas of information technology, particularly in reference to solid-state memory capacity, *kilobyte* instead typically refers to 1024 (2¹⁰) bytes. This arises from the prevalence of sizes that are powers of two in modern digital memory architectures, coupled with the accident that 2¹⁰ differs from 10³ by less than 2.5%. A kibibyte is defined by Clause 4 of IEC 80000-13 as 1024 bytes. Definitions and usage: Base 10 (1000 bytes): In the International System of Units (SI) the prefix *kilo* means 1000 (10³); therefore, one kilobyte is 1000 bytes. ...
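The "less than 2.5%" figure can be checked with a few lines of arithmetic. This is a minimal sketch; the constant and variable names are illustrative.

```python
KILOBYTE = 10 ** 3   # SI definition: 1000 bytes (kB)
KIBIBYTE = 2 ** 10   # binary definition: 1024 bytes (KiB)

# Relative difference of the binary value with respect to the SI value.
relative_gap = (KIBIBYTE - KILOBYTE) / KILOBYTE
print(f"2**10 exceeds 10**3 by {relative_gap:.1%}")
```

The gap is 24 bytes in 1000, which is small enough that the two meanings were historically treated as interchangeable.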
