
Generalized Pareto Distribution

In statistics, the generalized Pareto distribution (GPD) is a family of continuous probability distributions. It is often used to model the tails of another distribution. It is specified by three parameters: location $\mu$, scale $\sigma$, and shape $\xi$. Sometimes it is specified by only scale and shape, and sometimes only by its shape parameter. Some references give the shape parameter as $\kappa = -\xi$.

Definition. The standard cumulative distribution function (cdf) of the GPD is defined by

$$F_{\xi}(z) = \begin{cases} 1 - \left(1 + \xi z\right)^{-1/\xi} & \text{for } \xi \neq 0, \\ 1 - e^{-z} & \text{for } \xi = 0, \end{cases}$$

where the support is $z \geq 0$ for $\xi \geq 0$ and $0 \leq z \leq -1/\xi$ for $\xi < 0$. For the location-scale family with parameters $\mu \in \mathbb{R}$, $\sigma > 0$, and $\xi \in \mathbb{R}$, the cumulative distribution function of $X$ is

$$F_{(\mu,\sigma,\xi)}(x) = \begin{cases} 1 - \left(1 + \frac{\xi (x - \mu)}{\sigma}\right)^{-1/\xi} & \text{for } \xi \neq 0, \\ 1 - \exp\left(-\frac{x - \mu}{\sigma}\right) & \text{for } \xi = 0, \end{cases}$$

where the support of $X$ is $x \geq \mu$ when $\xi \geq 0$, and $\mu \leq x \leq \mu - \sigma/\xi$ when $\xi < 0$. The ...
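The piecewise cdf of the location-scale family can be evaluated directly. The following is a minimal sketch, not a reference implementation; the function name `gpd_cdf` and its default arguments are assumptions made for illustration.

```python
import math


def gpd_cdf(x, mu=0.0, sigma=1.0, xi=0.0):
    """Evaluate the generalized Pareto cdf at x (illustrative sketch).

    mu is the location, sigma > 0 the scale, xi the shape.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    if z <= 0:
        # At or below the lower end of the support the cdf is 0.
        return 0.0
    if xi == 0:
        # Exponential case.
        return 1.0 - math.exp(-z)
    if xi < 0 and z >= -1.0 / xi:
        # For negative shape the support ends at mu - sigma / xi.
        return 1.0
    return 1.0 - (1.0 + xi * z) ** (-1.0 / xi)
```

For example, `gpd_cdf(1.0, xi=1.0)` evaluates $1 - (1 + 1)^{-1}$, and `gpd_cdf(1.0, xi=-0.5)` evaluates $1 - (1 - 0.5)^{2}$. The $\xi = 0$ branch is the limit of the general branch as $\xi \to 0$, which is why a very small shape value gives nearly the same result as the exponential case.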

Generalized Extreme Value Distribution

In probability theory and statistics, the generalized extreme value (GEV) distribution is a family of continuous probability distributions developed within extreme value theory to combine the Gumbel, Fréchet and Weibull families, also known as the type I, II and III extreme value distributions. By the extreme value theorem, the GEV distribution is the only possible limit distribution of properly normalized maxima of a sequence of independent and identically distributed random variables. Such a limit distribution must exist for this result to apply, which requires regularity conditions on the tail of the distribution. Despite this, the GEV distribution is often used as an approximation to model the maxima of long (finite) sequences of random variables. In some fields of application the generalized extreme value distribution is known as the Fisher–Tippett distribution, named after Ronald Fisher and L. H. C. Tippett, who recognised three different forms outlined below. However usage of this name is ...
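The idea of a limit distribution for normalized maxima can be illustrated with one well-known special case, chosen here as an assumption for the example: the maximum of $n$ independent standard exponential variables, shifted by $\ln n$, has cdf $(1 - e^{-x}/n)^n$, which approaches the Gumbel (type I) cdf $\exp(-e^{-x})$ as $n$ grows. The function names below are hypothetical.

```python
import math


def normalized_max_cdf(x, n):
    """Exact cdf of max(E_1, ..., E_n) - ln(n) for standard exponentials."""
    t = x + math.log(n)
    if t <= 0:
        return 0.0
    # P(max <= t) = P(E_1 <= t)^n for independent variables.
    return (1.0 - math.exp(-t)) ** n


def gumbel_cdf(x):
    """Standard Gumbel cdf, the limit in this special case."""
    return math.exp(-math.exp(-x))


def approximation_error(x, n):
    """Absolute gap between the finite-n cdf and its Gumbel limit."""
    return abs(normalized_max_cdf(x, n) - gumbel_cdf(x))
```

Comparing `approximation_error(0.5, 10)` with `approximation_error(0.5, 1000)` shows the gap shrinking as the sequence gets longer, which is the sense in which the limit is used as an approximation for long finite sequences.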

Extreme Value Theory

Extreme value theory or extreme value analysis (EVA) is a branch of statistics dealing with the extreme deviations from the median of probability distributions. It seeks to assess, from a given ordered sample of a given random variable, the probability of events that are more extreme than any previously observed. Extreme value analysis is widely used in many disciplines, such as structural engineering, finance, earth sciences, traffic prediction, and geological engineering. For example, EVA might be used in the field of hydrology to estimate the probability of an unusually large flooding event, such as the 100-year flood. Similarly, for the design of a breakwater, a coastal engineer would seek to estimate the 50-year wave and design the structure accordingly.

Data analysis. Two main approaches exist for practical extreme value analysis. The first method relies on deriving block maxima (minima) series as a preliminary step. In many situations it is customary and convenient to e ...
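The preliminary step of the first approach, deriving a block maxima series, can be sketched as follows. The function name `block_maxima`, the fixed block size, and the choice to drop an incomplete trailing block are assumptions for illustration only.

```python
def block_maxima(series, block_size):
    """Split a series into consecutive blocks and return each block's maximum.

    An incomplete trailing block is dropped so every maximum is taken
    over the same number of observations.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    n_blocks = len(series) // block_size
    return [
        max(series[i * block_size:(i + 1) * block_size])
        for i in range(n_blocks)
    ]
```

With daily observations and a block size equal to the number of days in the chosen period, the result is one maximum per period. A block minima series can be obtained the same way by replacing `max` with `min`.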

Heavy-tailed Distribution

In probability theory, heavy-tailed distributions are probability distributions whose tails are not exponentially bounded: that is, they have heavier tails than the exponential distribution. In many applications it is the right tail of the distribution that is of interest, but a distribution may have a heavy left tail, or both tails may be heavy. There are three important subclasses of heavy-tailed distributions: the fat-tailed distributions, the long-tailed distributions and the subexponential distributions. In practice, all commonly used heavy-tailed distributions belong to the subexponential class. The term heavy-tailed is not used consistently, and two other definitions are in use: some authors use it for distributions that do not have all their power moments finite, and others for distributions that do not have a finite variance. The definition given in this article is the most general in use, and includes all di ...
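"Not exponentially bounded" is commonly formalized as: $e^{\lambda x}\,P(X > x)$ is unbounded as $x \to \infty$ for every $\lambda > 0$. The sketch below compares that scaled tail for a Pareto survival function and an exponential one. The specific distributions, parameter values, and function names are assumptions picked for the example.

```python
import math


def pareto_sf(x, alpha=2.0):
    """Survival function P(X > x) of a Pareto distribution with minimum 1."""
    return x ** (-alpha) if x >= 1.0 else 1.0


def exponential_sf(x, rate=1.0):
    """Survival function P(X > x) of an exponential distribution."""
    return math.exp(-rate * x) if x >= 0.0 else 1.0


def scaled_tail(sf, x, lam):
    """Return exp(lam * x) * P(X > x), the quantity in the definition."""
    return math.exp(lam * x) * sf(x)
```

Evaluating `scaled_tail(pareto_sf, x, 0.1)` at `x = 50, 100, 200` gives growing values, while `scaled_tail(exponential_sf, x, 0.1)` shrinks toward zero, since the exponential tail decays faster than $e^{-0.1 x}$.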

Trigamma Function

In mathematics, the trigamma function, denoted $\psi_1(z)$ or $\psi^{(1)}(z)$, is the second of the polygamma functions, and is defined by

$$\psi_1(z) = \frac{d^2}{dz^2} \ln\Gamma(z).$$

It follows from this definition that

$$\psi_1(z) = \frac{d}{dz} \psi(z),$$

where $\psi(z)$ is the digamma function. It may also be defined as the sum of the series

$$\psi_1(z) = \sum_{n=0}^{\infty} \frac{1}{(z+n)^2},$$

making it a special case of the Hurwitz zeta function:

$$\psi_1(z) = \zeta(2, z).$$

Note that the last two formulas are valid when $1 - z$ is not a natural number.

Calculation. A double integral representation, as an alternative to the ones given above, may be derived from the series representation:

$$\psi_1(z) = \int_0^1\!\!\int_x^1 \frac{x^{z-1}}{y(1-x)}\,dy\,dx$$

using the formula for the sum of a geometric series. Integration over $y$ yields:

$$\psi_1(z) = -\int_0^1 \frac{x^{z-1}\ln x}{1-x}\,dx.$$

An asymptotic expansion as a Laurent series is

$$\psi_1(z) \sim \frac{1}{z} + \frac{1}{2z^2} + \sum_{k=1}^{\infty} \frac{B_{2k}}{z^{2k+1}} = \sum_{k=0}^{\infty} \frac{B_k}{z^{k+1}}$$

if we have chosen $B_1 = \tfrac{1}{2}$, i.e. the Bernoulli numbers of the second kind. Recurrence and reflection fo ...
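The series and the asymptotic expansion can be combined into a simple numerical scheme for positive real arguments. The sketch below is one possible approach, not a library routine. It peels off leading terms $1/x^2$ of the series until the argument is large, then applies a truncated expansion using $B_2 = 1/6$, $B_4 = -1/30$, $B_6 = 1/42$. The cutoff of 10 and the function name are assumptions.

```python
import math


def trigamma(x):
    """Approximate the trigamma function for real x > 0 (illustrative)."""
    if x <= 0:
        raise ValueError("this sketch handles x > 0 only")
    total = 0.0
    # The series gives psi_1(x) = 1/x^2 + psi_1(x + 1); shift x upward.
    while x < 10.0:
        total += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    # Truncated expansion: 1/x + 1/(2x^2) + B2/x^3 + B4/x^5 + B6/x^7.
    total += inv + 0.5 * inv2
    total += inv * inv2 * (1.0 / 6.0 - inv2 * (1.0 / 30.0 - inv2 / 42.0))
    return total
```

A quick sanity check is the value at 1, where the series reduces to $\sum_{n \ge 1} 1/n^2 = \pi^2/6$, so `trigamma(1.0)` should agree with `math.pi ** 2 / 6` to many digits.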

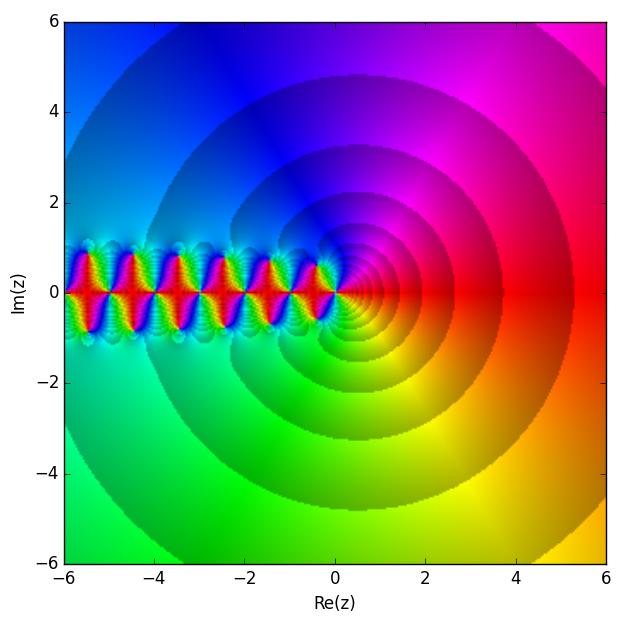

Polygamma Function

In mathematics, the polygamma function of order $m$ is a meromorphic function on the complex numbers $\mathbb{C}$ defined as the $(m+1)$th derivative of the logarithm of the gamma function:

$$\psi^{(m)}(z) := \frac{d^m}{dz^m} \psi(z) = \frac{d^{m+1}}{dz^{m+1}} \ln\Gamma(z).$$

Thus

$$\psi^{(0)}(z) = \psi(z) = \frac{\Gamma'(z)}{\Gamma(z)}$$

holds, where $\psi(z)$ is the digamma function and $\Gamma(z)$ is the gamma function. They are holomorphic on $\mathbb{C} \setminus \mathbb{Z}_{\leq 0}$. At all the nonpositive integers these polygamma functions have a pole of order $m + 1$. The function $\psi^{(1)}(z)$ is sometimes called the trigamma function.

Integral representation. When $m > 0$ and $\operatorname{Re} z > 0$, the polygamma function equals

$$\begin{aligned} \psi^{(m)}(z) &= (-1)^{m+1} \int_0^\infty \frac{t^m e^{-zt}}{1 - e^{-t}}\,\mathrm{d}t \\ &= -\int_0^1 \frac{t^{z-1}}{1-t} (\ln t)^m\,\mathrm{d}t \\ &= (-1)^{m+1}\, m!\, \zeta(m+1, z), \end{aligned}$$

where $\zeta(s,q)$ is the Hurwitz zeta function. This expresses the polygamma function as the Laplace transform of $(-1)^{m+1} t^m / (1 - e^{-t})$. It follows from Bernstein's theorem on monotone functions that, for $m > 0$ and $x$ real and non-negative, $(-1)^{m+1} \psi^{(m)}(x)$ is a completely monotone function. Setting $m = 0$ in the above ...
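The identity $\psi^{(m)}(z) = (-1)^{m+1}\, m!\, \zeta(m+1, z)$ suggests a direct way to compute polygamma values for $m \geq 1$ and real $z > 0$. The sketch below evaluates the Hurwitz zeta function as a partial sum plus a short Euler-Maclaurin tail correction. That numerical scheme, the number of terms, and the function names are assumptions for illustration, not taken from the text.

```python
import math


def hurwitz_zeta(s, q, terms=50):
    """Approximate zeta(s, q) = sum_{n>=0} (n + q)^(-s) for s > 1, q > 0."""
    head = sum((n + q) ** (-s) for n in range(terms))
    a = terms + q
    # Euler-Maclaurin estimate of the remaining sum from n = terms onward:
    # integral + half the first term + first derivative correction.
    tail = a ** (1 - s) / (s - 1) + 0.5 * a ** (-s) + s * a ** (-s - 1) / 12.0
    return head + tail


def polygamma(m, z):
    """Approximate psi^(m)(z) for integer m >= 1 and real z > 0."""
    if m < 1 or z <= 0:
        raise ValueError("this sketch needs m >= 1 and z > 0")
    return (-1) ** (m + 1) * math.factorial(m) * hurwitz_zeta(m + 1, z)
```

For $m = 1$ and $z = 1$ the Hurwitz zeta function reduces to $\zeta(2) = \pi^2/6$, so `polygamma(1, 1.0)` reproduces the trigamma value at 1. For $m = 2$ the sign flips and the result is $-2\zeta(3)$.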

Variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by $\sigma^2$, $s^2$, $\operatorname{Var}(X)$, $V(X)$, or $\mathbb{V}(X)$. An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviatio ...
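For a finite set of equally weighted numbers, the definition as the mean squared deviation from the mean can be written out directly. This is a minimal sketch of the population variance (dividing by $n$); the function names are illustrative.

```python
import math


def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


def population_variance(values):
    """Mean of the squared deviations from the mean."""
    m = mean(values)
    return sum((x - m) ** 2 for x in values) / len(values)


def standard_deviation(values):
    """Square root of the population variance."""
    return math.sqrt(population_variance(values))
```

For the data `[2, 4, 4, 4, 5, 5, 7, 9]` the mean is 5, the squared deviations sum to 32, and the variance is 32 / 8 = 4, so the standard deviation is 2. The same value follows from the mean of the squares minus the square of the mean, 29 - 25.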

Digamma Function

In mathematics, the digamma function is defined as the logarithmic derivative of the gamma function:

$$\psi(x) = \frac{d}{dx} \ln\big(\Gamma(x)\big) = \frac{\Gamma'(x)}{\Gamma(x)} \sim \ln x - \frac{1}{2x}.$$

It is the first of the polygamma functions. It is strictly increasing and strictly concave on $(0,\infty)$. The digamma function is often denoted as $\psi_0(x)$, $\psi^{(0)}(x)$ or Ϝ (the uppercase form of the archaic Greek consonant digamma meaning double-gamma).

Relation to harmonic numbers. The gamma function obeys the equation

$$\Gamma(z+1) = z\Gamma(z).$$

Taking the derivative with respect to $z$ gives:

$$\Gamma'(z+1) = z\Gamma'(z) + \Gamma(z).$$

Dividing by $\Gamma(z+1)$ or the equivalent $z\Gamma(z)$ gives:

$$\frac{\Gamma'(z+1)}{\Gamma(z+1)} = \frac{\Gamma'(z)}{\Gamma(z)} + \frac{1}{z}$$

or:

$$\psi(z+1) = \psi(z) + \frac{1}{z}.$$

Since the harmonic numbers are defined for positive integers $n$ as

$$H_n = \sum_{k=1}^{n} \frac{1}{k},$$

the digamma function is related to them by

$$\psi(n) = H_{n-1} - \gamma,$$

where $H_0 = 0$ and $\gamma$ is the Euler–Mascheroni constant. For half-integer arguments the digamma function takes the values : \psi \left(n+\tfrac12 ...
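The recurrence $\psi(z+1) = \psi(z) + 1/z$ and the large-argument behaviour $\psi(x) \sim \ln x - 1/(2x)$ together give a simple way to approximate the digamma function for positive real arguments. In the sketch below, the extra correction terms $-1/(12x^2) + 1/(120x^4) - 1/(252x^6)$ come from the standard asymptotic series and are an addition beyond the two leading terms quoted in the text. The cutoff of 10 and all names are assumptions.

```python
import math

# Euler-Mascheroni constant, hard-coded to double precision.
EULER_GAMMA = 0.5772156649015329


def digamma(x):
    """Approximate the digamma function for real x > 0 (illustrative)."""
    if x <= 0:
        raise ValueError("this sketch handles x > 0 only")
    total = 0.0
    # Recurrence rearranged: psi(x) = psi(x + 1) - 1/x; shift x upward.
    while x < 10.0:
        total -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    total += math.log(x) - 0.5 / x
    total -= inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return total


def harmonic(n):
    """Harmonic number H_n, with H_0 = 0."""
    return sum(1.0 / k for k in range(1, n + 1))
```

The relation to harmonic numbers offers a check: `digamma(n)` should match `harmonic(n - 1) - EULER_GAMMA` for positive integers `n`, and in particular `digamma(1.0)` should be close to `-EULER_GAMMA`.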

Expected Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable $X$ is often denoted by $\operatorname{E}(X)$, $\operatorname{E}[X]$, or $\operatorname{E}X$, with $\operatorname{E}$ also often stylized as $E$ or $\mathbb{E}$.

History. The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes ''in a fair way'' between two players, who have to ...
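For a random variable with finitely many outcomes, the weighted-average definition translates into a one-line sum. A minimal sketch follows; the function name and the tolerance used to validate the probabilities are assumptions.

```python
def expected_value(outcomes, probabilities):
    """Weighted average of outcomes, with probabilities as the weights."""
    if len(outcomes) != len(probabilities):
        raise ValueError("outcomes and probabilities must have equal length")
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in zip(outcomes, probabilities))
```

For a fair six-sided die, `expected_value([1, 2, 3, 4, 5, 6], [1 / 6] * 6)` gives a value of 3.5 up to floating-point rounding, which is not itself a possible outcome of a single roll.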

Gamma Function

In mathematics, the gamma function (represented by $\Gamma$, the capital letter gamma from the Greek alphabet) is one commonly used extension of the factorial function to complex numbers. The gamma function is defined for all complex numbers except the non-positive integers. For every positive integer $n$,

$$\Gamma(n) = (n-1)!\,.$$

For complex numbers $z$ with a positive real part, the gamma function, derived by Daniel Bernoulli, is defined via a convergent improper integral:

$$\Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\,dt, \qquad \Re(z) > 0\,.$$

The gamma function then is defined as the analytic continuation of this integral function to a meromorphic function that is holomorphic in the whole complex plane except zero and the negative integers, where the function has simple poles. The gamma function has no zeroes, so the reciprocal gamma function is an entire function. In fact, the gamma function corresponds to the Mellin transform of the negative exponential function: \Gamma(z) = \mat ...
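The integral definition and the factorial property can be checked numerically against each other. The sketch below approximates the integral with a midpoint rule on a truncated interval. The truncation point, step count, restriction to real $z \geq 1$ (to avoid the singularity of the integrand at 0), and the function name are all assumptions made for this illustration.

```python
import math


def gamma_integral(z, upper=50.0, steps=100_000):
    """Midpoint-rule approximation of the gamma integral for real z >= 1."""
    if z < 1:
        raise ValueError("this sketch handles real z >= 1 only")
    h = upper / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h  # midpoint of the i-th subinterval
        total += t ** (z - 1) * math.exp(-t)
    return total * h
```

As a usage example, `gamma_integral(5.0)` should be close to `math.factorial(4)`, and for non-integer arguments the result can be compared with the standard library's `math.gamma`.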