
Frobenius Covariant

In matrix theory, the Frobenius covariants of a square matrix ''A'' are special polynomials of it, namely projection matrices A_i associated with the eigenvalues and eigenvectors of ''A'' (Roger A. Horn and Charles R. Johnson (1991), ''Topics in Matrix Analysis'', Cambridge University Press). They are named after the mathematician Ferdinand Frobenius. Each covariant A_i is a projection onto the eigenspace associated with the eigenvalue \lambda_i. Frobenius covariants are the coefficients of Sylvester's formula, which expresses a function of a matrix f(A) as a matrix polynomial, namely a linear combination of that function's values on the eigenvalues of ''A''.

Formal definition. Let ''A'' be a diagonalizable matrix with eigenvalues \lambda_1, ..., \lambda_k. The Frobenius covariant A_i, for i = 1, ..., k, is the matrix

: A_i \equiv \prod_{j=1 \atop j \ne i}^k \frac{1}{\lambda_i - \lambda_j} (A - \lambda_j I)~.

It is essentially the Lagrange polynomial ...
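The product formula for the covariants can be tried out on a small case. The following is a minimal sketch, assuming a 2×2 example matrix with eigenvalues 5 and −2 chosen here for illustration; the helper names `mat_mul` and `frobenius_covariants` are hypothetical and exact rational arithmetic is used to avoid rounding.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def frobenius_covariants(A, eigenvalues):
    """Return [A_1, ..., A_k] for a diagonalizable A with distinct eigenvalues.

    Each A_i is the product over j != i of (A - lam_j I) / (lam_i - lam_j).
    """
    n = len(A)
    covariants = []
    for i, lam_i in enumerate(eigenvalues):
        # Start from the identity and multiply in one factor per j != i.
        P = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
        for j, lam_j in enumerate(eigenvalues):
            if j == i:
                continue
            factor = [[Fraction(A[r][c] - (lam_j if r == c else 0)) / (lam_i - lam_j)
                       for c in range(n)] for r in range(n)]
            P = mat_mul(P, factor)
        covariants.append(P)
    return covariants


# Illustrative matrix (an assumption of this sketch): eigenvalues 5 and -2.
A = [[1, 3], [4, 2]]
A1, A2 = frobenius_covariants(A, [Fraction(5), Fraction(-2)])
```

For this example, `A1` and `A2` are each idempotent, multiply to the zero matrix, and sum to the identity, which is what one expects of projections onto complementary eigenspaces.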

Matrix (mathematics)

In mathematics, a matrix (plural: matrices) is a rectangular array or table of numbers, symbols, or expressions, with elements or entries arranged in rows and columns, which is used to represent a mathematical object or property of such an object. For example, \begin{bmatrix}1 & 9 & -13 \\ 20 & 5 & -6 \end{bmatrix} is a matrix with two rows and three columns. This is often referred to as a "two-by-three matrix", a "2 × 3 matrix", or a matrix of dimension 2 × 3. Matrices are commonly used in linear algebra, where they represent linear maps. In geometry, matrices are widely used for specifying and representing geometric transformations (for example rotations) and coordinate changes. In numerical analysis, many computational problems are solved by reducing them to a matrix computation, and this often involves computing with matrices of huge dimensions. Matrices are used in most areas of mathematics and scientific fields, either directly ...

Square Matrix

In mathematics, a square matrix is a matrix with the same number of rows and columns. An ''n''-by-''n'' matrix is known as a square matrix of order ''n''. Any two square matrices of the same order can be added and multiplied. Square matrices are often used to represent simple linear transformations, such as shearing or rotation. For example, if R is a square matrix representing a rotation (rotation matrix) and \mathbf{v} is a column vector describing the position of a point in space, the product R\mathbf{v} yields another column vector describing the position of that point after that rotation. If \mathbf{v} is a row vector, the same transformation can be obtained using \mathbf{v}R^{\mathsf{T}}, where R^{\mathsf{T}} is the transpose of R.

Main diagonal. The entries a_{ii} (i = 1, ..., n) form the main diagonal of a square matrix. They lie on the imaginary line which runs from the top left corner to the bottom right corner of the matrix. For instance, the main diagonal of ...

Projection (Linear Algebra)

In linear algebra and functional analysis, a projection is a linear transformation P from a vector space to itself (an endomorphism) such that P\circ P=P. That is, whenever P is applied twice to any vector, it gives the same result as if it were applied once (i.e. P is idempotent). It leaves its image unchanged. This definition of "projection" formalizes and generalizes the idea of graphical projection. One can also consider the effect of a projection on a geometrical object by examining the effect of the projection on points in the object.

Definitions. A projection on a vector space V is a linear operator P\colon V \to V such that P^2 = P. When V has an inner product and is complete, i.e. when V is a Hilbert space, the concept of orthogonality can be used. A projection P on a Hilbert space V is called an orthogonal projection if it satisfies \langle P \mathbf x, \mathbf y \rangle = \langle \mathbf x, P \mathbf y \rangle for all \mathbf x, \mathbf y \in V. A projecti ...
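The idempotence condition P^2 = P can be checked directly on small matrices. The sketch below is illustrative only: the two example matrices and the helper names are assumptions, and it relies on the fact that, for real matrices with the standard inner product, the orthogonality condition is equivalent to P being symmetric.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_projection(P):
    """True if P is idempotent, i.e. P @ P == P."""
    return mat_mul(P, P) == P


def is_symmetric(P):
    """True if P equals its transpose (self-adjoint for the standard real inner product)."""
    n = len(P)
    return all(P[i][j] == P[j][i] for i in range(n) for j in range(n))


half = Fraction(1, 2)
# Orthogonal projection of the plane onto the line spanned by (1, 1).
orthogonal_P = [[half, half], [half, half]]
# Oblique projection onto the x-axis along the direction (1, -1).
oblique_P = [[1, 1], [0, 0]]

print(is_projection(orthogonal_P), is_symmetric(orthogonal_P))
print(is_projection(oblique_P), is_symmetric(oblique_P))
```

Both matrices are idempotent, but only the first is symmetric, so only the first is an orthogonal projection in this setting.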

Eigenvalue, Eigenvector And Eigenspace

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged (or reversed) by a given linear transformation. More precisely, an eigenvector \mathbf v of a linear transformation T is scaled by a constant factor \lambda when the linear transformation is applied to it: T\mathbf v=\lambda \mathbf v. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor \lambda (possibly a negative or complex number). Geometrically, vectors are multi-dimensional quantities with magnitude and direction, often pictured as arrows. A linear transformation rotates, stretches, or shears the vectors upon which it acts. A linear transformation's eigenvectors are those vectors that are only stretched or shrunk, with neither rotation nor shear. The corresponding eigenvalue is the factor by which an eigenvector is stretched or shrunk. If the eigenvalue is negative, the eigenvector's direction is reversed. The e ...
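The defining relation T\mathbf v = \lambda \mathbf v is easy to test numerically. Here is a small sketch, assuming an illustrative 2×2 integer matrix and hypothetical helper names `mat_vec` and `is_eigenpair`:

```python
def mat_vec(A, v):
    """Apply the matrix A (list of rows) to the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def is_eigenpair(A, v, lam):
    """True if v is a nonzero vector with A v == lam * v."""
    return any(x != 0 for x in v) and mat_vec(A, v) == [lam * x for x in v]


# Illustrative matrix (an assumption of this sketch).
A = [[1, 3], [4, 2]]

print(is_eigenpair(A, [3, 4], 5))    # (3, 4) is stretched by a factor of 5
print(is_eigenpair(A, [1, -1], -2))  # (1, -1) is reversed and doubled
print(is_eigenpair(A, [1, 0], 5))    # (1, 0) changes direction, so not an eigenvector
```

The negative eigenvalue in the second pair shows the direction reversal mentioned above.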

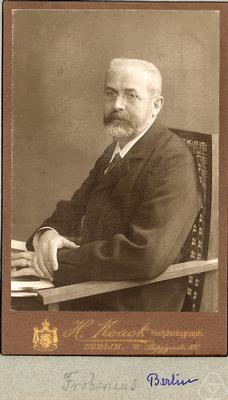

Ferdinand Georg Frobenius

Ferdinand Georg Frobenius (26 October 1849 – 3 August 1917) was a German mathematician, best known for his contributions to the theory of elliptic functions, differential equations, number theory, and group theory. He is known for the determinantal identities, known as Frobenius–Stickelberger formulae, governing elliptic functions, and for developing the theory of biquadratic forms. He was also the first to introduce the notion of rational approximations of functions (nowadays known as Padé approximants), and gave the first full proof of the Cayley–Hamilton theorem. He also lent his name to certain differential-geometric objects in modern mathematical physics, known as Frobenius manifolds.

Biography. Ferdinand Georg Frobenius was born on 26 October 1849 in Charlottenburg, a suburb of Berlin, to parents Christian Ferdinand Frobenius, a Protestant parson, and Christine Elizabeth Friedrich. He entered the Joachimsthal Gymnasium in 1860 when he was nearly el ...

Sylvester's Formula

In matrix theory, Sylvester's formula or Sylvester's matrix theorem (named after J. J. Sylvester) or Lagrange–Sylvester interpolation expresses an analytic function f(A) of a matrix ''A'' as a polynomial in ''A'', in terms of the eigenvalues and eigenvectors of ''A'' (Roger A. Horn and Charles R. Johnson (1991), ''Topics in Matrix Analysis'', Cambridge University Press; Jon F. Claerbout (1976), ''Sylvester's matrix theorem'', a section of ''Fundamentals of Geophysical Data Processing'', online version at sepwww.stanford.edu, accessed on 2010-03-14). It states that

: f(A) = \sum_{i=1}^k f(\lambda_i) ~A_i ~,

where the \lambda_i are the eigenvalues of ''A'', and the matrices

: A_i \equiv \prod_{j=1 \atop j \ne i}^k \frac{1}{\lambda_i - \lambda_j} \left(A - \lambda_j I\right)

are the corresponding Frobenius covariants of ''A'', which are (projection) matrix Lagrange polynomials of ''A''.

Conditions. Sylvester's formula applies for any diagonalizable matrix ''A'' with ''k'' distinct eigenvalues, \lambda_1, ..., \lambda_k, and any function ''f'' defined on some subset of the complex nu ...
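As a rough illustration of the formula f(A) = \sum_i f(\lambda_i) A_i, the sketch below evaluates two functions of a small matrix and compares them with direct computation. The example matrix, its eigenvalues 5 and −2, and the helper names (`mat_mul`, `covariant`, `sylvester`) are assumptions made for this sketch, not part of the cited sources.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def covariant(A, eigenvalues, i):
    """Frobenius covariant A_i: product over j != i of (A - lam_j I)/(lam_i - lam_j)."""
    n = len(A)
    P = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
    for j, lam_j in enumerate(eigenvalues):
        if j != i:
            scale = eigenvalues[i] - lam_j
            factor = [[Fraction(A[r][c] - (lam_j if r == c else 0)) / scale
                       for c in range(n)] for r in range(n)]
            P = mat_mul(P, factor)
    return P


def sylvester(f, A, eigenvalues):
    """Evaluate f(A) as the sum of f(lam_i) * A_i over the distinct eigenvalues."""
    n = len(A)
    result = [[Fraction(0)] * n for _ in range(n)]
    for i, lam in enumerate(eigenvalues):
        A_i = covariant(A, eigenvalues, i)
        for r in range(n):
            for c in range(n):
                result[r][c] += f(lam) * A_i[r][c]
    return result


A = [[1, 3], [4, 2]]                      # illustrative matrix
eigs = [Fraction(5), Fraction(-2)]        # its two distinct eigenvalues

A_squared = sylvester(lambda x: x * x, A, eigs)
A_inverse = sylvester(lambda x: 1 / x, A, eigs)
```

Here `A_squared` agrees with `mat_mul(A, A)`, and `mat_mul(A, A_inverse)` gives the identity, so f(x) = x² and f(x) = 1/x behave as expected on this example.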

Matrix Function

In mathematics, every analytic function can be used for defining a matrix function that maps square matrices with complex entries to square matrices of the same size. This is used for defining the exponential of a matrix, which is involved in the closed-form solution of systems of linear differential equations.

Extending scalar functions to matrix functions. There are several techniques for lifting a real function to a square matrix function such that interesting properties are maintained. All of the following techniques yield the same matrix function, but the domains on which the function is defined may differ.

Power series. If the analytic function f has the Taylor expansion f(x) = c_0 + c_1 x + c_2 x^2 + \cdots then a matrix function A\mapsto f(A) can be defined by substituting x by a square matrix: powers become matrix powers, additions become matrix sums and multiplications by coefficients become scalar multiplications. If the series converges for |x| < r, then t ...
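The power-series substitution can be sketched for the exponential, whose Taylor coefficients are c_k = 1/k!. The code below is a minimal illustration under stated assumptions: the series is simply truncated after a fixed number of terms (the parameter `terms` is a choice of this sketch), and nilpotent example matrices are used because their series terminate, so the truncated sum is exact.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_exp_series(A, terms=10):
    """Truncated Taylor series I + A + A^2/2! + ... with `terms` terms in total."""
    n = len(A)
    term = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]  # A^0 / 0!
    result = [row[:] for row in term]
    for k in range(1, terms):
        # Turn A^(k-1)/(k-1)! into A^k/k! with one multiplication and one division.
        term = [[x / k for x in row] for row in mat_mul(term, A)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result


# Nilpotent examples: N2 squared is zero, N3 cubed is zero.
N2 = [[0, 1], [0, 0]]
N3 = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]

exp_N2 = mat_exp_series(N2)
exp_N3 = mat_exp_series(N3)
```

For a general matrix the truncated sum is only an approximation, and production code would normally use a dedicated routine rather than a raw series.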

Diagonalizable Matrix

In linear algebra, a square matrix A is called diagonalizable or non-defective if it is similar to a diagonal matrix. That is, if there exists an invertible matrix P and a diagonal matrix D such that P^{-1}AP = D. This is equivalent to A = PDP^{-1}. (Such P and D are not unique.) This property exists for any linear map: for a finite-dimensional vector space V, a linear map T:V\to V is called diagonalizable if there exists an ordered basis of V consisting of eigenvectors of T. These definitions are equivalent: if T has a matrix representation A = PDP^{-1} as above, then the column vectors of P form a basis consisting of eigenvectors of T, and the diagonal entries of D are the corresponding eigenvalues of T; with respect to this eigenvector basis, T is represented by D. Diagonalization is the process of finding the above P and D and makes many subsequent computations easier. One can raise a diag ...
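One computation that a factorization A = PDP^{-1} simplifies is taking powers, since A^3 = P D^3 P^{-1} and powers of a diagonal matrix are taken entrywise. The sketch below illustrates this on an assumed 2×2 example; `inverse_2x2` is a hypothetical helper that only handles the 2×2 case.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def inverse_2x2(M):
    """Exact inverse of an invertible 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


# Columns of P are eigenvectors; D holds the matching eigenvalues (illustrative values).
P = [[3, 1], [4, -1]]
D = [[5, 0], [0, -2]]
P_inv = inverse_2x2(P)

A = mat_mul(mat_mul(P, D), P_inv)            # reconstructs the matrix being diagonalized

D_cubed = [[5 ** 3, 0], [0, (-2) ** 3]]      # cube the diagonal entries only
A_cubed = mat_mul(mat_mul(P, D_cubed), P_inv)
```

In this example `A` comes out as `[[1, 3], [4, 2]]`, and `A_cubed` matches the result of multiplying `A` by itself three times.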

Lagrange Polynomial

In numerical analysis, the Lagrange interpolating polynomial is the unique polynomial of lowest degree that interpolates a given set of data. Given a data set of coordinate pairs (x_j, y_j) with 0 \leq j \leq k, the x_j are called ''nodes'' and the y_j are called ''values''. The Lagrange polynomial L(x) has degree \leq k and assumes each value at the corresponding node, L(x_j) = y_j. Although named after Joseph-Louis Lagrange, who published it in 1795, the method was first discovered in 1779 by Edward Waring. It is also an easy consequence of a formula published in 1783 by Leonhard Euler. Uses of Lagrange polynomials include the Newton–Cotes method of numerical integration, Shamir's secret sharing scheme in cryptography, and Reed–Solomon error correction in coding theory. For equispaced nodes, Lagrange interpolation is susceptible to Runge's phenomenon of large oscillation.

Definition. Given a set of k + 1 nodes \{x_0, x_1, \ldots, x_k\}, which must all be distinct, x_j \neq x_m for ind ...
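The interpolation property L(x_j) = y_j can be illustrated with a direct evaluation of the Lagrange form. This is a minimal sketch, assuming distinct nodes and using a hypothetical function name `lagrange_interpolate`; the sample data are taken from the polynomial x² + x + 1 purely for illustration.

```python
from fractions import Fraction


def lagrange_interpolate(nodes, values, x):
    """Evaluate the Lagrange interpolating polynomial through (nodes[j], values[j]) at x."""
    total = Fraction(0)
    for j, (x_j, y_j) in enumerate(zip(nodes, values)):
        # Basis polynomial: equals 1 at x_j and 0 at every other node.
        basis = Fraction(1)
        for m, x_m in enumerate(nodes):
            if m != j:
                basis *= Fraction(x - x_m) / (x_j - x_m)
        total += y_j * basis
    return total


nodes = [0, 1, 2]
values = [1, 3, 7]          # samples of x^2 + x + 1 at the nodes

print(lagrange_interpolate(nodes, values, 1))   # reproduces the value at a node
print(lagrange_interpolate(nodes, values, 3))   # evaluates the same quadratic off the nodes
```

Because three points determine a unique polynomial of degree at most two, the interpolant here coincides with x² + x + 1 everywhere, not only at the nodes.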

Trace (Linear Algebra)

In linear algebra, the trace of a square matrix \mathbf{A}, denoted \operatorname{tr}(\mathbf{A}), is the sum of the elements on its main diagonal, a_{11} + a_{22} + \dots + a_{nn}. It is only defined for a square matrix (n × n). The trace of a matrix is the sum of its eigenvalues (counted with multiplicities). Also, \operatorname{tr}(\mathbf{AB}) = \operatorname{tr}(\mathbf{BA}) for any matrices \mathbf{A} and \mathbf{B} of the same size. Thus, similar matrices have the same trace. As a consequence, one can define the trace of a linear operator mapping a finite-dimensional vector space into itself, since all matrices describing such an operator with respect to a basis are similar. The trace is related to the derivative of the determinant (see Jacobi's formula).

Definition. The trace of an n × n square matrix \mathbf{A} is defined as \operatorname{tr}(\mathbf{A}) = \sum_{i=1}^n a_{ii} = a_{11} + a_{22} + \dots + a_{nn} where a_{ii} denotes the entry on the i-th row and i-th column of \mathbf{A}. The entries of \mathbf{A} can be real numbers, complex numbers, or more generally elements of a field F. The trace is not defined for non-square matrices.

Example. Let \mathbf{A} be a matrix, with \m ...
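The diagonal-sum definition and the identity tr(AB) = tr(BA) can be checked on small examples. The following sketch uses illustrative 2×2 matrices and hypothetical helper names; it is not meant as a general-purpose implementation.

```python
def mat_mul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def trace(M):
    """Sum of the main-diagonal entries; rejects non-square input."""
    if any(len(row) != len(M) for row in M):
        raise ValueError("trace is only defined for square matrices")
    return sum(M[i][i] for i in range(len(M)))


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]

print(trace(A))                                   # 1 + 4
print(trace(mat_mul(A, B)), trace(mat_mul(B, A)))  # equal, although AB != BA

# A matrix with eigenvalues 5 and -2 (illustrative): its trace is their sum.
C = [[1, 3], [4, 2]]
print(trace(C) == 5 + (-2))
```

Note that `mat_mul(A, B)` and `mat_mul(B, A)` are different matrices here; only their traces coincide.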

Eigendecomposition

In linear algebra, eigendecomposition is the factorization of a matrix into a canonical form, whereby the matrix is represented in terms of its eigenvalues and eigenvectors. Only diagonalizable matrices can be factorized in this way. When the matrix being factorized is a normal or real symmetric matrix, the decomposition is called "spectral decomposition", derived from the spectral theorem.

Fundamental theory of matrix eigenvectors and eigenvalues. A (nonzero) vector \mathbf{v} of dimension N is an eigenvector of a square N × N matrix \mathbf{A} if it satisfies a linear equation of the form \mathbf{A} \mathbf{v} = \lambda \mathbf{v} for some scalar \lambda. Then \lambda is called the eigenvalue corresponding to \mathbf{v}. Geometrically speaking, the eigenvectors of \mathbf{A} are the vectors that \mathbf{A} merely elongates or shrinks, and the amount that they elongate/shrink by is the eigenvalue. The above equation is called the eigenvalue equation or the eigenvalue problem. This yields an equation for the eigenvalues p\left(\lambda\right) = \det\lef ...